

Short answer and essay questions

Short answer and essay questions are types of assessment that are commonly used to evaluate a student’s understanding and knowledge.

Tips for creating short answer and essay questions

e.g., “What is __?” or “How could __ be put into practice?”

- Consider the course learning outcomes. Design questions that appropriately assess the relevant learning objectives.

- Make sure the content measures knowledge appropriate to the desired learner level and learning goal.

- When students think critically, they must go beyond recalling factual information: they incorporate evidence and examples to corroborate and/or dispute the validity of assertions or suppositions, and they compare and contrast multiple perspectives on the same argument.

e.g., Paragraphs? Sentences? Is bullet-point format acceptable, or must responses be in essay format?

- Specify how many marks each question is worth.

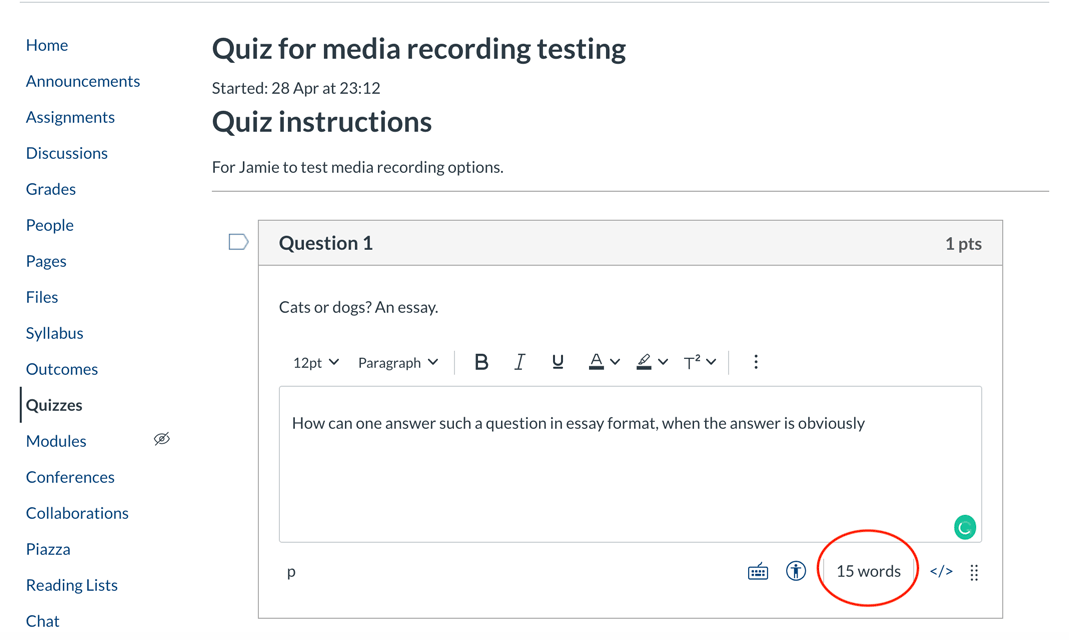

- Word limits should be applied within Canvas for discursive or essay-type responses.

- Check that your language and instructions are appropriate to the student population and discipline of study. Not all students have English as their first language.

- Ensure the instructions to students are clear, including which questions are optional and which are compulsory, and what the various components of the assessment are.

Questions that promote deeper thinking

Use “open-ended” questions to provoke divergent thinking.

These questions will allow for a variety of possible answers and encourage students to think at a deeper level. Some generic question stems that trigger or stimulate different forms of critical thinking include:

- “What are the implications of …?”

- “Why is it important …?”

- “What is another way to look at …?”

Use questions that deliberately target the types of higher-order thinking you want to promote or assess

Rather than promoting recall of facts, use questions that allow students to demonstrate their comprehension, application and analysis of the concepts.

Generic question stems that can be used to trigger and assess higher order thinking

Comprehension

Convert information into a form that makes sense to the individual.

- How would you put __ into your own words?

- What would be an example of __?

Application

Apply abstract or theoretical principles to concrete, practical situations.

- How can you make use of __?

- How could __ be put into practice?

Analysis

Break down or dissect information.

- What are the most important/significant ideas or elements of __?

- What assumptions/biases underlie or are hidden within __?

Synthesis

Build up or connect separate pieces of information to form a larger, more coherent pattern.

- How can these different ideas be grouped together into a more general category?

Evaluation

Critically judge the validity or aesthetic value of ideas, data, or products.

- How would you judge the accuracy or validity of __?

- How would you evaluate the ethical (moral) implications or consequences of __?

Deductive reasoning

Draw conclusions about particular instances that are logically consistent with a general principle or rule.

- What specific conclusions can be drawn from this general __?

- What particular actions would be consistent with this general __?

Balanced thinking

Carefully consider arguments/evidence for and against a particular position.

- What evidence supports and contradicts __?

- What are arguments for and counterarguments against __?

Causal reasoning

Identify cause-effect relationships between different ideas or actions.

- How would you explain why __ occurred?

- How would __ affect or influence __?

Creative thinking

Generate imaginative ideas or novel approaches to traditional practices.

- What might be a metaphor or analogy for __?

- What might happen if __? (hypothetical reasoning)

Redesign test questions for open-book format

It is important to redesign assessment tasks so that they authentically assess the intended learning outcomes in a way that suits this mode of assessment. Replacing questions that simply require recall of facts with questions that require higher-level cognitive skills (for example, analysis and explanation of why and how students reached an answer) also creates opportunities for reflective questions based on students’ own experiences.

Tasks that previously called for quick, focused problem-solving and analysis, conducted with restricted access to a limited set of allocated resources, will need to assess a student’s ability to take a more thoughtful, research-based approach and/or to negotiate an understanding of more complex problems, sometimes in an open-book format.

Adding layers to the problem or process, and including a reflective component, can help achieve these goals, whether the assessment is administered as an oral test or a written examination.

Alternative format, focusing on explanation

Example 2: Analytic style multiple choice question or short answer

Acknowledgement: Deakin University and original multiple choice questions: Jennifer Lindley, Monash University.

Setting word limits for discursive or essay-type responses

Try to set a fair and reasonable word count for long-answer and essay questions. Some points to consider are:

Communicate your expectations around word count to students in your assessment instructions, including how you will deal with submissions that fall outside the word count. For example: “Write 600–800 words evaluating the key concepts of XYZ. Excess text over the word limit will not be marked.” Let students know how to check the word count in their submission:

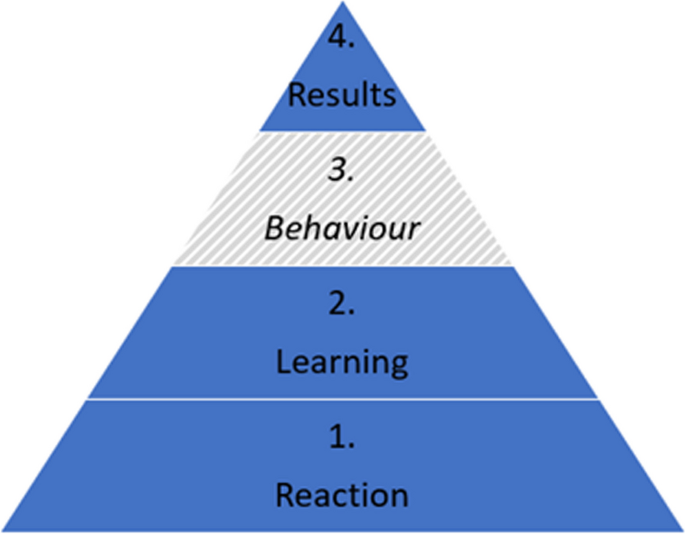

Multi-choice questions

Write MCQs that assess reasoning, rather than recall.

Page updated 16/03/2023 (added open-book section)

Center for Teaching

Student assessment in teaching and learning

Much scholarship has focused on the importance of student assessment in teaching and learning in higher education. Student assessment is a critical aspect of the teaching and learning process. Whether teaching at the undergraduate or graduate level, it is important for instructors to strategically evaluate the effectiveness of their teaching by measuring the extent to which students in the classroom are learning the course material.

This teaching guide: 1) defines student assessment and explains why it is important, 2) identifies the forms and purposes of student assessment in the teaching and learning process, 3) discusses methods of student assessment, and 4) makes an important distinction between assessment and grading.

What is Student Assessment and Why is it Important?

In their handbook for course-based review and assessment, Martha L. A. Stassen et al. define assessment as “the systematic collection and analysis of information to improve student learning.” (Stassen et al., 2001, pg. 5) This definition captures the essential task of student assessment in the teaching and learning process. Student assessment enables instructors to measure the effectiveness of their teaching by linking student performance to specific learning objectives. As a result, teachers are able to institutionalize effective teaching choices and revise ineffective ones in their pedagogy.
The measurement of student learning through assessment is important because it provides useful feedback to both instructors and students about the extent to which students are successfully meeting course learning objectives. In their book Understanding by Design, Grant Wiggins and Jay McTighe offer a framework for classroom instruction that emphasizes the critical role of assessment; they call it “Backward Design.” For Wiggins and McTighe, assessment enables instructors to determine the metrics of measurement for student understanding of and proficiency in course learning objectives. They argue that assessment provides the evidence needed to document and validate that meaningful learning has occurred in the classroom. Assessment is so vital in their pedagogical design that their approach “encourages teachers and curriculum planners to first ‘think like an assessor’ before designing specific units and lessons, and thus to consider up front how they will determine if students have attained the desired understandings.” (Wiggins and McTighe, 2005, pg. 18) For more on Wiggins and McTighe’s “Backward Design” model, see our Understanding by Design teaching guide.

Student assessment also buttresses critical reflective teaching. Stephen Brookfield, in Becoming a Critically Reflective Teacher, contends that critical reflection on one’s teaching is an essential part of developing as an educator and enhancing the learning experience of students. Critical reflection on one’s teaching has a multitude of benefits for instructors, including the development of a rationale for teaching practices. According to Brookfield, “A critically reflective teacher is much better placed to communicate to colleagues and students (as well as to herself) the rationale behind her practice. She works from a position of informed commitment.” (Brookfield, 1995, pg. 17) Student assessment, then, not only enables teachers to measure the effectiveness of their teaching but is also useful in developing the rationale for pedagogical choices in the classroom.

Forms and Purposes of Student Assessment

There are generally two forms of student assessment that are most frequently discussed in the scholarship of teaching and learning. The first, summative assessment, is assessment that is implemented at the end of the course of study. Its primary purpose is to produce a measure that “sums up” student learning. Summative assessment is comprehensive in nature and is fundamentally concerned with learning outcomes. While summative assessment is often useful for providing information about patterns of student achievement, it does so without giving students the opportunity to reflect on and demonstrate growth in identified areas for improvement, and it does not provide an avenue for the instructor to modify teaching strategy during the teaching and learning process. (Maki, 2002) Examples of summative assessment include comprehensive final exams or papers.

The second form, formative assessment, involves the evaluation of student learning over the course of time. Its fundamental purpose is to estimate students’ level of achievement in order to enhance student learning during the learning process. By interpreting students’ performance through formative assessment and sharing the results with them, instructors help students to “understand their strengths and weaknesses and to reflect on how they need to improve over the course of their remaining studies.” (Maki, 2002, pg. 11) Pat Hutchings refers to this form of assessment as assessment behind outcomes. She states, “the promise of assessment—mandated or otherwise—is improved student learning, and improvement requires attention not only to final results but also to how results occur.
Assessment behind outcomes means looking more carefully at the process and conditions that lead to the learning we care about…” (Hutchings, 1992, pg. 6, original emphasis). Formative assessment includes course work (where students receive feedback that identifies strengths, weaknesses, and other things to keep in mind for future assignments), discussions between instructors and students, and end-of-unit examinations that give students an opportunity to identify important areas for their own growth and development. (Brown and Knight, 1994)

It is important to recognize that both summative and formative assessment indicate the purpose of assessment, not the method. Different methods of assessment (discussed in the next section) can be either summative or formative in orientation, depending on how the instructor implements them. Sally Brown and Peter Knight, in their book Assessing Learners in Higher Education, caution against conflating the purpose of assessment with its method. “Often the mistake is made of assuming that it is the method which is summative or formative, and not the purpose. This, we suggest, is a serious mistake because it turns the assessor’s attention away from the crucial issue of feedback.” (Brown and Knight, 1994, pg. 17) If an instructor believes that a particular method is formative, he or she may fall into the trap of using the method without taking the requisite time to review the implications of the feedback with students. In such cases, the method in question effectively functions as a form of summative assessment despite the instructor’s intentions. (Brown and Knight, 1994) Indeed, feedback and discussion are the critical factors that distinguish formative from summative assessment.

Methods in Student Assessment

Below are a few common methods of assessment identified by Brown and Knight that can be implemented in the classroom.
[1] It should be noted that these methods work best when learning objectives have been identified, shared, and clearly articulated to students.

Self-Assessment

The goal of implementing self-assessment in a course is to enable students to develop their own judgement. In self-assessment, students are expected to assess both the process and the product of their learning. While the assessment of the product is often the task of the instructor, implementing self-assessment in the classroom encourages students to evaluate their own work as well as the process that led them to the final outcome. Moreover, self-assessment facilitates a sense of ownership of one’s learning and can lead to greater investment by the student. It enables students to develop skills that transfer to other areas of learning, such as group projects and teamwork, critical thinking and problem-solving, and leadership roles in the teaching and learning process.

Things to Keep in Mind about Self-Assessment

Peer Assessment

Peer assessment is a type of collaborative learning technique in which students evaluate the work of their peers and have their own work evaluated by peers. This dimension of assessment is strongly grounded in theoretical approaches to active learning and adult learning. Like self-assessment, peer assessment gives learners ownership of learning and focuses on the process of learning, as students are able to “share with one another the experiences that they have undertaken.” (Brown and Knight, 1994, pg. 52)

Things to Keep in Mind about Peer Assessment

Essays

According to Euan S. Henderson, essays make two important contributions to learning and assessment: the development of skills and the cultivation of a learning style. (Henderson, 1980) Essays are a common form of writing assignment in courses and can be either a summative or a formative form of assessment, depending on how the instructor uses them in the classroom.

Things to Keep in Mind about Essays

Exams and time-constrained, individual assessment

Examinations have traditionally been viewed as a gold standard of assessment in education, particularly in university settings. Like essays, they can be summative or formative forms of assessment.

Things to Keep in Mind about Exams

As Brown and Knight assert, using multiple methods of assessment, including more than one assessor, improves the reliability of data. However, a primary challenge of the multiple-methods approach is how to weigh the scores produced by different methods of assessment. When particular methods produce a higher range of marks than others, instructors can misinterpret their assessment of overall student performance. When multiple methods produce different messages about the same student, instructors should be mindful that the methods are likely assessing different forms of achievement. (Brown and Knight, 1994)

For additional methods of assessment not listed here, see “Assessment on the Page” and “Assessment Off the Page” in Assessing Learners in Higher Education. In addition to the various methods of assessment listed above, classroom assessment techniques also provide a useful way to evaluate student understanding of course material in the teaching and learning process. For more on these, see our Classroom Assessment Techniques teaching guide.

Assessment is More than Grading

Instructors often conflate assessment with grading. This is a mistake: student assessment is more than just grading. Remember that assessment links student performance to specific learning objectives in order to provide useful information to instructors and students about student achievement. Traditional grading, on the other hand, according to Stassen et al., does not provide the level of detailed and specific information essential to link student performance with improvement. “Because grades don’t tell you about student performance on individual (or specific) learning goals or outcomes, they provide little information on the overall success of your course in helping students to attain the specific and distinct learning objectives of interest.” (Stassen et al., 2001, pg. 6) Instructors, therefore, must always remember that grading is an aspect of student assessment but does not constitute its totality.

Teaching Guides Related to Student Assessment

Below is a list of other CFT teaching guides that supplement this one. They include:

References and Additional Resources

This teaching guide draws upon a number of resources listed below. These sources should prove useful for instructors seeking to enhance their pedagogy and effectiveness as teachers.

- Angelo, Thomas A., and K. Patricia Cross. Classroom Assessment Techniques: A Handbook for College Teachers. 2nd edition. San Francisco: Jossey-Bass, 1993. Print.
- Brookfield, Stephen D. Becoming a Critically Reflective Teacher. San Francisco: Jossey-Bass, 1995. Print.
- Brown, Sally, and Peter Knight. Assessing Learners in Higher Education. 1st edition. London; Philadelphia: Routledge, 1998. Print.
- Cameron, Jeanne, et al. “Assessment as Critical Praxis: A Community College Experience.” Teaching Sociology 30.4 (2002): 414–429. JSTOR. Web.
- Gibbs, Graham, and Claire Simpson. “Conditions under which Assessment Supports Student Learning.” Learning and Teaching in Higher Education 1 (2004): 3–31.
- Henderson, Euan S. “The Essay in Continuous Assessment.” Studies in Higher Education 5.2 (1980): 197–203. Taylor and Francis. Web.
- Maki, Peggy L. “Developing an Assessment Plan to Learn about Student Learning.” The Journal of Academic Librarianship 28.1 (2002): 8–13. ScienceDirect. Web.
- Sharkey, Stephen, and William S. Johnson. Assessing Undergraduate Learning in Sociology. ASA Teaching Resource Center, 1992. Print.
- Wiggins, Grant, and Jay McTighe. Understanding by Design. 2nd expanded edition. Alexandria, VA: Assn. for Supervision & Curriculum Development, 2005. Print.

[1] Brown and Knight discuss the first two in their chapter entitled “Dimensions of Assessment.” However, because this chapter begins the second part of the book, which outlines assessment methods, I have collapsed the two under the category of methods for the purposes of continuity.

Assessing Student Learning: 6 Types of Assessment and How to Use Them

Assessing student learning is a critical component of effective teaching and plays a significant role in fostering academic success. We will explore six different types of assessment and evaluation strategies that can help K-12 educators, school administrators, and educational organizations enhance both student learning experiences and teacher well-being. We will provide practical guidance on how to implement and use various assessment methods, such as formative and summative assessments, diagnostic assessments, performance-based assessments, self-assessments, and peer assessments. We will also discuss the importance of implementing standards-based assessments and offer tips for choosing the right assessment strategy for your specific needs.

Importance of Assessing Student Learning

Assessment plays a crucial role in education, as it allows educators to measure students’ understanding, track their progress, and identify areas where intervention may be necessary. Assessing student learning not only helps educators make informed decisions about instruction but also contributes to student success and teacher well-being. Assessments provide insight into student knowledge, skills, and progress while also highlighting necessary adjustments in instruction. Effective assessment practices ultimately contribute to better educational outcomes and promote a culture of continuous improvement within schools and classrooms.

1. Formative assessment

Formative assessment is a type of assessment that focuses on monitoring student learning during the instructional process.
Its primary purpose is to provide ongoing feedback to both teachers and students, helping them identify areas of strength and areas in need of improvement. This type of assessment is typically low-stakes and does not contribute to a student’s final grade. Some common examples of formative assessments include quizzes, class discussions, exit tickets, and think-pair-share activities. This type of assessment allows educators to track student understanding throughout the instructional period and to identify gaps in learning and opportunities for intervention.

To use formative assessments effectively in the classroom, teachers should implement them regularly and provide timely feedback to students. This feedback should be specific and actionable, helping students understand what they need to do to improve their performance. Teachers should use the information gathered from formative assessments to refine their instructional strategies and address any misconceptions or gaps in understanding. Formative assessments play a crucial role in supporting student learning and helping educators make informed decisions about their instructional practices.

2. Summative assessment

Examples of summative assessments include final exams, end-of-unit tests, standardized tests, and research papers. To use summative assessments effectively in the classroom, it’s important to ensure that they are aligned with the learning objectives and the content covered during instruction. This will help provide an accurate representation of a student’s understanding and mastery of the material. Providing students with clear expectations and guidelines for the assessment can help reduce anxiety and promote optimal performance.
Summative assessments should be used in conjunction with other assessment types, such as formative assessments, to provide a comprehensive evaluation of student learning and growth.

3. Diagnostic assessment

Diagnostic assessment, often used at the beginning of a new unit or term, helps educators identify students’ prior knowledge, skills, and understanding of a particular topic. This type of assessment enables teachers to tailor their instruction to meet the specific needs and learning gaps of their students. Examples of diagnostic assessments include pre-tests, entry tickets, and concept maps. To use diagnostic assessments effectively in the classroom, teachers should analyze the results to identify patterns and trends in student understanding. This information can be used to create differentiated instruction plans and targeted interventions for students who may struggle with the upcoming material. Sharing the results with students can help them understand their strengths and areas for improvement, fostering a growth mindset and encouraging active engagement in their learning.

4. Performance-based assessment

Performance-based assessment is a type of evaluation that requires students to demonstrate their knowledge, skills, and abilities by completing real-world tasks or activities. Its main purpose is to assess students’ ability to apply their learning in authentic, meaningful situations that closely resemble real-life challenges. Examples of performance-based assessments include projects, presentations, portfolios, and hands-on experiments. These assessments allow students to showcase their understanding and application of concepts in a more active and engaging manner than traditional paper-and-pencil tests. To use performance-based assessments effectively in the classroom, educators should clearly define the task requirements and assessment criteria, providing students with guidelines and expectations for their work.
Teachers should also offer support and feedback throughout the process, allowing students to revise and improve their performance. Incorporating opportunities for peer feedback and self-reflection can further enhance the learning process and help students develop essential skills such as collaboration, communication, and critical thinking.

5. Self-assessment

Self-assessment is a valuable tool for encouraging students to engage in reflection and take ownership of their learning. This type of assessment requires students to evaluate their own progress, skills, and understanding of the subject matter. By promoting self-awareness and critical thinking, self-assessment can contribute to the development of lifelong learning habits and foster a growth mindset. Examples of self-assessment activities include reflective journaling, goal setting, self-rating scales, and checklists. These tools provide students with opportunities to assess their strengths, weaknesses, and areas for improvement. When implementing self-assessment in the classroom, it is important to create a supportive environment where students feel comfortable and encouraged to be honest about their performance. Teachers can guide students by providing clear criteria and expectations for self-assessment, as well as offering constructive feedback to help them set realistic goals for future learning.

Incorporating self-assessment as part of a broader assessment strategy can reinforce learning objectives and empower students to take an active role in their education. Reflecting on their performance and understanding the assessment criteria can help them recognize both short-term successes and long-term goals. This ongoing process of self-evaluation can help students develop a deeper understanding of the material and cultivate valuable skills such as self-regulation, goal setting, and critical thinking.

6. Peer assessment

Peer assessment, also known as peer evaluation, is a strategy in which students evaluate and provide feedback on their classmates’ work. This type of assessment helps students gain a better understanding of their own work, as well as that of their peers. Examples of peer assessment activities include group projects, presentations, written assignments, and online discussion boards. In these settings, students can provide constructive feedback on their peers’ work, identify strengths and areas for improvement, and suggest specific strategies for enhancing performance. Constructive peer feedback can help students gain a deeper understanding of the material and develop valuable skills such as working in groups, communicating effectively, and giving constructive criticism.

To integrate peer assessment successfully in the classroom, consider incorporating a variety of activities that allow students to practice evaluating their peers’ work while also receiving feedback on their own performance. Encourage students to focus on both strengths and areas for improvement, and emphasize the importance of respectful, constructive feedback. Provide opportunities for students to reflect on the feedback they receive and incorporate it into their learning process. Monitor the peer assessment process to ensure fairness, consistency, and alignment with learning objectives.

Implementing Standards-Based Assessments

Standards-based assessments are designed to measure students’ performance relative to established learning standards, such as those generated by the Common Core State Standards Initiative or individual state education guidelines. By implementing these types of assessments, educators can check whether students meet the necessary benchmarks for their grade level and subject area, providing a clearer picture of student progress and learning outcomes.
To implement standards-based assessments successfully, it is essential to align assessment tasks with the relevant learning standards. This involves creating assessments that directly measure students’ knowledge and skills in relation to the standards rather than relying solely on traditional testing methods. As a result, educators can obtain a more accurate understanding of student performance and identify areas that may require additional support or instruction. Grading formative and summative assessments within a standards-based framework requires a shift in focus from assigning letter grades or percentages to evaluating students’ mastery of specific learning objectives. This approach encourages educators to provide targeted feedback that addresses individual student needs and promotes growth and improvement. By using rubrics or other assessment tools, teachers can offer clear, objective criteria for evaluating student work, ensuring consistency and fairness in the grading process.

Tips for Choosing the Right Assessment Strategy

When selecting an assessment strategy, it’s crucial to consider its purpose. Ask yourself what you want to accomplish with the assessment and how it will contribute to student learning. This will help you determine the most appropriate assessment type for your specific situation.

Aligning assessments with learning objectives is another critical factor. Ensure that the assessment methods you choose accurately measure whether students have met the desired learning outcomes. This alignment will provide valuable feedback to both you and your students on their progress.

Diversifying assessment methods is essential for a comprehensive evaluation of student learning. By using a variety of assessment types, you can gain a more accurate understanding of students’ strengths and weaknesses. This approach also helps support different learning styles and reduces the risk of overemphasis on a single assessment method.
Incorporating multiple forms of assessment, such as formative, summative, diagnostic, performance-based, self-assessment, and peer assessment, can provide a well-rounded understanding of student learning. By doing so, educators can make informed decisions about instruction, support, and intervention strategies to enhance student success and the overall classroom experience.

Challenges and Solutions in Assessment Implementation

Implementing various assessment strategies can present several challenges for educators. One common challenge is the limited time and resources available for creating and administering assessments. To address this issue, teachers can collaborate with colleagues to share resources, divide the workload, and discuss best practices. Using technology and online platforms can also streamline the assessment process and save time.

Another challenge is ensuring that assessments are unbiased and inclusive. To overcome this, educators should carefully review assessment materials for potential biases and design assessments that are accessible to all students, regardless of their cultural backgrounds or learning abilities. Offering flexible assessment options for the varying needs of learners can create a more equitable and inclusive learning environment.

It is also essential to continually improve assessment practices and seek professional development opportunities. Seeking support from colleagues, attending workshops and conferences related to assessment practices, or enrolling in online courses can help educators stay up to date on best practices while also providing opportunities for networking with other professionals. Ultimately, these efforts will contribute to a better understanding of the assessments used and their relevance to overall student learning.

Assessing student learning is a crucial component of effective teaching and should not be overlooked.
By understanding and implementing the various types of assessments discussed in this article, you can create a more comprehensive and effective approach to evaluating student learning in your classroom. Remember to consider the purpose of each assessment, align each one with your learning objectives, and diversify your methods for a well-rounded evaluation of student progress.

If you’re looking to further enhance your assessment practices and overall professional development, Strobel Education offers workshops, courses, keynotes, and coaching services tailored for K-12 educators. With a focus on fostering a positive school climate and enhancing student learning, Strobel Education can support your journey toward improved assessment implementation and greater teacher well-being.

Copyright 2024 Strobel Education, all rights reserved.

Best Practices for Assessing Student Learning

Varying assessment types

Best practices in assessing student learning include using several types of assessment that enable students to show evidence of their learning in various ways as they learn the content and achieve the learning outcomes.

Short and Frequent Assessments

Assessment of student learning should be frequent throughout the course. These frequent experiences should require students to perform a task, answer questions, or take some other action that gives evidence of their learning. The most important thing is to give immediate and ongoing feedback so students know what they are doing well and what improvements they need to make. Evaluate these assessments quickly so that the feedback reaches students in time to guide their continued learning. Although some of these assessments will provide data and scores to be included in student grades, it is not necessary to record scores for all assessments.

Culminating Assessments

Culminating assessments are not necessarily comprehensive exams. The BYU policy for final exams includes this statement (see https://policy.byu.edu/view/index.php?p=64): Final examinations:

A culminating assessment occurs at the end of the course and focuses on the student’s achievement of the learning outcomes. The student’s experience with this concluding assessment should be both representative of their learning and inspiring for continued growth. You can provide a culminating assessment in a variety of ways, including presentations, research papers, group/panel discussions, poster presentations, oral exams, and traditional final exams.

Using Assessments to Improve Student Learning

By aligning your assessments with the learning outcomes, you can evaluate student performance and organize feedback and plans for your students’ further learning. There are several ways you can analyze and use student-performance data to help improve your students’ learning. Two main ways are using item-analysis data in Learning Suite Exams (for exams scored online or at the Testing Center) and using scoring rubrics. Student-performance data will enable you to generate focused feedback that students can use to improve their learning and move forward in your class. The data will also help you identify gaps between what you intend students to know and do and what they actually achieve. You will then be able to identify areas where you can improve your course organization and teaching.


Essay assessments ask students to demonstrate a point of view supported by evidence. They allow students to demonstrate what they've learned and build their writing skills. An essay question prompts a written response, which may vary from a few paragraphs to a number of pages. Essay questions are generally open-ended. They differ from short answer questions in that they:

When to use an essay

Essays can be used to test students' higher order thinking.

Advantages and limitations

Guidelines for developing essay assessments

Essay question

Effective essay questions provide students with a focus (types of thinking and content) to use in their response. Make sure your essay question:

Review the question and improve it using the following questions:

Alignment to learning outcomes

To ensure the assessment item aligns with learning outcomes:

Student preparation

Make sure your students are prepared by:

Examples of essay question verbs

In the table below you will find lists of verbs that are commonly used in essay questions. These words:

Students in School: Importance of Assessment Essay

Introduction

Assessment of students is a vital exercise aimed at evaluating their knowledge, talents, thoughts, or beliefs (Harlen, 2007). It involves testing part of the content taught in class to ascertain the students’ learning progress. Assessment should take into consideration both students’ class work and their work outside class. For younger children, the teacher should focus on language development, which will build the children’s confidence in expressing their ideas whenever asked.

As in organizations, checks on students’ progress should be undertaken regularly. Notably, organizations risk investing in futile efforts when they lack opportunities for correction, whereas schools offer more chances to correct mistakes. Similarly, teachers and parents need a basis for nurturing and correcting students, and this is only possible by assessing students at certain intervals during their learning. Equally, parents or teachers can use tests while they teach as a means of quickly resolving the challenges students experience while learning.

All trainers should work together with their students to achieve certain goals. To evaluate whether the goals are met, trainers use various assessment methods depending on the profession, and the same is true of assessment in schools. Assessment should focus on students’ learning progress, and it should be employed from kindergarten to the highest levels of learning, such as university.

The most essential fact about assessment is that it has to be specific: each test should evaluate whether a student can demonstrate understanding of certain concepts taught in class. Contrary to what many examiners believe, assessment should never be used as a means of ranking students.
In that case, the key aims of assessment will be lost. Ranking is not bad in itself, but it can create a negative impression and demoralize students who are not ranked at the top of the class. They feel that they are foolish, which is not the case. In general, assessment should be used to evaluate results and thereby to formulate strategies for improving students’ learning and performance.

Importance of assessment in school

Assessment forms an important part of learning that determines whether the objectives of education have been attained (Salvia, 2001). Assessment is indispensable for important decisions concerning a student’s performance. It is crucial because class performance determines which course or career the student can pursue, and this is not possible without exam assessment. Assessment also prompts instructors to ask themselves a number of questions, including whether they are teaching students what they are supposed to be taught and whether their teaching approach suits the students. Students should be assessed beyond class work, because the world is changing and they must adapt to the dynamics they encounter in their everyday lives.

Assessment is important for parents, students, and teachers. Teachers should be able to identify students’ level of knowledge and their special needs. They should be able to identify skills, design lesson plans, and set learning goals. Similarly, instructors should be able to create new learning arrangements and select appropriate learning materials to meet individual students’ needs. Teachers have to inform parents about each student’s progress in class, which is only possible by assessing students through either exams or group assessment. Assessment will also lead teachers to improve learning mechanisms to meet the needs and abilities of all students.
Assessment also provides teachers with a way of informing the public about students’ progress in school. When parents are informed about their children’s results, they can contribute to decisions concerning their children’s educational needs (Harlen, 2007). Parents are able to select and pay for a relevant curriculum for their children, and they can hire personal tutors or pay for tuition to support their children’s learning. Students, in turn, can use assessment results to evaluate their own performance and learning in school. Results form a basis for self-motivation, because they lead students to put in extra effort to improve their exam performance. Without results, a student might be tempted to assume that he or she has mastered everything taught in class.

Methods of assessment

Various mechanisms can be used to assess students in school. These include both group assessment and the various examinations given during the learning period. Exams can be held on a weekly, monthly, or end-of-term basis, and students may be required to submit a written paper or give an oral presentation. Assignments are normally given with a fixed submission date. The teacher determines the amount of time required depending on the complexity of the assignment. It can take a day, a week, or even a month, which ensures that the student does not rely only on class work. Assignments promote research and instill self-motivation in the student. In addition, short exams give the teacher quick feedback about student performance.

Exam methods of assessment

Before looking at the various methods of exam assessment, it is important to understand the major role that assessment plays in student learning. Carrying out assessment at regular intervals allows teachers to see how their students are progressing over time relative to their previous assessments (Harlen, 2007).
Testing students actually helps them learn and motivates them to learn more and improve their performance in future examinations. It also guides the teacher on ways of passing knowledge on to students. There are three purposes of assessment: assessment for learning, assessment as learning, and assessment of learning. All three help the teacher plan lessons and obtain feedback from students. Moreover, these three purposes unite the efforts of parents, students, and teachers in the learning process.

There are several repercussions when parents do not closely monitor their children’s performance. Education experts compare parents who fail to monitor their children’s learning progress to farmers who sow seeds during planting season and then wait to reap at harvest without having done anything in between. Students succeed most easily when there is harmony among parents, teachers, and students.

Methods of assessment can be categorized into three stages: baseline, formative, and summative (Stefanakis, 2010). Baseline assessment is the most basic and marks the beginning of learning. Summative assessment carries more weight than formative assessment in the student’s overall performance. It carries more marks and is usually done at the end of the teaching period, for example as a term paper. Its aim is to check the student’s overall understanding of the unit or topic. Formative assessment, by contrast, is a continuous process during classroom learning, so the instructor should use general feedback and observations while teaching. It can give the teacher an immediate solution, because the areas that trouble students are easily identified and the teacher can take appropriate action. Teachers should never ignore formative assessment and wait for the summative assessment at the end of the term.
By then, even if the teacher discovers a student’s weaknesses, the discovery is less useful because there is no room left for improvement; summative assessment is a reactive measure rather than a proactive one. Various mechanisms can be used to carry out formative assessment. These include surveys, which collect students’ opinions, attitudes, and behaviors during class (Nitko, 2001). Surveys help the instructor interact with students more closely, creating a supportive learning environment, and allow the teacher to clear up misconceptions students hold from prior knowledge. Formative assessment can also involve student reflection. Here, the student takes some time to reflect on what was taught and asks questions about it, for instance about the most interesting topic, new concepts, or questions left unanswered. It also involves the teacher asking questions during a teaching session. This allows the teacher to identify the areas students have not understood and to focus more effort on some topics than on others. The teacher can also assign homework, which gives students an opportunity to build confidence in the knowledge acquired in class (Stefanakis, 2010). Most importantly, the teacher could state the objectives and expectations of each lesson, possibly in the form of questions. These questions build students’ awareness of and curiosity about the topic.

Various formats have been adopted for the above methods of assessment. First is baseline assessment, which aims at examining an individual’s experience and prior knowledge. Second is the pencil-and-paper method, which is a written test. It can consist of short essays or multiple choice questions, and it checks the student’s understanding of certain concepts. The third is embedded assessment.
Embedded assessment tests students on contextual learning and is done during the formative stage. The fourth format involves oral reports, which aim to capture the student’s communication and scientific skills; these are also carried out in the formative stage. Interviews evaluate group and individual performance during the formative stage. There is also the performance task, which requires the student to act on a problem while explaining a scientific idea; it is usually assessed in both the summative and formative stages. All these formats ensure that the objective of the assessment is achieved (Harlen, 2007), and together these exam methods promote learning and knowledge acquisition among students.

Group methods of assessment

Assessment is a flexible activity: what is done to an individual during assessment can also be done in a group and still achieve the objectives of the assessment. Group work aims to ensure that students work together. The method is not as smooth as individual assessment, since awarding grades is tricky and not straightforward. The instructor cannot easily tell which student contributed most to the group work, so the fallback is to give all group members the same grade in the interest of fairness (Paquette, 2010). It is advisable to consider both the process and the finished product when assessing group work. By looking only at the group’s final work, no one can tell who did what, because individual contributions are implicit in the final project. The teacher should therefore employ other measures to distribute grades fairly. Solutions for assessing group work include considering both the process and the final work. The instructor should assess the process involved in developing the final work, including punctuality, cooperation, and each individual student’s contribution to the group work (Stefanakis, 2010).
Each student’s participation and teamwork should be assessed, and fair grading requires looking at whether the objectives of the project were achieved. In addition, instructors can let students assess and evaluate their own group participation. This strengthens teamwork and yields a fair distribution of grades, because group members know firsthand how the research and written analysis were carried out. Self-assessment also aims to foster respect, promptness, and listening to minority views within the group.

Another effective way of making group work successful is to hold group members accountable, which curbs free riding. Each individual is allocated a portion of the entire job, and members are asked to demonstrate what they have learned and how they have contributed to the group. Both the products and the processes are then assessed.

Another option is for the instructor to give students the opportunity to evaluate the work of other team members. This peer evaluation examines various aspects of the project, including each member’s communication skills, effort, cooperation, and participation, and is facilitated by forms that the students complete. It improves individual accountability and provides useful information about the dynamics within the group. An instructor can also involve external feedback, which is then incorporated into the student’s group grade.

There are various mechanisms for assessing and grading group work. First, there is shared grading: the group’s submitted work is assessed and all members receive the same grade, without considering individual contributions. Second, there is averaging of the group grade.
Under this approach, each member submits his or her allocated portion. After each individual’s work is assessed, the average of all members’ grades is calculated and awarded to every member of the group. Averaging encourages members to focus on both group and individual work. There is also individual grading, in which each student’s allocated work is assessed and graded separately. This encourages every member to put in effort, and it is arguably the fairest way of grading group work. There is also individual report grading, in which each member writes an individual report that is assessed and graded. Finally, there is individual examination grading, in which students answer exam questions based on the project. This encourages students to participate fully in the project, since it is hard to answer the questions without having taken part in the group work.

How assessment prepares students for higher education, the workforce, and character development

In almost every institution, examinations are an unavoidable means of assessing students. Whatever system the governments of various countries adopt, exams are important because they allow teachers to let students who perform well progress in their learning (Stefanakis, 2010). Those who do not meet the minimum grade will require extra tuition before they are promoted, which may require parents to hire tutors. Exam assessment prepares students for higher levels of learning, because higher institutions also use exam assessment. It is therefore important for students to get used to exams as well as research, which will improve their understanding of lectures at university or college. Similarly, at the end of a university degree course, students are required to carry out a project either individually or as a group.
The knowledge and experience of teamwork gained at lower levels of study will play a great role in successfully completing tasks at university.

Assessment also helps students develop their character from childhood to adulthood, so testing should begin as soon as a student joins school. Through the small things the teacher or classmates ask of them, students learn how to associate with others, especially during group tasks. They learn and embrace teamwork, cooperation, and accountability, virtues that form a foundation for character. In addition, students acquire communication skills, especially when presenting project work or speaking in class. These small lessons accumulate and carry over into life outside school, enabling the student to work in any environment.

Exam credentials are vital requirements in the job market. Many firms base their employment qualifications on exams, and employers often choose the best candidates based on their exam results. This approach has been valuable because employers might not have time to assess candidates’ ability to demonstrate their skills (Stefanakis, 2010). The underlying basis is therefore both exam and group assessment. Group assessment helps build teamwork, which is a vital virtue in the workplace. Most projects in an organization are done in groups, so teamwork is crucial during implementation, and students draw on the knowledge and experience of group work gained at school. The working environment is not very different from socialization in school: in any organization, the success of a company is determined by the teamwork and unity of its workers. These vital virtues are learned and developed in school and are strengthened by assessment.

References

Harlen, W. (2007). Assessment of learning. Los Angeles, CA: SAGE Publications.

Nitko, A. J. (2001). Educational assessment of students (3rd ed.). Upper Saddle River, NJ: Merrill.

Paquette, K. R. (2010). Striving for the perfect classroom: Instructional and assessment strategies to meet the needs of today’s diverse learners. New York, NY: Nova Science Publishers.

Salvia, J. (2001). Assessment (8th ed.). Boston, MA: Houghton Mifflin.

Stefanakis, E. H. (2010). Differentiated assessment: How to assess the learning potential of every student. San Francisco, CA: Jossey-Bass.

IvyPanda. (2019, April 22). Students in School: Importance of Assessment Essay. https://ivypanda.com/essays/assessment-of-students-in-schools-essay/

Constructing tests

Whether you use low-stakes assessments, such as practice quizzes, or high-stakes assessments, such as midterms and finals, the careful design of your tests and quizzes can give you better information on what and how much students have learned, as well as whether they are able to apply what they have learned. On this page, you can explore strategies for:

- Designing your test or quiz

- Creating multiple choice questions

- Creating essay and short answer questions

- Helping students succeed on your test/quiz

- Promoting academic integrity

- Assessing your assessment

Designing your test or quiz

Tests and quizzes can help instructors work toward a number of different goals. For example, frequent quizzes can help motivate students, give you insight into students’ progress, and identify aspects of the course you might need to adjust. Understanding what you want to accomplish with the test or quiz will help guide your decision-making about things like length, format, level of detail expected from students, and the time frame for providing feedback to students. Regardless of what type of test or quiz you develop, it is good to:

Creating multiple choice questions

While it is not advisable to rely solely on multiple choice questions to gauge student learning, they are often necessary in large-enrollment courses. Multiple choice questions can also add value to any course by giving instructors quick insight into whether students have a basic understanding of key information. They are also a great way to incorporate more formative assessment into your teaching. Creating effective multiple choice questions can be difficult, especially if you want students to go beyond simply recalling information, but it is possible to develop multiple choice questions that require higher-order thinking. Here are some strategies for writing effective multiple choice questions:

Creating essay and short answer questions

Essay and short answer questions that require students to compose written responses of several sentences or paragraphs offer instructors insights into students’ ability to reason, synthesize, and evaluate information. Here are some strategies for writing effective essay questions:

Strategies for grading essay and short answer questions

Although essay and short answer questions are more labor-intensive to grade than multiple choice questions, the pay-off is often greater: they provide more insight into students’ critical thinking skills. Here are some strategies to help streamline the essay/short answer grading process:

Helping students succeed

While tests are important in university settings, they aren’t common outside the classroom. Think about your own work: how often are you expected to sit down, turn over a sheet of paper, and demonstrate the isolated skill or understanding listed on the paper in less than an hour? Sound stressful? It is! And sometimes that stress can be compounded by students’ lives beyond the classroom.

“Giving a traditional test feels fair from the vantage point of an instructor….The students take it at the same time and work in the same room. But their lives outside the test spill into it. Some students might have to find child care to take an evening exam. Others…have ADHD and are under additional stress in a traditional testing environment. So test conditions can be inequitable. They are also artificial: People are rarely required to solve problems under similar pressure, without outside resources, after they graduate. They rarely have to wait for a professor to grade them to understand their own performance.” (“What fixing a snowblower taught one professor about teaching,” Chronicle of Higher Education)

Many students understandably experience stress, anxiety, and apprehension about taking tests, and that can affect their performance. Here are some strategies for reducing stress in testing environments:

Promoting academic integrity

The primary goal of a test or quiz is to provide insight into a student’s understanding or ability. If a student cheats, the instructor loses the opportunity to assess learning and help the student grow. There are a variety of strategies instructors can employ to create a culture of academic integrity. Here are a few specifically related to developing tests and quizzes:

Assessing your assessment

Observation and iteration are key parts of a reflective teaching practice. After you’ve graded a test or quiz, take time to examine its effectiveness and identify ways to improve it. Start by asking yourself some basic questions:

The Ohio State University

Designing Assessments of Student Learning

Hollie Nyseth Brehm, Associate Professor, Department of Sociology

Professor Hollie Nyseth Brehm was a graduate student the first time she taught a class. “I didn’t have any training on how to teach, so I assigned a final paper and gave them instructions: ‘Turn it in at the end of course.’ That was sort of it.” Brehm didn’t have a rubric or a process to check in with students along the way. Needless to say, the assignment didn’t lead to any major breakthroughs for her students. But it was a learning experience for Brehm. As she grew her teaching skills, she began to carefully craft assignments to align with course goals, make tasks realistic and meaningful, and break large assignments down into manageable steps. “Now I always have rubrics. … I always scaffold the assignment such that they’ll start by giving me their paper topic and a couple of sources and then turn in a smaller portion of it, and we write it in pieces. And that leads to a much better learning experience for them, and also for me, frankly, when I turn to grade it.”

Reflect: Have you ever planned a big assignment that didn’t turn out as you’d hoped? What did you learn, and how would you design that assignment differently now?

What are students learning in your class? Are they meeting your learning outcomes? You simply cannot answer these questions without assessment of some kind. As educators, we measure student learning through many means, including assignments, quizzes, and tests. These assessments can be formal or informal, graded or ungraded. But assessment is not simply about awarding points and assigning grades. Learning is a process, not a product, and that process takes place during activities such as recall and practice. Assessing skills in varied ways helps you adjust your teaching throughout your course to support student learning.

Research tells us that our methods of assessment don’t only measure how much students have learned.
They also play an important role in the learning process. A phenomenon known as the “testing effect” suggests students learn more from repeated testing than from repeated exposure to the material they are trying to learn (Karpicke & Roediger, 2008). While exposure to material, such as during lecture or study, helps students store new information, it’s crucial that students actively practice retrieving that information and putting it to use. Frequent assessment throughout a course provides students with the practice opportunities that are essential to learning.

In addition, we can’t assume students can transfer what they have practiced in one context to a different context. Successful transfer of learning requires understanding of deep, structural features and patterns that novices to a subject are still developing (Barnett & Ceci, 2002; Bransford & Schwartz, 1999). If we want students to be able to apply their learning in a wide variety of contexts, they must practice what they’re learning in a wide variety of contexts. Providing a variety of assessment types gives students multiple opportunities to practice and demonstrate learning. One way to categorize the range of assessment options is as formative or summative.

Formative and Summative Assessment

Opportunities not simply to practice, but to receive feedback on that practice, are crucial to learning (Ambrose et al., 2010). Formative assessment facilitates student learning by providing frequent low-stakes practice coupled with immediate and focused feedback. Whether graded or ungraded, formative assessment helps you monitor student progress and guide students to understand which outcomes they’ve mastered, which they need to focus on, and what strategies can support their learning. Formative assessment also informs how you modify your teaching to better meet student needs throughout your course.
Technology Tip

Design quizzes in CarmenCanvas to provide immediate and useful feedback to students based on their answers. Learn more about setting up quizzes in Carmen.

Summative assessment measures student learning by comparing it to a standard. Usually these types of assessments evaluate a range of skills or overall performance at the end of a unit, module, or course. Unlike formative assessment, they tend to focus more on product than process. These high-stakes experiences are typically graded and should be less frequent (Ambrose et al., 2010).

Using Bloom's Taxonomy

![A visual depiction of the Bloom's Taxonomy categories positioned like the layers of a cake. (Row 1, at bottom) Remember: Recognizing and recalling facts. (Row 2) Understand: Understanding what the facts mean. (Row 3) Apply: Applying the facts, rules, concepts, and ideas. (Row 4) Analyze: Breaking down information into component parts. (Row 5) Evaluate: Judging the value of information or ideas. (Row 6, at top) Create: Combining parts to make a new whole.](https://teaching.resources.osu.edu/sites/default/files/styles/max_960x960/public/2023-08/BloomsTaxonomy.jpeg?itok=1BcPgz2m)

Bloom’s Taxonomy is a common framework for thinking about how students can demonstrate their learning on assessments, as well as for articulating course and lesson learning outcomes. Benjamin Bloom (alongside collaborators Max Engelhart, Edward Furst, Walter Hill, and David Krathwohl) published Taxonomy of Educational Objectives in 1956. The taxonomy provided a system for categorizing educational goals with the intent of aiding educators with assessment. Commonly known as Bloom’s Taxonomy, the framework has been widely used to guide and define instruction in both K-12 and university settings.

The original taxonomy from 1956 included a cognitive domain made up of six categories: Knowledge, Comprehension, Application, Analysis, Synthesis, and Evaluation. The categories after Knowledge were presented as “skills and abilities,” with the understanding that knowledge was the necessary precondition for putting these skills and abilities into practice. A revised Bloom's Taxonomy from 2001 updated these six categories to reflect how learners interact with knowledge. In the revised version, students can: Remember content, Understand ideas, Apply information to new situations, Analyze relationships between ideas, Evaluate information to justify perspectives or decisions, and Create new ideas or original work.
In the graphic pictured here, the categories from the revised taxonomy are imagined as the layers of a cake. Assessing students on a variety of Bloom's categories will give you a better sense of how well they understand your course content. The taxonomy can be a helpful guide to predicting which tasks will be most difficult for students so you can provide extra support where it is needed. It can also be used to craft more transparent assignments and test questions by homing in on the specific skills you want to assess and finding the right language to communicate exactly what you want students to do. See the Sample Bloom's Verbs in the Examples section below.

Diving deeper into Bloom's Taxonomy

Like most aspects of our lives, activities and assessments in today’s classroom are inextricably linked with technology. In 2008, Andrew Churches extended Bloom’s Taxonomy to address the emerging changes in learning behaviors and opportunities as “technology advances and becomes more ubiquitous.” Consult Bloom’s Digital Taxonomy for ideas on using digital tools to facilitate and assess learning across the six categories of learning.

Did you know that the cognitive domain (commonly referred to simply as Bloom's Taxonomy) was only one of three domains in the original Bloom's Taxonomy? While it is certainly the most well-known and widely used, the other two domains, psychomotor and affective, may be of interest to some educators. The psychomotor domain relates to physical movement, coordination, and motor skills; it might apply to the performing arts or other courses that involve movement, manipulation of objects, and non-discursive communication like body language. The affective domain pertains to feelings, values, motivations, and attitudes and is used more often in disciplines like medicine, social work, and education, where emotions and values are integral aspects of learning.
Explore the full taxonomy in Three Domains of Learning: Cognitive, Affective, and Psychomotor (Hoque, 2017).

In Practice

Consider the following to make your assessments of student learning effective and meaningful.

Align assignments, quizzes, and tests closely to learning outcomes.

It goes without saying that you want students to achieve the learning outcomes for your course. The testing effect implies, then, that your assessments must help them retrieve the knowledge and practice the skills that are relevant to those outcomes.

Plan assessments that measure specific outcomes for your course. Instead of choosing quizzes and tests that are easy to grade or assignment types common to your discipline, carefully consider what assessments will best help students practice important skills. When assignments and feedback are aligned to learning outcomes, and you share this alignment with students, they have a greater appreciation for your course and develop more effective strategies for study and practice targeted at achieving those outcomes (Wang et al., 2013).

Provide authentic learning experiences.

Consider how far removed from “the real world” traditional assessments like academic essays, standard textbook problems, and multiple-choice exams feel to students. In contrast, authentic assignments resemble real-world tasks. They feel relevant and purposeful, which can increase student motivation and engagement (Fink, 2013). Authentic assignments also help you assess whether students will be able to transfer what they learn into realistic contexts beyond your course.

Integrate assessment opportunities that prepare students to be effective and successful once they graduate, whether as professionals, as global citizens, or in their personal lives. To design authentic assignments:

Simulations, role plays, case studies, portfolios, project-based learning, and service learning are all great avenues to bring authentic assessment into your course.

Make sure assignments are achievable.

Your students juggle coursework from several classes, so it’s important to be conscious of workload. Assign tasks they can realistically handle at a given point in the term. If it takes you three hours to do something, it will likely take your students six hours or more. Choose assignments that assess multiple learning outcomes from your course to keep your grading manageable and your feedback useful (Rayner et al., 2016).

Scaffold assignments so students can develop knowledge and skills over time.

For large assignments, use scaffolding to integrate multiple opportunities for feedback, reflection, and improvement. Scaffolding means breaking a complex assignment down into component parts or smaller progressive tasks over time. Practicing these smaller tasks individually before attempting to integrate them into a completed assignment supports student learning by reducing the amount of information students need to process at a given time (Salden et al., 2006).

Scaffolding encourages students to start earlier and spend more time on big assignments, and it gives you more opportunities to provide feedback and guidance that support their ultimate success. Additionally, scaffolding can draw students’ attention to important steps in a process that are often overlooked, such as planning and revision, leading them to be more independent and thoughtful about future work.

A familiar example of scaffolding is a research paper. You might ask students to submit a topic or thesis in Week 3 of the semester, an annotated bibliography of sources in Week 6, a detailed outline in Week 9, a first draft on which they can get peer feedback in Week 11, and the final draft in the last week of the semester.

The path students take through your course is shaped in part by how you sequence assignments.
Consider where students are in their learning and place assignments at strategic points throughout the term. Scaffold across the course journey by explaining how each assignment builds upon the learning achieved in previous ones (Walvoord & Anderson, 2010).

Be transparent about assignment instructions and expectations.

Before students begin the work, communicate clearly about the purpose of each assignment, the process for completing the task, and the criteria you will use to evaluate it. Studies have shown that transparent assignments help students meet learning goals and produce especially large increases in success and confidence for underserved students (Winkelmes et al., 2016). To increase assignment transparency:

Engage students in reflection or discussion to increase assignment transparency. Have them consider how the assessed outcomes connect to their personal lives or future careers. In-class activities that ask them to grade sample assignments and discuss the criteria they used, compare exemplars and non-exemplars, engage in self- or peer-evaluation, or complete steps of the assignment when you are present to give feedback can all support student success.

Technology Tip

Enter all assignments and due dates in your Carmen course to increase transparency. When assignments are entered in Carmen, they also populate to the Calendar, Syllabus, and Grades areas so students can easily track their upcoming work. Carmen also allows you to develop rubrics for every assignment in your course.

Examples

- Sample Bloom’s Verbs

- Building a question bank

- Using the transparent assignment template

- Sample assignment: AI-generated lesson plan

Include frequent low-stakes assignments and assessments throughout your course to provide the opportunities for practice and feedback that are essential to learning. Consider a variety of formative and summative assessment types so students can demonstrate learning in multiple ways. Use Bloom’s Taxonomy to determine, and communicate, the specific skills you want to assess. Remember that effective assessments of student learning are:

Ambrose, S. A., Bridges, M. W., Lovett, M. C., DiPietro, M., & Norman, M. K. (2010). How learning works: Seven research-based principles for smart teaching. John Wiley & Sons.

Barnett, S. M., & Ceci, S. J. (2002). When and where do we apply what we learn? A taxonomy for far transfer. Psychological Bulletin, 128(4), 612–637. doi.org/10.1037/0033-2909.128.4.612

Bransford, J. D., & Schwartz, D. L. (1999). Rethinking transfer: A simple proposal with multiple implications. Review of Research in Education, 24, 61–100. doi.org/10.3102/0091732X024001061

Fink, L. D. (2013). Creating significant learning experiences: An integrated approach to designing college courses. John Wiley & Sons.

Karpicke, J. D., & Roediger, H. L., III. (2008). The critical importance of retrieval for learning. Science, 319, 966–968. doi.org/10.1126/science.1152408

Rayner, K., Schotter, E. R., Masson, M. E., Potter, M. C., & Treiman, R. (2016). So much to read, so little time: How do we read, and can speed reading help? Psychological Science in the Public Interest, 17(1), 4–34. doi.org/10.1177/1529100615623267

Salden, R. J. C. M., Paas, F., & van Merriënboer, J. J. G. (2006). A comparison of approaches to learning task selection in the training of complex cognitive skills. Computers in Human Behavior, 22(3), 321–333. doi.org/10.1016/j.chb.2004.06.003

Walvoord, B. E., & Anderson, V. J. (2010). Effective grading: A tool for learning and assessment in college. John Wiley & Sons.

Wang, X., Su, Y., Cheung, S., Wong, E., & Kwong, T. (2013). An exploration of Biggs’ constructive alignment in course design and its impact on students’ learning approaches. Assessment & Evaluation in Higher Education, 38(4), 477–491.

Winkelmes, M., Bernacki, M., Butler, J., Zochowski, M., Golanics, J., & Weavil, K. H. (2016). A teaching intervention that increases underserved college students’ success. Peer Review, 18(1/2), 31–36. Retrieved from https://www.aacu.org/peerreview/2016/winter-spring/Winkelmes
