
Perspective

Published: 22 November 2022

Single case studies are a powerful tool for developing, testing and extending theories

- Lyndsey Nickels (ORCID: orcid.org/0000-0002-0311-3524; affiliations 1, 2)

- Simon Fischer-Baum (ORCID: orcid.org/0000-0002-6067-0538; affiliation 3)

- Wendy Best (ORCID: orcid.org/0000-0001-8375-5916; affiliation 4)

Nature Reviews Psychology volume 1, pages 733–747 (2022)


Subject: Neurological disorders

Psychology embraces a diverse range of methodologies. However, most rely on averaging group data to draw conclusions. In this Perspective, we argue that single case methodology is a valuable tool for developing and extending psychological theories. We stress the importance of single case and case series research, drawing on classic and contemporary cases in which cognitive and perceptual deficits provide insights into typical cognitive processes in domains such as memory, delusions, reading and face perception. We unpack the key features of single case methodology, describe its strengths and its value in adjudicating between theories, and outline how it leads to a better understanding of deficits and hence to more appropriate interventions. The unique insights that single case studies have provided illustrate the value of in-depth investigation within an individual. Single case methodology has an important place in the psychologist's toolkit and should be valued as a primary research tool.
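Establishing a deficit in a single case usually means comparing one person's score with a small control sample. The abstract does not specify a procedure. As an illustration only, the sketch below implements the modified t-test of Crawford and Howell (1998), one widely used option for this comparison.

The test treats the control mean and standard deviation as sample estimates rather than population values. For that reason the standard error is inflated by sqrt((n + 1) / n), and the statistic is evaluated on n - 1 degrees of freedom. The function name `crawford_howell` and the example scores are hypothetical.

```python
import math
import statistics


def crawford_howell(case_score: float, controls: list[float]) -> tuple[float, int]:
    """Modified t-test comparing one case with a small control sample.

    Returns the t statistic and its degrees of freedom (n - 1). The control
    mean and SD are sample estimates, so the denominator is inflated by
    sqrt((n + 1) / n) relative to an ordinary z-score.
    """
    n = len(controls)
    if n < 2:
        raise ValueError("at least two control scores are required")
    mean_c = statistics.mean(controls)
    sd_c = statistics.stdev(controls)  # sample SD with an n - 1 denominator
    if sd_c == 0:
        raise ValueError("control scores have zero variance")
    t = (case_score - mean_c) / (sd_c * math.sqrt((n + 1) / n))
    return t, n - 1


# Hypothetical data: one patient's accuracy score vs. eight matched controls.
controls = [52, 55, 49, 58, 51, 54, 50, 56]
t, df = crawford_howell(38, controls)
print(f"t({df}) = {t:.2f}")
```

To obtain a one-tailed p-value for a suspected deficit, refer t to a t distribution with df degrees of freedom. If SciPy is available, `scipy.stats.t.cdf(t, df)` does this. The Python standard library has no t-distribution CDF.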



Acknowledgements

The authors thank all of those pioneers of and advocates for single case study research who have mentored, inspired and encouraged us over the years, and the many other colleagues with whom we have discussed these issues.

Author information

Authors and affiliations

School of Psychological Sciences & Macquarie University Centre for Reading, Macquarie University, Sydney, New South Wales, Australia

Lyndsey Nickels

NHMRC Centre of Research Excellence in Aphasia Recovery and Rehabilitation, Australia

Lyndsey Nickels

Psychological Sciences, Rice University, Houston, TX, USA

Simon Fischer-Baum

Psychology and Language Sciences, University College London, London, UK

Wendy Best

Contributions

L.N. led and was primarily responsible for the structuring and writing of the manuscript. All authors contributed to all aspects of the article.

Corresponding author

Correspondence to Lyndsey Nickels.

Ethics declarations

Competing interests

The authors declare no competing interests.

Peer review

Peer review information

Nature Reviews Psychology thanks Yanchao Bi, Rob McIntosh, and the other, anonymous, reviewer for their contribution to the peer review of this work.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.


About this article

Cite this article

Nickels, L., Fischer-Baum, S. & Best, W. Single case studies are a powerful tool for developing, testing and extending theories. Nat Rev Psychol 1, 733–747 (2022). https://doi.org/10.1038/s44159-022-00127-y

Accepted: 13 October 2022

Published: 22 November 2022

Issue Date: December 2022

DOI: https://doi.org/10.1038/s44159-022-00127-y


Single-Case Experimental Designs: A Systematic Review of Published Research and Current Standards

Justin D. Smith


Address correspondence to Justin D. Smith, Child and Family Center, University of Oregon, 195 West 12th Avenue, Eugene, OR 97401-3408.

Issue date 2012 Dec.

This article systematically reviews the research design and methodological characteristics of single-case experimental design (SCED) research published in peer-reviewed journals between 2000 and 2010. SCEDs provide researchers with a flexible and viable alternative to group designs with large sample sizes. However, methodological challenges have precluded widespread implementation and acceptance of the SCED as a viable complementary methodology to the predominant group design. This article includes a description of the research design, measurement, and analysis domains distinctive to the SCED; a discussion of the results within the framework of contemporary standards and guidelines in the field; and a presentation of updated benchmarks for key characteristics (e.g., baseline sampling, method of analysis). Overall, it provides researchers and reviewers with a resource for conducting and evaluating SCED research. The results of the systematic review of 409 studies suggest that recently published SCED research is largely in accordance with contemporary criteria for experimental quality. Analytic method emerged as an area of discord. Comparison of the findings of this review with historical estimates of the use of statistical analysis indicates an upward trend, but visual analysis remains the most common analytic method and also garners the most support among the bodies that provide SCED standards. Although consensus exists along key dimensions of single-case research design and researchers appear to be practicing within these parameters, there remains a need for further evaluation of assessment and sampling techniques and data analytic methods.

Keywords: daily diary, single-case experimental design, systematic review, time-series

The single-case experiment has a storied history in psychology dating back to the field’s founders: Fechner (1889), Watson (1925), and Skinner (1938). It has been used to inform and develop theory, examine interpersonal processes, study the behavior of organisms, establish the effectiveness of psychological interventions, and address a host of other research questions (for a review, see Morgan & Morgan, 2001). In recent years the single-case experimental design (SCED) has been represented in the literature more often than in past decades, as is evidenced by recent reviews (Hammond & Gast, 2010; Shadish & Sullivan, 2011), but it still languishes behind the more prominent group design in nearly all subfields of psychology. Group designs are often professed to be superior because they minimize, although do not necessarily eliminate, the major internal validity threats to drawing scientifically valid inferences from the results (Shadish, Cook, & Campbell, 2002). SCEDs provide a rigorous, methodologically sound alternative method of evaluation (e.g., Barlow, Nock, & Hersen, 2008; Horner et al., 2005; Kazdin, 2010; Kratochwill & Levin, 2010; Shadish et al., 2002) but are often overlooked as a true experimental methodology capable of eliciting legitimate inferences (e.g., Barlow et al., 2008; Kazdin, 2010). Despite a shift in the zeitgeist from single-case experiments to group designs more than a half century ago, recent and rapid methodological advancements suggest that SCEDs are poised for resurgence.

Basics of the SCED

Single case refers to the participant or cluster of participants (e.g., a classroom, hospital, or neighborhood) under investigation. In contrast to an experimental group design, in which one group is compared with another, participants in single-subject experimental research provide their own control data: comparisons are made within subjects rather than between subjects. SCEDs typically involve a comparison between two experimental time periods, known as phases. This approach typically includes collecting a representative baseline phase to serve as a comparison with subsequent phases. In studies in which the single subject is actually a group (e.g., a classroom or school), there are additional threats to the internal validity of the results, such as setting or site effects (Kratochwill & Levin, 2010).

The central goal of the SCED is to determine whether a causal or functional relationship exists between a researcher-manipulated independent variable (IV) and a meaningful change in the dependent variable (DV). SCEDs generally involve repeated, systematic assessment of one or more IVs and DVs over time. The DV is measured repeatedly across and within all conditions or phases of the IV. Experimental control in SCEDs includes replication of the effect either within or between participants (Horner et al., 2005). Randomization is another way in which threats to internal validity can be experimentally controlled. Kratochwill and Levin (2010) recently provided multiple suggestions for adding a randomization component to SCEDs to improve the methodological rigor and internal validity of the findings.
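The randomization idea can be made concrete with a small sketch. The example below implements one well-known option, a randomization test for a simple AB design in which the intervention start point is drawn at random from a set of admissible sessions; it is offered as an illustration, not as the specific procedure proposed by Kratochwill and Levin (2010). The function name `ab_randomization_test`, the mean-difference test statistic, the one-sided alternative (B higher than A), the minimum of three observations per phase, and the data are all assumptions chosen for illustration.

```python
from statistics import mean


def ab_randomization_test(series, actual_start, min_phase_len=3):
    """Start-point randomization test for an AB design (illustrative sketch).

    Assumes the intervention start was drawn at random from every session index
    that leaves at least `min_phase_len` observations in each phase.
    Returns (observed statistic, one-sided p value).
    """
    n = len(series)
    admissible = range(min_phase_len, n - min_phase_len + 1)
    if actual_start not in admissible:
        raise ValueError("actual_start is not an admissible start point")

    def b_minus_a(start):
        # Test statistic: B-phase mean minus A-phase mean (B expected higher).
        return mean(series[start:]) - mean(series[:start])

    observed = b_minus_a(actual_start)
    # Reference distribution: the statistic at every start point that could have been drawn.
    reference = [b_minus_a(s) for s in admissible]
    # One-sided p: share of admissible start points giving a statistic at least as large.
    p_value = sum(stat >= observed for stat in reference) / len(reference)
    return observed, p_value


series = [2, 3, 2, 3, 2, 6, 7, 6, 7, 8, 7, 6]  # hypothetical session scores
observed, p = ab_randomization_test(series, actual_start=5)
print(f"observed difference = {observed:.2f}, p = {p:.3f}")
# observed difference = 4.31, p = 0.143
```

With 12 sessions and at least three observations per phase there are only seven admissible start points, so the smallest attainable p value is 1/7; under this scheme the number of admissible start points, not the size of the effect alone, bounds the attainable significance level.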

Examination of the effectiveness of interventions is perhaps the area in which SCEDs are best represented (Morgan & Morgan, 2001). Researchers in behavioral medicine and in clinical, health, educational, school, sport, rehabilitation, and counseling psychology often use SCEDs because they are particularly well suited to examining the processes and outcomes of psychological and behavioral interventions (e.g., Borckardt et al., 2008; Kazdin, 2010; Robey, Schultz, Crawford, & Sinner, 1999). Skepticism about the clinical utility of the randomized controlled trial (e.g., Jacobsen & Christensen, 1996; Wachtel, 2010; Westen & Bradley, 2005; Westen, Novotny, & Thompson-Brenner, 2004) has renewed researchers’ interest in SCEDs as a means to assess intervention outcomes (e.g., Borckardt et al., 2008; Dattilio, Edwards, & Fishman, 2010; Horner et al., 2005; Kratochwill, 2007; Kratochwill & Levin, 2010). Although SCEDs are relatively well represented in the intervention literature, it is by no means their sole home: Examples appear in nearly every subfield of psychology (e.g., Bolger, Davis, & Rafaeli, 2003; Piasecki, Hufford, Solham, & Trull, 2007; Reis & Gable, 2000; Shiffman, Stone, & Hufford, 2008; Soliday, Moore, & Lande, 2002). Aside from the current preference for group-based research designs, several methodological challenges have hindered the proliferation of the SCED.

Methodological Complexity

SCEDs undeniably present researchers with a complex array of methodological and research design challenges, such as establishing a representative baseline, managing the nonindependence of sequential observations (i.e., autocorrelation, serial dependence), interpreting single-subject effect sizes, analyzing the short data streams seen in many applications, and appropriately addressing the matter of missing observations. In the field of intervention research, for example, Hser et al. (2001) noted that studies using SCEDs are “rare” because of the minimum number of observations that are necessary (e.g., 3–5 data points in each phase) and the complexity of available data analysis approaches. Advances in longitudinal person-based trajectory analysis (e.g., Nagin, 1999), structural equation modeling techniques (e.g., Lubke & Muthén, 2005), time-series forecasting (e.g., autoregressive integrated moving averages; Box & Jenkins, 1970), and statistical programs designed specifically for SCEDs (e.g., Simulation Modeling Analysis; Borckardt, 2006) have provided researchers with robust means of analysis, but these methods might not be feasible for the average psychological scientist.
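Serial dependence is commonly summarized first by the lag-1 autocorrelation of a single data stream. The sketch below uses the conventional estimator r1 = sum over t of (x_t - mean)(x_{t+1} - mean), divided by the sum of squared deviations; the function name is hypothetical, and with the short series typical of SCEDs this estimate is imprecise, so it is best read as a descriptive check rather than a formal test.

```python
def lag1_autocorrelation(series):
    """Conventional lag-1 autocorrelation estimate r1 of one data stream."""
    n = len(series)
    if n < 3:
        raise ValueError("need at least three observations")
    m = sum(series) / n
    dev = [x - m for x in series]
    denom = sum(d * d for d in dev)
    if denom == 0:
        raise ValueError("series has zero variance")
    # Sum of products of each deviation with the next one (pairs t, t + 1).
    num = sum(dev[t] * dev[t + 1] for t in range(n - 1))
    return num / denom


trend = [1, 2, 3, 4, 5]            # steadily rising series
alternating = [1, 3, 1, 3, 1, 3]   # see-saw series
print(lag1_autocorrelation(trend))  # 0.4
print(lag1_autocorrelation(alternating))
```

The rising series shows that a trend alone yields a positive r1, one reason trend and autocorrelation are hard to separate within short SCED phases; the see-saw series yields a strongly negative value.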

Application of the SCED has also expanded. Today, researchers use variants of the SCED to examine complex psychological processes and the relationship between daily and momentary events in people’s lives and their psychological correlates. Research in nearly all subfields of psychology has begun to use daily diary and ecological momentary assessment (EMA) methods in the context of the SCED, opening the door to understanding increasingly complex psychological phenomena (see Bolger et al., 2003; Shiffman et al., 2008). In contrast to the carefully controlled laboratory experiment that dominated research in the first half of the twentieth century (e.g., Skinner, 1938; Watson, 1925), contemporary proponents advocate application of the SCED in naturalistic studies to increase the ecological validity of empirical findings (e.g., Bloom, Fisher, & Orme, 2003; Borckardt et al., 2008; Dattilio et al., 2010; Jacobsen & Christensen, 1996; Kazdin, 2008; Morgan & Morgan, 2001; Westen & Bradley, 2005; Westen et al., 2004). Recent advancements and expanded application of SCEDs indicate a need for updated design and reporting standards.

This Review

Many current benchmarks in the literature concerning key parameters of the SCED were established well before current advancements and innovations; one example is the suggested minimum number of data points in the baseline phase(s), which remains a disputed area of SCED research (e.g., Center, Skiba, & Casey, 1986; Huitema, 1985; R. R. Jones, Vaught, & Weinrott, 1977; Sharpley, 1987). This article comprises (a) an examination of contemporary SCED methodological and reporting standards; (b) a systematic review of select design, measurement, and statistical characteristics of published SCED research during the past decade; and (c) a broad discussion of the critical aspects of this research to inform methodological improvements and study reporting standards. The reader will gain a fundamental understanding of what constitutes appropriate methodological soundness in single-case experimental research according to the established standards in the field, which can be used to guide the design of future studies, improve the presentation of publishable empirical findings, and inform the peer-review process. The discussion begins with the basic characteristics of the SCED, including an introduction to time-series, daily diary, and EMA strategies, and describes how current reporting and design standards apply to each of these areas of single-case research. Interwoven within this presentation are the results of a systematic review of SCED research published between 2000 and 2010 in peer-reviewed outlets and a discussion of the way in which these findings support, or differ from, existing design and reporting standards and published SCED benchmarks.

Review of Current SCED Guidelines and Reporting Standards

In contrast to experimental group comparison studies, which conform to generally well-agreed-upon methodological design and reporting guidelines, such as the CONSORT (Moher, Schulz, Altman, & the CONSORT Group, 2001) and TREND (Des Jarlais, Lyles, & Crepaz, 2004) statements for randomized and nonrandomized trials, respectively, there is comparatively much less consensus when it comes to the SCED. Until fairly recently, design and reporting guidelines for single-case experiments were almost entirely absent in the literature and were typically determined by the preferences of a research subspecialty or a particular journal’s editorial board. Factions still exist within the larger field of psychology, as can be seen in the collection of standards presented in this article, particularly in regard to data analytic methods of SCEDs, but fortunately there is budding agreement about certain design and measurement characteristics. A number of task forces, professional groups, and independent experts in the field have recently put forth guidelines; each has a relatively distinct purpose, which likely accounts for some of the discrepancies between them. In what will be a central theme of this article, researchers are ultimately responsible for thoughtfully and synergistically combining the research design, measurement, and analysis aspects of a study.

This review presents the more prominent, comprehensive, and recently established SCED standards. Six sources are discussed: (1) Single-Case Design Technical Documentation from the What Works Clearinghouse (WWC; Kratochwill et al., 2010); (2) the APA Division 12 Task Force on Psychological Interventions, with contributions from the Division 12 Task Force on Promotion and Dissemination of Psychological Procedures and the APA Task Force for Psychological Intervention Guidelines (DIV12; presented in Chambless & Hollon, 1998; Chambless & Ollendick, 2001), adopted and expanded by APA Division 53, the Society for Clinical Child and Adolescent Psychology (Weisz & Hawley, 1998, 1999); (3) the APA Division 16 Task Force on Evidence-Based Interventions in School Psychology (DIV16; Members of the Task Force on Evidence-Based Interventions in School Psychology. Chair: T. R. Kratochwill, 2003); (4) the National Reading Panel (NRP; National Institute of Child Health and Human Development, 2000); (5) the Single-Case Experimental Design Scale (Tate et al., 2008); and (6) the reporting guidelines for EMA put forth by Stone and Shiffman (2002). Although the specific purposes of each source differ somewhat, the overall aim is to provide researchers and reviewers with agreed-upon criteria to be used in the conduct and evaluation of SCED research. The standards provided by WWC, DIV12, DIV16, and the NRP represent the efforts of task forces. The Tate et al. scale was selected for inclusion in this review because it represents perhaps the only psychometrically validated tool for assessing the rigor of SCED methodology. Stone and Shiffman’s (2002) standards were intended specifically for EMA methods, but many of their criteria also apply to time-series, daily diary, and other repeated-measurement and sampling methods, making them pertinent to this article. The design, measurement, and analysis standards are presented in the later sections of this article, and notable concurrences, discrepancies, strengths, and deficiencies are summarized.

Systematic Review Search Procedures and Selection Criteria

Search strategy

A comprehensive search was performed to identify SCED studies published in peer-reviewed journals that met a priori search and inclusion criteria. First, a computer-based PsycINFO search of articles published between 2000 and 2010 (search conducted in July 2011) was conducted using the following primary key terms and phrases, which could appear anywhere in the article (an asterisk denotes that any characters/letters can follow the last character of the search term): alternating treatment design, changing criterion design, experimental case*, multiple baseline design, replicated single-case design, simultaneous treatment design, time-series design. The search was limited to studies published in the English language and those appearing in peer-reviewed journals within the specified publication year range. Additional limiters of the type of article were also used in PsycINFO to increase specificity: The search was limited to include methodologies indexed as either quantitative study OR treatment outcome/randomized clinical trial and NOT field study OR interview OR focus group OR literature review OR systematic review OR mathematical model OR qualitative study.
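The search logic above (truncated key terms, date and language limits, and the include/NOT methodology limiters) can be expressed as a filter over exported records. The sketch below is hypothetical throughout: the `Record` fields, the phrase matching, and the reading of `experimental case*` as a regular-expression suffix are assumptions, and PsycINFO's own query syntax and indexing behave differently.

```python
import re
from dataclasses import dataclass, field

KEY_TERMS = [
    "alternating treatment design", "changing criterion design",
    "experimental case*", "multiple baseline design",
    "replicated single-case design", "simultaneous treatment design",
    "time-series design",
]
INCLUDE_METHODS = {"quantitative study", "treatment outcome/randomized clinical trial"}
EXCLUDE_METHODS = {
    "field study", "interview", "focus group", "literature review",
    "systematic review", "mathematical model", "qualitative study",
}


def term_to_regex(term):
    """Compile a key term; a trailing '*' lets any word characters follow (truncation)."""
    stem = re.escape(term.rstrip("*"))
    tail = r"\w*" if term.endswith("*") else r"\b"
    return re.compile(r"\b" + stem + tail, re.IGNORECASE)


PATTERNS = [term_to_regex(t) for t in KEY_TERMS]


@dataclass
class Record:
    """Hypothetical stand-in for one exported database record."""
    text: str
    year: int
    language: str
    peer_reviewed: bool
    methods: set = field(default_factory=set)


def passes_search(rec):
    # Date, language, and publication-type limiters.
    if not (2000 <= rec.year <= 2010 and rec.language == "English" and rec.peer_reviewed):
        return False
    # (quantitative study OR treatment outcome/RCT) AND NOT (any excluded methodology).
    if not (rec.methods & INCLUDE_METHODS) or (rec.methods & EXCLUDE_METHODS):
        return False
    # At least one key term must appear somewhere in the searchable text.
    return any(p.search(rec.text) for p in PATTERNS)
```

For example, a 2005 peer-reviewed English record whose text mentions "experimental cases" and whose methodology is indexed only as a quantitative study passes, whereas the same record also indexed as an interview study is excluded by the NOT limiter.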

Study selection

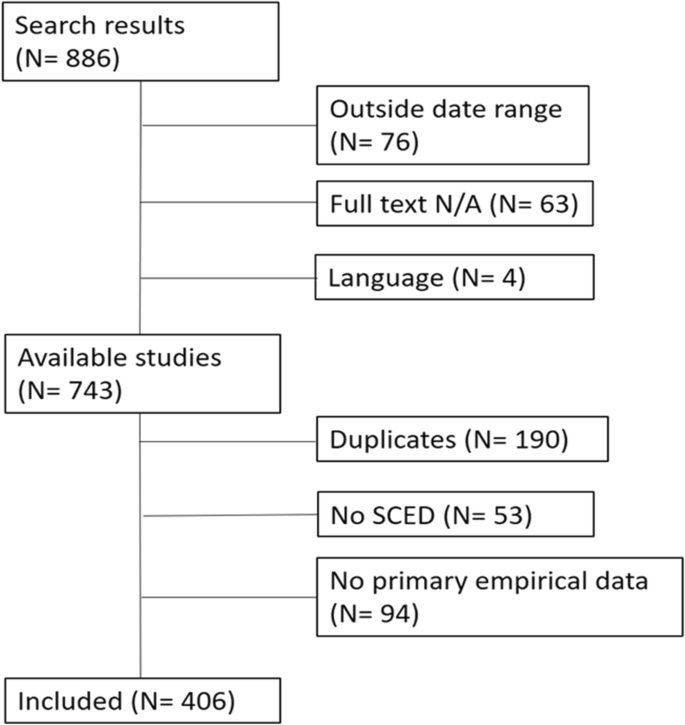

The author used a three-phase study selection, screening, and coding procedure to select the highest number of applicable studies. Phase 1 consisted of the initial systematic search conducted using PsycINFO, which resulted in 571 articles. In Phase 2, titles and abstracts were screened: Articles appearing to use a SCED were retained (451) for Phase 3, in which the author and a trained research assistant read each full-text article and entered the characteristics of interest into a database. At each phase of the screening process, studies that did not use a SCED or that either self-identified as, or were determined to be, quasi-experimental were dropped. Of the 571 original studies, 82 studies were determined to be quasi-experimental. The definition of a quasi-experimental design used in the screening procedure conforms to the descriptions provided by Kazdin (2010) and Shadish et al. (2002) regarding the necessary components of an experimental design. For example, reversal designs require a minimum of four phases (e.g., ABAB), and multiple baseline designs must demonstrate replication of the effect across at least three conditions (e.g., subjects, settings, behaviors). Sixteen studies were unavailable in full text in English, and five could not be obtained in full text; these were also dropped. The remaining articles that were not retained for review (59) were determined not to be SCED studies meeting our inclusion criteria, but had been identified in our PsycINFO search using the specified keyword and methodology terms. For this review, 409 studies were selected. The sources of the 409 reviewed studies are summarized in Table 1. A complete bibliography of the 571 studies appearing in the initial search, with the included studies marked, is available online as an Appendix or from the author.
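As a quick consistency check, the exclusion counts reported in the selection procedure reconcile exactly with the final sample: 571 - (82 + 16 + 5 + 59) = 571 - 162 = 409. The snippet below simply restates the reported numbers; the dictionary labels are paraphrases.

```python
identified = 571  # Phase 1: articles returned by the PsycINFO search

# Exclusions as reported in the study-selection procedure.
excluded = {
    "quasi-experimental": 82,
    "no full text in English": 16,
    "full text could not be obtained": 5,
    "not a SCED meeting inclusion criteria": 59,
}

included = identified - sum(excluded.values())
print(included)  # 409
```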

Table 1. Journal Sources of Studies Included in the Systematic Review (N = 409)

Note: Each of the following journal titles contributed 1 study unless otherwise noted in parentheses: Augmentative and Alternative Communication; Acta Colombiana de Psicología; Acta Comportamentalia; Adapted Physical Activity Quarterly (2); Addiction Research and Theory; Advances in Speech Language Pathology; American Annals of the Deaf; American Journal of Education; American Journal of Occupational Therapy; American Journal of Speech-Language Pathology; The American Journal on Addictions; American Journal on Mental Retardation; Applied Ergonomics; Applied Psychophysiology and Biofeedback; Australian Journal of Guidance & Counseling; Australian Psychologist; Autism; The Behavior Analyst; The Behavior Analyst Today; Behavior Analysis in Practice (2); Behavior and Social Issues (2); Behaviour Change (2); Behavioural and Cognitive Psychotherapy; Behaviour Research and Therapy (3); Brain and Language (2); Brain Injury (2); Canadian Journal of Occupational Therapy (2); Canadian Journal of School Psychology; Career Development for Exceptional Individuals; Chinese Mental Health Journal; Clinical Linguistics and Phonetics; Clinical Psychology & Psychotherapy; Cognitive and Behavioral Practice; Cognitive Computation; Cognitive Therapy and Research; Communication Disorders Quarterly; Developmental Medicine & Child Neurology (2); Developmental Neurorehabilitation (2); Disability and Rehabilitation: An International, Multidisciplinary Journal (3); Disability and Rehabilitation: Assistive Technology; Down Syndrome: Research & Practice; Drug and Alcohol Dependence (2); Early Childhood Education Journal (2); Early Childhood Services: An Interdisciplinary Journal of Effectiveness; Educational Psychology (2); Education and Training in Autism and Developmental Disabilities; Electronic Journal of Research in Educational Psychology; Environment and Behavior (2); European Eating Disorders Review; European Journal of Sport Science; European Review of Applied Psychology; Exceptional Children; Exceptionality; Experimental and Clinical Psychopharmacology; Family & Community Health: The Journal of Health Promotion & Maintenance; Headache: The Journal of Head and Face Pain; International Journal of Behavioral Consultation and Therapy (2); International Journal of Disability, Development and Education (2); International Journal of Drug Policy; International Journal of Psychology; International Journal of Speech-Language Pathology; International Psychogeriatrics; Japanese Journal of Behavior Analysis (3); Japanese Journal of Special Education; Journal of Applied Research in Intellectual Disabilities (2); Journal of Applied Sport Psychology (3); Journal of Attention Disorders (2); Journal of Behavior Therapy and Experimental Psychiatry; Journal of Child Psychology and Psychiatry; Journal of Clinical Psychology in Medical Settings; Journal of Clinical Sport Psychology; Journal of Cognitive Psychotherapy; Journal of Consulting and Clinical Psychology (2); Journal of Deaf Studies and Deaf Education; Journal of Educational & Psychological Consultation (2); Journal of Evidence-Based Practices for Schools (2); Journal of the Experimental Analysis of Behavior (2); Journal of General Internal Medicine; Journal of Intellectual and Developmental Disabilities; Journal of Intellectual Disability Research (2); Journal of Medical Speech-Language Pathology; Journal of Neurology, Neurosurgery & Psychiatry; Journal of Paediatrics and Child Health; Journal of Prevention and Intervention in the Community; Journal of Safety Research; Journal of School Psychology (3); The Journal of Socio-Economics; The Journal of Special Education; Journal of Speech, Language, and Hearing Research (2); Journal of Sport Behavior; Journal of Substance Abuse Treatment; Journal of the International Neuropsychological Society; Journal of Traumatic Stress; The Journals of Gerontology: Series B: Psychological Sciences and Social Sciences; Language, Speech, and Hearing Services in Schools; Learning Disabilities Research & Practice (2); Learning Disability Quarterly (2); Music Therapy Perspectives; Neurorehabilitation and Neural Repair; Neuropsychological Rehabilitation (2); Pain; Physical Education and Sport Pedagogy (2); Preventive Medicine: An International Journal Devoted to Practice and Theory; Psychological Assessment; Psychological Medicine: A Journal of Research in Psychiatry and the Allied Sciences; The Psychological Record; Reading and Writing; Remedial and Special Education (3); Research and Practice for Persons with Severe Disabilities (2); Restorative Neurology and Neuroscience; School Psychology International; Seminars in Speech and Language; Sleep and Hypnosis; School Psychology Quarterly; Social Work in Health Care; The Sport Psychologist (3); Therapeutic Recreation Journal (2); The Volta Review; Work: Journal of Prevention, Assessment & Rehabilitation.

Coding criteria amplifications

A comprehensive description of the coding criteria for each category in this review is available from the author by request. The primary coding criteria are described here and in later sections of this article.

Research design was classified into one of the types discussed later in the section titled Predominant Single-Case Experimental Designs on the basis of the authors’ stated design type. Secondary research designs were then coded when applicable (i.e., mixed designs). Distinctions between primary and secondary research designs were made based on the authors’ description of their study. For example, if an author described the study as a “multiple baseline design with time-series measurement,” the primary research design would be coded as being multiple baseline, and time-series would be coded as the secondary research design.

Observer ratings were coded as present when observational coding procedures were described and/or the results of a test of interobserver agreement were reported.

Interrater reliability for observer ratings was coded as present in any case in which percent agreement, alpha, kappa, or another appropriate statistic was reported, regardless of the amount of the total data that were examined for agreement.
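Percent agreement and Cohen's kappa, two of the statistics named above, can be computed from two observers' interval-by-interval records as sketched below. The 0/1 interval coding, the function names, and the example records are hypothetical. Kappa corrects the observed agreement p_o for the agreement p_e expected by chance from each observer's marginal proportions: kappa = (p_o - p_e) / (1 - p_e).

```python
from collections import Counter


def percent_agreement(a, b):
    """Percentage of intervals on which two observers recorded the same code."""
    if len(a) != len(b) or not a:
        raise ValueError("records must be non-empty and of equal length")
    return 100 * sum(x == y for x, y in zip(a, b)) / len(a)


def cohens_kappa(a, b):
    """Cohen's kappa: agreement corrected for chance, (p_o - p_e) / (1 - p_e)."""
    if len(a) != len(b) or not a:
        raise ValueError("records must be non-empty and of equal length")
    n = len(a)
    p_o = sum(x == y for x, y in zip(a, b)) / n
    count_a, count_b = Counter(a), Counter(b)
    # Chance agreement: for each code, product of the two observers' marginal proportions.
    p_e = sum(count_a[k] * count_b[k] for k in count_a.keys() | count_b.keys()) / n**2
    if p_e == 1:
        raise ValueError("kappa is undefined when chance agreement is 1")
    return (p_o - p_e) / (1 - p_e)


# Hypothetical interval records: 1 = target behavior observed, 0 = not observed.
observer_1 = [1, 1, 0, 1, 0, 0, 1, 1, 0, 1]
observer_2 = [1, 0, 0, 1, 0, 1, 1, 1, 0, 1]
print(f"{percent_agreement(observer_1, observer_2):.1f}% agreement")  # 80.0% agreement
print(f"kappa = {cohens_kappa(observer_1, observer_2):.3f}")          # kappa = 0.583
```

Here the observers agree on 80% of intervals, but because each recorded the behavior in 6 of 10 intervals, a chance agreement of p_e = 0.52 is expected, so kappa is considerably lower than raw agreement.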

Daily diary, daily self-report, and EMA codes were given when authors explicitly described these procedures in the text by name. Coders did not infer the use of these measurement strategies.

The number of baseline observations was either taken directly from the numbers reported in the text or counted in graphical displays of the data when this was determined to be a reliable approach. In some cases, it was not possible to reliably determine the number of baseline data points from the graphical display of data, in which case the “unavailable” code was assigned. Similarly, the “unavailable” code was assigned when the number of observations was unreported or ambiguous, or when only a range was provided and thus no mean could be determined. In addition, the mean number of baseline observations was calculated for each study prior to further descriptive statistical analyses because a number of studies reported means only.
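The two-step aggregation described above (a mean per study first, then descriptive statistics across studies, with unavailable values set aside) can be sketched as follows. The use of `None` for the “unavailable” code, the per-tier input format, the function names, and the choice of mean and standard deviation as summary statistics are assumptions for illustration; the example data are invented.

```python
from statistics import mean, stdev


def study_mean_baseline(counts):
    """Mean baseline length for one study; None stands in for the "unavailable" code."""
    if not counts:  # None or an empty list -> unavailable
        return None
    return mean(counts)


def summarize_baselines(studies):
    """Mean and SD across studies of the per-study mean baseline length."""
    per_study = [m for m in map(study_mean_baseline, studies) if m is not None]
    if len(per_study) < 2:
        raise ValueError("need at least two studies with usable baseline counts")
    return mean(per_study), stdev(per_study), len(per_study)


# Hypothetical input: baseline observations per tier/participant for four studies.
studies = [[3, 5, 7], [10], None, [4, 6]]
m, sd, k = summarize_baselines(studies)
print(f"{k} studies: mean = {m:.2f}, SD = {sd:.2f}")  # 3 studies: mean = 6.67, SD = 2.89
```

Averaging within studies first also gives each study equal weight in the summary, regardless of how many tiers or participants it contributes.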

The coding of the analytic method used in the reviewed studies is discussed later in the section titled Discussion of Review Results and Coding of Analytic Methods.

Results of the Systematic Review

Descriptive statistics of the design, measurement, and analysis characteristics of the reviewed studies are presented in Table 2. The results and their implications are discussed in the relevant sections throughout the remainder of the article.

Table 2. Descriptive Statistics of Reviewed SCED Characteristics

Note. % refers to the proportion of reviewed studies that satisfied criteria for this code: For example, the percent of studies reporting observer ratings.

The categories in the “Research design” subsection are the primary designs identified by the authors.

Categories in the “Mixed designs” subsection are included in the “Research design” subsection. Only the three most prevalent mixed designs are reported.

One study of 624 subjects was excluded from the calculation of the number of subjects because it was a significant outlier.

Similarly, one study with 500 subjects and one study with 950 subjects were excluded from the number-of-subjects analyses for the simultaneous condition and time-series designs, respectively. This left only one simultaneous condition study, which is why no standard deviation or range is reported.

Because of reporting inconsistencies in the reviewed articles, the mean number of baseline observations for each study was first calculated and then combined and reported in this table.

In contrast to the results reported in text, the findings here are based on the total number of studies and are not divided into those that reported an analysis and those that did not. Visual and statistical analyses are not applicable to most studies using changing criterion designs. However, some authors reported using visual analysis methods.

Discussion of the Systematic Review Results in Context

The SCED is a very flexible methodology and has many variants. Those mentioned here are the building blocks from which other designs are then derived. For those readers interested in the nuances of each design, Barlow et al. (2008); Franklin, Allison, and Gorman (1997); Kazdin (2010); and Kratochwill and Levin (1992), among others, provide cogent, in-depth discussions. Identifying the appropriate SCED depends upon many factors, including the specifics of the IV, the setting in which the study will be conducted, participant characteristics, the desired or hypothesized outcomes, and the research question(s). Similarly, the researcher’s selection of measurement and analysis techniques is determined by these factors.

Predominant Single-Case Experimental Designs

Alternating/simultaneous designs (6%; primary design of the studies reviewed)

Alternating and simultaneous designs involve an iterative manipulation of the IV(s) across different phases to show that changes in the DV vary systematically as a function of manipulating the IV(s). In these multielement designs, the researcher has the option to alternate the introduction of two or more IVs or to present two or more IVs at the same time. In the alternating variation, the researcher is able to determine the relative impact of two different IVs on the DV when all other conditions are held constant. Another variation of this design is to alternate IVs across various conditions that could be related to the DV (e.g., class period, interventionist). In the simultaneous variation, by contrast, the IVs are presented at the same time within the same phase of the study.
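In an alternating variation, the order of conditions across sessions is often randomized under a constraint on consecutive repeats. The sketch below draws such an order by rejection sampling; the function name, the equal number of sessions per condition, and the rule of at most two consecutive sessions of the same condition are assumptions, not requirements taken from the standards reviewed here.

```python
import random


def alternating_schedule(conditions, sessions_per_condition, max_run=2, seed=None):
    """Random session order with equal counts per condition and no run longer than max_run."""
    if len(set(conditions)) < 2:
        raise ValueError("alternation needs at least two distinct conditions")
    if max_run < 1:
        raise ValueError("max_run must be at least 1")
    rng = random.Random(seed)
    order = [c for c in conditions for _ in range(sessions_per_condition)]
    while True:
        rng.shuffle(order)
        # Accept only if every window of max_run + 1 sessions contains more than one condition.
        if all(len(set(order[i:i + max_run + 1])) > 1
               for i in range(len(order) - max_run)):
            return list(order)


# Two hypothetical interventions, six sessions each; the seed makes the draw reproducible.
print(alternating_schedule(["A", "B"], sessions_per_condition=6, seed=1))
```

Rejection sampling keeps the code short and every admissible order equally likely; with many conditions or a very tight run limit, a constructive method would be faster.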

Changing criterion design (4%)

Changing criterion designs are used to demonstrate a gradual change in the DV over the course of the phase involving the active manipulation of the IV. The criterion that the DV must meet is changed in a stepwise manner, shifting as the participant responds to the presence of the manipulated IV. The changing criterion design is particularly useful in applied intervention research for a number of reasons. The IV is continuous and never withdrawn, unlike the strategy used in a reversal design. This is particularly important in situations where removal of a psychological intervention would be detrimental or dangerous to the participant, or would otherwise be unfeasible or unethical. The multiple baseline design also does not withdraw intervention, but it requires replicating the effects of the intervention across participants, settings, or situations. A changing criterion design can be accomplished with one participant in one setting without withholding or withdrawing treatment.
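Whether behavior tracks each successive criterion can be checked subphase by subphase. In this sketch, the function name, the hypothetical smoking-reduction data, and the strict rule that every observation in a subphase must meet that subphase's criterion are illustrative assumptions; applied studies may use looser rules, such as a proportion of sessions or a stability requirement.

```python
def criteria_met(subphases, criteria, lower_is_better=True):
    """Return, for each subphase, whether every observation met that subphase's criterion."""
    if len(subphases) != len(criteria):
        raise ValueError("supply exactly one criterion per subphase")
    results = []
    for observations, criterion in zip(subphases, criteria):
        if lower_is_better:
            results.append(all(x <= criterion for x in observations))
        else:
            results.append(all(x >= criterion for x in observations))
    return results


# Hypothetical cigarettes per day, with the criterion lowered in four steps.
subphases = [[14, 15, 13], [12, 11, 12], [10, 9, 9], [6, 5, 6]]
criteria = [15, 12, 9, 6]
print(criteria_met(subphases, criteria))  # [True, True, False, True]
```

A subphase that fails, like the third one here, is one in which the DV did not follow the criterion shift, which weakens the demonstration of experimental control at that step.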

Multiple baseline/combined series design (69%)

The multiple baseline or combined series design can be used to test within-subject change across conditions and often involves multiple participants in a replication context. The multiple baseline design is quite simple in many ways, essentially consisting of a number of repeated, miniature AB experiments or variations thereof. Introduction of the IV is staggered temporally across multiple participants or across multiple within-subject conditions, which allows the researcher to demonstrate that changes in the DV reliably occur only when the IV is introduced, thus controlling for the effects of extraneous factors. Multiple baseline designs can be used both within and across units (i.e., persons or groups of persons). When the baseline phase of each subject begins simultaneously, it is called a concurrent multiple baseline design. In a nonconcurrent variation, baseline periods across subjects begin at different points in time. The multiple baseline design is useful in many settings in which withdrawal of the IV would not be appropriate or when introduction of the IV is hypothesized to result in permanent change that would not reverse when the IV is withdrawn. The major drawback of this design is that the IV must be initially withheld for a period of time to ensure different starting points across the different units in the baseline phase. Depending upon the nature of the research questions, withholding an IV, such as a treatment, could be potentially detrimental to participants.
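The staggered structure can be laid out as one A/B sequence per tier. In this sketch, the function name and the minimum of three baseline sessions are assumptions (the latter echoing the 3–5 data points per phase cited earlier), and the three tiers match the replication rule used in this review's screening.

```python
def multiple_baseline_schedule(n_sessions, starts, min_baseline=3):
    """One phase sequence per tier; 'A' = baseline, 'B' = IV introduced."""
    if len(set(starts)) != len(starts):
        raise ValueError("start points must be staggered (all distinct)")
    if min(starts) < min_baseline or max(starts) >= n_sessions:
        raise ValueError("each tier needs enough baseline sessions and at least one B session")
    return [["A"] * s + ["B"] * (n_sessions - s) for s in sorted(starts)]


# Hypothetical design: 12 sessions, IV introduced at sessions 4, 7, and 10 (0-based 3, 6, 9).
for tier, phases in enumerate(multiple_baseline_schedule(12, [3, 6, 9]), start=1):
    print(f"tier {tier}: {''.join(phases)}")
# tier 1: AAABBBBBBBBB
# tier 2: AAAAAABBBBBB
# tier 3: AAAAAAAAABBB
```

Reading down the columns shows the design logic: at the fourth session only tier 1 has received the IV, so a change confined to tier 1 at that point, while tiers 2 and 3 remain stable, is hard to attribute to extraneous events that would affect all tiers.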

Reversal designs (17%)

Reversal designs are also known as introduction and withdrawal designs and are denoted as ABAB designs in their simplest form. As the name suggests, the reversal design involves collecting a baseline measure of the DV (the first A phase), introducing the IV (the first B phase), removing the IV while continuing to assess the DV (the second A phase), and then reintroducing the IV (the second B phase). This pattern can be repeated as many times as is necessary to demonstrate an effect or otherwise address the research question. Reversal designs are useful when the manipulation is hypothesized to result in changes in the DV that are expected to reverse or discontinue when the manipulation is not present. Maintenance of an effect is often necessary to uphold the findings of reversal designs. The demonstration of an effect is evident in reversal designs when improvement occurs during the first manipulation phase, compared to the first baseline phase, then reverts to or approaches original baseline levels during the second baseline phase when the manipulation has been withdrawn, and then improves again when the manipulation is reinstated. This pattern of reversal, when the manipulation is introduced and then withdrawn, is essential to attributing changes in the DV to the IV. However, demonstrating maintenance of the effects is not incompatible with a reversal design, in which the DV is hypothesized to reverse when the IV is withdrawn (Kazdin, 2010). Maintenance is demonstrated by repeating introduction–withdrawal segments until improvement in the DV becomes permanent even when the IV is withdrawn. Demonstrating maintenance is not necessary in all applications, nor is it always possible or desirable, but it is paramount in learning and intervention research contexts.
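The expected ABAB pattern (improvement, reversal, improvement) can be expressed as a comparison of phase means. This is a deliberately simplified sketch: the function name and data are hypothetical, and phase means are only one of the features a visual analyst weighs alongside trend, variability, immediacy of change, and overlap.

```python
from statistics import mean


def abab_pattern(a1, b1, a2, b2, higher_is_better=True):
    """True if phase means show improvement, reversal, then improvement again."""
    means = [mean(phase) for phase in (a1, b1, a2, b2)]
    if not higher_is_better:
        means = [-m for m in means]  # flip so that "larger" always means "better"
    improved = means[1] > means[0]    # first introduction of the IV
    reversed_ = means[2] < means[1]   # withdrawal of the IV
    reimproved = means[3] > means[2]  # reintroduction of the IV
    return improved and reversed_ and reimproved


print(abab_pattern([2, 3, 2], [6, 7, 7], [3, 3, 4], [7, 8, 7]))  # True
print(abab_pattern([2, 3, 2], [6, 7, 7], [7, 7, 8], [7, 8, 7]))  # False
```

The second call describes a DV that stays high after withdrawal: no reversal is observed, so by this criterion the effect has not been demonstrated, even though the pattern could reflect the maintenance discussed above.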

Mixed designs (10%)

Mixed designs combine more than one SCED (e.g., a reversal design embedded within a multiple baseline) or embed an SCED within a group design (e.g., a randomized controlled trial comparing two groups of multiple baseline experiments). Mixed designs afford the researcher even greater flexibility in designing a study to address complex psychological hypotheses, and they capitalize on the strengths of the various designs. See Kazdin (2010) for a discussion of the variations and utility of mixed designs.

Related Nonexperimental Designs

Quasi-experimental designs

In contrast to the designs previously described, all of which constitute “true experiments” ( Kazdin, 2010 ; Shadish et al., 2002 ), in quasi-experimental designs the conditions of a true experiment (e.g., active manipulation of the IV, replication of the effect) are approximated and are not readily under the control of the researcher. Because the focus of this article is on experimental designs, quasi-experiments are not discussed in detail; instead the reader is referred to Kazdin (2010) and Shadish et al. (2002) .

Ecological and naturalistic single-case designs

For a single-case design to be experimental, there must be active manipulation of the IV. In some applications, however, such as those in social and personality psychology, the researcher might be interested in measuring naturally occurring phenomena and examining their temporal relationships, and therefore does not manipulate an IV. An example of this type of research is a study of the temporal relationship between alcohol consumption and depressed mood, both of which can be measured reliably using EMA methods. Psychotherapy process researchers also use this type of design to assess dyadic relationship dynamics between therapists and clients (e.g., Tschacher & Ramseyer, 2009 ).
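One simple way to examine a temporal relationship without manipulation is a lagged correlation: pair each day's value of one variable with a later day's value of the other. The sketch below uses a hypothetical 8-day diary of drinks and a depressed-mood score, constructed so that mood on day t+1 equals 2 × drinks on day t + 1. The function names are illustrative, and a single lagged correlation is only a descriptive first step, not a test of causal direction.

```python
from math import sqrt

def pearson(x, y):
    """Pearson correlation of two equal-length sequences."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)

def lagged_correlation(x, y, lag):
    """Correlate x on day t with y on day t + lag (lag >= 0)."""
    if lag < 0 or lag >= len(x) - 1:
        raise ValueError("lag must leave at least two paired observations")
    return pearson(x[: len(x) - lag], y[lag:])

# Hypothetical diary data (not from any study)
drinks = [0, 3, 1, 4, 0, 2, 5, 1]
mood = [4, 1, 7, 3, 9, 1, 5, 11]
for lag in (0, 1):
    print(lag, round(lagged_correlation(drinks, mood, lag), 2))
```

Slicing with `x[: len(x) - lag]` rather than `x[:-lag]` keeps `lag = 0` from producing an empty list.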

Research Design Standards

Each of the reviewed standards provides some direction regarding acceptable research designs. The WWC provides the most detailed and specific requirements regarding design characteristics. The guidelines presented in Tables 3 , 4 , and 5 are consistent with the methodological rigor necessary to meet the WWC distinction “meets standards.” The WWC also provides less stringent standards for a “meets standards with reservations” distinction. When minimum criteria in the design, measurement, or analysis sections of a study are not met, it is rated “does not meet standards” ( Kratochwill et al., 2010 ). Many SCEDs are acceptable within the standards of DIV12, DIV16, NRP, and the Tate et al. SCED scale. DIV12 specifies that replication occur across a minimum of three successive cases. This differs from the WWC specifications, which allow the three replications to occur within a single-subject design rather than necessarily across multiple subjects. DIV16 does not require, but seems to prefer, a multiple baseline design with between-subject replication. Tate et al. state that the “design allows for the examination of cause and effect relationships to demonstrate efficacy” (2008, p. 400). Whether a design meets this requirement is left to the evaluator, who might then refer to one of the other standards or another source for direction.
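The difference between the DIV12 and WWC replication rules, as described in the paragraph above, can be encoded in two predicates. This is a deliberately narrow sketch: the `Study` fields and both functions are hypothetical, and they check only the replication rule stated in the text, not the other design, measurement, and analysis criteria in the tables. Counting each phase change in an ABAB design as one demonstration (giving three) is an assumption made for illustration.

```python
from dataclasses import dataclass

@dataclass
class Study:
    demonstrations: int   # attempts to demonstrate the effect (e.g., phase changes)
    distinct_cases: int   # number of different participants or cases

def meets_div12_replication(study: Study) -> bool:
    # Simplified reading of the text: replication across at least three cases.
    return study.distinct_cases >= 3 and study.demonstrations >= 3

def meets_wwc_replication(study: Study) -> bool:
    # Simplified reading of the text: three replications, which may all
    # occur within a single-subject design.
    return study.demonstrations >= 3

single_abab = Study(demonstrations=3, distinct_cases=1)      # A1->B1, B1->A2, A2->B2
mb_across_three = Study(demonstrations=3, distinct_cases=3)  # one A->B change per participant
for name, s in [("ABAB, one participant", single_abab),
                ("multiple baseline, three participants", mb_across_three)]:
    print(name, meets_div12_replication(s), meets_wwc_replication(s))
```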

[Tables: Research Design Standards and Guidelines; Measurement and Assessment Standards and Guidelines; Analysis Standards and Guidelines]

The Stone and Shiffman (2002) standards for EMA are concerned almost entirely with the reporting of measurement characteristics and much less with research design. One way in which these standards differ from those of other sources is that they do not call for active manipulation of the IV: many research questions in EMA, daily diary, and time-series designs concern naturally occurring phenomena, and researcher manipulation would run counter to this aim. The EMA standards become important when selecting an appropriate measurement strategy within the SCED. In EMA applications, as in some other time-series and daily diary designs, researcher manipulation is limited to the sampling schedule on which the DVs of interest are measured: a fixed time schedule (e.g., reporting occurs at the end of each day), a random time schedule (e.g., the data collection device prompts the participant to respond at random intervals throughout the day), or an event-based schedule (e.g., reporting occurs after a specified event takes place).
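The three sampling schedules can be sketched as functions that generate prompt times. The waking window (08:00 to 22:00), the number of random prompts, the event time, and the 5-minute post-event delay are all hypothetical choices. Real EMA protocols often stratify random prompts within time blocks; this sketch draws them uniformly across the whole window for simplicity.

```python
import random
from datetime import datetime, timedelta

def fixed_schedule(day_start, offsets):
    """Fixed schedule: prompts at the same offsets from the start of every day."""
    return [day_start + off for off in offsets]

def random_schedule(day_start, day_end, n_prompts, rng):
    """Random schedule: n prompts at uniformly random moments in the window, sorted."""
    span = (day_end - day_start).total_seconds()
    return sorted(day_start + timedelta(seconds=rng.uniform(0, span)) for _ in range(n_prompts))

def event_schedule(event_times, delay=timedelta(0)):
    """Event-based schedule: one report shortly after each specified event."""
    return [t + delay for t in event_times]

day = datetime(2024, 1, 1, 8, 0)                      # hypothetical waking time
fixed = fixed_schedule(day, [timedelta(hours=14)])    # end-of-day report at 22:00
rng = random.Random(42)                               # seeded for reproducibility
rand = random_schedule(day, day + timedelta(hours=14), 5, rng)
events = event_schedule([day + timedelta(hours=3, minutes=20)], delay=timedelta(minutes=5))
for label, times in [("fixed", fixed), ("random", rand), ("event", events)]:
    print(label, [t.strftime("%H:%M") for t in times])
```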

Measurement

The basic measurement requirement of the SCED is repeated assessment of the DV across each phase of the design, so that valid inferences can be drawn regarding the effect of the IV on the DV. In other applications, such as those used by personality and social psychology researchers to study various human phenomena ( Bolger et al., 2003 ; Reis & Gable, 2000 ), sampling strategies vary widely depending on the topic under investigation. Regardless of the research area, SCEDs are most typically concerned with within-person change and processes and involve a time-based strategy, most commonly to assess global daily averages or peak daily levels of the DV. Other sampling strategies, such as time-series reporting at uniform intervals or reporting on event-based, fixed, or variable schedules, are also appropriate measurement methods and are common in psychological research (see Bolger et al., 2003 ).
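The two most common daily summaries named above, the global daily average and the peak daily level, can be computed from intraday reports as follows. The ratings and dates are hypothetical, and `daily_summaries` is an illustrative name.

```python
from collections import defaultdict
from datetime import date

def daily_summaries(reports):
    """reports: iterable of (date, rating). Returns {date: (daily mean, daily peak)}."""
    by_day = defaultdict(list)
    for day, rating in reports:
        by_day[day].append(rating)
    return {day: (sum(v) / len(v), max(v)) for day, v in sorted(by_day.items())}

# Hypothetical 0-10 ratings collected several times per day
reports = [
    (date(2024, 3, 1), 2), (date(2024, 3, 1), 6), (date(2024, 3, 1), 4),
    (date(2024, 3, 2), 3), (date(2024, 3, 2), 3),
]
for day, (avg, peak) in daily_summaries(reports).items():
    print(day, avg, peak)
```

Either summary then becomes the single repeated DV value per day that the phase comparisons operate on.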

Repeated-measurement methods permit the natural, even spontaneous, reporting of information ( Reis, 1994 ), which reduces the biases of retrospection by minimizing the time elapsed between an experience and the account of that experience ( Bolger et al., 2003 ). Shiffman et al. (2008) aptly noted that most research in psychology relies heavily on retrospective assessment measures, even though retrospective reports have been found to be susceptible to state-congruent recall (e.g., Bower, 1981 ) and to a tendency to report peak levels of an experience rather than its temporal fluctuations ( Redelmeier & Kahneman, 1996 ; Stone, Broderick, Kaell, Deles-Paul, & Porter, 2000 ). Furthermore, Shiffman et al. (1997) demonstrated that subjective aggregate accounts were a poor fit to daily reported experiences, a discrepancy that can be attributed to the reduced measurement error, and hence greater validity and reliability, of the daily reports.
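To show why peak-weighted recall can diverge from momentary data, the sketch below compares the mean of momentary ratings with a stylized "peak-end" summary (the average of the most intense and the final rating), a heuristic associated with Redelmeier and Kahneman's (1996) work. Treating peak-end as the model of retrospective report is an assumption for illustration. The two days of pain ratings are hypothetical.

```python
def momentary_mean(ratings):
    """Average of momentary (EMA-style) ratings across the day."""
    return sum(ratings) / len(ratings)

def peak_end_summary(ratings):
    """Stylized retrospective report: mean of the peak and the final rating."""
    return (max(ratings) + ratings[-1]) / 2

day_a = [2, 2, 2, 9, 2]   # mostly mild, one brief spike
day_b = [5, 5, 5, 5, 5]   # steady moderate level
for name, r in [("day A", day_a), ("day B", day_b)]:
    print(name, momentary_mean(r), peak_end_summary(r))
```

Here day A has the lower momentary mean but the higher peak-end summary. Recall weighted toward the peak would rank the days the opposite way from the repeated momentary data.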

The necessity of measuring at least one DV repeatedly means that the selected assessment method, instrument, and/or construct must be sensitive to change over time and capable of capturing that change reliably and validly. Horner et al. (2005) discuss the important features of outcome measures selected for use in these types of designs. Kazdin (2010) suggests that measures be dimensional, because dimensional measures detect effects more readily than categorical and binary measures. An established measure or scale, such as the Outcome Questionnaire System ( M. J. Lambert, Hansen, & Harmon, 2010 ), provides empirically validated items for assessing various outcomes. However, most validation studies of this type of instrument use between-subject designs, which does not guarantee that these measures are reliable and valid for assessing within-person variability. Borsboom, Mellenbergh, and van Heerden (2003) suggest that researchers adapting validated measures consider whether the items they propose to use have a factor structure within subjects similar to that obtained between subjects. This is one reason that SCEDs often use observational assessments from multiple sources and report the interrater reliability of the measure. Self-report measures are acceptable practice in some circles, but additional assessment methods or informants are generally necessary to uphold the highest methodological standards. The results of this review indicate that the majority of studies include observational measurement (76.0%). Within those studies, nearly all (97.1%) reported interrater reliability procedures and results. The results within each design were similar, with the exception of time-series designs, which used observer ratings in only half of the reviewed studies.
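Interrater reliability for observational measures is often reported as percent agreement, sometimes alongside Cohen's kappa, which corrects agreement for chance. The article does not specify which index the reviewed studies used, so the sketch below only shows how the two differ. The interval-recording data (1 = target behavior observed in the interval) from two observers are hypothetical.

```python
from collections import Counter

def percent_agreement(r1, r2):
    """Proportion of intervals on which two observers recorded the same code."""
    return sum(a == b for a, b in zip(r1, r2)) / len(r1)

def cohens_kappa(r1, r2):
    """Chance-corrected agreement between two raters' categorical codes."""
    n = len(r1)
    p_o = percent_agreement(r1, r2)
    c1, c2 = Counter(r1), Counter(r2)
    # Expected agreement if each observer coded independently at their own base rates
    p_e = sum(c1[k] * c2[k] for k in set(r1) | set(r2)) / n ** 2
    if p_e == 1:
        raise ValueError("kappa is undefined when expected agreement is 1")
    return (p_o - p_e) / (1 - p_e)

obs1 = [1, 1, 0, 0, 1, 0, 1, 1, 0, 0]
obs2 = [1, 1, 0, 1, 1, 0, 1, 0, 0, 0]
print(percent_agreement(obs1, obs2), round(cohens_kappa(obs1, obs2), 2))
```

With balanced base rates, half of all agreement is expected by chance, so 80% raw agreement corresponds to a considerably lower kappa.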

Time-series

Time-series designs are defined by repeated measurement of variables of interest over a period of time ( Box & Jenkins, 1970 ). Time-series measurement most often occurs at uniform intervals; however, this is no longer a constraint of time-series designs (see Harvey, 2001 ). Although uniform-interval reporting is not necessary in SCED research, repeated measures often occur at uniform intervals, such as once each day or each week, which constitutes a time-series design. The time-series design has been used in various basic science applications ( Scollon, Kim-Prieto, & Diener, 2003 ) across nearly all subspecialties in psychology (e.g., Bolger et al., 2003 ; Piasecki et al., 2007 ; for a review, see Reis & Gable, 2000 ; Soliday et al., 2002 ). The basic time-series formula for a two-phase (AB) data stream is presented in Equation 1 . In this formula, $\alpha$ represents the step function of the data stream; $S$ represents the change between the first and second phases, which is also the intercept in a two-phase data stream, and is a step function equal to 0 at times $i = 1, 2, 3, \ldots, n_1$ and 1 at times $i = n_1 + 1, n_1 + 2, n_1 + 3, \ldots, n$; $n_1$ is the number of observations in the baseline phase; $n$ is the total number of data points in the data stream; $i$ represents time; and $\varepsilon_i = \rho\varepsilon_{i-1} + e_i$ indicates the relationship between the autoregressive function ($\rho$) and the distribution of the data in the stream.

Time-series formulas become increasingly complex when seasonality and autoregressive processes are modeled in the analytic procedures, but these are rarely of concern for short time-series data streams in SCEDs. For a detailed description of other time-series design and analysis issues, see Borckardt et al. (2008) , Box and Jenkins (1970) , Crosbie (1993) , R. R. Jones et al. (1977) , and Velicer and Fava (2003) .

Time-series and other repeated-measures methodologies also enable examination of temporal effects. Borckardt et al. (2008) and others have noted that time-series designs have the potential to reveal how change occurs, not simply whether it occurs. This distinction is what most interested Skinner (1938) , but it often falls outside the purview of today’s researchers in favor of group designs, which Skinner felt obscured the process of change. In intervention and psychopathology research, time-series designs can assess mediators of change ( Doss & Atkins, 2006 ), treatment processes ( Stout, 2007 ; Tschacher & Ramseyer, 2009 ), and the relationship between psychological symptoms (e.g., Alloy, Just, & Panzarella, 1997 ; Hanson & Chen, 2010 ; Oslin, Cary, Slaymaker, Colleran, & Blow, 2009 ), and might be capable of revealing mechanisms of change ( Kazdin, 2007 , 2009 , 2010 ). Between- and within-subject SCEDs with repeated measurements enable researchers to examine similarities and differences in the course of change, both during and as a result of manipulating an IV. Temporal effects have been largely overlooked in many areas of psychological science ( Bolger et al., 2003 ); examining temporal relationships is sorely needed to further our understanding of the etiology and amplification of numerous psychological phenomena.
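One way to ask how change occurs, rather than only whether it occurs, is to separate an immediate shift in level from a gradual change in slope after the phase boundary. The sketch below uses segmented regression, a standard interrupted time-series technique that the article does not name and that is swapped in here for illustration. `segmented_fit` is a hypothetical helper, time is 0-based, and `n1` is the number of baseline observations, as in the text. The two example streams are constructed to show the contrast.

```python
import numpy as np

def segmented_fit(y, n1):
    """Fit y_t = b0 + b1*t + b2*S_t + b3*(t - n1)*S_t by least squares.

    b2 is the immediate level change at the phase boundary; b3 is the change in slope.
    """
    y = np.asarray(y, dtype=float)
    t = np.arange(len(y), dtype=float)
    s = (t >= n1).astype(float)                    # step indicator: 0 baseline, 1 after
    X = np.column_stack([np.ones_like(t), t, s, (t - n1) * s])
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    names = ["intercept", "baseline_slope", "level_change", "slope_change"]
    return dict(zip(names, coef))

abrupt = segmented_fit([1] * 5 + [4] * 5, n1=5)             # sudden jump, no new trend
gradual = segmented_fit([1] * 5 + [1, 2, 3, 4, 5], n1=5)    # no jump, steady climb
for name, fit in [("abrupt", abrupt), ("gradual", gradual)]:
    print(name, {k: round(float(v), 2) for k, v in fit.items()})
```

Both streams end well above baseline, but the level-change and slope-change coefficients distinguish a sudden shift from a gradual one, which a simple pre/post mean comparison would not.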

Time-series studies were rare in this literature search (2%). Time-series studies traditionally occur in subfields of psychology in which single-case research is not often used (e.g., personality, physiological/biological). Recent advances in methods for collecting and analyzing time-series data (e.g., Borckardt et al., 2008 ) could expand the use of time-series methodology in the SCED community. One problem with drawing firm conclusions from this particular finding is semantic: time-series is a specific term reserved for measurement occurring at a uniform interval, but SCED research does not yet appear to have adopted this language when referring to data collected in this fashion. When time-series analytic methods are not used, the measurement interval matters less and might not need to be specified or described as a time-series. An interesting extension of this work would be to examine SCED research that used time-series measurement strategies but did not label them as such. Such an examination would indicate how many SCEDs could be analyzed with time-series statistical methods.
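The extension proposed above would require deciding whether a study's measurements were taken at a uniform interval. A minimal check over observation timestamps might look like the following. The function name, the optional tolerance for small timing slippage, and the example timestamps are all hypothetical. Gaps are compared with the first gap for simplicity.

```python
from datetime import datetime, timedelta

def is_uniform_interval(timestamps, tolerance=timedelta(0)):
    """True if consecutive observations are equally spaced (within tolerance)."""
    if len(timestamps) < 2:
        raise ValueError("need at least two observations")
    gaps = [b - a for a, b in zip(timestamps, timestamps[1:])]
    if any(g <= timedelta(0) for g in gaps):
        raise ValueError("timestamps must be strictly increasing")
    first = gaps[0]
    return all(abs(g - first) <= tolerance for g in gaps)

start = datetime(2024, 5, 1, 21, 0)
daily = [start + timedelta(days=k) for k in range(7)]          # every evening at 21:00
jittered = list(daily)
jittered[3] = jittered[3] + timedelta(minutes=30)              # one report 30 min late
skipped = daily[:3] + daily[4:]                                # one missed day
print(is_uniform_interval(daily),
      is_uniform_interval(jittered),
      is_uniform_interval(jittered, tolerance=timedelta(hours=1)),
      is_uniform_interval(skipped, tolerance=timedelta(hours=1)))
```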

Daily diary and ecological momentary assessment methods