4.2 Definitions and Characteristics of Qualitative Research

Qualitative research aims to uncover the meaning of, and build understanding about, phenomena that cannot be broken down into measurable elements. It is based on naturalistic, interpretative and humanistic notions. 5 This research method seeks to discover, explore, identify or describe subjective human experiences using non-statistical methods and develops themes from the study participants’ stories. 5 Figure 4.1 depicts the major characteristics of qualitative research. It utilises exploratory open-ended questions and observations to search for patterns of meaning in collected data (e.g. observations, verbal or written narrative data, photographs) and uses inductive thinking (from specific observations to more general rules) to interpret meaning. 6 Participants’ voices are evident through quotations and descriptions of the work. 6 The context or setting of the study and the researcher’s reflexivity (i.e. “reflection on and awareness of their bias”, the effect of the researcher’s experience on the data and interpretations) are very important and are described as part of data collection. 6 Analysis of collected data is complex, often involves inductive data analysis (exploration, contrasts, specific to general) and requires multiple coding and the development of themes from participant stories. 6

Reflexivity: avoiding bias and the role of the qualitative researcher

Qualitative researchers generally begin their work with the recognition that their position (or worldview) has a significant impact on the overall research process. 7 Researcher worldview shapes the way the research is conducted, i.e., how the questions are formulated, methods are chosen, data are collected and analysed, and results are reported. Therefore, it is essential for qualitative researchers to acknowledge, articulate, reflect on and clarify their own underlying biases and assumptions before embarking on any research project. 7 Reflexivity helps to ensure that the researcher’s own experiences, values, and beliefs do not unintentionally bias the data collection, analysis, and interpretation. 7 It is the gold standard for establishing trustworthiness and has been established as one of the ways qualitative researchers should ensure rigour and quality in their work. 8 The following questions in Table 4.1 may help you begin the reflective process. 9

Table 4.1: Questions to aid the reflection process

| Question | What to consider |
| --- | --- |
| What piques my interest in this subject? | You need to consider what motivates your excitement, energy, and interest in investigating this topic to answer this question. |
| What exactly do I believe the solution is? | Asking this question allows you to detect any biases by honestly reflecting on what you anticipate finding. The assumptions can be grouped/classified to allow the participants’ opinions to be heard. |
| What exactly am I getting out of this? | In many circumstances, the “pressure to publish” reduces research to nothing more than a job necessity. What effect does this have on your interest in the subject and its results? To what extent are you willing to go to find information? |
| What do my colleagues think of this project—and me? | You will not work in a vacuum as a researcher; you will be part of a social and interpersonal world. These outside factors will impact your perceptions of yourself and your job. Recognising this impact and its possible implications on human behaviour will allow for more self-reflection during the study process. |

Philosophical underpinnings to qualitative research

Qualitative research uses an inductive approach and stems from interpretivism or constructivism and assumes that realities are multiple, socially constructed, and holistic. 10 According to this philosophical viewpoint, humans build reality through their interactions with the world around them. 10 As a result, qualitative research aims to comprehend how individuals make sense of their experiences and build meaning in their lives. 10 Because reality is complex/nuanced and context-bound, participants constantly construct it depending on their understanding. Thus, the interactions between the researcher and the participants are considered necessary to offer a rich description of the concept and provide an in-depth understanding of the phenomenon under investigation. 11

An Introduction to Research Methods for Undergraduate Health Profession Students Copyright © 2023 by Faith Alele and Bunmi Malau-Aduli is licensed under a Creative Commons Attribution-NonCommercial 4.0 International License, except where otherwise noted.

Criteria for Good Qualitative Research: A Comprehensive Review

- Regular Article

- Open access

- Published: 18 September 2021

- Volume 31, pages 679–689 (2022)

- Drishti Yadav (ORCID: orcid.org/0000-0002-2974-0323)

This review aims to synthesize a published set of evaluative criteria for good qualitative research. The aim is to shed light on existing standards for assessing the rigor of qualitative research encompassing a range of epistemological and ontological standpoints. Using a systematic search strategy, published journal articles that deliberate criteria for rigorous research were identified. Then, references of relevant articles were surveyed to find noteworthy, distinct, and well-defined pointers to good qualitative research. This review presents an investigative assessment of the pivotal features in qualitative research that can permit the readers to pass judgment on its quality and to judge it as good research when objectively and adequately utilized. Overall, this review underlines the crux of qualitative research and accentuates the necessity to evaluate such research by the very tenets of its being. It also offers some prospects and recommendations to improve the quality of qualitative research. Based on the findings of this review, it is concluded that quality criteria are the product of socio-institutional practices and existing paradigmatic standpoints. Owing to the paradigmatic diversity of qualitative research, a single and specific set of quality criteria is neither feasible nor desirable. Since qualitative research is not a cohesive discipline, researchers need to educate and familiarize themselves with applicable norms and decisive factors to evaluate qualitative research from within its theoretical and methodological framework of origin.

Introduction

“… It is important to regularly dialogue about what makes for good qualitative research” (Tracy, 2010, p. 837)

What constitutes good qualitative research is highly debatable. Qualitative research encompasses numerous methods that rest on diverse philosophical perspectives. Bryman et al. (2008, p. 262) suggest that “It is widely assumed that whereas quality criteria for quantitative research are well‐known and widely agreed, this is not the case for qualitative research.” Hence, the question of how to evaluate the quality of qualitative research has been continuously debated. These debates on the assessment of qualitative research have taken place in many areas of science and technology. Examples include various areas of psychology: general psychology (Madill et al., 2000); counseling psychology (Morrow, 2005); and clinical psychology (Barker & Pistrang, 2005), and other disciplines of social sciences: social policy (Bryman et al., 2008); health research (Sparkes, 2001); business and management research (Johnson et al., 2006); information systems (Klein & Myers, 1999); and environmental studies (Reid & Gough, 2000). In the literature, these debates are driven by the view that the blanket application of criteria developed around the positivist paradigm to qualitative research is improper. Such debates are rooted in the wide range of philosophical backgrounds within which qualitative research is conducted (e.g., Sandberg, 2000; Schwandt, 1996). This methodological diversity has led to the formulation of different sets of criteria applicable to qualitative research.

Among qualitative researchers, the dilemma of deciding which measures to use to assess the quality of research is not new, especially when the virtuous triad of objectivity, reliability, and validity (Spencer et al., 2004) is not adequate. Occasionally, the criteria of quantitative research are used to evaluate qualitative research (Cohen & Crabtree, 2008; Lather, 2004). Indeed, Howe (2004) claims that the prevailing paradigm in educational research is scientifically based experimental research. Assumptions about the preeminence of quantitative research can weaken the worth and usefulness of qualitative research by neglecting the importance of fitness for purpose, that is, the match between the research paradigm, the epistemological stance of the researcher, and the choice of methodology. Researchers have been cautioned about this in “paradigmatic controversies, contradictions, and emerging confluences” (Lincoln & Guba, 2000).

In general, qualitative research tends to come from a very different paradigmatic stance and therefore demands distinctive criteria for evaluating good research and the varieties of research contributions that can be made. This review attempts to present a series of evaluative criteria for qualitative researchers, arguing that their choice of criteria needs to be compatible with the unique nature of the research in question (its methodology, aims, and assumptions). This review aims to assist researchers in identifying some of the indispensable features or markers of high-quality qualitative research. In a nutshell, the purpose of this systematic literature review is to analyze the existing knowledge on high-quality qualitative research and to verify the existence of research studies dealing with the critical assessment of qualitative research based on the concept of diverse paradigmatic stances. In contrast to existing reviews, this review also suggests some critical directions to follow to improve the quality of qualitative research in different epistemological and ontological perspectives. This review is also intended to provide guidelines to accelerate future developments and dialogues among qualitative researchers in the context of assessing qualitative research.

The rest of this review article is structured as follows. The Methods section describes the method followed for performing this review. The section Criteria for Evaluating Qualitative Studies provides a comprehensive description of the criteria for evaluating qualitative studies. It is followed by a summary of the strategies to improve the quality of qualitative research in the section Improving Quality: Strategies. The section How to Assess the Quality of the Research Findings? provides details on how to assess the quality of the research findings. After that, some of the quality checklists (as tools to evaluate quality) are discussed in the section Quality Checklists: Tools for Assessing the Quality. Finally, the review ends with concluding remarks in the section Conclusions, Future Directions, and Outlook, which also presents some prospects for enhancing the quality and usefulness of qualitative research in the social and techno-scientific research community.

Methods

For this review, a comprehensive literature search was performed across several databases using generic search terms such as Qualitative Research, Criteria, etc. The following databases were chosen for the literature search based on the high number of results: IEEE Xplore, ScienceDirect, PubMed, Google Scholar, and Web of Science. The following keywords (and their combinations using the Boolean connectives OR/AND) were adopted for the literature search: qualitative research, criteria, quality, assessment, and validity. The synonyms for these keywords were collected and arranged in a logical structure (see Table 1). All publications in journals and conference proceedings published after 1950 and up to 2021 were considered for the search. Other articles extracted from the references of the papers identified in the electronic search were also included. A large number of publications on qualitative research were retrieved during the initial screening. Hence, to restrict the results to works focused on criteria for good qualitative research, an inclusion criterion was added to the search string.

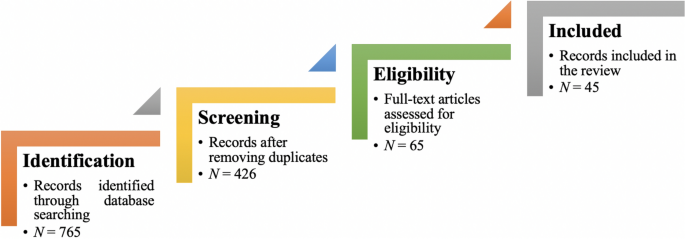
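To make the keyword strategy concrete, the following is a minimal Python sketch of how synonyms could be combined with OR within a concept and with AND across concepts. The grouping into three concepts and every synonym shown are hypothetical placeholders chosen for illustration; they are not the review's actual Table 1 entries, and the exact query syntax differs between databases.

```python
# Keywords named in the review, grouped into concepts.
# ASSUMPTION: the grouping and the extra synonyms ("qualitative study",
# "standards") are illustrative only, not the review's Table 1.
concept_groups = {
    "method": ["qualitative research", "qualitative study"],
    "criteria": ["criteria", "standards"],
    "quality": ["quality", "assessment", "validity"],
}


def build_query(groups):
    """OR the terms inside each concept group, then AND the groups together."""
    clauses = []
    for terms in groups.values():
        quoted = [f'"{term}"' for term in terms]
        clauses.append("(" + " OR ".join(quoted) + ")")
    return " AND ".join(clauses)


query = build_query(concept_groups)
print(query)
```

A string built this way would still need adapting to each database's own field tags and operator rules before use.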

From the selected databases, the search retrieved a total of 765 publications. Then, the duplicate records were removed. After that, based on the title and abstract, the remaining 426 publications were screened for their relevance by using the following inclusion and exclusion criteria (see Table 2 ). Publications focusing on evaluation criteria for good qualitative research were included, whereas those works which delivered theoretical concepts on qualitative research were excluded. Based on the screening and eligibility, 45 research articles were identified that offered explicit criteria for evaluating the quality of qualitative research and were found to be relevant to this review.

Figure 1 illustrates the complete review process in the form of a PRISMA flow diagram. PRISMA, i.e., “preferred reporting items for systematic reviews and meta-analyses”, is employed in systematic reviews to improve the quality of reporting.

Figure 1: PRISMA flow diagram illustrating the search and inclusion process. N represents the number of records
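The record counts reported in the screening description can be checked with simple arithmetic. The sketch below uses only the three numbers stated in the text (765 retrieved, 426 screened, 45 included). The two derived figures are not stated in the text; they assume that the 426 screened records are exactly what remained of the 765 after duplicate removal, with no records added from reference lists at that stage.

```python
# Counts stated in the text.
records_retrieved = 765
records_screened = 426   # remaining after duplicate removal
studies_included = 45

# Derived by subtraction. ASSUMPTION: no records were added between
# retrieval and screening; neither figure is reported in the text.
duplicates_removed = records_retrieved - records_screened
# Combines exclusions at title/abstract screening and at eligibility.
records_excluded = records_screened - studies_included

flow = [
    ("Records retrieved from databases", records_retrieved),
    ("Duplicates removed (derived)", duplicates_removed),
    ("Records screened on title and abstract", records_screened),
    ("Records excluded (derived)", records_excluded),
    ("Articles included in the review", studies_included),
]

for label, n in flow:
    print(f"{label}: N = {n}")
```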

Criteria for Evaluating Qualitative Studies

Fundamental Criteria: General Research Quality

Various researchers have put forward criteria for evaluating qualitative research, which have been summarized in Table 3. In addition, the criteria outlined in Table 4 present various approaches to evaluating and assessing the quality of qualitative work. The entries in Table 4 are based on Tracy’s “Eight big‐tent criteria for excellent qualitative research” (Tracy, 2010). Tracy argues that high-quality qualitative work should formulate criteria focusing on the worthiness, relevance, timeliness, significance, morality, and practicality of the research topic, and the ethical stance of the research itself. Researchers have also suggested a series of questions as guiding principles to assess the quality of a qualitative study (Mays & Pope, 2020). Nassaji (2020) argues that good qualitative research should be robust, well informed, and thoroughly documented.

Qualitative Research: Interpretive Paradigms

All qualitative researchers follow highly abstract principles which bring together beliefs about ontology, epistemology, and methodology. These beliefs govern how the researcher perceives and acts. The net, which encompasses the researcher’s epistemological, ontological, and methodological premises, is referred to as a paradigm, or an interpretive structure, a “Basic set of beliefs that guides action” (Guba, 1990). Four major interpretive paradigms structure qualitative research: positivist and postpositivist, constructivist interpretive, critical (Marxist, emancipatory), and feminist poststructural. The complexity of these four abstract paradigms increases at the level of concrete, specific interpretive communities. Table 5 presents these paradigms and their assumptions, including their criteria for evaluating research, and the typical form that an interpretive or theoretical statement assumes in each paradigm. Moreover, quantitative conceptualizations of reliability and validity have been argued to be incompatible with the evaluation of qualitative research (Horsburgh, 2003). In addition, a series of questions has been put forward in the literature to assist a reviewer (who is proficient in qualitative methods) in the meticulous assessment and endorsement of qualitative research (Morse, 2003). Hammersley (2007) also suggests that guiding principles for qualitative research are advantageous, but methodological pluralism should not be simply acknowledged for all qualitative approaches. Seale (1999) also points out the significance of methodological awareness in research studies.

Table 5 reflects that criteria for assessing the quality of qualitative research are the aftermath of socio-institutional practices and existing paradigmatic standpoints. Owing to the paradigmatic diversity of qualitative research, a single set of quality criteria is neither possible nor desirable. Hence, the researchers must be reflexive about the criteria they use in the various roles they play within their research community.

Improving Quality: Strategies

Another critical question is “How can qualitative researchers ensure that the abovementioned quality criteria are met?” Lincoln and Guba (1986) delineated several strategies to strengthen each criterion of trustworthiness. Other researchers (Merriam & Tisdell, 2016; Shenton, 2004) have also presented such strategies. A brief description of these strategies is shown in Table 6.

It is worth mentioning that generalizability is also an integral part of qualitative research (Hays & McKibben, 2021 ). In general, the guiding principle pertaining to generalizability speaks about inducing and comprehending knowledge to synthesize interpretive components of an underlying context. Table 7 summarizes the main metasynthesis steps required to ascertain generalizability in qualitative research.

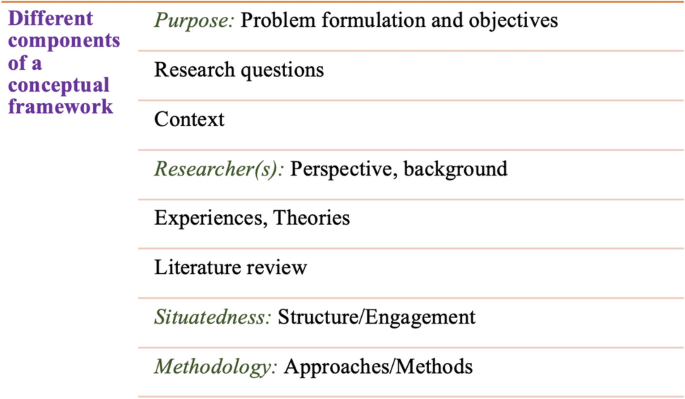

Figure 2 reflects the crucial components of a conceptual framework and their contribution to decisions regarding research design, implementation, and applications of results to future thinking, study, and practice (Johnson et al., 2020). The synergy and interrelationship of these components signify their role in different stances of a qualitative research study.

Figure 2: Essential elements of a conceptual framework

In a nutshell, to assess the rationale of a study, its conceptual framework and research question(s), quality criteria must take account of the following: lucid context for the problem statement in the introduction; well-articulated research problems and questions; precise conceptual framework; distinct research purpose; and clear presentation and investigation of the paradigms. Meeting these criteria would enhance the quality of qualitative research.

How to Assess the Quality of the Research Findings?

The inclusion of quotes or similar research data in the write-up of the findings enhances confirmability. Expressions such as “80% of all respondents agreed that” or “only one of the interviewees mentioned that” may also be used to quantify qualitative findings (Stenfors et al., 2020). On the other hand, a persuasive argument for “why this may not help in intensifying the research” has also been made (Monrouxe & Rees, 2020). Further, the Discussion and Conclusion sections of an article also serve as robust markers of high-quality qualitative research, as elucidated in Table 8.
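As an illustration of the kind of quantification mentioned above, the following minimal Python sketch counts how many participants were assigned each code and expresses it as a share of all participants. The participant IDs, code names, and data are entirely hypothetical, and, as the paragraph above notes, whether such counts actually strengthen a qualitative account is itself debated.

```python
from collections import Counter

# HYPOTHETICAL coded interview data: participant ID -> set of codes assigned.
coded_interviews = {
    "P1": {"workload", "peer support"},
    "P2": {"workload"},
    "P3": {"workload", "autonomy"},
    "P4": {"peer support", "workload"},
    "P5": {"autonomy"},
}


def code_prevalence(coded):
    """Return {code: (n_participants, share)}, counting each participant once per code."""
    counts = Counter(code for codes in coded.values() for code in codes)
    total = len(coded)
    return {code: (n, n / total) for code, n in counts.items()}


prevalence = code_prevalence(coded_interviews)
for code, (n, share) in sorted(prevalence.items()):
    print(f"{code}: {n} of {len(coded_interviews)} participants ({share:.0%})")
```

Because each participant's codes are stored as a set, a code mentioned several times by one person is still counted once, which matches statements of the form "X% of respondents mentioned".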

Quality Checklists: Tools for Assessing the Quality

Numerous checklists are available to speed up the assessment of the quality of qualitative research. However, if used uncritically and without regard to the research context, these checklists may be counterproductive. I suggest that such lists and guiding principles can assist in pinpointing the markers of high-quality qualitative research. However, considering the enormous variations in the authors’ theoretical and philosophical contexts, I would emphasize that heavy reliance on such checklists may say little about whether the findings can be applied in your setting. A combination of such checklists might be appropriate for novice researchers. Some of these checklists are listed below:

- The most commonly used framework is Consolidated Criteria for Reporting Qualitative Research (COREQ) (Tong et al., 2007). This framework is recommended by some journals to be followed by the authors during article submission.

- Standards for Reporting Qualitative Research (SRQR) is another checklist that has been created particularly for medical education (O’Brien et al., 2014).

- Also, Tracy (2010) and Critical Appraisal Skills Programme (CASP, 2021) offer criteria for qualitative research relevant across methods and approaches.

- Further, researchers have also outlined different criteria as hallmarks of high-quality qualitative research. For instance, the “Road Trip Checklist” (Epp & Otnes, 2021) provides a quick reference to specific questions to address different elements of high-quality qualitative research.

Conclusions, Future Directions, and Outlook

This work presents a broad review of the criteria for good qualitative research. In addition, this article presents an exploratory analysis of the essential elements in qualitative research that can enable the readers of qualitative work to judge it as good research when objectively and adequately utilized. In this review, some of the essential markers that indicate high-quality qualitative research have been highlighted. I scope them narrowly to achieve rigor in qualitative research and note that they do not completely cover the broader considerations necessary for high-quality research. This review points out that a universal, one-size-fits-all guideline for evaluating the quality of qualitative research does not exist. In other words, there is no set of common guidelines shared among qualitative researchers. At the same time, this review reinforces that each qualitative approach should be treated uniquely on account of its own distinctive features for different epistemological and disciplinary positions. Because the worth of qualitative research is sensitive to the specific context and the type of paradigmatic stance, researchers should themselves analyze what approaches can and must be tailored to suit the distinct characteristics of the phenomenon under investigation. Although this article does not claim to put forward a magic bullet or to provide a one-stop solution for dealing with dilemmas about how, why, or whether to evaluate the “goodness” of qualitative research, it offers a platform to assist researchers in improving their qualitative studies. This work provides an assembly of concerns to reflect on, a series of questions to ask, and multiple sets of criteria to look at, when attempting to determine the quality of qualitative research. Overall, this review underlines the crux of qualitative research and accentuates the need to evaluate such research by the very tenets of its being.
Bringing together the vital arguments and delineating the requirements that good qualitative research should satisfy, this review strives to equip researchers as well as reviewers to make well-informed judgments about the worth and significance of the qualitative research under scrutiny. In a nutshell, a comprehensive portrayal of the research process (from the context of research to the research objectives, research questions and design, theoretical foundations, and from approaches of collecting data to analyzing the results, to deriving inferences) often enhances the quality of qualitative research.

Prospects: A Road Ahead for Qualitative Research

Irrefutably, qualitative research is a vibrant and evolving discipline wherein different epistemological and disciplinary positions have their own characteristics and importance. In addition, not surprisingly, owing to the emerging and varied features of qualitative research, no consensus has been reached to date. Researchers have raised various concerns and proposed several recommendations for editors and reviewers on conducting reviews of critical qualitative research (Levitt et al., 2021; McGinley et al., 2021). The following are some prospects and recommendations put forward towards the maturation of qualitative research and its quality evaluation:

- In general, most manuscript and grant reviewers are not qualitative experts. Hence, they are more likely to prefer a broad set of criteria. However, researchers and reviewers need to keep in mind that it is inappropriate to apply the same approaches and standards to all qualitative research. Therefore, future work needs to focus on educating researchers and reviewers about the criteria to evaluate qualitative research from within the suitable theoretical and methodological context.

- There is an urgent need to revisit and strengthen the critical assessment of some well-known and widely accepted tools (including checklists such as COREQ and SRQR) to interrogate their applicability on different aspects (along with their epistemological ramifications).

- Efforts should be made towards creating more space for creativity, experimentation, and a dialogue between the diverse traditions of qualitative research. This would potentially help to avoid the enforcement of one's own set of quality criteria on the work carried out by others.

- Moreover, journal reviewers need to be aware of various methodological practices and philosophical debates.

- It is pivotal to highlight the expressions and considerations of qualitative researchers and bring them into a more open and transparent dialogue about assessing qualitative research in techno-scientific, academic, sociocultural, and political arenas.

- Frequent debates on the use of evaluative criteria are required to solve some potentially unresolved issues (including the applicability of a single set of criteria in multi-disciplinary aspects). Such debates would not only benefit the group of qualitative researchers themselves, but also, and primarily, assist in augmenting the well-being and vitality of the entire discipline.

To conclude, I speculate that the criteria, and my perspective, may transfer to other methods, approaches, and contexts. I hope that they spark dialog and debate – about criteria for excellent qualitative research and the underpinnings of the discipline more broadly – and, therefore, help improve the quality of a qualitative study. Further, I anticipate that this review will assist researchers in reflecting on the quality of their own research and substantiating their research design, and will help reviewers to review qualitative research for journals. On a final note, I highlight the need to formulate a framework (encompassing the prerequisites of a qualitative study) through the joint efforts of qualitative researchers of different disciplines with different theoretic-paradigmatic origins. I believe that tailoring such a framework (of guiding principles) would pave the way for qualitative researchers to consolidate the status of qualitative research in the wide-ranging open science debate. Dialogue on this issue across different approaches is crucial for the future prospects of socio-techno-educational research.

Amin, M. E. K., Nørgaard, L. S., Cavaco, A. M., Witry, M. J., Hillman, L., Cernasev, A., & Desselle, S. P. (2020). Establishing trustworthiness and authenticity in qualitative pharmacy research. Research in Social and Administrative Pharmacy, 16 (10), 1472–1482.

Barker, C., & Pistrang, N. (2005). Quality criteria under methodological pluralism: Implications for conducting and evaluating research. American Journal of Community Psychology, 35 (3–4), 201–212.

Bryman, A., Becker, S., & Sempik, J. (2008). Quality criteria for quantitative, qualitative and mixed methods research: A view from social policy. International Journal of Social Research Methodology, 11 (4), 261–276.

Caelli, K., Ray, L., & Mill, J. (2003). ‘Clear as mud’: Toward greater clarity in generic qualitative research. International Journal of Qualitative Methods, 2 (2), 1–13.

CASP (2021). CASP checklists. Retrieved May 2021 from https://casp-uk.net/casp-tools-checklists/

Cohen, D. J., & Crabtree, B. F. (2008). Evaluative criteria for qualitative research in health care: Controversies and recommendations. The Annals of Family Medicine, 6 (4), 331–339.

Denzin, N. K., & Lincoln, Y. S. (2005). Introduction: The discipline and practice of qualitative research. In N. K. Denzin & Y. S. Lincoln (Eds.), The sage handbook of qualitative research (pp. 1–32). Sage Publications Ltd.

Elliott, R., Fischer, C. T., & Rennie, D. L. (1999). Evolving guidelines for publication of qualitative research studies in psychology and related fields. British Journal of Clinical Psychology, 38 (3), 215–229.

Epp, A. M., & Otnes, C. C. (2021). High-quality qualitative research: Getting into gear. Journal of Service Research. https://doi.org/10.1177/1094670520961445

Guba, E. G. (1990). The paradigm dialog. In Alternative Paradigms Conference, Mar 1989, Indiana U, School of Education, San Francisco, CA, US. Sage Publications, Inc.

Hammersley, M. (2007). The issue of quality in qualitative research. International Journal of Research and Method in Education, 30 (3), 287–305.

Haven, T. L., Errington, T. M., Gleditsch, K. S., van Grootel, L., Jacobs, A. M., Kern, F. G., & Mokkink, L. B. (2020). Preregistering qualitative research: A Delphi study. International Journal of Qualitative Methods, 19 , 1609406920976417.

Hays, D. G., & McKibben, W. B. (2021). Promoting rigorous research: Generalizability and qualitative research. Journal of Counseling and Development, 99 (2), 178–188.

Horsburgh, D. (2003). Evaluation of qualitative research. Journal of Clinical Nursing, 12 (2), 307–312.

Howe, K. R. (2004). A critique of experimentalism. Qualitative Inquiry, 10 (1), 42–46.

Johnson, J. L., Adkins, D., & Chauvin, S. (2020). A review of the quality indicators of rigor in qualitative research. American Journal of Pharmaceutical Education, 84 (1), 7120.

Johnson, P., Buehring, A., Cassell, C., & Symon, G. (2006). Evaluating qualitative management research: Towards a contingent criteriology. International Journal of Management Reviews, 8 (3), 131–156.

Klein, H. K., & Myers, M. D. (1999). A set of principles for conducting and evaluating interpretive field studies in information systems. MIS Quarterly, 23 (1), 67–93.

Lather, P. (2004). This is your father’s paradigm: Government intrusion and the case of qualitative research in education. Qualitative Inquiry, 10 (1), 15–34.

Levitt, H. M., Morrill, Z., Collins, K. M., & Rizo, J. L. (2021). The methodological integrity of critical qualitative research: Principles to support design and research review. Journal of Counseling Psychology, 68 (3), 357.

Lincoln, Y. S., & Guba, E. G. (1986). But is it rigorous? Trustworthiness and authenticity in naturalistic evaluation. New Directions for Program Evaluation, 1986 (30), 73–84.

Lincoln, Y. S., & Guba, E. G. (2000). Paradigmatic controversies, contradictions and emerging confluences. In N. K. Denzin & Y. S. Lincoln (Eds.), Handbook of qualitative research (2nd ed., pp. 163–188). Sage Publications.

Madill, A., Jordan, A., & Shirley, C. (2000). Objectivity and reliability in qualitative analysis: Realist, contextualist and radical constructionist epistemologies. British Journal of Psychology, 91 (1), 1–20.

Mays, N., & Pope, C. (2020). Quality in qualitative research. Qualitative Research in Health Care. https://doi.org/10.1002/9781119410867.ch15

McGinley, S., Wei, W., Zhang, L., & Zheng, Y. (2021). The state of qualitative research in hospitality: A 5-year review 2014 to 2019. Cornell Hospitality Quarterly, 62 (1), 8–20.

Merriam, S., & Tisdell, E. (2016). Qualitative research: A guide to design and implementation. San Francisco, US.

Meyer, M., & Dykes, J. (2019). Criteria for rigor in visualization design study. IEEE Transactions on Visualization and Computer Graphics, 26 (1), 87–97.

Monrouxe, L. V., & Rees, C. E. (2020). When I say… quantification in qualitative research. Medical Education, 54 (3), 186–187.

Morrow, S. L. (2005). Quality and trustworthiness in qualitative research in counseling psychology. Journal of Counseling Psychology, 52 (2), 250.

Morse, J. M. (2003). A review committee’s guide for evaluating qualitative proposals. Qualitative Health Research, 13 (6), 833–851.

Nassaji, H. (2020). Good qualitative research. Language Teaching Research, 24 (4), 427–431.

O’Brien, B. C., Harris, I. B., Beckman, T. J., Reed, D. A., & Cook, D. A. (2014). Standards for reporting qualitative research: A synthesis of recommendations. Academic Medicine, 89 (9), 1245–1251.

O’Connor, C., & Joffe, H. (2020). Intercoder reliability in qualitative research: Debates and practical guidelines. International Journal of Qualitative Methods, 19, 1609406919899220.

Reid, A., & Gough, S. (2000). Guidelines for reporting and evaluating qualitative research: What are the alternatives? Environmental Education Research, 6 (1), 59–91.

Rocco, T. S. (2010). Criteria for evaluating qualitative studies. Human Resource Development International. https://doi.org/10.1080/13678868.2010.501959

Sandberg, J. (2000). Understanding human competence at work: An interpretative approach. Academy of Management Journal, 43 (1), 9–25.

Schwandt, T. A. (1996). Farewell to criteriology. Qualitative Inquiry, 2 (1), 58–72.

Seale, C. (1999). Quality in qualitative research. Qualitative Inquiry, 5 (4), 465–478.

Shenton, A. K. (2004). Strategies for ensuring trustworthiness in qualitative research projects. Education for Information, 22 (2), 63–75.

Sparkes, A. C. (2001). Myth 94: Qualitative health researchers will agree about validity. Qualitative Health Research, 11 (4), 538–552.

Spencer, L., Ritchie, J., Lewis, J., & Dillon, L. (2004). Quality in qualitative evaluation: A framework for assessing research evidence.

Stenfors, T., Kajamaa, A., & Bennett, D. (2020). How to assess the quality of qualitative research. The Clinical Teacher, 17 (6), 596–599.

Taylor, E. W., Beck, J., & Ainsworth, E. (2001). Publishing qualitative adult education research: A peer review perspective. Studies in the Education of Adults, 33 (2), 163–179.

Tong, A., Sainsbury, P., & Craig, J. (2007). Consolidated criteria for reporting qualitative research (COREQ): A 32-item checklist for interviews and focus groups. International Journal for Quality in Health Care, 19 (6), 349–357.

Tracy, S. J. (2010). Qualitative quality: Eight “big-tent” criteria for excellent qualitative research. Qualitative Inquiry, 16 (10), 837–851.

Open access funding provided by TU Wien (TUW).

Author information

Authors and affiliations.

Faculty of Informatics, Technische Universität Wien, 1040, Vienna, Austria

Drishti Yadav

Corresponding author

Correspondence to Drishti Yadav.

Ethics declarations

Conflict of interest.

The author declares no conflict of interest.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/ .

About this article

Yadav, D. Criteria for Good Qualitative Research: A Comprehensive Review. Asia-Pacific Edu Res 31, 679–689 (2022). https://doi.org/10.1007/s40299-021-00619-0

Accepted: 28 August 2021

Published: 18 September 2021

Issue Date: December 2022

DOI: https://doi.org/10.1007/s40299-021-00619-0

- Qualitative research

- Evaluative criteria

J Grad Med Educ, v.3(4); 2011 Dec

Qualities of Qualitative Research: Part I

Many important medical education research questions cry out for a qualitative research approach: How do teacher characteristics affect learning? Why do learners choose particular specialties? How is professionalism influenced by experiences, mentors, or the curriculum? The medical paradigm, the “hard” science most often taught in medical schools, usually employs quantitative approaches. 1 As a result, clinicians may be less familiar with qualitative research or its applicability to medical education questions. For these “why” types of questions, qualitative or mixed qualitative and quantitative approaches may be more appropriate and helpful. 2 Thus, we wish to encourage submissions to the Journal of Graduate Medical Education that are for qualitative purposes or use qualitative methods.

This editorial is the first in a series of two, and it will provide an introduction to qualitative approaches and compare features of quantitative and qualitative research. The second editorial will review in more detail the approaches for selecting participants, analyzing data, and ensuring rigor and study quality in qualitative research. The aims of the editorials are to enhance readers' understanding of articles using this approach and to encourage more researchers to explore qualitative approaches.

Theory and Methodology

Good research follows from a reasonable starting point, a theoretical concept or perspective. Quantitative research uses a positivist perspective in which evidence is objectively and systematically obtained to prove a causal model or hypothesis; what works is the focus. 3 Alternatively, qualitative approaches focus on how and why something works, to build understanding. 3 In the positivist model, study objects (eg, learners) are independent of the researchers, and knowledge or facts are determined through direct observations. Also, the context in which the observations occur is controlled or assumed to be stable. In contrast, in a qualitative paradigm researchers might interact with the study objects (learners) to collect observations, which are highly context specific. 3

Qualitative research has often been differentiated from quantitative as hypothesis generating rather than hypothesis testing. 4 Qualitative research methods “explore, describe, or generate theory, especially for uncertain and ‘immature’ concepts; sensitive and socially dependent concepts; and complex human intentions and motivations.” 4 In education, qualitative research strives to understand how learning occurs through close study of small numbers of learners and a focus on the individual. It attempts to explain a phenomenon or relationship. Typically, results from qualitative research have been assumed to apply only to the small groups studied, such that generalizability of the results to other populations is not expected. For this reason, qualitative research is considered to be hypothesis generating, although some experts dispute this limitation. 5 Table 1 presents a comparison of qualitative and quantitative approaches.

Table 1. Quantitative Versus Qualitative Research

When Qualitative Studies Make Sense

Qualitative studies are helpful to understand why and how; quantitative studies focus on cause and effect, how much, and numeric correlations. Qualitative approaches are used when the potential answer to a question requires an explanation, not a straightforward yes/no. Generally, qualitative research is concerned with cases rather than variables, and understanding differences rather than calculating the mean of responses. 4 In-depth interviews, focus groups, case studies, and open-ended questions are often employed to find these answers. A qualitative study is concerned with the point of view of the individual under study. 6

For example, the changes in duty hours for residents in 2003 have generated many quantitative research articles, which have counted and correlated the changes in numbers of procedures, patient safety parameters, resident test results, and resident sleep hours. However, to determine why residents still sleep about the same number of hours since 2003, one could start from a qualitative framework in order to understand residents' decisions regarding sleep. Similarly, to understand how residents perceive the influence of resident work hour restrictions on aspects of professionalism, a qualitative study would start with the learners rather than by measuring and correlating scores on professionalism assessments. Because learning takes place in social environments characterized by complex interactions, the quantitative “cause and effect” model is often too simplistic. 7

A variety of ways to collect information are available to researchers, such as observation, field notes, reflexive journals, interviews, focus groups, and analysis of documents and materials; Table 2 provides examples of these methods. Interviews and focus groups are usually audiorecorded and transcribed for analysis, whereas observations are recorded in field notes by the observer.

Table 2. Potential Data Sources for Qualitative Research 8

After data collection, accepted methods are employed to interpret the data. Researchers review the observations and report their impressions in a structured format, with subsequent analysis also standardized. Table 3 provides one example of an analysis plan. Strategies to ensure rigor in data collection and trustworthiness of the data and data analysis will be discussed in the second editorial in the series.

Table 3. Iterative Team Process to Interpret Data 8

In contrast to quantitative methods, subjective responses are critical findings, both in participant responses and observer reactions. The unique or outlier response has value in contributing to understanding the experience of others, and thus individual responses are not lost in the aggregation of findings or in the development of research group consensus. 2,4 Qualitative methods acknowledge the “myth of objectivity” between researcher and subjects of study. 7 In fact, the researcher is unlikely to be a purely detached observer.

Ethical Issues

As qualitative researchers usually attempt to study subjects and interactions in their “natural settings,” ethical issues frequently arise. Because of the sensitive nature of some discussions as well as the relationship between researchers and participants, informed consent is often required. The very reason for doing qualitative research—to discover why and how, particularly for thorny topics—can lead to potential exposure of sensitive opinions, feelings, and personal information. Thus, consideration of how to protect participants from harm is essential from the very onset of the study.

Quality Assessment

Qualitative researchers need to show that their findings are credible. As with quantitative approaches, a strong research project starts with a basic review of existing knowledge: a solid literature search. However, in contrast to quantitative approaches, most qualitative paradigms do not look to find a single “truth,” but rather multiple views of a context-specific “reality.” The concepts of validity and reliability originally evolved from the quantitative tradition, and therefore their accepted definitions are considered inadequate for qualitative research. Instead, concepts of precision, credibility, and transferability are key aspects of evaluating a qualitative study. 9

Although some experts find that reliability has little relevance to qualitative studies, others propose the term “dependability” as the analogous metric for this type of research. Dependability is gained through consistency of data, which is evaluated through transparent research steps and research findings. 9,10 Trustworthiness and rigor are terms used to establish credible findings. One technique often used to enhance trustworthiness and rigor is triangulation, in which multiple data sources (eg, observation, interviews, and recordings), multiple analytic methods, or multiple researchers are used to study the question. 9 The overall goal is to minimize and understand potential bias while ensuring the researcher's “truthfulness” of interpretation. 9

A potentially helpful appraisal checklist for qualitative studies, developed by Coté and Turgeon, 11 is found in Table 4. This appraisal checklist has not been examined systematically. Table 5 includes a list of terms commonly used in qualitative research. Approaches to ensure rigor and trustworthiness in qualitative research will be addressed in greater detail in Part 2.

Table 4. Sample Quality Appraisal Checklist for Qualitative Studies 11

Table 5. Commonly Used Terms in Qualitative Research 8

Both quantitative and qualitative approaches have strengths and weaknesses; medical education research will benefit from each type of inquiry. The best approach will depend on the kind of question asked, and the best methods will be those most appropriate to the question. 4 To learn more about this topic, the references below are a useful start, as is talking to colleagues engaged in qualitative research at your institution or in your specialty.

Gail M. Sullivan, MD, MPH, is Editor-in-Chief, Journal of Graduate Medical Education; and Joan Sargeant, PhD, is Professor in the Division of Medical Education, Dalhousie University, Halifax, Nova Scotia, Canada.

Qualitative Research: Characteristics, Design, Methods & Examples

Lauren McCall

MSc Health Psychology Graduate

MSc, Health Psychology, University of Nottingham

Lauren obtained an MSc in Health Psychology from The University of Nottingham with a distinction classification.

Saul McLeod, PhD

Editor-in-Chief for Simply Psychology

BSc (Hons) Psychology, MRes, PhD, University of Manchester

Saul McLeod, PhD., is a qualified psychology teacher with over 18 years of experience in further and higher education. He has been published in peer-reviewed journals, including the Journal of Clinical Psychology.

Olivia Guy-Evans, MSc

Associate Editor for Simply Psychology

BSc (Hons) Psychology, MSc Psychology of Education

Olivia Guy-Evans is a writer and associate editor for Simply Psychology. She has previously worked in healthcare and educational sectors.

Qualitative research is a type of research methodology that focuses on gathering and analyzing non-numerical data to gain a deeper understanding of human behavior, experiences, and perspectives.

It aims to explore the “why” and “how” of a phenomenon rather than the “what,” “where,” and “when” typically addressed by quantitative research.

Unlike quantitative research, which focuses on gathering and analyzing numerical data for statistical analysis, qualitative research involves researchers interpreting data to identify themes, patterns, and meanings.

Qualitative research can be used to:

- Gain deep contextual understandings of the subjective social reality of individuals

- Answer questions about experience and meaning from the participant’s perspective

- Inform the design of hypotheses and theory by establishing what is important before further research begins

Examples of qualitative research questions include:

- How does stress influence young adults’ behavior?

- What factors influence students’ school attendance rates in developed countries?

- How do adults interpret binge drinking in the UK?

- What are the psychological impacts of cervical cancer screening in women?

- How can mental health lessons be integrated into the school curriculum?

Characteristics

Naturalistic setting

Individuals are studied in their natural setting to gain a deeper understanding of how people experience the world. This enables the researcher to understand a phenomenon close to how participants experience it.

Naturalistic settings provide valuable contextual information to help researchers better understand and interpret the data they collect.

The environment, social interactions, and cultural factors can all influence behavior and experiences, and these elements are more easily observed in real-world settings.

Reality is socially constructed

Qualitative research aims to understand how participants make meaning of their experiences – individually or in social contexts. It assumes there is no objective reality and that the social world is interpreted (Yilmaz, 2013).

The primacy of subject matter

The primary aim of qualitative research is to understand the perspectives, experiences, and beliefs of individuals who have experienced the phenomenon selected for research rather than the average experiences of groups of people (Minichiello, 1990).

An in-depth understanding is attained since qualitative techniques allow participants to freely disclose their experiences, thoughts, and feelings without constraint (Tenny et al., 2022).

Variables are complex, interwoven, and difficult to measure

Factors such as experiences, behaviors, and attitudes are complex and interwoven, so they cannot be reduced to isolated variables, making them difficult to measure quantitatively.

However, a qualitative approach enables participants to describe what, why, or how they were thinking/ feeling during a phenomenon being studied (Yilmaz, 2013).

Emic (insider’s point of view)

The phenomenon being studied is centered on the participants’ point of view (Minichiello, 1990).

Emic is used to describe how participants interact, communicate, and behave in the research setting (Scarduzio, 2017).

Interpretive analysis

In qualitative research, interpretive analysis is crucial in making sense of the collected data.

This process involves examining the raw data, such as interview transcripts, field notes, or documents, and identifying the underlying themes, patterns, and meanings that emerge from the participants’ experiences and perspectives.

Collecting Qualitative Data

There are four main research design methods used to collect qualitative data: observations, interviews, focus groups, and ethnography.

Observations

This method involves watching and recording phenomena as they occur in nature. Observation can be divided into two types: participant and non-participant observation.

In participant observation, the researcher actively participates in the situation/events being observed.

In non-participant observation, the researcher is not an active part of the observation and tries not to influence the behaviors they are observing (Busetto et al., 2020).

Observations can be covert (participants are unaware that a researcher is observing them) or overt (participants are aware of the researcher’s presence and know they are being observed).

However, awareness of an observer’s presence may influence participants’ behavior.

Interviews

Interviews give researchers a window into the world of a participant by seeking their account of an event, situation, or phenomenon. They are usually conducted on a one-to-one basis and can be distinguished according to the level at which they are structured (Punch, 2013).

Structured interviews involve predetermined questions and sequences to ensure replicability and comparability. However, they are unable to explore emerging issues.

Informal interviews consist of spontaneous, casual conversations, which may bring the researcher closer to participants’ natural accounts of a phenomenon. However, information is typically captured in quick notes made by the researcher and is therefore subject to recall bias.

Semi-structured interviews have a flexible structure, phrasing, and placement so emerging issues can be explored (Denny & Weckesser, 2022).

The use of probing questions and clarification can lead to a detailed understanding, but semi-structured interviews can be time-consuming and subject to interviewer bias.

Focus groups

Similar to interviews, focus groups elicit a rich and detailed account of an experience. However, focus groups are more dynamic since participants with shared characteristics construct this account together (Denny & Weckesser, 2022).

A shared narrative is built between participants to capture a group experience shaped by a shared context.

The researcher takes on the role of a moderator, who will establish ground rules and guide the discussion by following a topic guide to focus the group discussions.

Typically, focus groups have 4–10 participants: larger groups can be difficult to facilitate, and this size gives everyone time to speak.

Ethnography

Ethnography is a methodology used to study a group of people’s behaviors and social interactions in their environment (Reeves et al., 2008).

Data are collected using methods such as observations, field notes, or structured/ unstructured interviews.

The aim of ethnography is to provide detailed, holistic insights into people’s behavior and perspectives within their natural setting. In order to achieve this, researchers immerse themselves in a community or organization.

Due to the flexibility and real-world focus of ethnography, researchers are able to gather an in-depth, nuanced understanding of people’s experiences, knowledge and perspectives that are influenced by culture and society.

In order to develop a representative picture of a particular culture/ context, researchers must conduct extensive field work.

This can be time-consuming as researchers may need to immerse themselves into a community/ culture for a few days, or possibly a few years.

Qualitative Data Analysis Methods

Different methods can be used for analyzing qualitative data. The researcher chooses based on the objectives of their study.

The researcher plays a key role in the interpretation of data, making decisions about the coding, theming, decontextualizing, and recontextualizing of data (Starks & Trinidad, 2007).

Grounded theory

Grounded theory is a qualitative method specifically designed to inductively generate theory from data. It was developed by Glaser and Strauss in 1967 (Glaser & Strauss, 2017).

This methodology aims to develop theories (rather than test hypotheses) that explain a social process, action, or interaction (Petty et al., 2012). To inform the developing theory, data collection and analysis run simultaneously.

There are three key types of coding used in grounded theory: initial (open), intermediate (axial), and advanced (selective) coding.

Throughout the analysis, memos should be created to document methodological and theoretical ideas about the data. Data should be collected and analyzed until data saturation is reached and a theory is developed.
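One informal way to monitor data saturation is to track how many previously unseen codes each new interview contributes. The sketch below assumes the researcher has already assigned codes to each interview; the interview data, the code labels, and the stopping rule (two consecutive interviews with no new codes) are illustrative assumptions rather than a standard drawn from the grounded theory literature.

```python
def new_codes_per_interview(coded_interviews):
    """Return, for each interview in order, the number of codes not seen before."""
    seen = set()
    counts = []
    for codes in coded_interviews:
        fresh = set(codes) - seen
        counts.append(len(fresh))
        seen |= fresh
    return counts


def saturation_point(counts, window=2):
    """Return the 1-based index of the interview at which `window` consecutive
    interviews have added no new codes, or None if that never happens."""
    run = 0
    for i, n in enumerate(counts, start=1):
        run = run + 1 if n == 0 else 0
        if run == window:
            return i
    return None


# Hypothetical codes assigned to five interviews (illustrative only).
interviews = [
    ["workload", "fatigue"],
    ["fatigue", "peer support"],
    ["workload", "autonomy"],
    ["peer support", "fatigue"],
    ["autonomy", "workload"],
]

counts = new_codes_per_interview(interviews)
print(counts)
print(saturation_point(counts))
```

A count of new codes is only a rough proxy: whether saturation has been reached remains a judgment the researcher makes about the developing theory, not a number alone.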

Content analysis

Content analysis was first used in the early twentieth century to analyze textual materials such as newspapers and political speeches.

Content analysis is a research method used to identify and analyze the presence and patterns of themes, concepts, or words in data (Vaismoradi et al., 2013).

This research method can be used to analyze data in different formats, which can be written, oral, or visual.

The goal of content analysis is to develop themes that capture the underlying meanings of data (Schreier, 2012).

Qualitative content analysis can be used to validate existing theories, support the development of new models and theories, and provide in-depth descriptions of particular settings or experiences.

The following six steps provide a guideline for how to conduct qualitative content analysis.

- Define a Research Question : To start content analysis, a clear research question should be developed.

- Identify and Collect Data : Establish the inclusion criteria for your data. Find the relevant sources to analyze.

- Define the Unit or Theme of Analysis : Categorize the content into themes. Themes can be a word, phrase, or sentence.

- Develop Rules for Coding your Data : Define a set of coding rules to ensure that all data are coded consistently.

- Code the Data : Follow the coding rules to categorize data into themes.

- Analyze the Results and Draw Conclusions : Examine the data to identify patterns and draw conclusions in relation to your research question.
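The coding steps above can be sketched in a few lines of Python. This is a deliberately crude illustration: it assumes that each theme can be recognised by a short keyword list, which is a stand-in for the interpretive judgment a human coder applies. The rule set, theme names, and example responses are all hypothetical.

```python
from collections import Counter

# Hypothetical coding rules: each theme is defined by keywords (assumed for illustration).
CODING_RULES = {
    "stress": ["stress", "pressure", "overwhelmed"],
    "support": ["support", "help", "mentor"],
}


def code_unit(text, rules):
    """Return the sorted list of themes whose keywords appear in one unit of text.

    Matching is by lowercase substring, so "help" also matches "helpful".
    """
    lowered = text.lower()
    return sorted(
        theme
        for theme, keywords in rules.items()
        if any(keyword in lowered for keyword in keywords)
    )


def code_dataset(units, rules):
    """Apply the same rules to every unit and count how often each theme occurs."""
    tally = Counter()
    for unit in units:
        tally.update(code_unit(unit, rules))
    return tally


# Hypothetical units of analysis (one sentence per unit).
units = [
    "I felt overwhelmed by the pressure of exams.",
    "My mentor was a great help.",
    "The stress eased once I had support from friends.",
]

theme_counts = code_dataset(units, CODING_RULES)
print(theme_counts)
```

Writing the rules down as data, separate from the function that applies them, mirrors the point of step four: every unit is coded against the same explicit rules, so the coding can be inspected and repeated.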

Discourse analysis

Discourse analysis is a research method used to study written/ spoken language in relation to its social context (Wood & Kroger, 2000).

In discourse analysis, the researcher interprets details of language materials and the context in which they are situated.

Discourse analysis aims to understand the functions of language (how language is used in real life) and how meaning is conveyed by language in different contexts. Researchers use discourse analysis to investigate social groups and how language is used to achieve specific communication goals.

Different methods of discourse analysis can be used depending on the aims and objectives of a study. However, the following steps provide a guideline on how to conduct discourse analysis.

- Define the Research Question : Develop a relevant research question to frame the analysis.

- Gather Data and Establish the Context : Collect research materials (e.g., interview transcripts, documents). Gather factual details and review the literature to construct a theory about the social and historical context of your study.

- Analyze the Content : Closely examine various components of the text, such as the vocabulary, sentences, paragraphs, and structure of the text. Identify patterns relevant to the research question to create codes, then group these into themes.

- Review the Results : Reflect on the findings to examine the function of the language, and the meaning and context of the discourse.

Thematic analysis

Thematic analysis is a method used to identify, interpret, and report patterns in data, such as commonalities or contrasts.

Although the origin of thematic analysis can be traced back to the early twentieth century, its clear articulation as a method is generally attributed to Braun and Clarke (2006).

Thematic analysis aims to develop themes (patterns of meaning) across a dataset to address a research question.

In thematic analysis, qualitative data are gathered using techniques such as interviews, focus groups, and questionnaires. Audio recordings are transcribed. The dataset is then explored and interpreted by a researcher to identify patterns.

This occurs through the rigorous process of data familiarisation, coding, theme development, and revision. These identified patterns provide a summary of the dataset and can be used to address a research question.

Themes are developed by exploring the implicit and explicit meanings within the data. Two different approaches are used to generate themes: inductive and deductive.

An inductive approach allows themes to emerge from the data. In contrast, a deductive approach uses existing theories or knowledge to apply preconceived ideas to the data.

Phases of Thematic Analysis

Braun and Clarke (2006) provide a guide of the six phases of thematic analysis. These phases can be applied flexibly to fit research questions and data.

| Phase | Description |
|---|---|
| 1. Gather and transcribe data | Gather raw data, for example interviews or focus groups, and transcribe audio recordings fully |
| 2. Familiarization with data | Read and reread all your data from beginning to end; note down initial ideas |
| 3. Create initial codes | Start identifying preliminary codes which highlight important features of the data and may be relevant to the research question |
| 4. Create new codes which encapsulate potential themes | Review initial codes and explore any similarities, differences, or contradictions to uncover underlying themes; create a map to visualize identified themes |
| 5. Take a break then return to the data | Take a break and then return later to review themes |
| 6. Evaluate themes for good fit | Last opportunity for analysis; check themes are supported and saturated with data |

Template analysis

Template analysis refers to a specific method of thematic analysis which uses hierarchical coding (Brooks et al., 2014).

Template analysis is used to analyze textual data, for example, interview transcripts or open-ended responses on a written questionnaire.

To conduct template analysis, a coding template must be developed (usually from a subset of the data) and subsequently revised and refined. This template represents the themes identified by researchers as important in the dataset.

Codes are ordered hierarchically within the template, with the highest-level codes demonstrating overarching themes in the data and lower-level codes representing constituent themes with a narrower focus.

A guideline for the main procedural steps for conducting template analysis is outlined below.

- Familiarization with the Data : Read (and reread) the dataset in full. Engage, reflect, and take notes on data that may be relevant to the research question.

- Preliminary Coding : Identify initial codes, guided by any a priori codes (codes defined before the analysis because they are likely to be relevant to it).

- Organize Themes : Organize themes into meaningful clusters. Consider the relationships between the themes both within and between clusters.

- Produce an Initial Template : Develop an initial template. This may be based on a subset of the data.

- Apply and Develop the Template : Apply the initial template to further data and make any necessary modifications. Refinements of the template may include adding themes, removing themes, or changing the scope/title of themes.

- Finalize Template : Finalize the template, then apply it to the entire dataset.
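A hierarchical coding template maps naturally onto a nested data structure. The sketch below assumes a template stored as nested dictionaries, with top-level keys as overarching themes and nested keys as constituent themes; the theme titles and the helper names `flatten` and `add_theme` are hypothetical and chosen only for illustration.

```python
# Hypothetical coding template: top-level themes map to lower-level (constituent) themes.
template = {
    "Workload": {
        "Long hours": {},
        "Administrative tasks": {},
    },
    "Support": {
        "Peer support": {},
    },
}


def flatten(node, level=1, prefix=""):
    """Return (number, level, title) rows for every code, depth first."""
    rows = []
    for i, (title, children) in enumerate(node.items(), start=1):
        number = f"{prefix}{i}"
        rows.append((number, level, title))
        rows.extend(flatten(children, level + 1, prefix=f"{number}."))
    return rows


def add_theme(node, path, title):
    """Insert a new theme under the theme addressed by `path` (a list of titles)."""
    for step in path:
        node = node[step]
    node[title] = {}


# Revising the template after coding further data: add a constituent theme.
add_theme(template, ["Support"], "Supervisor support")

rows = flatten(template)
for number, level, title in rows:
    print("  " * (level - 1) + f"{number} {title}")
```

Numbering the codes (1, 1.1, 1.2, 2, ...) makes successive versions of the template easier to compare as themes are added, removed, or rescoped.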

Framework analysis

Framework analysis is a comparative form of thematic analysis which systematically analyzes data using a matrix output.

Ritchie and Spencer (1994) developed this set of techniques to analyze qualitative data in applied policy research. Framework analysis aims to generate theory from data.

Framework analysis encourages researchers to organize and manage their data using summarization.

This results in a flexible and unique matrix output, in which individual participants (or cases) are represented by rows and themes are represented by columns.

Each intersecting cell is used to summarize findings relating to the corresponding participant and theme.

Framework analysis has five distinct phases which are interrelated, forming a methodical and rigorous framework.

- Familiarization with the Data : Familiarize yourself with all the transcripts. Immerse yourself in the details of each transcript and start to note recurring themes.

- Develop a Theoretical Framework : Identify recurrent/ important themes and add them to a chart. Provide a framework/ structure for the analysis.

- Indexing : Apply the framework systematically to the entire study data.

- Summarize Data in Analytical Framework : Reduce the data into brief summaries of participants’ accounts.

- Mapping and Interpretation : Compare themes and subthemes and check against the original transcripts. Group the data into categories and provide an explanation for them.
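The matrix output described above (participants as rows, themes as columns, one summary per cell) can be sketched in a few lines of Python. The participant IDs, theme names, and summaries below are invented for illustration, and the helper names `summarize`, `theme_column`, and `to_csv` are hypothetical; in practice researchers often use a spreadsheet or qualitative analysis software for this step.

```python
import csv
import io

# Hypothetical themes (columns) and participants (rows).
themes = ["Motivation to quit", "Barriers", "Support"]
participants = ["P01", "P02", "P03"]

# matrix[participant][theme] holds a brief summary; empty until charted.
matrix = {p: {t: "" for t in themes} for p in participants}


def summarize(participant: str, theme: str, summary: str) -> None:
    """Write a brief summary into the cell for one participant and theme."""
    if participant not in matrix or theme not in matrix[participant]:
        raise KeyError(f"Unknown cell: {participant!r} / {theme!r}")
    matrix[participant][theme] = summary


def theme_column(theme: str) -> dict[str, str]:
    """Read down one column to compare participants on a single theme."""
    return {p: row[theme] for p, row in matrix.items() if row[theme]}


def to_csv() -> str:
    """Export the matrix with one row per participant."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(["Participant", *themes])
    for p in participants:
        writer.writerow([p, *(matrix[p][t] for t in themes)])
    return buffer.getvalue()


summarize("P01", "Barriers", "Smokes mostly when stressed at work")
summarize("P02", "Barriers", "Most friends smoke")
summarize("P02", "Support", "Partner encourages quitting")

print(theme_column("Barriers"))
print(to_csv())
```

Reading across a row gives a within-case view of one participant, while reading down a column supports comparison between participants on the same theme.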

Preventing Bias in Qualitative Research

To evaluate qualitative studies, the CASP (Critical Appraisal Skills Programme) checklist for qualitative studies can be used to ensure all aspects of a study have been considered (CASP, 2018).

The quality of research can be enhanced and assessed using criteria such as checklists, reflexivity, co-coding, and member-checking.

Co-coding

Relying on only one researcher to interpret rich and complex data may risk key insights and alternative viewpoints being missed. Therefore, coding is often performed by multiple researchers.

A common strategy must be defined at the beginning of the coding process (Busetto et al., 2020). This includes establishing a useful coding list and finding a common definition of individual codes.

Transcripts are initially coded independently by researchers and then compared and consolidated to minimize error or bias and to bring confirmation of findings.
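As a rough sketch of the comparison step, the snippet below lines up two researchers' independent codes for the same transcript segments and lists the segments that need discussion. The segment IDs and codes are invented, and simple percentage agreement is used here only as an illustrative assumption; the source does not prescribe a particular agreement measure.

```python
# Hypothetical codes assigned independently by two researchers.
coder_a = {"seg1": "stress", "seg2": "family support", "seg3": "cost", "seg4": "stress"}
coder_b = {"seg1": "stress", "seg2": "peer influence", "seg3": "cost", "seg4": "habit"}


def compare(a: dict[str, str], b: dict[str, str]) -> tuple[float, dict[str, tuple[str, str]]]:
    """Return the share of segments coded identically and the disagreements."""
    shared = sorted(a.keys() & b.keys())
    disagreements = {s: (a[s], b[s]) for s in shared if a[s] != b[s]}
    agreement = (len(shared) - len(disagreements)) / len(shared) if shared else 0.0
    return agreement, disagreements


agreement, disagreements = compare(coder_a, coder_b)
print(f"Agreement: {agreement:.0%}")
for segment, (code_a, code_b) in disagreements.items():
    print(f"{segment}: coder A = {code_a!r}, coder B = {code_b!r}")
```

The list of disagreements is the useful output here: each entry is a prompt for the researchers to revisit the code definitions and agree on a consolidated code.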

Member checking

Member checking (or respondent validation) involves checking back with participants to see if the research resonates with their experiences (Russell & Gregory, 2003).

Data can be returned to participants after data collection or when results are first available. For example, participants may be provided with their interview transcript and asked to verify whether this is a complete and accurate representation of their views.

Participants may then clarify or elaborate on their responses to ensure they align with their views (Shenton, 2004).

This feedback becomes part of data collection and ensures accurate descriptions/ interpretations of phenomena (Mays & Pope, 2000).

Reflexivity in qualitative research

Reflexivity typically involves examining your own judgments, practices, and belief systems during data collection and analysis. It aims to identify any personal beliefs which may affect the research.

Reflexivity is essential in qualitative research to ensure methodological transparency and complete reporting. This enables readers to understand how the interaction between the researcher and participant shapes the data.

Depending on the research question and population being researched, factors that need to be considered include the experience of the researcher, how the contact was established and maintained, age, gender, and ethnicity.

These details are important because, in qualitative research, the researcher is a dynamic part of the research process and actively influences the outcome of the research (Boeije, 2014).

Reflexivity Example

Who you are and your characteristics influence how you collect and analyze data. Here is an example of a reflexivity statement for research on smoking:

“I am a 30-year-old white female from a middle-class background. I live in the southwest of England and have been educated to master’s level. I have been involved in two research projects on oral health. I have never smoked, but I have witnessed how smoking can cause ill health from my volunteering in a smoking cessation clinic. My research aspirations are to help to develop interventions to help smokers quit.”

Establishing Trustworthiness in Qualitative Research

Trustworthiness is a concept used to assess the quality and rigor of qualitative research. Four criteria are used to assess a study’s trustworthiness: credibility, transferability, dependability, and confirmability.

1. Credibility in Qualitative Research

Credibility refers to how accurately the results represent the reality and viewpoints of the participants.

To establish credibility in research, participants’ views and the researcher’s representation of their views need to align (Tobin & Begley, 2004).

To increase the credibility of findings, researchers may use data source triangulation, investigator triangulation, peer debriefing, or member checking (Lincoln & Guba, 1985).

2. Transferability in Qualitative Research

Transferability refers to how generalizable the findings are: whether the findings may be applied to another context, setting, or group (Tobin & Begley, 2004).

Transferability can be enhanced by giving thorough and in-depth descriptions of the research setting, sample, and methods (Nowell et al., 2017).

3. Dependability in Qualitative Research

Dependability is the extent to which the study could be replicated under similar conditions and the findings would be consistent.

Researchers can establish dependability using methods such as audit trails so readers can see the research process is logical and traceable (Koch, 1994).

4. Confirmability in Qualitative Research

Confirmability is concerned with establishing that there is a clear link between the researcher’s interpretations/ findings and the data.

Researchers can achieve confirmability by demonstrating how conclusions and interpretations were arrived at (Nowell et al., 2017).

This enables readers to understand the reasoning behind the decisions made.

Audit Trails in Qualitative Research

An audit trail provides evidence of the decisions made by the researcher regarding theory, research design, and data collection, as well as the steps they have chosen to manage, analyze, and report data.

The researcher must provide a clear rationale to demonstrate how conclusions were reached in their study.

A clear description of the research path must be provided to enable readers to trace through the researcher’s logic (Halpern, 1983).

Researchers should maintain records of the raw data, field notes, transcripts, and a reflective journal in order to provide a clear audit trail.

Discovery of unexpected data

Open-ended questions in qualitative research mean the researcher can probe an interview topic and enable the participant to elaborate on responses in an unrestricted manner.

This allows unexpected data to emerge, which can lead to further research into that topic.

The exploratory nature of qualitative research helps generate hypotheses that can be tested quantitatively (Busetto et al., 2020).

Flexibility

Data collection and analysis can be modified and adapted to take the research in a different direction if new ideas or patterns emerge in the data.

This enables researchers to investigate new opportunities while firmly maintaining their research goals.

Naturalistic settings

The behaviors of participants are recorded in real-world settings. Studies that use real-world settings have high ecological validity since participants behave more authentically.

Limitations

Time-consuming

Qualitative research results in large amounts of data which often need to be transcribed and analyzed manually.

Even when software is used, transcription can be inaccurate, and using software for analysis can result in many codes which need to be condensed into themes.

Subjectivity

The researcher has an integral role in collecting and interpreting qualitative data. Therefore, the conclusions reached are from their perspective and experience.

Consequently, interpretations of data from another researcher may vary greatly.

Limited generalizability

The aim of qualitative research is to provide a detailed, contextualized understanding of an aspect of the human experience from a relatively small sample size.

Despite rigorous analysis procedures, conclusions drawn cannot be generalized to the wider population since data may be biased or unrepresentative.

Therefore, results are only applicable to a small group of the population.

While individual qualitative studies are often limited in their generalizability due to factors such as sample size and context, metasynthesis enables researchers to synthesize findings from multiple studies, potentially leading to more generalizable conclusions.

By integrating findings from studies conducted in diverse settings and with different populations, metasynthesis can provide broader insights into the phenomenon of interest.

Extraneous variables

Qualitative research is often conducted in real-world settings. This may cause results to be unreliable since extraneous variables may affect the data, for example:

- Situational variables : different environmental conditions may influence participants’ behavior in a study. The random variation in factors (such as noise or lighting) may be difficult to control in real-world settings.

- Participant characteristics : this includes any characteristics that may influence how a participant answers/ behaves in a study. This may include a participant’s mood, gender, age, ethnicity, sexual identity, IQ, etc.

- Experimenter effect : experimenter effect refers to how a researcher’s unintentional influence can change the outcome of a study. This occurs when (i) the researcher’s interactions with participants unintentionally change participants’ behaviors or (ii) the researcher makes errors in observation, interpretation, or analysis.

What sample size should qualitative research be?

A minimum of 12 participants has been recommended for qualitative studies to reach data saturation (Braun, 2013).

Are surveys qualitative or quantitative?

Surveys can be used to gather information from a sample qualitatively or quantitatively. Qualitative surveys use open-ended questions to gather detailed information from a large sample using free text responses.

The use of open-ended questions allows for unrestricted responses where participants use their own words, enabling the collection of more in-depth information than closed-ended questions.

In contrast, quantitative surveys consist of closed-ended questions with multiple-choice answer options. Quantitative surveys are ideal to gather a statistical representation of a population.

What are the ethical considerations of qualitative research?

Before conducting a study, you must think about any risks that could occur and take steps to prevent them.

- Participant Protection : Researchers must protect participants from physical and mental harm. This means you must not embarrass, frighten, offend, or harm participants.

- Transparency : Researchers are obligated to clearly communicate how they will collect, store, analyze, use, and share the data.

- Confidentiality : You need to consider how to maintain the confidentiality and anonymity of participants’ data.

What is triangulation in qualitative research?

Triangulation refers to the use of several approaches in a study to comprehensively understand phenomena. This method helps to increase the validity and credibility of research findings.

Types of triangulation include method triangulation (using multiple methods to gather data), investigator triangulation (multiple researchers collecting/ analyzing data), theory triangulation (comparing several theoretical perspectives to explain a phenomenon), and data source triangulation (using data from various times, locations, and people) (Carter et al., 2014).

Why is qualitative research important?

Qualitative research allows researchers to describe and explain the social world. The exploratory nature of qualitative research helps to generate hypotheses that can then be tested quantitatively.

In qualitative research, participants are able to express their thoughts, experiences, and feelings without constraint.

Additionally, researchers are able to follow up on participants’ answers in real-time, generating valuable discussion around a topic. This enables researchers to gain a nuanced understanding of phenomena which is difficult to attain using quantitative methods.

What is coding data in qualitative research?

Coding data is a qualitative data analysis strategy in which a section of text is assigned a label that describes its content.

These labels may be words or phrases which represent important (and recurring) patterns in the data.

This process enables researchers to identify related content across the dataset. Codes can then be used to group similar types of data to generate themes.
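A minimal sketch of this idea follows: sections of text are paired with labels, related content is gathered by code, and codes are then grouped into themes. The quotations, code labels, and the `code_to_theme` mapping are all invented for illustration; in a real study the grouping into themes is an interpretive decision made by the researcher, not a lookup.

```python
from collections import defaultdict

# Hypothetical sections of text, each assigned a code (label).
coded_segments = [
    ("I light up whenever work gets too much", "stress"),
    ("My sister kept checking in on me", "family support"),
    ("Deadlines make me reach for a cigarette", "stress"),
    ("The nurse at the clinic was encouraging", "professional support"),
]

# Hypothetical researcher decision about which codes belong to which theme.
code_to_theme = {
    "stress": "Triggers",
    "family support": "Support",
    "professional support": "Support",
}

# Gather related content across the dataset by code.
by_code = defaultdict(list)
for text, code in coded_segments:
    by_code[code].append(text)

# Group similar codes together to form themes.
by_theme = defaultdict(list)
for code, texts in by_code.items():
    by_theme[code_to_theme[code]].extend(texts)

for theme, texts in by_theme.items():
    print(f"{theme}: {len(texts)} segment(s)")
```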

What is the difference between qualitative and quantitative research?

Qualitative research involves the collection and analysis of non-numerical data in order to understand experiences and meanings from the participant’s perspective.

This can provide rich, in-depth insights on complicated phenomena. Qualitative data may be collected using interviews, focus groups, or observations.

In contrast, quantitative research involves the collection and analysis of numerical data to measure the frequency, magnitude, or relationships of variables. This can provide objective and reliable evidence that can be generalized to the wider population.

Quantitative data may be collected using closed-ended questionnaires or experiments.

What is trustworthiness in qualitative research?

Trustworthiness is a concept used to assess the quality and rigor of qualitative research. Four criteria are used to assess a study’s trustworthiness: credibility, transferability, dependability, and confirmability.

Credibility refers to how accurately the results represent the reality and viewpoints of the participants. Transferability refers to whether the findings may be applied to another context, setting, or group.

Dependability is the extent to which the findings are consistent and reliable. Confirmability refers to the objectivity of findings (not influenced by the bias or assumptions of researchers).

What is data saturation in qualitative research?