
5 software tools to support your systematic review processes

By Dr. Mina Kalantar on 19-Jan-2021 13:01:01

Systematic reviews are a reassessment of scholarly literature to facilitate decision making. This methodical approach of re-evaluating evidence was initially applied in healthcare, to set policies, create guidelines and answer medical questions.

Systematic reviews are large, complex projects and, depending on the purpose, they can be quite expensive to conduct. A team of researchers, data analysts and experts from various fields may collaborate to review and examine very large numbers of research articles for evidence synthesis. Depending on their scope, systematic reviews often take at least 6 months, and sometimes upwards of 18 months, to complete.

The main principles of transparency and reproducibility require a pragmatic approach in the organisation of the required research activities and detailed documentation of the outcomes. As a result, many software tools have been developed to help researchers with some of the tedious tasks required as part of the systematic review process.


The first generation of these software tools was produced to accommodate and manage collaborations, but the tools gradually developed to help with screening literature and reporting outcomes. Some of these software packages were initially designed for medical and healthcare studies and have specific protocols and customised steps integrated for various types of systematic reviews. Others are designed for general processing and, as the systematic review approach has extended to other fields, they are being increasingly adopted in software engineering, health-related nutrition, agriculture, environmental science, social sciences and education.

Software tools

There are various free and subscription-based tools to help with conducting a systematic review. Many of these tools are designed to assist with the key stages of the process, including title and abstract screening, data synthesis, and critical appraisal. Some are designed to facilitate the entire process of review, including protocol development, reporting of the outcomes and help with fast project completion.

As time goes on, more functions are being integrated into such software tools. Technological advancement has allowed for more sophisticated and user-friendly features, including visual graphics for pattern recognition and linking multiple concepts. The idea is to digitalise the cumbersome parts of the process to increase efficiency, thus allowing researchers to focus their time and efforts on assessing the rigorousness and robustness of the research articles.

This article introduces commonly used systematic review tools that are relevant to food research and related disciplines, which can be used in a similar context to the process in healthcare disciplines.

These reviews are based on IFIS' internal research, thus are unbiased and not affiliated with the companies.

Covidence

This online platform is a core component of the Cochrane toolkit, supporting parts of the systematic review process, including title/abstract and full-text screening, documentation, and reporting.

The Covidence platform enables collaboration of the entire systematic reviews team and is suitable for researchers and students at all levels of experience.

From a user perspective, the interface is intuitive, and the citation screening is directed step-by-step through a well-defined workflow. Imports and exports are straightforward, with easy export options to Excel and CSV.

Access is free for Cochrane authors (a single reviewer), and Cochrane provides a free trial to other researchers in healthcare. Universities can also subscribe on an institutional basis.

Rayyan

Rayyan is a free and open access web-based platform funded by the Qatar Foundation, a non-profit organisation supporting education and community development initiatives. Rayyan is used to screen and code literature through a systematic review process.

Unlike Covidence, Rayyan does not follow a standard SR workflow and simply helps with citation screening. It is accessible through a mobile application with compatibility for offline screening. The web-based platform is known for its accessible user interface, with easy and clear export options.

Function comparison of 5 software tools to support the systematic review process

| Function | Covidence | Rayyan | EPPI-Reviewer | CADIMA | DistillerSR |
| --- | --- | --- | --- | --- | --- |
| Protocol development | | | | | |
| Database integration | | | Only PubMed | | PubMed |
| Ease of import & export | | | | | |
| Duplicate removal | | | | | |
| Article screening | Inc. full text | Title & abstract | Inc. full text | Inc. full text | Inc. full text |
| Critical appraisal | | | | | |
| Assist with reporting | | | | | |
| Meta-analysis | | | | | |
| Cost | Subscription | Free | Subscription | Free | Subscription |

EPPI-Reviewer

EPPI-Reviewer is a web-based software programme developed by the Evidence for Policy and Practice Information and Co-ordinating Centre (EPPI-Centre) at the UCL Institute of Education, London.

It provides comprehensive functionalities for coding and screening. Users can create different levels of coding in a code set tool for clustering, screening, and administration of documents. EPPI-Reviewer allows direct search and import from PubMed. The import of search results from other databases is feasible in different formats. It stores, references, identifies and removes duplicates automatically. EPPI-Reviewer allows full-text screening, text mining, meta-analysis and the export of data into different types of reports.
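To make the idea of automatic duplicate removal concrete, here is a minimal Python sketch. It is not EPPI-Reviewer's actual method, which the source does not describe. It assumes each imported reference is a dictionary with hypothetical `title`, `year` and `doi` fields, and the DOIs shown are made up for illustration.

```python
import re


def normalize_title(title: str) -> str:
    """Lowercase a title and collapse punctuation and whitespace to single spaces."""
    return re.sub(r"[^a-z0-9]+", " ", title.lower()).strip()


def record_key(record: dict) -> tuple:
    """Prefer the DOI as the identity key; fall back to normalized title plus year."""
    doi = (record.get("doi") or "").strip().lower()
    if doi:
        return ("doi", doi)
    return ("title", normalize_title(record.get("title", "")), record.get("year"))


def deduplicate(records: list[dict]) -> tuple[list[dict], list[dict]]:
    """Return (unique, duplicates), keeping the first occurrence of each key."""
    seen = set()
    unique, duplicates = [], []
    for record in records:
        key = record_key(record)
        if key in seen:
            duplicates.append(record)
        else:
            seen.add(key)
            unique.append(record)
    return unique, duplicates


# Illustrative records only; the DOIs are invented.
records = [
    {"title": "Dietary fibre and gut health", "year": 2019, "doi": "10.1000/abc123"},
    {"title": "Dietary Fibre and Gut Health.", "year": 2019, "doi": "10.1000/ABC123"},
    {"title": "Salt intake in adults: a review", "year": 2020, "doi": ""},
    {"title": "Salt intake in adults - a review", "year": 2020, "doi": None},
]
unique, duplicates = deduplicate(records)
print(len(unique), len(duplicates))
```

A sketch like this misses duplicates where one copy has a DOI and the other does not, which is one reason duplicates flagged by a tool are usually worth a manual check.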

There is no limit on concurrent use of the software or on the number of articles being reviewed. Cochrane reviewers can access EPPI-Reviewer using their Cochrane subscription details.

EPPI-Centre has other tools for facilitating the systematic review process, including coding guidelines and data management tools.

CADIMA

CADIMA is a free, online, open access review management tool, developed to facilitate research synthesis and structure documentation of the outcomes.

The Julius Kühn Institute and the Collaboration for Environmental Evidence established the software programme to support and guide users through the entire systematic review process, including protocol development, literature searching, study selection, critical appraisal, and documentation of the outcomes. The flexibility in choosing the steps also makes CADIMA suitable for conducting systematic mapping and rapid reviews.

CADIMA was initially developed for research questions in agriculture and environment but it is not limited to these, and as such, can be used for managing review processes in other disciplines. It enables users to export files and work offline.

The software allows for statistical analysis of the collated data using the R statistical software. Unlike EPPI-Reviewer, CADIMA does not have a built-in search engine to allow for searching in literature databases like PubMed.

DistillerSR

DistillerSR is online software maintained by the Canadian company Evidence Partners, which specialises in literature review automation. It provides a collaborative platform for every stage of literature review management. The framework is flexible and can accommodate literature reviews of different sizes, and it is configurable to different data curation procedures, workflows and reporting standards. The platform integrates the features needed for screening, quality assessment, data extraction and reporting, and provides configurable forms in standard formats for data extraction.

The software uses artificial intelligence (AI)-enabled technologies in priority screening, which shortens the screening process by reranking the most relevant references nearer to the top. It can also use AI as a second reviewer in quality control checks of studies screened by human reviewers.

DistillerSR is used to manage systematic reviews in various medical disciplines, surveillance, pharmacovigilance and public health reviews, including food and nutrition topics. The software does not support statistical analyses.

DistillerSR allows direct search and import of references from PubMed. It provides an add-on feature called LitConnect, which can be set to automatically import newly published references from data providers to keep reviews up to date while they are in progress.
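The priority screening idea described above, moving likely-relevant references nearer to the top, can be illustrated with a small Python sketch. This is not DistillerSR's algorithm, which the source does not detail. It is a deliberately simple word-weighting scheme, and every function name and example title here is hypothetical.

```python
import math
import re
from collections import Counter


def tokens(text: str) -> list[str]:
    """Split text into lowercase alphabetic words."""
    return re.findall(r"[a-z]+", text.lower())


def train(labelled: list[tuple[str, bool]]) -> dict[str, float]:
    """Learn a log-odds weight per word from (text, included?) screening decisions."""
    inc, exc = Counter(), Counter()
    for text, included in labelled:
        (inc if included else exc).update(set(tokens(text)))
    n_inc = sum(1 for _, included in labelled if included)
    n_exc = len(labelled) - n_inc
    # Add-one smoothing keeps words seen in only one class from getting infinite weight.
    return {
        word: math.log((inc[word] + 1) / (n_inc + 2))
        - math.log((exc[word] + 1) / (n_exc + 2))
        for word in set(inc) | set(exc)
    }


def rank(unscreened: list[str], weights: dict[str, float]) -> list[str]:
    """Order unscreened references so the highest-scoring ones come first."""
    def score(text: str) -> float:
        return sum(weights.get(word, 0.0) for word in set(tokens(text)))
    return sorted(unscreened, key=score, reverse=True)


# Invented titles standing in for screening decisions already made by a reviewer.
labelled = [
    ("Probiotic yoghurt trial in adults", True),
    ("Randomised trial of probiotic supplements", True),
    ("Tractor engine maintenance survey", False),
    ("Survey of engine oil prices", False),
]
unscreened = ["Engine noise survey", "Probiotic drink trial in children"]
ranked = rank(unscreened, train(labelled))
print(ranked[0])
```

In practice the ranking would be retrained as reviewers make more decisions, so the ordering improves while screening is still under way.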

The Systematic Review Toolbox

The Systematic Review Toolbox is a web-based catalogue of various tools, including software packages, which can assist with single or multiple tasks within the evidence synthesis process. Researchers can run a quick search or tailor a more sophisticated search by choosing their approach, budget, discipline, and preferred support features, to find the right tools for their research.


10 Best Literature Review Tools for Researchers

Boost your research game with these Best Literature Review Tools for Researchers! Uncover hidden gems, organize your findings, and ace your next research paper!

Researchers struggle to identify key sources, extract relevant information, and maintain accuracy while manually conducting literature reviews. This leads to inefficiency, errors, and difficulty in identifying gaps or trends in existing literature.


Top 10 Literature Review Tools for Researchers: In A Nutshell (2023)

| # | Tool | Best for |
| --- | --- | --- |
| 1. | Semantic Scholar | Accessing and analyzing scholarly literature, with a focus on AI and semantic analysis |
| 2. | Elicit | Extracting, organizing, and synthesizing information from various sources for efficient data analysis |
| 3. | Scite.Ai | Determining the credibility and reliability of research articles to support evidence-based decision-making |
| 4. | DistillerSR | Streamlining literature screening, study selection, and data extraction |
| 5. | Rayyan | Efficient screening and selection of research outputs |
| 6. | Consensus | Working together on, annotating, and discussing research papers in real time, fostering team collaboration and knowledge sharing |
| 7. | RAx | Efficient literature search and analysis: identifying relevant articles, saving time, and improving the quality of research |
| 8. | Lateral | Discovering relevant scientific articles and identifying potential research collaborators based on user interests and preferences |
| 9. | Iris AI | Exploring and mapping the existing literature, identifying knowledge gaps, and generating research questions |
| 10. | Scholarcy | Extracting key information from research papers, aiding comprehension and saving time |

#1. Semantic Scholar – A free, AI-powered research tool for scientific literature

Not all scholarly content may be indexed, and occasional false positives or inaccurate associations can occur. Furthermore, the tool primarily focuses on computer science and related fields, potentially limiting coverage in other disciplines.

#2. Elicit – Research assistant using language models like GPT-3

Elicit is a game-changing literature review tool that has gained popularity among researchers worldwide. With its user-friendly interface and extensive database of scholarly articles, it streamlines the research process, saving time and effort.

However, users should be cautious when using Elicit. It is important to verify the credibility and accuracy of the sources found through the tool, as the database encompasses a wide range of publications.

Additionally, occasional glitches in the search function have been reported, leading to incomplete or inaccurate results. While Elicit offers tremendous benefits, researchers should remain vigilant and cross-reference information to ensure a comprehensive literature review.

#3. Scite.Ai – Your personal research assistant

Scite.Ai is a popular literature review tool that revolutionizes the research process for scholars. With its innovative citation analysis feature, researchers can evaluate the credibility and impact of scientific articles, making informed decisions about their inclusion in their own work.

However, while Scite.Ai offers numerous advantages, there are a few aspects to be cautious about. As with any data-driven tool, occasional errors or inaccuracies may arise, necessitating researchers to cross-reference and verify results with other reputable sources.


#4. DistillerSR – Literature Review Software

Despite occasional technical glitches reported by some users, the developers actively address these issues through updates and improvements, ensuring a better user experience.

#5. Rayyan – AI Powered Tool for Systematic Literature Reviews

However, it’s important to be aware of a few aspects. The free version of Rayyan has limitations, and upgrading to a premium subscription may be necessary for additional functionalities.

#6. Consensus – Use AI to find you answers in scientific research

With Consensus, researchers can save significant time by efficiently organizing and accessing relevant research material.

Consensus offers both free and paid plans.

#7. RAx – AI-powered reading assistant

#8. Lateral – Advance your research with AI

Additionally, researchers must be mindful of potential biases introduced by the tool’s algorithms and should critically evaluate and interpret the results.

#9. Iris AI – Introducing the researcher workspace

Researchers are drawn to this tool because it saves valuable time by automating the tedious task of literature review and provides comprehensive coverage across multiple disciplines.

#10. Scholarcy – Summarize your literature through AI

Scholarcy’s ability to extract key information and generate concise summaries makes it an attractive option for scholars looking to quickly grasp the main concepts and findings of multiple papers.

Scholarcy’s automated summarization may not capture the nuanced interpretations or contextual information presented in the full text.

Final Thoughts

In conclusion, conducting a comprehensive literature review is a crucial aspect of any research project, and the availability of reliable and efficient tools can greatly facilitate this process for researchers. This article has explored the top 10 literature review tools that have gained popularity among researchers.

Q1. What are literature review tools for researchers?

Q2. What criteria should researchers consider when choosing literature review tools?

When choosing literature review tools, researchers should consider factors such as the tool’s search capabilities, database coverage, user interface, collaboration features, citation management, annotation and highlighting options, integration with reference management software, and data extraction capabilities.

Q3. Are there any literature review tools specifically designed for systematic reviews or meta-analyses?

Meta-analysis support: Some literature review tools include statistical analysis features that assist in conducting meta-analyses. These features can help calculate effect sizes, perform statistical tests, and generate forest plots or other visual representations of the meta-analytic results.
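As a rough illustration of what such statistical features compute, the following Python sketch pools study effect sizes with a fixed-effect, inverse-variance model and reports Cochran's Q and the I² heterogeneity statistic. It is a minimal sketch under stated assumptions, not the implementation of any tool named in this article: the function name and the example numbers are hypothetical, and a real review would normally use an established statistics package and consider a random-effects model.

```python
import math


def fixed_effect_meta(effects: list[float], variances: list[float]) -> dict:
    """Pool effect sizes with inverse-variance weights (fixed-effect model)."""
    weights = [1.0 / v for v in variances]
    total_weight = sum(weights)
    pooled = sum(w * y for w, y in zip(weights, effects)) / total_weight
    se = math.sqrt(1.0 / total_weight)
    # Cochran's Q: weighted squared deviations of each study from the pooled effect.
    q = sum(w * (y - pooled) ** 2 for w, y in zip(weights, effects))
    df = len(effects) - 1
    # I^2: share of total variation attributed to heterogeneity, floored at zero.
    i2 = max(0.0, (q - df) / q) * 100 if q > 0 else 0.0
    return {
        "pooled": pooled,
        "se": se,
        "ci95": (pooled - 1.96 * se, pooled + 1.96 * se),
        "Q": q,
        "I2": i2,
    }


# Made-up effect sizes and variances for three studies.
result = fixed_effect_meta(effects=[0.2, 0.4, 0.6], variances=[0.04, 0.04, 0.04])
print(round(result["pooled"], 3), round(result["Q"], 3), round(result["I2"], 1))
```

The pooled estimate and its confidence interval are the values a forest plot would show in its summary row, with one row per study above it.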

Q4. Can literature review tools help with organizing and annotating collected references?

Integration with citation managers: Some literature review tools integrate with popular citation managers like Zotero, Mendeley, or EndNote, allowing seamless transfer of references and annotations between platforms.

By leveraging these features, researchers can streamline the organization and annotation of their collected references, making it easier to retrieve relevant information during the literature review process.


Systematic Review


Steps of a Systematic Review


Forms and templates

Image: David Parmenter's Shop

- PICO Template

- Inclusion/Exclusion Criteria

- Database Search Log

- Review Matrix

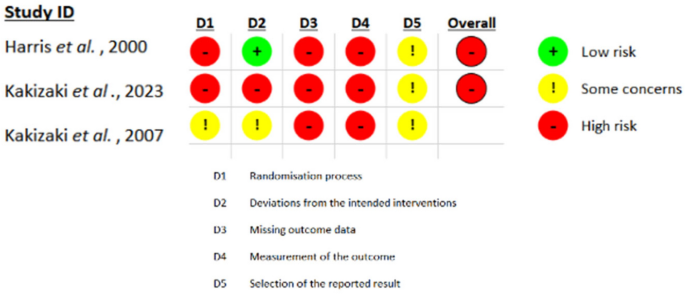

- Cochrane Tool for Assessing Risk of Bias in Included Studies

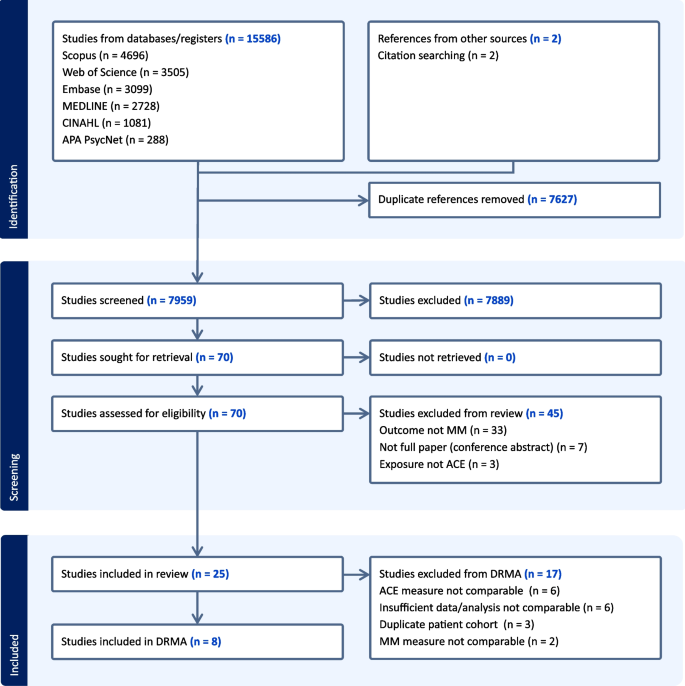

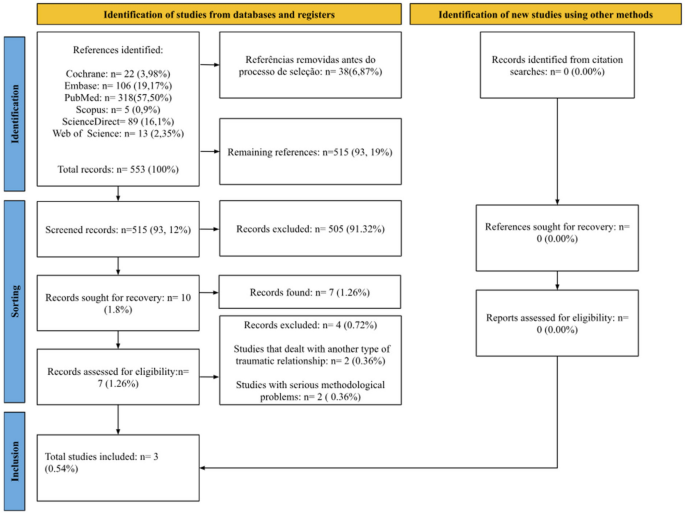

• PRISMA Flow Diagram - Record the numbers of retrieved references and included/excluded studies. You can use the Create Flow Diagram tool to automate the process.

• PRISMA Checklist - Checklist of items to include when reporting a systematic review or meta-analysis

PRISMA 2020 and PRISMA-S: Common Questions on Tracking Records and the Flow Diagram

- PROSPERO Template

- Manuscript Template

- Steps of SR (text)

- Steps of SR (visual)

- Steps of SR (PIECES)

- Methods guide for effectiveness and comparative effectiveness reviews (2017)

- Finding what works in health care: Standards for systematic reviews (2011)

- Systematic reviews: CRD’s guidance for undertaking reviews in health care (2008)

1. Identify your research question. Formulate a clear, well-defined research question of appropriate scope. Define your terminology. Find existing reviews on your topic to inform the development of your research question, identify gaps, and confirm that you are not duplicating the efforts of previous reviews. Consider using a framework to define your question scope, and record your search terms under each concept.

It is a good idea to register your protocol in a publicly accessible way. This will help avoid other people completing a review on your topic. Similarly, before you start a systematic review, it is worth checking the different registries to confirm that nobody else has already registered a protocol on the same topic. Options for registering or sharing a protocol include:

- Systematic reviews of health care and clinical interventions

- Systematic reviews of the effects of social interventions

- CAMARADES (Collaborative Approach to Meta-Analysis and Review of Animal Data from Experimental Studies)

- The protocol is published immediately and subjected to open peer review. When two reviewers approve it, the paper is sent to Medline, Embase and other databases for indexing.

- Upload a protocol for your scoping review

- Systematic reviews of healthcare practices to assist in the improvement of healthcare outcomes globally

- OSF: registering a protocol on OSF creates a frozen, time-stamped record of the protocol, thus ensuring a level of transparency and accountability for the research. There are no limits to the types of protocols that can be hosted on OSF.

- PROSPERO: International prospective register of systematic reviews. This is the primary database for registering systematic review protocols and searching for published protocols. PROSPERO accepts protocols from all disciplines (e.g., psychology, nutrition) with the stipulation that they must include health-related outcomes.

- Similar to PROSPERO. Based in the UK, fee-based service, quick turnaround time.

- Submit a pre-print, or a protocol for a scoping review.

- Share your search strategy and research protocol. No limit on the format, size, access restrictions or license.

2. Clearly state the criteria you will use to determine whether or not a study will be included in your search. Consider study populations, study design, intervention types, comparison groups, and measured outcomes. Use some database-supplied limits such as language, dates, humans, female/male, age groups, and publication/study types (randomized controlled trials, etc.).

3. Run your searches in the databases relevant to your topic. Work with a librarian to help you design comprehensive search strategies across a variety of databases. Approach the grey literature methodically and purposefully. Collect ALL of the retrieved records from each search into a reference manager prior to screening.

4. Start with a title/abstract screening to remove studies that are clearly not related to your topic. Use your inclusion/exclusion criteria to screen the full text of studies. It is highly recommended that two independent reviewers screen all studies, resolving areas of disagreement by consensus. You can export your EndNote results into screening software for this step.

5. Use a data extraction tool, such as systematic review software, to extract all relevant data from each included study. It is recommended that you pilot your data extraction tool to determine if other fields should be included or existing fields clarified.

6. Risk of Bias (Quality) Assessment. Use a Risk of Bias tool to assess the potential biases of studies in regards to study design and other factors. You can adapt existing tools to best meet the needs of your review, depending on the types of studies included.

7. Clearly present your findings, including detailed methodology (such as search strategies used, selection criteria, etc.) such that your review can be easily updated in the future with new research findings. Perform a meta-analysis, if the studies allow. Provide recommendations for practice and policy-making if sufficient, high quality evidence exists, or future directions for research to fill existing gaps in knowledge or to strengthen the body of evidence. For more information, see https://doi.org/10.2450/2012.0247-12. Writing aids for this step include:

- Get some inspiration and find some terms and phrases for writing your manuscript

- Automated high-quality spelling, grammar and rephrasing corrections using artificial intelligence (AI) to improve the flow of your writing. Free and subscription plans available.

8. Find the best journal to publish your work. Identifying the best journal to submit your research to can be a difficult process. To help you choose where to submit, insert your title and abstract into a journal-finder tool.

Adapted from A Guide to Conducting Systematic Reviews: Steps in a Systematic Review by Cornell University Library
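The recommendation that two independent reviewers screen all studies and resolve disagreements by consensus can be supported with a small script. The Python sketch below is illustrative only: it assumes each reviewer's decisions are held in a dictionary keyed by a hypothetical record ID, and it simply lists the records that need a consensus discussion.

```python
def compare_decisions(reviewer_a: dict[str, str], reviewer_b: dict[str, str]):
    """Split records screened by both reviewers into agreements and conflicts."""
    shared = sorted(set(reviewer_a) & set(reviewer_b))
    agreed = {rid: reviewer_a[rid] for rid in shared if reviewer_a[rid] == reviewer_b[rid]}
    conflicts = [rid for rid in shared if reviewer_a[rid] != reviewer_b[rid]]
    # Raw agreement rate: share of jointly screened records with matching decisions.
    agreement_rate = len(agreed) / len(shared) if shared else 0.0
    return agreed, conflicts, agreement_rate


# Made-up decisions from two reviewers working independently.
reviewer_a = {"r1": "include", "r2": "exclude", "r3": "include", "r4": "exclude"}
reviewer_b = {"r1": "include", "r2": "include", "r3": "include", "r4": "exclude"}

agreed, conflicts, agreement_rate = compare_decisions(reviewer_a, reviewer_b)
print(conflicts, agreement_rate)
```

Only the records in `conflicts` need discussion, and the agreed decisions can go straight into the screening log.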

This diagram illustrates in a visual way and in plain language what review authors actually do in the process of undertaking a systematic review.

This diagram illustrates what is actually in a published systematic review and gives examples from the relevant parts of a systematic review housed online on The Cochrane Library. It will help you to read or navigate a systematic review.

Source: Cochrane Consumers and Communications (infographics are free to use and licensed under Creative Commons)

Check the following visual resources titled "What Are Systematic Reviews?"

- Video with closed captions available

- Animated Storyboard

The methods of the systematic review are generally decided before conducting it.

Source: Foster, M. (2018). Systematic reviews service: Introduction to systematic reviews. Retrieved September 18, 2018.


- Last Updated: Jul 11, 2024 6:38 AM

- URL: https://lib.guides.umd.edu/SR

♨️ A step-by-step process

Using the PRISMA 2020 (Preferred Reporting Items for Systematic Reviews and Meta-Analyses) guidelines involves a step-by-step process to ensure that your systematic review or meta-analysis is reported transparently and comprehensively. Below are the key steps to follow when using PRISMA 2020:

1. Understand the PRISMA 2020 Checklist: Familiarize yourself with the PRISMA 2020 Checklist and its 27 essential items. You can access the checklist and an explanation of each item from the official PRISMA website or publication.

2. Plan Your Systematic Review: Before starting your review, clearly define your research question, objectives, and inclusion/exclusion criteria for selecting studies. Ensure that your research question aligns with the PRISMA 2020 framework.

3. Develop a Protocol: Create a systematic review protocol that outlines the methodology you'll use, including search strategies, data extraction methods, and the approach to assessing risk of bias (if applicable). Register your protocol on a relevant platform like PROSPERO.

4. Conduct the Literature Search: Search for relevant studies using a systematic and comprehensive approach. Document the search strategy, databases used, search terms, and any filters applied. Ensure that your search covers the time period and study designs specified in your protocol.

5. Study Selection: Implement your inclusion/exclusion criteria to screen and select studies. Maintain detailed records of the screening process, including reasons for exclusion.

6. Data Extraction: Extract data from the selected studies using a predefined template. Include information on study characteristics, outcomes, and any other relevant data points. Ensure that your data extraction process is consistent and well-documented.

7. Risk of Bias Assessment: If applicable, assess the risk of bias in the included studies. Use appropriate tools or criteria and clearly report the results of the assessment.

8. Data Synthesis and Meta-Analysis: If relevant, conduct data synthesis and meta-analysis. Follow established statistical methods and guidelines for pooling data, calculating effect sizes, and assessing heterogeneity.

9. Report According to PRISMA 2020: When writing your systematic review or meta-analysis manuscript, ensure that you follow the PRISMA 2020 Checklist. Address each of the 27 items in the checklist in your manuscript. This includes providing clear information on your research question, search strategy, inclusion/exclusion criteria, data extraction process, risk of bias assessment, and results.

10. Transparency and Supplementary Materials: Provide supplementary materials such as a PRISMA flow diagram showing the study selection process and a summary table of included studies. These add to the transparency of your review.

11. Peer Review and Revision: Submit your manuscript to a peer-reviewed journal that accepts systematic reviews and meta-analyses. Be prepared to respond to reviewers' comments and make necessary revisions to adhere to PRISMA 2020.

12. Publish and Share: Once your systematic review or meta-analysis is accepted and published, consider sharing it on platforms like PROSPERO or other relevant databases for greater visibility.

Throughout the process, maintaining transparency, consistency, and adherence to the PRISMA 2020 guidelines will help ensure that your systematic review or meta-analysis is of high quality and can be effectively used by researchers, policymakers, and practitioners in your field.
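The record keeping in the study selection step, and the flow diagram mentioned under supplementary materials, both come down to consistent counting. The Python sketch below shows one way to keep those tallies. It is a simplified illustration, not an official PRISMA tool: the class and field names are hypothetical, the numbers are invented, and it omits boxes of the full PRISMA 2020 diagram such as reports not retrieved.

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class FlowCounts:
    """Running tallies for a simplified PRISMA-style flow diagram."""
    identified: int                    # records retrieved from all searches
    duplicates_removed: int            # records dropped before screening
    excluded_title_abstract: int = 0   # records excluded at title/abstract stage
    full_text_exclusions: Counter = field(default_factory=Counter)  # reason -> count

    def exclude_full_text(self, reason: str) -> None:
        """Record one full-text exclusion together with its reason."""
        self.full_text_exclusions[reason] += 1

    @property
    def screened(self) -> int:
        return self.identified - self.duplicates_removed

    @property
    def full_text_assessed(self) -> int:
        return self.screened - self.excluded_title_abstract

    @property
    def included(self) -> int:
        return self.full_text_assessed - sum(self.full_text_exclusions.values())


# Invented numbers for illustration.
flow = FlowCounts(identified=1200, duplicates_removed=200, excluded_title_abstract=900)
for reason, count in [("wrong population", 40), ("wrong outcome", 35)]:
    for _ in range(count):
        flow.exclude_full_text(reason)

print(flow.screened, flow.full_text_assessed, flow.included)
print(dict(flow.full_text_exclusions))
```

Deriving each box from the previous one, rather than typing the numbers in by hand, makes it harder for the reported counts to drift out of step with each other.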


COLLABORATE ON YOUR REVIEWS WITH ANYONE, ANYWHERE, ANYTIME

Save precious time and maximize your productivity with a Rayyan membership. Receive training, priority support, and access features to complete your systematic reviews efficiently.

Rayyan Teams+ makes your job easier. It includes VIP Support, AI-powered in-app help, and powerful tools to create, share and organize systematic reviews, review teams, searches, and full-texts.

RESEARCHERS

Rayyan makes collaborative systematic reviews faster, easier, and more convenient. Training, VIP support, and access to new features maximize your productivity. Get started now!

Over 1 billion reference articles reviewed by research teams, and counting...

Intelligent, scalable and intuitive.

Rayyan understands language, learns from your decisions and helps you work quickly through even your largest systematic literature reviews.


Solutions for Organizations and Businesses

Rayyan Enterprise and Rayyan Teams+ make it faster, easier and more convenient for you to manage your research process across your organization.

- Accelerate your research across your team or organization and save valuable researcher time.

- Build and preserve institutional assets, including literature searches, systematic reviews, and full-text articles.

- Onboard team members quickly with access to group trainings for beginners and experts.

- Receive priority support to stay productive when questions arise.


Join now to learn why Rayyan is already trusted by more than 500,000 researchers

Individual plans, teams plans.

For early career researchers just getting started with research.

Free forever

- 3 Active Reviews

- Invite Unlimited Reviewers

- Import Directly from Mendeley

- Industry Leading De-Duplication

- 5-Star Relevance Ranking

- Advanced Filtration Facets

- Mobile App Access

- 100 Decisions on Mobile App

- Standard Support

- Revoke Reviewer

- Online Training

- PICO Highlights & Filters

- PRISMA (Beta)

- Auto-Resolver

- Multiple Teams & Management Roles

- Monitor & Manage Users, Searches, Reviews, Full Texts

- Onboarding and Regular Training

Professional

For researchers who want more tools for research acceleration.

per month, billed annually

- Unlimited Active Reviews

- Unlimited Decisions on Mobile App

- Priority Support

- Auto-Resolver

For currently enrolled students with valid student ID.

per month, billed quarterly

For a team that wants professional licenses for all members.

per month, per user, billed annually

- Single Team

- High Priority Support

For teams that want support and advanced tools for members.

- Multiple Teams

- Management Roles

For organizations who want access to all of their members.

Annual Subscription

Contact Sales

- Organizational Ownership

- For an organization or a company

- Access to all the premium features such as PICO Filters, Auto-Resolver, PRISMA and Mobile App

- Store and Reuse Searches and Full Texts

- A management console to view, organize and manage users, teams, review projects, searches and full texts

- Highest tier of support – Support via email, chat and AI-powered in-app help

- GDPR Compliant

- Single Sign-On

- API Integration

- Training for Experts

- Training Sessions Students Each Semester

- More options for secure access control


Great usability and functionality. Rayyan has saved me countless hours. I even received timely feedback from staff when I did not understand the capabilities of the system, and was pleasantly surprised with the time they dedicated to my problem. Thanks again!

This is a great piece of software. It has made the independent viewing process so much quicker. The whole thing is very intuitive.

Rayyan makes ordering articles and extracting data very easy. A great tool for undertaking literature and systematic reviews!

Excellent interface to do title and abstract screening. Also helps to keep track of the reasons for exclusion from the review. That too in a blinded manner.

Rayyan is a fantastic tool to save time and improve systematic reviews!!! It has changed my life as a researcher!!! thanks

Easy to use, friendly, has everything you need for cooperative work on the systematic review.

Rayyan makes life easy in every way when conducting a systematic review and it is easy to use.

- Open access

- Published: 01 December 2022

The Systematic Review Toolbox: keeping up to date with tools to support evidence synthesis

- Eugenie Evelynne Johnson ORCID: orcid.org/0000-0003-3324-7141 1 , 2 ,

- Hannah O’Keefe 1 , 2 ,

- Anthea Sutton 3 &

- Christopher Marshall 4

Systematic Reviews volume 11 , Article number: 258 ( 2022 ) Cite this article


The Systematic Review (SR) Toolbox was developed in 2014 to collate tools that can be used to support the systematic review process. Since its inception, the breadth of evidence synthesis methodologies has expanded greatly. This work describes the process of updating the SR Toolbox in 2022 to reflect these changes in evidence synthesis methodology. We also briefly analysed included tools and guidance to identify any potential gaps in what is currently available to researchers.

We manually extracted all guidance and software tools contained within the SR Toolbox in February 2022. A single reviewer, with a second checking a proportion, extracted and analysed information from records contained within the SR Toolbox using Microsoft Excel. Using this spreadsheet and Microsoft Access, the SR Toolbox was updated to reflect expansion of evidence synthesis methodologies and brief analysis conducted.

The updated version of the SR Toolbox was launched on 13 May 2022, with 235 software tools and 112 guidance documents included. Regarding review families, most software tools ( N = 223) and guidance documents ( N = 78) were applicable to systematic reviews. However, there were fewer tools and guidance documents applicable to reviews of reviews ( N = 66 and N = 22, respectively), while qualitative reviews were less served by guidance documents ( N = 19). In terms of review production stages, most guidance documents surrounded quality assessment ( N = 70), while software tools related to searching and synthesis ( N = 84 and N = 82, respectively). There appears to be a paucity of tools and guidance relating to stakeholder engagement ( N = 2 and N = 3, respectively).

Conclusions

The SR Toolbox provides a platform for those undertaking evidence syntheses to locate guidance and software tools to support different aspects of the review process across multiple review types. However, this work has also identified potential gaps in guidance and software that could inform future research.


Introduction

The Systematic Review Toolbox (SR Toolbox) was developed in 2014 by Christopher Marshall (CM) as part of his PhD surrounding tools that can be used to support the systematic review process within software engineering [ 1 ]. Whilst originally developed for the field of computer science, the methodologies for conducting systematic reviews and evidence synthesis are applicable across disciplines. Therefore, the scope of the SR Toolbox was expanded to include health topics. Its aim is to assist researchers by providing an open, free and searchable web-based catalogue of tools and guidance papers that assist with various tasks within the systematic review and wider evidence synthesis process. The SR Toolbox is regularly maintained by conducting a specialised search on MEDLINE; the results are screened against defined inclusion and exclusion criteria by a single editor and checked by a second editor (see Additional file 1 : Supplementary Material). Guidance and software tools that meet the eligibility criteria are added to the SR Toolbox on a rolling basis.

In January 2022, the SR Toolbox website gained approximately 28,500 hits and 6100 visits from around 4500 unique visitors, showing the popularity of the platform and its potential reach to researchers looking to find tools and guidance for use within evidence syntheses. However, since the initial launch of the SR Toolbox in 2014, there has been an increase in the number and types of evidence syntheses being produced. Many systematic review typologies and taxonomies have been developed since the SR Toolbox’s inception, covering large numbers of review types. For example, Booth et al. (2016) identified 22 review types [ 2 ], Cook et al. (2017) identified 9 [ 3 ], while the typology by Munn et al. (2018) suggested there were 10 different review types [ 4 ].

More recently, a taxonomy proposed by Sutton et al. (2019), incorporating research from several previously published works, suggests that 48 review types exist [ 5 ], which can be broadly categorised into seven review “families”:

Traditional reviews (that tend to use a purposive sampling approach as opposed to a systematic approach);

Systematic reviews;

Review of reviews;

Rapid reviews;

Qualitative systematic reviews;

Mixed-methods reviews; and

Purpose-specific reviews (i.e. reviews that are tailored to individual needs, such as Health Technology Assessment).

In the version of the SR Toolbox prior to 2022, the ability to search by review type was limited and not reflective of the expanding evidence synthesis landscape. The SR Toolbox’s ability to suggest support for the varying demands of different review types was therefore limited.

Additionally, although there is now a large array of tools available to support the process of conducting systematic reviews and other forms of evidence syntheses, a potential barrier to adoption is inexperience with some of the principles underlying these tools, such as machine learning [ 6 ]. In the iteration of the SR Toolbox maintained until 2022, software tools were searchable according to their underlying approach (e.g. text mining, machine learning, visualisation), discipline (healthcare, social sciences, software engineering or multidisciplinary), and their financial cost (e.g. completely free or payment required). “Other” tools were only searchable by discipline and type (e.g. guideline, reporting standards). As such, for those with less experience or knowledge of the processes underpinning software tools, effective searching of the SR Toolbox could potentially be challenging.

We therefore set out to update the SR Toolbox interface, so it continues to be able to respond to the needs of users within a changing and continually developing evidence synthesis landscape, as well as being more accessible to a wide variety of researchers. In this paper, we describe our methods for reconstructing the platform by conducting a mapping exercise of all tools within the SR Toolbox to re-categorise them and check their validity. In addition, we also describe a brief analysis based on the mapping exercise to identify review types and processes that are both well-served and underserved by the tools currently contained within the platform.

SR Toolbox update methods

In February 2022, we embarked on a mapping exercise of all software and other tools indexed within the SR Toolbox to inform the restructuring of the platform. A coding tool was developed in Excel to extract data relevant to each tool indexed within the SR Toolbox to that point. Domains were either completed using free text or ticked using a check box. Details of domains assessed and how they were coded are detailed in Table 1 .

Part of the coding framework was adapted from the review family taxonomy proposed by Sutton et al. (2019) [ 5 ]. However, we did not include traditional reviews and purpose-specific reviews within the mapping exercise. This is because traditional reviews as described by the Sutton taxonomy were not considered systematic enough to be within scope for the SR Toolbox, while purpose-specific reviews were too broad and (potentially) too diverse to include in a systematic manner, as they include a wide variety of evidence syntheses including scoping reviews, mapping reviews and Health Technology Assessment [ 5 ]. Although both scoping reviews and mapping reviews are part of the purpose-specific family within the Sutton taxonomy [ 5 ], we separated these into their own categories. This is because it has been noted that scoping reviews are growing in number [ 7 ], while mapping reviews are increasingly being conducted as a way of visually representing the breadth of a body of evidence, despite being rare until as recently as 2010 [ 8 ]. Mapping reviews can also be considered distinct from scoping reviews: although both present a broad overview of evidence relating to a topic, mapping reviews are highly visual in nature [ 9 ]. Furthermore, it has been posited that scoping reviews can act as a precursor to a predefined systematic review, whereas mapping reviews may aim to identify research areas for systematic review or gaps in the evidence base [ 5 ].

All records currently contained within the SR Toolbox up to February 2022 ( n = 352) were manually extracted and coded according to the framework by a single reviewer (EEJ). The same reviewer checked all current records to ensure that hyperlinks were not broken and that tools still appeared to be active. If links to software tools were no longer active and could not be located elsewhere, these were excluded from the mapping exercise and, subsequently, the SR Toolbox ( N = 5). Tools and guidance could be coded to more than one review family and more than one stage of a review, where appropriate. A second reviewer (HOK) checked a small percentage of the records coded for accuracy before the spreadsheet was imported into a Microsoft Access database.

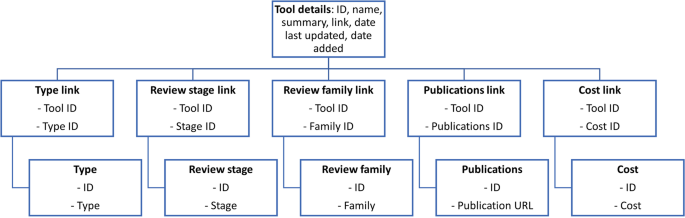

Microsoft Access databases are relational, meaning that relationships can be built between tables. We included a table for tool details, tool type, review stage, review family, publications, and cost. The tool details table acted as the main reference point, with all other tables being related to it via interim linker tables (Fig. 1 ).

Fig. 1. Diagram of Microsoft Access framework
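The linker-table arrangement described above can be illustrated with a minimal sketch. It uses Python's built-in sqlite3 module in place of Microsoft Access, and every table and column name here (tool_details, review_family, tool_family_link) is a hypothetical stand-in rather than the SR Toolbox's actual schema. Only one of the related tables is shown; the others would be linked to the main table in the same way.

```python
import sqlite3


def build_schema(conn):
    """Create a main table, one lookup table and the linker table joining them."""
    conn.executescript(
        """
        CREATE TABLE tool_details (
            tool_id INTEGER PRIMARY KEY,
            name    TEXT NOT NULL
        );
        CREATE TABLE review_family (
            family_id INTEGER PRIMARY KEY,
            label     TEXT NOT NULL UNIQUE
        );
        -- Linker table: one row per (tool, family) pair, so a tool can be
        -- coded to more than one review family.
        CREATE TABLE tool_family_link (
            tool_id   INTEGER NOT NULL REFERENCES tool_details(tool_id),
            family_id INTEGER NOT NULL REFERENCES review_family(family_id),
            PRIMARY KEY (tool_id, family_id)
        );
        """
    )


def families_for(conn, tool_name):
    """Return the review families linked to a tool, in alphabetical order."""
    rows = conn.execute(
        """
        SELECT rf.label
        FROM tool_details AS td
        JOIN tool_family_link AS link ON link.tool_id = td.tool_id
        JOIN review_family AS rf ON rf.family_id = link.family_id
        WHERE td.name = ?
        ORDER BY rf.label
        """,
        (tool_name,),
    ).fetchall()
    return [row[0] for row in rows]


# Illustrative data only; not taken from the SR Toolbox.
conn = sqlite3.connect(":memory:")
build_schema(conn)
conn.execute("INSERT INTO tool_details VALUES (1, 'Example Tool')")
conn.executemany(
    "INSERT INTO review_family VALUES (?, ?)",
    [(1, "Systematic reviews"), (2, "Rapid reviews")],
)
conn.executemany("INSERT INTO tool_family_link VALUES (?, ?)", [(1, 1), (1, 2)])
print(families_for(conn, "Example Tool"))
```

Keeping the many-to-many relationship in a separate linker table means a tool's details are stored once, however many families or stages it is coded to.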

The tables contained in the local database in Access were exported as separate CSV files, then imported using phpMyAdmin to create the same database, online, in MySQL. Custom structured query language (SQL) statements, which accounted for any combination of user query, were hard coded into the website’s hypertext preprocessor (PHP) scripts. Furthermore, the graphical user interface that facilitates users in running advanced searches was updated to reflect the updated database and new tool categories.
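One way to handle any combination of user-selected search criteria is to assemble a parameterised query from whichever filters are supplied. The sketch below is a rough Python illustration of that idea, not the site's PHP code. It assumes a single flattened table called tools with hypothetical column names, which is a simplification of the linked tables described earlier.

```python
import sqlite3

# Hypothetical filter columns; only these may appear in a WHERE clause.
ALLOWED_FILTERS = {"tool_type", "review_stage", "review_family", "cost"}


def build_query(filters):
    """Build a parameterised SELECT for any subset of the allowed filters."""
    clauses, params = [], []
    for column in sorted(filters):
        if column not in ALLOWED_FILTERS:
            raise ValueError(f"unsupported filter: {column}")
        clauses.append(f"{column} = ?")  # the value is bound, never interpolated
        params.append(filters[column])
    sql = "SELECT name FROM tools"
    if clauses:
        sql += " WHERE " + " AND ".join(clauses)
    return sql + " ORDER BY name", params


def search(conn, filters):
    """Run the query built from the filters and return matching tool names."""
    sql, params = build_query(filters)
    return [row[0] for row in conn.execute(sql, params)]


# Illustrative data only.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE tools (name TEXT, tool_type TEXT, review_stage TEXT, "
    "review_family TEXT, cost TEXT)"
)
conn.executemany(
    "INSERT INTO tools VALUES (?, ?, ?, ?, ?)",
    [
        ("Tool A", "software", "screening", "systematic", "free"),
        ("Tool B", "software", "synthesis", "rapid", "paid"),
        ("Guide C", "guidance", "reporting", "systematic", "free"),
    ],
)
print(search(conn, {"cost": "free", "review_family": "systematic"}))
```

Checking column names against a fixed allow-list and binding values as parameters keeps user input out of the SQL text itself.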

Analysis methods

We undertook a basic analysis of the different software tools and guidance documents included within the SR Toolbox up to February 2022 in order to assess: what review families were being covered by the included tools; what review stages and aspects were being covered by the included tools; how up to date included software tools are; and the trajectory of research for guidance and reporting documents relating to evidence syntheses.

Using the same coding document developed in Excel for the mapping exercise described above, we filtered the spreadsheet to contain either relevant software tools or relevant guidance so they could be analysed as separate entities. From here, we tabulated the number of times tools or guidance documents were checked against each review family or review stage. We also added an additional column to the spreadsheet to indicate where tools or guidance documents could be applicable to multiple review families or multiple review stages; these were manually coded within the spreadsheet. The numbers tabulated from each of these exercises were used to create tables and graphs demonstrating the volume of tools in each category.
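The tabulation step can be sketched in a few lines of Python. This is an illustration under assumptions, not the authors' spreadsheet workflow: the record structure, the field name families and the example entries are all hypothetical.

```python
from collections import Counter


def tabulate(records, field):
    """Count how many records are checked against each category in a field."""
    counts = Counter()
    for record in records:
        counts.update(set(record[field]))  # set() guards against double ticks
    return counts


def count_multi(records, field):
    """Count records that are coded to more than one category in a field."""
    return sum(1 for record in records if len(set(record[field])) > 1)


# Illustrative records only; each lists the review families it was coded to.
records = [
    {"name": "Tool A", "families": ["systematic", "rapid"]},
    {"name": "Tool B", "families": ["systematic"]},
    {"name": "Tool C", "families": ["qualitative", "systematic"]},
]

family_counts = tabulate(records, "families")
print(family_counts["systematic"], count_multi(records, "families"))
```

Because a record can be coded to several categories, the per-category counts can sum to more than the number of records.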

SR Toolbox update

At the time of updating the SR Toolbox interface, there were 235 software tools and 112 guidance or reporting documents included within the platform. The new SR Toolbox interface was launched on 13 May 2022.

Analysis results

Table 2 documents the relevance of guidance documents and software tools contained within the SR Toolbox to different review families. Of the 235 software tools and 112 guidance documents currently contained within the SR Toolbox, 215 software tools (91.5%) and 61 guidance documents (54.5%) can be applicable to multiple review families. Most software tools ( N = 223) and guidance documents ( N = 78) are applicable to systematic reviews, though far fewer are applicable to reviews of reviews ( N = 66, 28.1% and N = 22, 19.6% respectively). Qualitative reviews were slightly better served in terms of software tools ( N = 108, 46%), but were the most under-served review family in terms of guidance documents ( N = 19, 17%).
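The percentages quoted above follow directly from the reported counts and the two totals (235 software tools, 112 guidance documents). As a quick arithmetic check, a short Python sketch with a hypothetical helper named share reproduces them:

```python
SOFTWARE_TOTAL = 235   # software tools in the SR Toolbox at the time of update
GUIDANCE_TOTAL = 112   # guidance or reporting documents


def share(count, total):
    """Return count as a percentage of total, rounded to one decimal place."""
    return round(100 * count / total, 1)


multi_family_software = share(215, SOFTWARE_TOTAL)      # multiple families
multi_family_guidance = share(61, GUIDANCE_TOTAL)
reviews_of_reviews_software = share(66, SOFTWARE_TOTAL)
reviews_of_reviews_guidance = share(22, GUIDANCE_TOTAL)
qualitative_software = share(108, SOFTWARE_TOTAL)
qualitative_guidance = share(19, GUIDANCE_TOTAL)

print(multi_family_software, multi_family_guidance)
```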

Table 3 shows the number of software tools and guidance documents contained within the SR Toolbox at the time of update in relation to the stage of the review production process they assist with. Seventy-five (32%) of the software tools were applicable to more than one review production stage, while only 16 (14.3%) guidance documents were applicable to multiple stages of the process. Guidance documents within the SR Toolbox are currently dominated by research relating to quality assessment ( N = 70; 62.5%), followed by guidelines for reporting reviews ( N = 26; 23.2%). There appears to be a paucity of software tools ( N = 2; 0.9%) and guidance ( N = 3; 2.7%) that relates to stakeholder engagement within the review process.

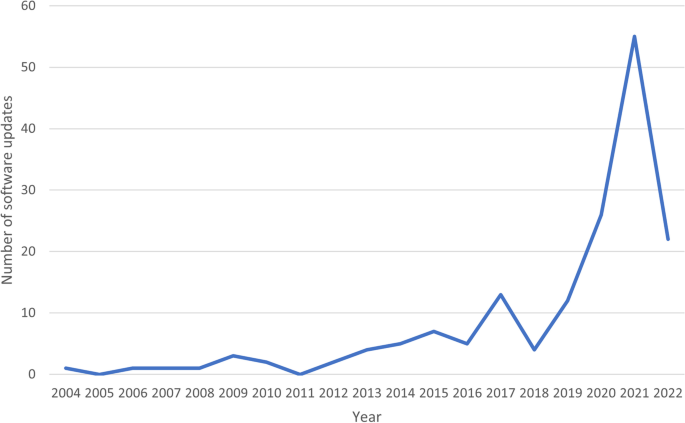

Figure 2 shows how up to date the software tools included within the SR Toolbox are. Most of the tools for which a new version was available have been updated within the past 4 years up to and including the first quarter of 2022 ( N = 115), with the most updates occurring in 2021 ( N = 51). However, although this is suggestive that most of the tools included in the SR Toolbox could be considered up to date, there were 71 software tools where we could not identify the latest update date (30.2% of all included software tools). We therefore cannot be certain that a relatively large proportion of software tools within the SR Toolbox are up to date.

Fig. 2. Number of updates for software tools included in the Toolbox by year ( N = 164)

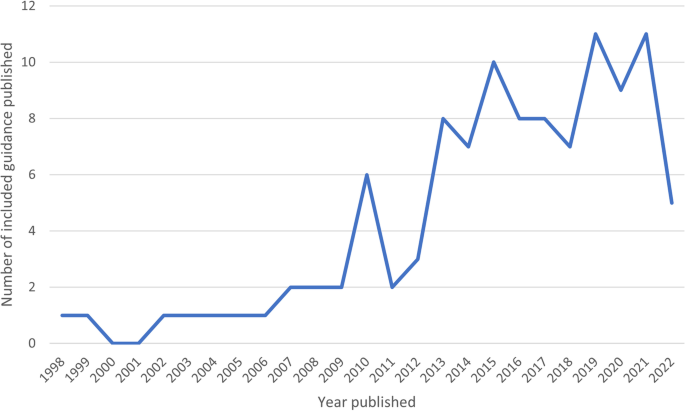

Similarly, Fig. 3 shows the number of guidance and reporting tools included within the SR Toolbox by the year in which they were published. Although it was not clear when four of the guidance documents were originally published or updated, this only represents a small proportion of the guidance included within the Toolbox (3.6%). The earliest guidance publication date included within the SR Toolbox dates to 1998. However, of all the guidance and reporting documents included within the SR Toolbox, the majority have been published since 2015 (63.9%). The greatest number of guidance documents or reporting tools were published in 2019 and 2021 (11 per year). Before beginning the SR Toolbox updating exercise, we had already identified five new eligible guidance and reporting documents published in 2022. These data suggest that there has been a steady increase in the number of publications offering guidance and reporting standards relating to systematic reviews and wider evidence syntheses since 1998, and that the rate of publication in this area has been particularly high since 2015.

Fig. 3. Number of guidelines and reporting frameworks included in the Toolbox published by year ( N = 108)

Most of the included software tools are free to use (181/235, 77%). Of the 21 software tools that required payment, 12 had a free trial available and 3 had a free version available. Similarly, most of the guidance documents are open access (96/112, 85.7%).

Summary of main results

The update of the SR Toolbox aims to provide a simple and easily navigable interface for researchers to discover guidance and software tools to help conduct systematic reviews and wider evidence synthesis projects. The new structure of the SR Toolbox, which incorporates the ability to search by review family and review stage, has been developed and implemented to make the platform easier to use for researchers and other stakeholders who are less familiar with the underlying computational concepts of tools. Stakeholders should therefore be better able to identify and access software and guidance that may assist them with their evidence synthesis projects.

Our brief analysis of tools included in the platform up to February 2022 suggests that many software tools and guidance documents currently within the SR Toolbox can potentially be applicable to multiple review families, though reviews of reviews and qualitative reviews may currently be less well served. Guidance documents largely focus on methods for critical appraisal, followed by reporting guidelines, with far fewer publications surrounding other aspects of the review production process. Additionally, software tools to support the systematic review process may be mostly well-maintained and up to date, though there is some uncertainty surrounding this. The trajectory of guidance and reporting frameworks for evidence syntheses being published has been steadily increasing and has seen a particular increase since 2015.

Strengths and limitations of this work

Well-defined categories were used to map the guidance and software tools, based on widely accepted published standards [ 5 ]. These categories were agreed upon by highly experienced systematic reviewers (EEJ and CM) and information specialists (HOK and AS). Two editors with considerable expertise in computational and data science (CM and HOK) were responsible for the construction of the updated SR Toolbox.

However, there are some limitations of this work. The initial mapping exercise was conducted by a single reviewer, with a second checking some records for accuracy. This may be considered a source of bias, as it is possible that there may be some minor inaccuracies in coding and charting of the tools and guidelines.

Potential areas for future research

As part of the mapping exercise for this work, we added a column in our Excel sheet to identify when the software tool or guideline was added to the SR Toolbox. This will allow us to determine the trajectory of publications and the rate at which new software tools are being added in the future more accurately.

This column may be one way of identifying areas for expansion or refinement within future iterations of the SR Toolbox. For example, there may also be an argument to further refine the ‘Other’ category in the SR Toolbox in future updates, particularly to highlight software tools and guidance relating to network meta-analyses and prognostic reviews. A 2016 review identified 456 network meta-analyses including at least four interventions [ 10 ], suggesting that the review type is increasing in number. Prognostic reviews have been formally adopted by Cochrane, with the first two Cochrane prognostic reviews published in 2018 [ 11 , 12 ], while there have also been calls for more prognostic reviews to be conducted in response to a growing amount of primary prognostic research [ 13 ].

Living systematic reviews have also been proposed as a contribution to evidence synthesis by providing high-quality reviews that are updated as new research in the area becomes available [ 14 ]. We discussed the inclusion of living systematic reviews as a standalone review category within the new iteration of the SR Toolbox, as there has been some evidence that machine learning has been used to support the production of these reviews [ 15 ], but currently the SR Toolbox does not contain any specific guidance or software tools relating to living systematic reviews. If software tools and guidelines become available for living systematic reviews, we will consider adding this review category to the Toolbox in the future.

More generally, the mapping exercise and subsequent analysis has highlighted some areas for further research and tool production. Tools and guidance to support reviews other than systematic reviews of intervention effectiveness may be needed, particularly for reviews of reviews and qualitative reviews. Additionally, there are also very few tools or guidelines relating to stakeholder engagement in the review production process. While general guidance on how to report patient and public involvement in research exists in the form of GRIPP2 [ 16 ], and the ACTIVE framework has been developed to describe stakeholder involvement in systematic reviews [ 17 ], there are currently few other frameworks or tools specifically designed to help researchers undertaking evidence syntheses to involve wider stakeholders in the process.

The updated version of the SR Toolbox is designed to be an easily-navigable interface to aid researchers in finding guidance and software tools to help conduct varying forms of evidence synthesis, informed by the evolution in evidence synthesis methodologies since its inception. Our analysis of the contents of the SR Toolbox has revealed that there are specific review families and stages of the review process that are currently well-served by guidance and software but that gaps remain surrounding others. Further investigation into these gaps may help researchers to conduct other types of review in future.

Availability of data and materials

The datasets used and/or analysed during the current study are available from the corresponding author on reasonable request.

Abbreviations

DTA: Diagnostic test accuracy

MEDLINE: Medical Literature Analysis and Retrieval System Online

PHP: Hypertext preprocessor

SQL: Structured Query Language

SR: Systematic review

References

1. Marshall C, Sutton A, O'Keefe H, Johnson E. The Systematic Review Toolbox. 2022. Available from: http://www.systematicreviewtools.com/ . Accessed Feb 2022.

2. Booth A, Noyes J, Flemming K, Gerhardus A, Wahlster P, van der Wilt GJ, et al. Guidance on choosing qualitative evidence synthesis methods for use in health technology assessments of complex interventions. 2016. Available from: https://www.integrate-hta.eu/wp-content/uploads/2016/02/Guidance-on-choosing-qualitative-evidence-synthesis-methods-for-use-in-HTA-of-complex-interventions.pdf . Accessed Feb 2022.

3. Cook CN, Nichols SJ, Webb JA, Fuller RA, Richards RM. Simplifying the selection of evidence synthesis methods to inform environmental decisions: a guide for decision makers and scientists. Biol Conserv. 2017;213:135–45.

4. Munn Z, Stern C, Aromataris E, et al. What kind of systematic review should I conduct? A proposed typology and guidance for systematic reviewers in the medical and health sciences. BMC Med Res Methodol. 2018;18(5). https://doi.org/10.1186/s12874-017-0468-4 .

5. Sutton A, Clowes M, Preston L, Booth A. Meeting the review family: exploring review types and associated information retrieval requirements. Health Inf Libr J. 2019;36:202–22.

6. Arno A, Elliott J, Wallace B, Turner T, Thomas J. The views of health guideline developers on the use of automation in health evidence synthesis. BMC Syst Rev. 2021;10(16).

7. Tricco AC, Lillie E, Zarin W, O'Brien K, Colquhoun H, Kastner M, et al. A scoping review on the conduct and reporting of scoping reviews. BMC Med Res Methodol. 2016;16(15).

8. Miake-Lye IM, Hempel S, Shanman R, Shekelle PG. What is an evidence map? A systematic review of published evidence maps and their definitions, methods, and products. BMC Syst Rev. 2016;5(28).

9. Snilstveit B, Vojtkova M, Bhavsar A, Stevenson J, Gaarder M. Evidence & gap maps: a tool for promoting evidence informed policy and strategic research agendas. J Clin Epidemiol. 2016;79:120–9.

10. Petropoulou M, Nikolakopoulou A, Veroniki A-A, Rios P, Vafaei A, Zarin W, et al. Bibliographic study showed improving statistical methodology of network meta-analyses published between 1999 and 2015. J Clin Epidemiol. 2017;82:20–8.

11. Westby MJ, Dumville JC, Stubbs N, Norman G, Wong JKF, Cullum N, et al. Protease activity as a prognostic factor for wound healing in venous leg ulcers. Cochrane Database Syst Rev. 2018;(9):Art. No. CD012841. https://doi.org/10.1002/14651858.CD012841.pub2 .

12. Richter B, Hemmingsen B, Metzendorf MI, Takwoingi Y. Development of type 2 diabetes mellitus in people with intermediate hyperglycaemia. Cochrane Database Syst Rev. 2018;(10).

13. Damen JAAG, Hooft L. The increasing need for systematic reviews of prognosis studies: strategies to facilitate review production and improve quality of primary research. Diagnostic and Prognostic Research. 2019;3(2).

14. Elliott JH, Turner T, Clavisi O, Thomas J, Higgins JPT, Mavergames C, et al. Living systematic reviews: an emerging opportunity to narrow the evidence-practice gap. PLoS Med. 2014;11(2):e1001603.

15. Millard T, Synnot A, Elliott J, Green S, McDonald S, Turner T. Feasibility and acceptability of living systematic reviews: results from a mixed-methods evaluation. BMC Syst Rev. 2019;8(325).

16. Staniszewska S, Brett J, Simera I, Seers K, Mockford C, Goodlad S, et al. GRIPP2 reporting checklists: tools to improve reporting of patient and public involvement in research. BMJ. 2017;358:j3453.

17. Pollock A, Campbell P, Struthers C, Synnot A, Nunn J, Hill S, et al. Development of the ACTIVE framework to describe stakeholder involvement in systematic reviews. J Health Serv Res Policy. 2019;24(4):245–55.


Acknowledgements

We did not receive any funding for this work.

Author information

Authors and affiliations.

Population Health Sciences Institute, Newcastle University, Newcastle upon Tyne, UK

Eugenie Evelynne Johnson & Hannah O’Keefe

NIHR Innovation Observatory, Newcastle University, Newcastle upon Tyne, UK

School of Health and Related Research (ScHARR), The University of Sheffield, Sheffield, UK

Anthea Sutton

York Health Economics Consortium, University of York, York, UK

Christopher Marshall


Contributions

EEJ undertook initial mapping of existing tools to new domains and contributed in writing and editing the manuscript. CM transferred initial mapping into database format, design, backend and frontend development of the new SR Toolbox and helped in editing the manuscript. AS helped in editing the manuscript. HOK provided assistance in initial mapping of existing tools, building the Access database, editing the manuscript. All authors read and approved the final manuscript.

Corresponding author

Correspondence to Eugenie Evelynne Johnson .

Ethics declarations

Ethics approval and consent to participate.

Not applicable.

Competing interests

The authors declare that they have no competing interests.

Additional information

Publisher’s note.

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Additional file 1: Supplementary material.

Eligibility criteria for SR Toolbox.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/ . The Creative Commons Public Domain Dedication waiver ( http://creativecommons.org/publicdomain/zero/1.0/ ) applies to the data made available in this article, unless otherwise stated in a credit line to the data.


About this article

Cite this article.

Johnson, E.E., O’Keefe, H., Sutton, A. et al. The Systematic Review Toolbox: keeping up to date with tools to support evidence synthesis. Syst Rev 11 , 258 (2022). https://doi.org/10.1186/s13643-022-02122-z


Received : 01 July 2022

Accepted : 05 November 2022

Published : 01 December 2022

DOI : https://doi.org/10.1186/s13643-022-02122-z


- Evidence synthesis

Systematic Reviews

ISSN: 2046-4053


Literature Review Tips & Tools

- Tips & Examples

Organizational Tools

- Bubbl.us Free online brainstorming/mindmapping tool that also has a free iPad app.

- Coggle Another free online mindmapping tool.

- Organization & Structure tips from Purdue University Online Writing Lab

- Literature Reviews from The Writing Center at University of North Carolina at Chapel Hill Gives several suggestions and descriptions of ways to organize your lit review.

Tools for systematic reviews.

- Cochrane Handbook for Systematic Reviews of Interventions "The Cochrane Handbook for Systematic Reviews of Interventions is the official guide that describes in detail the process of preparing and maintaining Cochrane systematic reviews on the effects of healthcare interventions. "

- Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) website "PRISMA is an evidence-based minimum set of items for reporting in systematic reviews and meta-analyses. PRISMA focuses on the reporting of reviews evaluating randomized trials, but can also be used as a basis for reporting systematic reviews of other types of research, particularly evaluations of interventions."

- PRISMA Flow Diagram Generator Free tool that will generate a PRISMA flow diagram from a CSV file (sample CSV template provided). Please cite as: Haddaway, N. R., Page, M. J., Pritchard, C. C., & McGuinness, L. A. (2022). PRISMA2020: An R package and Shiny app for producing PRISMA 2020-compliant flow diagrams, with interactivity for optimised digital transparency and Open Synthesis. Campbell Systematic Reviews, 18, e1230. https://doi.org/10.1002/cl2.1230

- Rayyan "Rayyan is a 100% FREE web application to help systematic review authors perform their job in a quick, easy and enjoyable fashion. Authors create systematic reviews, collaborate on them, maintain them over time and get suggestions for article inclusion."

- Covidence Covidence is a tool to help manage systematic reviews (and create PRISMA flow diagrams). UMass Amherst doesn't subscribe, but Covidence offers a free trial for 1 review of no more than 500 records. It is also set up for researchers to pay for each review.

- PROSPERO - Systematic Review Protocol Registry "PROSPERO accepts registrations for systematic reviews, rapid reviews and umbrella reviews. PROSPERO does not accept scoping reviews or literature scans. Sibling PROSPERO sites registers systematic reviews of human studies and systematic reviews of animal studies."

- Critical Appraisal Tools from JBI Joanna Briggs Institute at the University of Adelaide provides these checklists to help evaluate different types of publications that could be included in a review.

- Systematic Review Toolbox "The Systematic Review Toolbox is a community-driven, searchable, web-based catalogue of tools that support the systematic review process across multiple domains. The resource aims to help reviewers find appropriate tools based on how they provide support for the systematic review process. Users can perform a simple keyword search (i.e. Quick Search) to locate tools, a more detailed search (i.e. Advanced Search) allowing users to select various criteria to find specific types of tools and submit new tools to the database. Although the focus of the Toolbox is on identifying software tools to support systematic reviews, other tools or support mechanisms (such as checklists, guidelines and reporting standards) can also be found."

- Abstrackr Free, open-source tool that "helps you upload and organize the results of a literature search for a systematic review. It also makes it possible for your team to screen, organize, and manipulate all of your abstracts in one place." -From Center for Evidence Synthesis in Health

- SRDR Plus (Systematic Review Data Repository: Plus) An open-source tool for extracting, managing, and archiving data, developed by the Center for Evidence Synthesis in Health at Brown University.

- RoB 2 Tool (Risk of Bias for Randomized Trials) A revised Cochrane risk of bias tool for randomized trials


- Last Updated: Jul 30, 2024 9:23 AM

- URL: https://guides.library.umass.edu/litreviews

© 2022 University of Massachusetts Amherst • Site Policies • Accessibility

- University of Texas Libraries

Literature Reviews

Steps in the literature review process.

- What is a literature review?

- Define your research question

- Determine inclusion and exclusion criteria

- Choose databases and search

- Review Results

- Synthesize Results

- Analyze Results

- Librarian Support

- Artificial Intelligence (AI) Tools

- You may need to do some exploratory searching of the literature to get a sense of scope and to determine whether you need to narrow or broaden your focus

- Identify databases that provide the most relevant sources, and identify relevant terms (controlled vocabularies) to add to your search strategy

- Finalize your research question

- Think about relevant dates, geographies (and languages), methods, and conflicting points of view

- Conduct searches in the published literature via the identified databases

- Check to see if this topic has been covered in other disciplines' databases

- Examine the citations of on-point articles for keywords, authors, and previous research (via references), and use cited reference searching to find later work that cites them.

- Save your search results in a citation management tool (such as Zotero, Mendeley or EndNote)

- De-duplicate your search results

- Make sure that you've found the seminal pieces -- they have been cited many times, and their work is considered foundational

- Check with your professor or a librarian to make sure your search has been comprehensive

- Evaluate the strengths and weaknesses of individual sources and evaluate for bias, methodologies, and thoroughness

- Group your results into an organizational structure that will support why your research needs to be done, or that provides the answer to your research question

- Develop your conclusions

- Are there gaps in the literature?

- Where has significant research taken place, and who has done it?

- Is there consensus or debate on this topic?

- Which methodological approaches work best?

- For example: Background, Current Practices, Critics and Proponents, Where/How this study will fit in

- Organize your citations and focus on your research question and pertinent studies

- Compile your bibliography

Note: The first four steps are the best points at which to contact a librarian. Your librarian can help you determine the best databases to use for your topic, assess scope, and formulate a search strategy.
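The "de-duplicate your search results" step above is usually handled by a citation manager, but the idea can be sketched in a few lines of Python. This is a minimal illustration, not how Zotero, Mendeley or EndNote do it: the record fields (`title`, `year`, `doi`), the function names and the sample records are all assumptions made up for the example. Records are matched on DOI when one is present, otherwise on a normalized title plus year.

```python
import re


def normalize_title(title):
    """Lowercase a title and collapse punctuation and whitespace to single spaces."""
    return re.sub(r"[^a-z0-9]+", " ", title.lower()).strip()


def record_key(record):
    """Build a comparison key: the DOI if present, else normalized title plus year."""
    doi = (record.get("doi") or "").strip().lower()
    if doi:
        # Strip a resolver prefix so a bare DOI and a DOI URL compare equal.
        doi = re.sub(r"^https?://(dx\.)?doi\.org/", "", doi)
        return "doi:" + doi
    return "title:" + normalize_title(record.get("title", "")) + "|" + str(record.get("year", ""))


def deduplicate(records):
    """Return records with later duplicates removed, keeping first occurrences in order."""
    seen = set()
    unique = []
    for record in records:
        key = record_key(record)
        if key not in seen:
            seen.add(key)
            unique.append(record)
    return unique


# Hypothetical records, as they might look after exporting from two databases.
records = [
    {"title": "A Study of Things", "year": 2020, "doi": "10.1234/example.1"},
    {"title": "A study of things.", "year": 2020, "doi": "https://doi.org/10.1234/EXAMPLE.1"},
    {"title": "Another Paper", "year": 2021, "doi": ""},
    {"title": "Another  paper", "year": 2021},
]
unique = deduplicate(records)
print(len(unique), "unique records")
```

One limitation of this sketch: a record that has a DOI will not match a copy of the same article exported without one, so a manual check of the remaining records is still worthwhile.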

Video Tutorials about Literature Reviews

This 4.5-minute video from Academic Education Materials has a Creative Commons License and a British narrator.

Recommended Reading

- Last Updated: Aug 20, 2024 1:59 PM

- URL: https://guides.lib.utexas.edu/literaturereviews

An SLR-tool: search process in practice: a tool to conduct and manage systematic literature review (SLR)


Bibliometrics & citations.

- Wu C Chakravorti T Carroll J Rajtmajer S (2024) Integrating measures of replicability into scholarly search: Challenges and opportunities Proceedings of the CHI Conference on Human Factors in Computing Systems 10.1145/3613904.3643043 (1-18) Online publication date: 11-May-2024 https://dl.acm.org/doi/10.1145/3613904.3643043

- Sina L Secco C Blazevic M Nazemi K (2024) Guided Visual Analytics—A Visual Analytics Guidance Approach for Systematic Reviews in Research Artificial Intelligence and Visualization: Advancing Visual Knowledge Discovery 10.1007/978-3-031-46549-9_11 (319-343) Online publication date: 25-Apr-2024 https://doi.org/10.1007/978-3-031-46549-9_11

- De Felice F Petrillo A Iovine G Salzano C Baffo I (2023) How Does the Metaverse Shape Education? A Systematic Literature Review Applied Sciences 10.3390/app13095682 13 :9 (5682) Online publication date: 5-May-2023 https://doi.org/10.3390/app13095682


Index Terms

Information systems

Information retrieval

Information systems applications

Software and its engineering

Software creation and management

Software post-development issues

Software verification and validation

Software notations and tools

Software configuration management and version control systems

Recommendations

Sesra: a web-based automated tool to support the systematic literature review process.

Systematic Literature Review (SLR) is a key tool for evidence-based practice as it combines results from multiple studies of a specific topic of research. Due to its characteristics, it is a time consuming, hard process that requires a properly documented ...

Decision support tools for SLR search string construction

Systematic literature reviews (SLRs) have gained popularity during the last years as a form of providing state of the art about previous research. As part of the SLR tasks, devising the search strategy and particularly finding the right keywords to be ...

Guidelines for snowballing in systematic literature studies and a replication in software engineering

Background: Systematic literature studies have become common in software engineering, and hence it is important to understand how to conduct them efficiently and reliably.

Objective: This paper presents guidelines for conducting literature reviews using ...

Information

Published in.

- General Chairs:

North Carolina State University

KAIST, South Korea

- SIGSOFT: ACM Special Interest Group on Software Engineering

In-Cooperation

- KIISE: Korean Institute of Information Scientists and Engineers

Association for Computing Machinery

New York, NY, United States

Publication History

Author Tags

- systematic literature review

- Demonstration


- 7 Total Citations

- 327 Total Downloads

- Downloads (Last 12 months) 68

- Downloads (Last 6 weeks) 3

- Yahaya H Nadarajah G (2023) Determining key factors influencing SMEs’ performance: A systematic literature review and experts’ verification Cogent Business & Management 10.1080/23311975.2023.2251195 10 :3 Online publication date: 9-Nov-2023 https://doi.org/10.1080/23311975.2023.2251195

- Jabar T Mahinderjit Singh M (2022) Exploration of Mobile Device Behavior for Mitigating Advanced Persistent Threats (APT): A Systematic Literature Review and Conceptual Framework Sensors 10.3390/s22134662 22 :13 (4662) Online publication date: 21-Jun-2022 https://doi.org/10.3390/s22134662

- Duzen Z Riveni M Aktas M (2022) Misinformation Detection in Social Networks: A Systematic Literature Review Computational Science and Its Applications – ICCSA 2022 Workshops 10.1007/978-3-031-10545-6_5 (57-74) Online publication date: 23-Jul-2022 https://doi.org/10.1007/978-3-031-10545-6_5

- Bahaa A Abdelaziz A Sayed A Elfangary L Fahmy H (2021) Monitoring Real Time Security Attacks for IoT Systems Using DevSecOps: A Systematic Literature Review Information 10.3390/info12040154 12 :4 (154) Online publication date: 7-Apr-2021 https://doi.org/10.3390/info12040154

- Napoleao B Petrillo F Halle S (2021) Automated Support for Searching and Selecting Evidence in Software Engineering: A Cross-domain Systematic Mapping 2021 47th Euromicro Conference on Software Engineering and Advanced Applications (SEAA) 10.1109/SEAA53835.2021.00015 (45-53) Online publication date: Sep-2021 https://doi.org/10.1109/SEAA53835.2021.00015


How to conduct systematic literature reviews in management research: a guide in 6 steps and 14 decisions

- Review Paper

- Open access

- Published: 12 May 2023

- Volume 17, pages 1899–1933 (2023)


- Philipp C. Sauer ORCID: orcid.org/0000-0002-1823-0723 1 &

- Stefan Seuring ORCID: orcid.org/0000-0003-4204-9948 2

29k Accesses

64 Citations

6 Altmetric

Explore all metrics

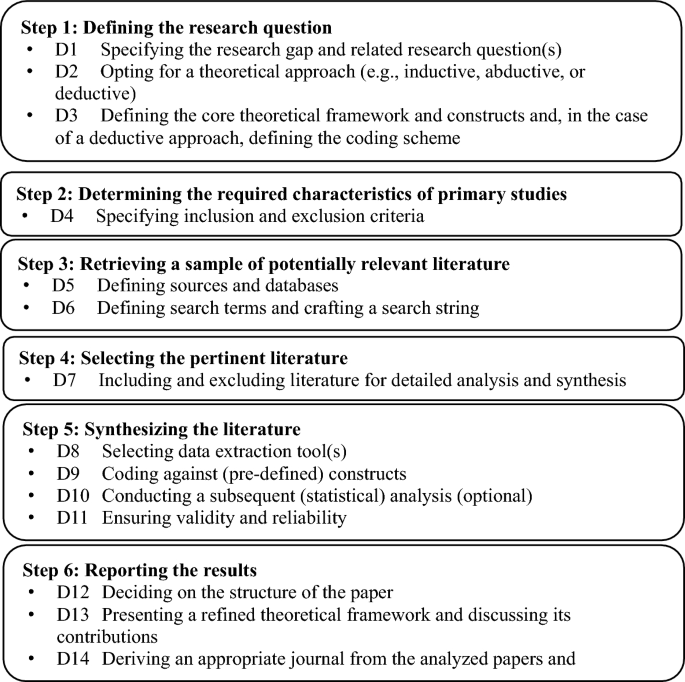

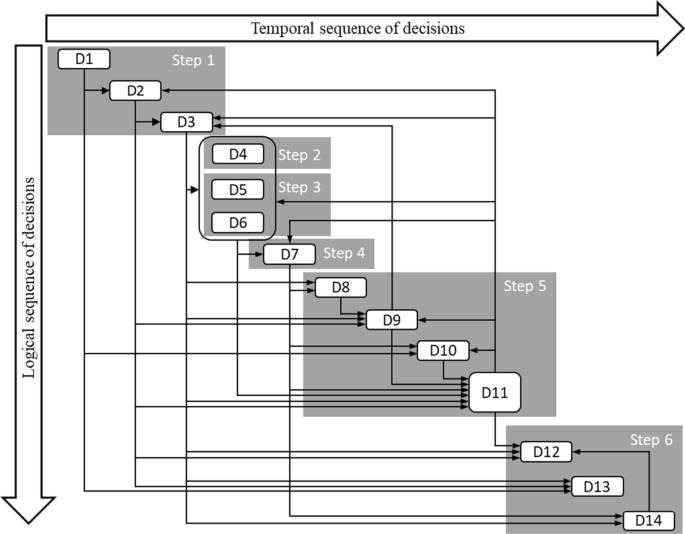

Systematic literature reviews (SLRs) have become a standard tool in many fields of management research but are often considerably less stringently presented than other pieces of research. The resulting lack of replicability of the research and conclusions has spurred a vital debate on the SLR process, but related guidance is scattered across a number of core references and is overly centered on the design and conduct of the SLR, while failing to guide researchers in crafting and presenting their findings in an impactful way. This paper offers an integrative review of the widely applied and most recent SLR guidelines in the management domain. The paper adopts a well-established six-step SLR process and refines it by sub-dividing the steps into 14 distinct decisions: (1) from the research question, via (2) characteristics of the primary studies, (3) to retrieving a sample of relevant literature, which is then (4) selected and (5) synthesized so that, finally (6), the results can be reported. Guided by these steps and decisions, prior SLR guidelines are critically reviewed, gaps are identified, and a synthesis is offered. This synthesis elaborates mainly on the gaps while pointing the reader toward the available guidelines. The paper thereby avoids reproducing existing guidance but critically enriches it. The 6 steps and 14 decisions provide methodological, theoretical, and practical guidelines along the SLR process, exemplifying them via best-practice examples and revealing their temporal sequence and main interrelations. The paper guides researchers in the process of designing, executing, and publishing a theory-based and impact-oriented SLR.



1 Introduction