The 10 Most Significant Education Studies of 2021

From reframing our notion of “good” schools to mining the magic of expert teachers, here’s a curated list of must-read research from 2021.

It was a year of unprecedented hardship for teachers and school leaders. We pored over hundreds of studies to see if we could follow the trail of exactly what happened: The research revealed a complex portrait of a grueling year during which persistent issues of burnout and mental and physical health impacted millions of educators. Meanwhile, many of the old debates continued: Does paper beat digital? Is project-based learning as effective as direct instruction? How do you define what a “good” school is?

Other studies grabbed our attention, and in a few cases, made headlines. Researchers from the University of Chicago and Columbia University turned artificial intelligence loose on some 1,130 award-winning children’s books in search of invisible patterns of bias. (Spoiler alert: They found some.) Another study revealed why many parents are reluctant to support social and emotional learning in schools—and provided hints about how educators can flip the script.

1. What Parents Fear About SEL (and How to Change Their Minds)

When researchers at the Fordham Institute asked parents to rank phrases associated with social and emotional learning, nothing seemed to add up. The term “social-emotional learning” was very unpopular; parents wanted to steer their kids clear of it. But when the researchers added a simple clause, forming a new phrase—“social-emotional & academic learning”—the program shot all the way up to No. 2 in the rankings.

What gives?

Parents were picking up subtle cues in the list of SEL-related terms that irked or worried them, the researchers suggest. Phrases like “soft skills” and “growth mindset” felt “nebulous” and devoid of academic content. For some, the language felt suspiciously like “code for liberal indoctrination.”

But the study suggests that parents might need the simplest of reassurances to break through the political noise. Removing the jargon, focusing on productive phrases like “life skills,” and relentlessly connecting SEL to academic progress puts parents at ease—and seems to save social and emotional learning in the process.

2. The Secret Management Techniques of Expert Teachers

In the hands of experienced teachers, classroom management can seem almost invisible: Subtle techniques are quietly at work behind the scenes, with students falling into orderly routines and engaging in rigorous academic tasks almost as if by magic.

That’s no accident, according to new research. While outbursts are inevitable in school settings, expert teachers seed their classrooms with proactive, relationship-building strategies that often prevent misbehavior before it erupts. They also approach discipline more holistically than their less-experienced counterparts, consistently reframing misbehavior in the broader context of how lessons can be more engaging, or how clearly they communicate expectations.

Focusing on the underlying dynamics of classroom behavior—and not on surface-level disruptions—means that expert teachers often look the other way at all the right times, too. Rather than rise to the bait of a minor breach in etiquette, a common mistake of new teachers, they tend to play the long game, asking questions about the origins of misbehavior, deftly navigating the terrain between discipline and student autonomy, and opting to confront misconduct privately when possible.

3. The Surprising Power of Pretesting

Asking students to take a practice test before they’ve even encountered the material may seem like a waste of time—after all, they’d just be guessing.

But new research concludes that the approach, called pretesting, is actually more effective than other typical study strategies. Surprisingly, pretesting even beat out taking practice tests after learning the material, a proven strategy endorsed by cognitive scientists and educators alike. In the study, students who took a practice test before learning the material outperformed their peers who studied more traditionally by 49 percent on a follow-up test, while outperforming students who took practice tests after studying the material by 27 percent.

The researchers hypothesize that the “generation of errors” was a key to the strategy’s success, spurring student curiosity and priming them to “search for the correct answers” when they finally explored the new material—and adding grist to a 2018 study that found that making educated guesses helped students connect background knowledge to new material.

Learning is more durable when students do the hard work of correcting misconceptions, the research suggests, reminding us yet again that being wrong is an important milestone on the road to being right.

4. Confronting an Old Myth About Immigrant Students

Immigrant students are sometimes portrayed as a costly expense to the education system, but new research is systematically dismantling that myth.

In a 2021 study, researchers analyzed over 1.3 million academic and birth records for students in Florida communities, and concluded that the presence of immigrant students actually has “a positive effect on the academic achievement of U.S.-born students,” raising test scores as the size of the immigrant school population increases. The benefits were especially powerful for low-income students.

While immigrants initially “face challenges in assimilation that may require additional school resources,” the researchers concluded, hard work and resilience may allow them to excel and thus “positively affect exposed U.S.-born students’ attitudes and behavior.” But according to teacher Larry Ferlazzo, the improvements might stem from the fact that having English language learners in classes improves pedagogy, pushing teachers to consider “issues like prior knowledge, scaffolding, and maximizing accessibility.”

5. A Fuller Picture of What a ‘Good’ School Is

It’s time to rethink our definition of what a “good school” is, researchers assert in a study published in late 2020. That’s because typical measures of school quality like test scores often provide an incomplete and misleading picture, the researchers found.

The study looked at over 150,000 ninth-grade students who attended Chicago public schools and concluded that emphasizing the social and emotional dimensions of learning—relationship-building, a sense of belonging, and resilience, for example—improves high school graduation and college matriculation rates for both high- and low-income students, beating out schools that focus primarily on improving test scores.

“Schools that promote socio-emotional development actually have a really big positive impact on kids,” said lead researcher C. Kirabo Jackson in an interview with Edutopia. “And these impacts are particularly large for vulnerable student populations who don’t tend to do very well in the education system.”

The findings reinforce the importance of a holistic approach to measuring student progress, and are a reminder that schools—and teachers—can influence students in ways that are difficult to measure, and may only materialize well into the future.

6. Teaching Is Learning

One of the best ways to learn a concept is to teach it to someone else. But do you actually have to step into the shoes of a teacher, or does the mere expectation of teaching do the trick?

In a 2021 study, researchers split students into two groups and gave them each a science passage about the Doppler effect—a phenomenon associated with sound and light waves that explains the gradual change in tone and pitch as a car races off into the distance, for example. One group studied the text as preparation for a test; the other was told that they’d be teaching the material to another student.

The researchers never carried out the second half of the activity—students read the passages but never taught the lesson. All of the participants were then tested on their factual recall of the Doppler effect, and their ability to draw deeper conclusions from the reading.

The upshot? Students who prepared to teach outperformed their counterparts in both duration and depth of learning, scoring 9 percent higher on factual recall a week after the lessons concluded, and 24 percent higher on their ability to make inferences. The research suggests that asking students to prepare to teach something—or encouraging them to think “could I teach this to someone else?”—can significantly alter their learning trajectories.

7. A Disturbing Strain of Bias in Kids’ Books

Some of the most popular and well-regarded children’s books—Caldecott and Newbery honorees among them—persistently depict Black, Asian, and Hispanic characters with lighter skin, according to new research.

Using artificial intelligence, researchers combed through 1,130 children’s books written in the last century, comparing two sets of diverse children’s books—one a collection of popular books that garnered major literary awards, the other favored by identity-based awards. The software analyzed data on skin tone, race, age, and gender.

Among the findings: While more characters with darker skin color begin to appear over time, the most popular books—those most frequently checked out of libraries and lining classroom bookshelves—continue to depict people of color in lighter skin tones. More insidiously, when adult characters are “moral or upstanding,” their skin color tends to appear lighter, the study’s lead author, Anjali Adukia, told The 74, with some books converting “Martin Luther King Jr.’s chocolate complexion to a light brown or beige.” Female characters, meanwhile, are often seen but not heard.

Cultural representations are a reflection of our values, the researchers conclude: “Inequality in representation, therefore, constitutes an explicit statement of inequality of value.”

8. The Never-Ending ‘Paper Versus Digital’ War

The argument goes like this: Digital screens turn reading into a cold and impersonal task; they’re good for information foraging, and not much more. “Real” books, meanwhile, have a heft and “tactility” that make them intimate, enchanting—and irreplaceable.

But researchers have often found weak or equivocal evidence for the superiority of reading on paper. While a recent study concluded that paper books yielded better comprehension than e-books when many of the digital tools had been removed, the effect sizes were small. A 2021 meta-analysis further muddies the waters: When digital and paper books are “mostly similar,” kids comprehend the print version more readily—but when enhancements like motion and sound “target the story content,” e-books generally have the edge.

Nostalgia is a force that every new technology must eventually confront. There’s plenty of evidence that writing with pen and paper encodes learning more deeply than typing. But new digital book formats come preloaded with powerful tools that allow readers to annotate, look up words, answer embedded questions, and share their thinking with other readers.

We may not be ready to admit it, but these are precisely the kinds of activities that drive deeper engagement, enhance comprehension, and leave us with a lasting memory of what we’ve read. The future of e-reading, despite the naysayers, remains promising.

9. New Research Makes a Powerful Case for PBL

Many classrooms today still look like they did 100 years ago, when students were preparing for factory jobs. But the world’s moved on: Modern careers demand a more sophisticated set of skills—collaboration, advanced problem-solving, and creativity, for example—and those can be difficult to teach in classrooms that rarely give students the time and space to develop those competencies.

Project-based learning (PBL) would seem like an ideal solution. But critics say PBL places too much responsibility on novice learners, ignoring the evidence about the effectiveness of direct instruction and ultimately undermining subject fluency. Advocates counter that student-centered learning and direct instruction can and should coexist in classrooms.

Now two new large-scale studies—encompassing over 6,000 students in 114 diverse schools across the nation—provide evidence that a well-structured, project-based approach boosts learning for a wide range of students.

In the studies, which were funded by Lucas Education Research, a sister division of Edutopia, elementary and high school students engaged in challenging projects that had them designing water systems for local farms, or creating toys using simple household objects to learn about gravity, friction, and force. Subsequent testing revealed notable learning gains—well above those experienced by students in traditional classrooms—and those gains seemed to raise all boats, persisting across socioeconomic class, race, and reading levels.

10. Tracking a Tumultuous Year for Teachers

The Covid-19 pandemic cast a long shadow over the lives of educators in 2021, according to a year’s worth of research.

The average teacher’s workload suddenly “spiked last spring,” wrote the Center for Reinventing Public Education in its January 2021 report, and then—in defiance of the laws of motion—simply never let up. By the fall, a RAND study recorded an astonishing shift in work habits: 24 percent of teachers reported that they were working 56 hours or more per week, compared to 5 percent pre-pandemic.

The vaccine was the promised land, but when it arrived nothing seemed to change. In an April 2021 survey conducted four months after the first vaccine was administered in New York City, 92 percent of teachers said their jobs were more stressful than prior to the pandemic, up from 81 percent in an earlier survey.

It wasn’t just the length of the work days; a close look at the research reveals that the school system’s failure to adjust expectations was ruinous. It seemed to start with the obligations of hybrid teaching, which surfaced in Edutopia’s coverage of overseas school reopenings. In June 2020, well before many U.S. schools reopened, we reported that hybrid teaching was an emerging problem internationally, and warned that if the “model is to work well for any period of time,” schools must “recognize and seek to reduce the workload for teachers.” Almost eight months later, a 2021 RAND study identified hybrid teaching as a primary source of teacher stress in the U.S., easily outpacing factors like the health of a high-risk loved one.

New and ever-increasing demands for tech solutions put teachers on a knife’s edge. In several important 2021 studies, researchers concluded that teachers were being pushed to adopt new technology without the “resources and equipment necessary for its correct didactic use.” Consequently, they were spending more than 20 hours a week adapting lessons for online use, and experiencing an unprecedented erosion of the boundaries between their work and home lives, leading to an unsustainable “always on” mentality. When it seemed like nothing more could be piled on—when all of the lights were blinking red—the federal government restarted standardized testing.

Change will be hard; many of the pathologies that exist in the system now predate the pandemic. But creating strict school policies that separate work from rest, eliminating the adoption of new tech tools without proper supports, distributing surveys regularly to gauge teacher well-being, and above all listening to educators to identify and confront emerging problems might be a good place to start, if the research can be believed.

REVIEW article

Innovative pedagogies of the future: an evidence-based selection.

- Institute of Educational Technology, The Open University, Milton Keynes, United Kingdom

There is a widespread notion that educational systems should empower learners with skills and competences to cope with a constantly changing landscape. Reference is often made to skills such as critical thinking, problem solving, collaborative skills, innovation, digital literacy, and adaptability. What is negotiable is how best to achieve the development of those skills, in particular which teaching and learning approaches are suitable for facilitating or enabling complex skills development. In this paper, we build on our previous work of exploring new forms of pedagogy for an interactive world, as documented in our Innovating Pedagogy report series. We present a set of innovative pedagogical approaches that have the potential to guide teaching and transform learning. An integrated framework has been developed to select pedagogies for inclusion in this paper, consisting of the following five dimensions: (a) relevance to effective educational theories, (b) research evidence about the effectiveness of the proposed pedagogies, (c) relation to the development of twenty-first century skills, (d) innovative aspects of pedagogy, and (e) level of adoption in educational practice. The selected pedagogies, namely formative analytics, teachback, place-based learning, learning with drones, learning with robots, and citizen inquiry are either attached to specific technological developments, or they have emerged due to an advanced understanding of the science of learning. Each one is presented in terms of the five dimensions of the framework.

Introduction

In its vision for the future of education in 2030, the Organization for Economic Co-operation and Development (OECD, 2018) views essential learner qualities as the acquisition of skills to embrace complex challenges and the development of the person as a whole, valuing common prosperity, sustainability and wellbeing. Wellbeing is perceived as “inclusive growth” related to equitable access to “quality of life, including health, civic engagement, social connections, education, security, life satisfaction and the environment” (p. 4). To achieve this vision, a varied set of skills and competences is needed that would allow learners to act as “change agents” who can achieve positive impact on their surroundings by developing empathy and anticipating the consequences of their actions.

Several frameworks have been produced over the years detailing specific skills and competences for the citizens of the future (e.g., Trilling and Fadel, 2009; OECD, 2015, 2018; Council of the European Union, 2018). These frameworks refer to skills such as critical thinking, problem solving, team work, communication and negotiation skills; and competences related to literacy, multilingualism, STEM, digital, personal, social, and “learning to learn” competences, citizenship, entrepreneurship, and cultural awareness (Trilling and Fadel, 2009; Council of the European Union, 2018). In a similar line of thinking, in the OECD Learning Framework 2030 (OECD, 2018) cognitive, health and socio-emotional foundations are stressed, including literacy, numeracy, digital literacy and data numeracy, physical and mental health, morals, and ethics.

The question we are asked to answer is whether the education vision of the future, or the development of the skills needed to cope with an ever-changing society, has been met, or can be met. The short answer is not yet. For example, the Programme for International Student Assessment (PISA) has been ranking educational systems based on 15-year-old students' performance on tests about reading, mathematics and science every 3 years in more than 90 countries. In the latest published report (OECD, 2015), Japan, Estonia, Finland, and Canada are the four highest performing OECD countries in science. This means that students from these countries on average can “creatively and autonomously apply their knowledge and skills to a wide variety of situations, including unfamiliar ones” (OECD, 2016a, p. 2). Yet about 20% of students across participating countries are shown to perform below the baseline in science and proficiency in reading (OECD, 2016b). Those most at risk are socio-economically disadvantaged students, who are almost three times more likely than their peers not to meet the given baselines. These outcomes are quite alarming; they stress the need for evidence-based, effective, and innovative teaching and learning approaches that can result in not only improved learning outcomes but also greater student wellbeing. Overall, an increasing focus on memorization and testing has been observed in education, including early years, that leaves no space for active exploration and playful learning (Mitchell, 2018), and threatens the wellbeing and socioemotional growth of learners. There is a growing evidence base showing that, although teachers would like to implement more active, innovative forms of education to meet the diverse learning needs of their students, a myriad of constraints often leads them to resort to more traditional, conservative approaches to teaching and learning (Ebert-May et al., 2011; Herodotou et al., 2019).

In this paper, we propose that the distance between educational vision and current teaching practice can be bridged through the adoption and use of appropriate pedagogy that has been tested and proven to contribute to the development of the person as a whole. Evidence of impact becomes a central component of the teaching practice; what works and for whom in terms of learning and development can provide guidelines to teaching practitioners as to how to modify or update their teaching in order to achieve desirable learning outcomes. Educational institutions may have already adopted innovations in educational technology equipment (such as mobile devices), yet this change has not necessarily been accompanied by respective changes in the practice of teaching and learning. Enduring transformations can be brought about through “pedagogy,” that is improvements in “the theory and practice of teaching, learning, and assessment” and not the mere introduction of technology in classrooms (Sharples, 2019). PISA analysis of the impact of Information Communication Technology (ICT) on reading, mathematics, and science in countries heavily invested in educational technology showed mixed effects and “no appreciable improvements” (OECD, 2015, p. 3).

The aim of this study is to review and present a set of innovative, evidence-based pedagogical approaches that have the potential to guide teaching practitioners and transform learning processes and outcomes. The selected pedagogies draw from the successful Innovating Pedagogy report series (https://iet.open.ac.uk/innovating-pedagogy), produced by The Open University UK (OU) in collaboration with other centers of research in teaching and learning, that explore innovative forms of teaching, learning and assessment. Since 2012, the OU has produced seven Innovating Pedagogy reports with SRI International (USA), National Institute of Education (Singapore), Learning In a NetworKed Society (Israel), and the Center for the Science of Learning & Technology (Norway). For each report, teams of researchers shared ideas, proposed innovations, read research papers and blogs, and commented on each other's draft contributions in an organic manner (Sharples et al., 2012, 2013, 2014, 2015, 2016; Ferguson et al., 2017, 2019). Each report started from an initial list of potentially promising educational innovations that may already be in currency but have not yet reached a critical mass of influence on education; these lists were critically and collaboratively examined and reduced to 9–11 main topics identified as having the potential to provoke major shifts in educational practice.

After seven years of gathering a total of 70 innovative pedagogies, in this paper seven academics from the OU, authors of the various Innovating Pedagogy reports, critically reflected on which of these approaches have the strongest evidence and/or potential to transform learning processes and outcomes to meet the future educational skills and competences described by OECD and others. Based upon five criteria and extensive discussions, we selected six approaches that we believe have the most evidence and/or potential for future education:

• Formative analytics,

• Teachback,

• Place-based learning,

• Learning with robots,

• Learning with drones,

• Citizen inquiry.

Formative analytics is defined as “supporting the learner to reflect on what is learned, what can be improved, which goals can be achieved, and how to move forward” (Sharples et al., 2016, p. 32). Teachback is a means for two or more people to demonstrate that they are progressing toward a shared understanding of a complex topic. Place-based learning derives learning opportunities from local community settings, which help students connect abstract concepts from the classroom and textbooks with practical challenges encountered in their own localities. Learning with robots could help teachers to free up time on simple, repetitive tasks, and provide scaffolding to learners. Learning with drones is being used to support fieldwork by enhancing students' capability to explore outdoor physical environments. Finally, citizen inquiry describes ways that members of the public can learn by initiating or joining shared inquiry-led scientific investigations.

Devising a Framework for Selection: The Role of Evidence

Building on previous work (Puttick and Ludlow, 2012; Ferguson and Clow, 2017; Herodotou et al., 2017a; John and McNeal, 2017; Batty et al., 2019), we propose an integrated framework for how to select pedagogies. The framework resulted from ongoing discussions amongst the seven authors of this paper as to how educational practitioners should identify and use certain ways of teaching and learning, while avoiding others. The five components of the model are presented below:

• Relevance to effective educational theories: the first criterion refers to whether the proposed pedagogy relates to specific educational theories that have shown to be effective in terms of improving learning.

• Research evidence about the effectiveness of the proposed pedagogies: the second criterion refers to actual studies testing the proposed pedagogy and their outcomes.

• Relation to the development of twenty-first century skills: the third criterion refers to whether the pedagogy can contribute to the development of the twenty-first century skills or the education vision of 2030 (as described in the introduction section).

• Innovative aspects of pedagogy: the fourth criterion details what is innovative or new in relation to the proposed pedagogy.

• Level of adoption in educational practice: the last criterion brings in evidence about the current level of adoption in education, in an effort to identify gaps in our knowledge and propose future directions of research.
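
The five criteria above can be read as a simple record with one entry per dimension. The following Python sketch is illustrative only and is not part of the authors' method: the class name `PedagogyReview`, its field names, and the helper `missing_dimensions` are hypothetical, and the note text is a placeholder rather than a finding.

```python
from __future__ import annotations

from dataclasses import dataclass, fields


@dataclass
class PedagogyReview:
    """Notes on one pedagogy, one field per framework dimension (hypothetical layout)."""

    name: str
    theory_relevance: str = ""    # (a) relevance to effective educational theories
    research_evidence: str = ""   # (b) research evidence about effectiveness
    skills_relation: str = ""     # (c) relation to twenty-first century skills
    innovative_aspects: str = ""  # (d) innovative aspects of the pedagogy
    adoption_level: str = ""      # (e) level of adoption in educational practice


def missing_dimensions(review: PedagogyReview) -> list[str]:
    """Return the framework dimensions that have no notes recorded yet."""
    return [
        f.name
        for f in fields(review)
        if f.name != "name" and not getattr(review, f.name).strip()
    ]


# Placeholder text only; no real evidence is encoded here.
review = PedagogyReview(name="Teachback", theory_relevance="placeholder note")
print(missing_dimensions(review))
```

A record like this only makes it easy to see which dimensions still lack notes for a given pedagogy; it does not rank or score approaches.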

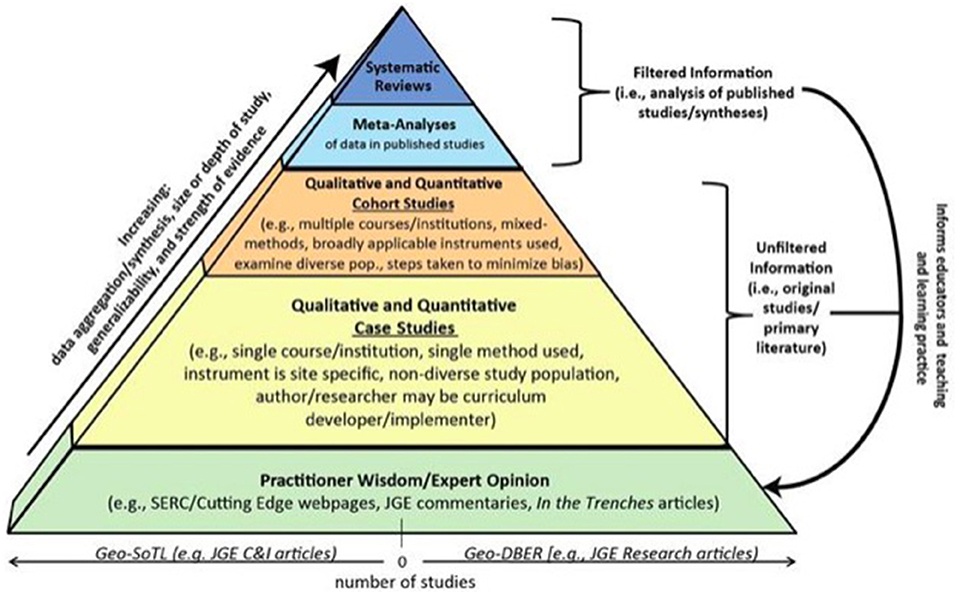

A major component of the proposed framework is effectiveness, or the generation of evidence of impact. The definition of what constitutes evidence varies (Ferguson and Clow, 2017; Batty et al., 2019), and this often relates to the quality or strength of evidence presented. The Strength of Evidence pyramid by John and McNeal (2017) (see Figure 1) categorizes different types of evidence based on their strength, ranging from expert opinions as the least strong type of evidence to meta-analysis or synthesis as the strongest or most reliable form of evidence. While the bottom of the pyramid refers to “practitioners' wisdom about teaching and learning,” the next two levels refer to peer-reviewed and published primary sources of evidence, both qualitative and quantitative. They are mostly case-studies, based on either the example of a single institution, or a cross-institutional analysis involving multiple courses or institutions. The top two levels involve careful consideration of existing resources of evidence and inclusion in a synthesis or meta-analysis. For example, variations of this pyramid in medical studies present Randomized Control Trials (RCTs) at the second top level of the pyramid, indicating the value of this approach for gaining less biased quality evidence.

Figure 1. The strength of evidence pyramid (John and McNeal, 2017).

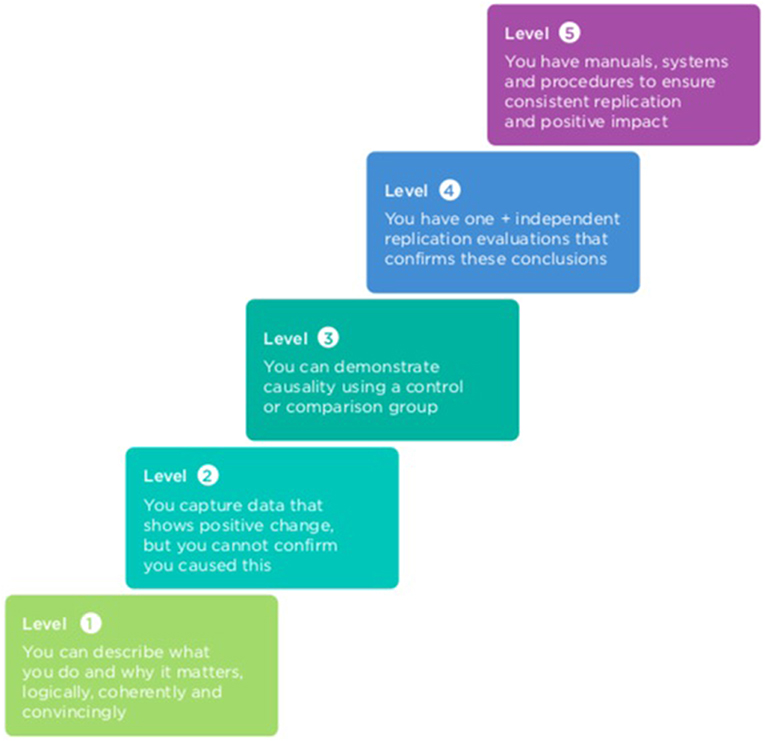

Another approach proposed by the innovation foundation Nesta presents evidence on a scale of 1 to 5, showcasing the level of confidence with the impact of an intervention (Puttick and Ludlow, 2012). Level 1 studies describe logically, coherently and convincingly what has been done and why it matters, while level 5 studies produce manuals ensuring consistent replication of a study. The evidence becomes stronger when studies prove causality (e.g., through experimental approaches) and can be replicated successfully. While these frameworks are useful for assessing the quality or strength of evidence, they do not make any reference to how the purpose of a study can define which type of evidence to collect. Different types of evidence could effectively address different purposes; depending on the objective of a given study a different type of evidence could be used (Batty et al., 2019). For example, the UK government-funded research work by the Education Endowment Foundation (EEF) uses RCTs rather than, for example, expert opinions, because the purpose of their studies is to capture the impact of certain interventions nationally across schools in the UK.

Education, as opposed to other disciplines such as medicine and agriculture, has been less concerned with evaluating different pedagogical approaches and determining their impact on learning outcomes. The argument often made is the difficulty in evaluating learning processes, especially through experimental methodologies, due to variability in teaching conditions across classrooms and between different practitioners, that may inhibit any comparisons and valid conclusions. In particular, RCTs have been sparse and often criticized as not explaining any impact (or absence of impact) on learning, a limitation that could be overcome by combining RCT outcomes with qualitative methodologies (Herodotou et al., 2017a). Mixed-methods evaluations could identify how faithfully an intervention is applied to different learning contexts or, for example, the degree to which teachers have been engaged with it. An alternative approach is Design-Based Research (DBR); this is a form of action-based research where a problem in the educational process is identified, solutions informed by existing literature are proposed, and iterative cycles of testing and refinement take place to identify what works in practice and improve the solution. DBR often results in guidelines or theory development (e.g., Anderson and Shattuck, 2012).

An evidence-based mindset in education has been recently popularized through the EEF. Their development of the teaching and learning toolkit provides an overview of existing evidence about certain approaches to improving teaching and learning, summarized in terms of impact on attainment, cost and the supporting strength of evidence. Amongst the most effective teaching approaches are the provision of feedback, development of metacognition and self-regulation, homework for secondary students, and mastery learning (https://educationendowmentfoundation.org.uk). Similarly, the National Center for Education Evaluation and Regional Assistance (NCEE) in the US conducts large-scale evaluations of education programs with funds from the government. Amongst the interventions with the highest effectiveness ratings are phonological awareness training, reading recovery, and dialogic reading (https://ies.ed.gov/ncee/).

The importance of evidence generation is also evident in the explicit focus of Higher Education institutions in understanding and increasing educational effectiveness as a means to: tackle inequalities and promote educational justice (see Durham University Evidence Center for Education; DECE), provide high quality education for independent and lifelong learners (Learning and Teaching strategy, Imperial College London), develop criticality and deepen learning (London Center for Leadership in Learning, UCL Institute of Education), and improve student retention and performance in online and distance settings [Institute of Educational Technology (IET) OU].

The generation of evidence can help identify or debunk possible myths in education and distinguish between practitioners' beliefs about what works in their practice as opposed to research evidence emerging from systematically assessing a specific teaching approach. A characteristic example is the “Learning Styles” myth and the assumption that teachers should identify and accommodate each learner's special way of learning such as visual, auditory and kinesthetic. While there is no consistent evidence that considering learning styles can improve learning outcomes (e.g., Rohrer and Pashler, 2010 ; Kirschner and van Merriënboer, 2013 ; Newton and Miah, 2017 ), many teachers believe in learning styles and make efforts in organizing their teaching around them ( Newton and Miah, 2017 ). In the same study, one third of participants stated that they would continue to use learning styles in their practice despite being presented with negative evidence. This suggests that we are rather in the early days of transforming the practice of education and in particular, developing a shared evidence-based mindset across researchers and practitioners.

In order to critically review the 70 innovative pedagogies from the seven Innovating Pedagogy reports, over a period of 2 months the seven authors critically evaluated academic and gray literature that was published after the respective reports were launched. In line with the five criteria defined above, each author contributed in a dynamic Google sheet what evidence was available for promising approaches. Based upon the initial list of 70, a short-list of 10 approaches was pre-selected. These were further fine-tuned to the final six approaches identified for this study based upon the emerging evidence of impact available as well as potential opportunity for future educational innovation. The emerging evidence and impact of the six approaches were peer-reviewed by the authoring team after contributions had been anonymized, and the lead author assigned the final categorizations.

In the next section, we present each of the proposed pedagogies in relation to how they meet the framework criteria, in an effort to understand what we know about their effectiveness, what evidence exists showcasing impact on learning, how each pedagogy accommodates the vision of twenty-first century skills development, its innovation aspects, and current levels of adoption in educational practice.

Selected Pedagogies

Formative Analytics

Relevance to Effective Educational Theories

As indicated by the Innovating Pedagogy 2016 report ( Sharples et al., 2016 , p.32), “formative analytics are focused on supporting the learner to reflect on what is learned, what can be improved, which goals can be achieved, and how to move forward.” In contrast to most analytics approaches that focus on analytics of learning, formative analytics aims to support analytics for learning, for a learner to reach his or her goals through “smart” analytics, such as visualizations of potential learning paths or personalized feedback. For example, these formative analytics might help learners to effectively self-regulate their learning. Zimmerman (2000) defined self-regulation as “self-generated thoughts, feelings and actions that are planned and cyclically adapted to the attainment of personal learning goals.” Students have a range of choices and options when they are learning in blended or online environments as to when, what, how, and with whom to study, with minimal guidance from teachers. Therefore, “appropriate” Self-Regulated Learning (SRL) strategies are needed for achieving individual learning goals ( Hadwin et al., 2011 ; Trevors et al., 2016 ).

With the arrival of fine-grained log-data and the emergence of learning analytics there are potentially more, and perhaps new, opportunities to map how to support students with different SRL ( Winne, 2017 ). With trace data on students' affect (e.g., emotional expression in text, self-reported dispositions), behavior (e.g., engagement, time on task, clicks), and cognition (e.g., how to work through a task, mastery of task, problem-solving techniques), researchers and teachers are able to potentially test and critically examine pedagogical theories like SRL theories on a micro as well as macro-level ( Panadero et al., 2016 ; D'Mello et al., 2017 ).
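To make the idea of behavioral trace data more concrete, the following is a minimal sketch of how log events might be aggregated into simple indicators and turned into a formative message. It is not taken from any of the cited studies: the `LogEvent` fields, the `summarise` and `feedback` helpers, and the 0.7 threshold are all hypothetical choices made for illustration.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class LogEvent:
    """One hypothetical trace record; the field names are assumptions."""
    student: str
    task: str
    seconds: float  # time spent on the task during this attempt
    correct: bool   # whether the attempt was marked correct


def summarise(events):
    """Aggregate raw events into per-student behavioral indicators."""
    summary = defaultdict(lambda: {"attempts": 0, "seconds": 0.0, "correct": 0})
    for event in events:
        stats = summary[event.student]
        stats["attempts"] += 1
        stats["seconds"] += event.seconds
        stats["correct"] += int(event.correct)
    return dict(summary)


def feedback(stats, mastery_threshold=0.7):
    """Return a formative message; the threshold is an arbitrary illustrative value."""
    rate = stats["correct"] / stats["attempts"]
    if rate >= mastery_threshold:
        return "On track: consider moving on to the next topic."
    return "Review suggested: revisit worked examples before the next task."


if __name__ == "__main__":
    events = [
        LogEvent("student_a", "task_1", 30.0, True),
        LogEvent("student_a", "task_2", 40.0, True),
        LogEvent("student_b", "task_1", 50.0, False),
        LogEvent("student_b", "task_1", 20.0, True),
    ]
    for student, stats in summarise(events).items():
        print(student, stats, feedback(stats))
```

A real formative analytics tool would draw on much richer data (for example, self-reported dispositions or text) and would need careful design so that the message supports, rather than replaces, the learner's own self-regulation.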

Research Evidence About the Effectiveness of the Proposed Pedagogies

There is an emerging literature that uses formative analytics to support SRL and to understand how students set goals and solve computer-based tasks ( Azevedo et al., 2013 ; Winne, 2017 ). For example, using the software tool nStudy, Winne (2017) recently showed that trace data from students in the form of notes, bookmarks, or quotes can be used to understand the cycles of self-regulation. In a study of 285 students learning French in a business context, Gelan et al. (2018) used log-file data and found that engaged and self-regulated students outperformed students who were “behind” in their study. In an introductory mathematics course with 922 students, Tempelaar et al. (2015) showed that combining self-reported learning dispositions with log-data of actual engagement in mathematics tasks provides effective formative analytics feedback to students. Recently, Fincham et al. (2018) found that formative analytics could actively encourage 1,138 engineering learners to critically reflect upon one of their eight adopted learning strategies, and where needed adjust it.

Relation to the Development of Twenty-First Century Skills

Beyond providing markers for formative feedback on cognitive skills (e.g., mastery of mathematics, critical thinking), formative analytics tools have also been used for twenty-first century affective (e.g., anxiety, self-efficacy) and behavioral (e.g., group working) skills. For example, a group widget developed by Scheffel et al. (2017) showed that group members were more aware of their online peers and their contributions. Similarly, providing automatic computer-based assessment feedback on mastery of mathematics exercises, together with different options for working out the next task, allowed students with math anxiety to develop more self-efficacy over time when they actively engaged with formative analytics ( Tempelaar et al., 2018 ). Although implementing automated formative analytics is relatively easier with structured cognitive tasks (e.g., multiple choice questions, calculations), there is an emerging body of research that focuses on using more complex and unstructured data, such as text as well as emotion data ( Azevedo et al., 2013 ; Panadero et al., 2016 ; Trevors et al., 2016 ), that can effectively provide formative analytics beyond cognition.

Innovative Aspects of Pedagogy

By using fine-grained data and reporting this directly back to students in the form of feedback or dashboards, the educational practice is substantially influenced, and subsequently innovated. In particular, instead of waiting for feedback from a teacher at the end of an assessment task, students can receive formative analytics on demand (when they want to), or ask for the formative analytics that link to their own self-regulation strategies. This is a radical departure from more traditional pedagogies that either place the teacher at the center, or expect students to be fully responsible for their SRL.

Level of Adoption in Educational Practice

Beyond the widespread practice of formative analytics in computer-based assessment ( Scherer et al., 2017 ), there is an emerging field of practice whereby institutions are providing analytics dashboards directly to students. For example, in a recent review on the use of learning analytics dashboards, Bodily et al. (2018) conclude that many dashboards use principles and conceptualizations of SRL, which could be used to support teachers and students, assuming they have the capability to use these tools. However, substantial challenges remain as to how to effectively provide these formative analytics to teachers ( Herodotou et al., 2019 ) and students ( Scherer et al., 2017 ; Tempelaar et al., 2018 ), and how to make sure positive SRL strategies nested within students are encouraged and not hampered by overly prescriptive and simplistic formative analytics solutions.

Teachback

The method of Teachback, and the name, were originally devised by the educational technologist Gordon Pask (1976) , as a means for two or more people to demonstrate that they are progressing toward a shared understanding of a complex topic. It starts with an expert, teacher, or more knowledgeable student explaining their knowledge of a topic to another person who has less understanding. Next, the less knowledgeable student attempts to teach back what they have learned to the more knowledgeable person. If that is successful, the one with more knowledge might then explain the topic in more detail. If the less knowledgeable person has difficulty in teaching back, the person with more expertise tries to explain in a clearer or different way. The less knowledgeable person teaches it again until they both agree.

A classroom teachback session could consist of pairs of students taking turns to teach back to each other a series of topics set by the teacher. For example, a science class might be learning the topic of “eclipses.” The teacher splits the class into pairs and asks one student in each pair to explain to the other what they know about “eclipse of the sun.” Next, the class receives instruction about eclipses from the teacher, or a video explanation. Then, the second student in the pair teaches back what they have just learned. The first student asks questions to clarify such as, “What do you mean by that?” If either student is unsure, or the two disagree, then they can ask the teacher. The students may also jointly write a short explanation, or draw a diagram of the eclipse, to explain what they have learned.

The method is based on the educational theory of “radical constructivism” (e.g., von Glaserfeld, 1987 ) which sees knowledge as an adaptive process, allowing people to cope in the world of experience by building consensus through mutually understood language. It is a cybernetic theory, not a cognitive one, in which structured conversation and feedback among individuals create a system that “comes to know” by creating areas of mutual understanding.

Some doctors and healthcare professionals have adopted teachback in their conversations with patients to make sure they understand instructions on how to take medication and manage their care. In a study by Negarandeh et al. (2013) with 43 diabetic patients, a nurse conducted one 20-min teachback session for each patient, each week over 3 weeks. A control group ( N = 40) spent similar times with the nurse, but received standard consultations. The nurse asked questions such as “When you get home, your partner will ask you what the nurse said. What will you tell them?” Six weeks after the last session, those patients who learned through teachback knew significantly more about how to care for their diabetes than the control group patients. Indeed, a systematic review study of 12 published articles covering teachback for patients showed positive outcomes on a variety of measures, though not all were statistically significant ( Ha Dinh et al., 2016 ).

Teachback has strong relevance in a world of social and conversational media, with “fake news” competing for attention alongside verified facts and robust knowledge. How can a student “come to know” a new topic, especially one that is controversial? Teachback can be a means to develop the skills of questioning knowledge, seeking understanding, and striving for agreement.

The conversational partner in Teachback could be an online tutor or fellow student, or an Artificial Intelligence (AI) system that provides a “teachable agent”. With a teachable agent, the student attempts to teach a recently-learned topic to the computer and can see a dynamic map of the concepts that the computer agent has “learned” ( www.teachableagents.org/ ). The computer could then attempt to teach back the knowledge. Alternatively, AI techniques can enhance human teachback by offering support and resources for a productive conversation, for example to search for information or clarify the meaning of a term.

Rudman (2002) demonstrated a computer-based variation on teachback. In this study, one person learned the topic of herbal remedies from a book and became the teacher. A second person then attempted to learn about the same topic by holding a phone conversation with the more-knowledgeable teacher. The phone conversation between the two people was continually monitored by an AI program that detected keywords in the spoken dialogue. Whenever the AI program recognized a keyword or phrase in the conversation (such as the name of a medicinal herb, or its properties), it displayed useful information on the screen of the learner, but not the teacher. Giving helpful feedback to the learner balanced the conversation, so that both could hold a more constructive discussion.
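The keyword-triggered support described above can be illustrated with a minimal sketch. It is not a reconstruction of the system used in the study: it assumes the dialogue is already available as text, and the `GLOSSARY` entries and the `detect_keywords` and `screens_after` helpers are hypothetical names introduced only for illustration.

```python
import re

# Hypothetical glossary: entries are illustrative placeholders,
# not data from the study.
GLOSSARY = {
    "chamomile": "Chamomile: a herb commonly prepared as a tea.",
    "peppermint": "Peppermint: a herb in the mint family.",
}


def detect_keywords(utterance, glossary=GLOSSARY):
    """Return glossary notes for each keyword in the utterance, in order of first appearance."""
    words = re.findall(r"[a-z]+", utterance.lower())
    seen = []
    for word in words:
        if word in glossary and word not in seen:
            seen.append(word)
    return [glossary[word] for word in seen]


def screens_after(utterance):
    """Decide what each participant sees: notes go to the learner only."""
    return {"learner_screen": detect_keywords(utterance), "teacher_screen": []}


if __name__ == "__main__":
    print(screens_after("Have you tried chamomile or peppermint?"))
```

The design point mirrored here is the asymmetry: supporting information is routed to the learner's screen and not the teacher's, which is what balanced the conversation in the study.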

The method has seen some adoption into medical practice ( https://bit.ly/2Xr9qY5 ). It has also been tested at small scale for science education ( Gutierrez, 2003 ). Reciprocal teaching has been adopted in some schools for teaching of reading comprehension ( Oczuks, 2003 ).

Place-Based Learning

Place-based learning derives learning opportunities from local community settings. These help students to connect abstract concepts from the classroom and textbooks with practical challenges encountered in their own localities. “Place” can refer to learning about physical localities, but also the social and cultural layers embedded within neighborhoods; and engaging with communities and environments as well as observing them. It can be applied as much to arts and humanities focused learning as science-based learning. Place-based learning can encompass service learning, where students and teachers solve local community problems and, in doing so, acquire a range of skills ( Sobel, 2004 ). Mobile and networked technologies have opened up new possibilities for constructing and sharing knowledge, and reaching out to different stakeholders. Learning can take place while mobile, enabling communication across students and teachers, and beyond the field site. The physical and social aspects of the environment can be enhanced or augmented by digital layers to enable a richer experience, and greater access to resources and expertise.

Place-based learning draws upon experiential models of learning (e.g., Kolb, 1984 ), where active engagement with a situation and resulting experiences are reflected upon to help conceptualize learning, which in turn may trigger further explorations or experimentation. It may be structured as problem-based learning. Unplanned or unintentional learning outcomes may occur as a result of engagements, so place-based learning also draws on incidental learning (e.g., Kerka, 2000 ). Place-based learning holds that a more “authentic” and meaningful learning experience can happen in relevant environments, aligning with situated cognition, which states that knowledge is situated within physical, social and cultural contexts ( Brown et al., 1989 ). Learning episodes are often encountered with and through other people, a form of socio-cultural learning (e.g., Vygotsky, 1978 ). Networked technologies can extend the experiences that are possible through the connections that might be made, an idea recently articulated as connectivism (e.g., Siemens, 2005 ; Ito et al., 2013 ).

Place-based learning draws on a range of pedagogies, and in part derives its authority from research into their efficacy (e.g., experiential learning, situated learning, problem-based learning). For example, in a study of 400 US high school students, Ernst and Monroe (2004) found that environment-based teaching significantly improved both students' critical thinking skills and their disposition toward critical thinking. Research has shown that learning is very effective if carried out in “contexts familiar to students' everyday lives” ( Bretz, 2001 , p.1112). In another study, Linnemanstons and Jordan (2017) found that educators perceived students to display greater engagement and understanding of concepts when learning through experiential approaches in a specific place. Semken and Freeman (2008) trialed a method to test whether “sense of place” could be measured as a learning outcome when students are taught through place-based science activities. Using a set of psychometric surveys tested on a cohort of 31 students, they “observed significant gains in student place attachment and place meaning” (p.1042). In an analysis of 23 studies exploring indigenous education in Canada, Madden (2015) showed that place-based education can play an effective role in decolonizing curriculum, fostering understandings of shared histories between indigenous and non-indigenous learners in Canada. Context-aware systems that are triggered by place can provide location relevant learning resources ( Kukulska-Hulme et al., 2015 ), enhancing the ecology of tools available for place-based learning. However, prompts to action from digital devices might also be seen as culturally inappropriate in informal, community based learning, where educational activities and their deployment need to be considered with sensitivity ( Gaved and Peasgood, 2017 ).

Critical thinking and problem solving are central to this experiential-based approach to learning. Contextually based, place-based learning requires creativity and innovation by participants to manage and respond to often unexpected circumstances with unexpected learning opportunities and outcomes likely to arise. As an often social form of learning, communication and collaboration are key skills developed, with a need to show sensitivity to local circumstances. An ability to learn the skills to manage social and cross-cultural interactivity will be central for a range of subject areas taught through place-based learning, such as language learning or human geography. Increasingly, place-based learning is enhanced or augmented by mobile and networked technologies, so digital literacy skills need to be acquired to take full advantage of the tools now available.

Place-based learning re-associates learning with local contexts, at a time when educators are under pressure to fit into national curricula and a globalized world. It seeks to re-establish students with a sense of place, and recognize the opportunities of learning in and from local community settings, using neighborhoods as the specific context for experiential and problem-based learning. It can provide a mechanism for decolonizing curriculum, recognizing that specific spaces can be understood to have different meanings to different groups of people, and allowing diverse voices to be represented. Digital and networked technologies extend the potential for group and individual learning, reaching out and sharing knowledge with a wider range of stakeholders, enabling flexibility in learning, and a greater scale of interactions. Networked tools enable access to global resources, and learning beyond the internet, with smartphones and tablets (increasingly owned by the learners themselves) as well as other digital tools linked together for gathering, analyzing and reflecting on data and interactions. Context and location aware technologies can trigger learning resources on personal devices, and augment physical spaces: augmented reality tools can dynamically overlay data layers and context sensitive virtual information ( Klopfer and Squire, 2008 ; Wu et al., 2013 ).
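As an illustration of how a location-aware tool might trigger learning resources, the sketch below checks whether a learner is within a given radius of a point of interest and returns the resources attached to it. It is a simplified assumption-laden example rather than a description of any cited system: the `POINTS` data, the `resources_near` helper, and the 50 m default radius are hypothetical. Distances are computed with the standard haversine formula on a spherical Earth.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius, spherical approximation

# Hypothetical points of interest; names, coordinates, and resources are made up.
POINTS = [
    {"name": "Old mill", "lat": 52.0, "lon": -0.7,
     "resource": "Audio clip on the mill's history"},
]


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in meters between two latitude/longitude pairs."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    d_phi = phi2 - phi1
    d_lambda = math.radians(lon2 - lon1)
    a = (math.sin(d_phi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(d_lambda / 2) ** 2)
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def resources_near(lat, lon, points=POINTS, radius_m=50.0):
    """Return the resources for every point within radius_m of the learner."""
    return [
        p["resource"]
        for p in points
        if haversine_m(lat, lon, p["lat"], p["lon"]) <= radius_m
    ]


if __name__ == "__main__":
    print(resources_near(52.0, -0.7))    # learner standing at the point
    print(resources_near(52.001, -0.7))  # learner roughly 111 m away
```

In practice, whether and when to prompt the learner is a pedagogical and cultural decision as much as a technical one, so a trigger radius like this would need to be chosen with the community setting in mind.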

Place-based learning could be said to pre-date formal classroom based learning in the traditional sense of work based learning (e.g., apprenticeships), or informal learning (e.g., informal language learning). Aspects of place-based learning have a long heritage, such as environmental education and learning through overcoming neighborhood challenges, with the focus on taking account of learning opportunities “beyond the schoolhouse gate” ( Theobald and Curtiss, 2000 ). Place-based learning aligns with current pedagogical interests in education that is “multidisciplinary, experiential, and aligned with cultural and ecological sustainability” ( Webber and Miller, 2016 , p.1067).

Learning With Robots

Learning through interaction and then reflecting upon the outcomes of these interactions prompted Papert (1980) to develop the Logo Turtles. It can be argued that these turtles were one of the first robots to be used in schools whose theoretical premises were grounded within a Constructivist approach to learning. Constructivism translates into a pedagogy where students actively engage in experimental endeavors often based within real–world problem solving undertakings. This was how the first turtles were used to assist children to understand basic mathematical concepts. Logo turtles have morphed into wheeled robots in current Japanese classrooms where 11- and 12-year olds learn how to program them and then compete in teams to create the code needed to guide their robots safely through an obstacle course. This latter approach encourages children to “Think and Learn Together with Information and Communication Technology” as discussed by Dawes and Wegerif (2004) . Vygotsky's theoretical influence is then foregrounded in this particular pedagogical context, where his sociocultural theory recognizes and emphasizes the role of language within any social interaction to prompt cognitive development.

The early work of Papert has been well documented, but more recently Benitti (2012) reviewed the literature about the use of robotics in schools. The conclusions reached from this meta-analysis, where the purpose of each study was taken into account, together with the type of robot used and the demographics of the children who took part in the studies, suggested that the use of robots in classrooms can enhance learning. This was found particularly with the practical teaching in STEM subjects, although some studies did not reveal improvements in learning. Further work by Ospennikova et al. (2015) showed how this technology can be applied to teaching physics in Russian secondary schools and supports the use of learning with robots in STEM subjects. Social robots for early language learning have been explored by Kanero et al. (2018) ; this has proved to be positive for story telling skills ( Westlund and Breazeal, 2015 ). Kim et al. (2013) have illustrated that social robots can assist with the production of more speech utterances for young children with ASD. However, none of the above studies illustrate that robots are more effective than human teachers, but this pedagogy is ripe for more research findings.

Teaching a robot to undertake a task through specific instructions mimics the way human teachers behave with pupils when they impart a rule set or heuristics to the pupils using a variety of rhetoric techniques in reaction to the learner's latest attempt at completing a given task. This modus operandi has been well documented by Jerome Bruner and colleagues and has been termed as “scaffolding” ( Wood et al., 1979 ). This latter example illustrates a growing recognition of the expanding communicative and expressive potential found through working with robots and encouraging teamwork and collaboration.

The robot can undertake a number of roles, with different levels of involvement in the learning task. Some of the examples mentioned above demonstrate the robot taking on a more passive role ( Mubin et al., 2013 ). This is when it can be used to teach programming, such as moving the robot on a physical route with many obstacles. Robots can also act as peers and learn together with the student, or act as teachers themselves. The “interactive cat” (iCat) developed by Philips Research is an example of a robotic teacher helping language learning. It has a mechanically rendered cat face and can express emotion. This was an important feature with respect to social supportiveness, an important attribute belonging to human tutors. Research showed that socially supportive behavior exhibited by the robot tutor had a positive effect on students' learning. The supportive behaviors exhibited by the iCat tutor were non-verbal behavior, such as smiling, attention building, empathy, and communicativeness.

Interest in learning with robots in the classroom and beyond is growing but purchasing expensive equipment which will require technical support can prevent adoption. There are also ethical issues that need to be addressed since “conversations” with embodied robots that can support both learning and new forms of assessment must all sustain equity within an ethical framework. As yet these have not been agreed within the AI community.

Learning With Drones

Outdoor fieldwork is a long-standing student-centered pedagogy across a range of disciplines, which is increasingly supported by information technology ( Thomas and Munge, 2015 , 2017 ). Within this tradition, drone-based learning, a recent innovation, is being used to support fieldwork by enhancing students' capability to explore outdoor physical environments. When students engage in outdoor learning experiences, reflect on those experiences, conceptualize their learning and experiment with new actions, they are engaging in experiential learning ( Kolb, 1984 ). The combination of human senses with the multimedia capabilities of a drone (image and video capture) means that the learning experience can be rich and multimodal. Another key aspect is that learning takes place through research, scientific data collection and analysis; drones are typically used to assist with data collection from different perspectives and in places that can be difficult to access. In the sphere of informal and leisure learning in places such as nature reserves and cultural heritage sites ( Staiff, 2016 ), drone-based exploration is based on discovery and is a way to make the visitor experience more attractive.

There is not yet much research evidence on drone-based learning, but there are some case studies, teachers' accounts based on observations of their students, and pedagogically-informed suggestions for how drones may be applied to educational problems and the development of students' knowledge and practical skills. For example, a case study conducted in Malaysia with postgraduate students taking a MOOC ( Zakaria et al., 2018 ) was concerned with students working on a video creation task using drones, in the context of problem-based learning about local issues. The data analysis showed how active the students had been during a task which involved video shooting and editing/production. In the US, it was reported that a teacher introduced drones to a class of elementary students with autism in order to enhance their engagement and according to the teacher the results were “encouraging” since the students stayed on task better and were more involved with learning ( Joch, 2018 ). In the context of education in Australia, Sattar et al. (2017) give suggestions for using drones to develop many kinds of skills, competences and understanding in various disciplines, also emphasizing the learners' active engagement.

Sattar et al. (2017) argue that using drone technology will prepare and equip students with the technical skills and expertise which will be in demand in future, enhance their problem-solving skills and help them cope with future technical and professional requirements; students can be challenged to develop skills in problem-solving, analysis, creativity and critical thinking. Other ideas put forward in the literature suggest that drone-based learning can stimulate curiosity to see things that are hidden from view, give experience in learning through research and analyzing data, and it can help with visual literacies including collecting visual data and interpreting visual clues. Another observation is that drone-based learning can raise issues of privacy and ethics, stimulating discussion of how such technologies should be used responsibly when learning outside the classroom.

Drones enable learners to undertake previously impossible actions on field trips, such as looking inside inaccessible places or inspecting a landscape from several different perspectives. There is opportunity for rich exploration of physical objects and spaces. Drone-based learning can be a way to integrate skills and literacies, particularly orientation and motor skills with digital literacy. It is also a new way to integrate studies with real world experiences, showing students how professionals including land surveyors, news reporters, police officers and many others use drones in their work. Furthermore, it has been proposed as an assistive technology, enabling learners who are not mobile to gain remote access to sites they would not be able to visit ( Mangina et al., 2016 ).

Accounts of adoption into educational practice suggest that early adopters with an interest in technology have been the first to experiment with drones. There are more accounts of adoption in community settings, professional practice settings and informal learning than in formal education at present. For example, Hodgson et al. (2018) describe how ecologists use drones to monitor wildlife populations and changes in vegetation. Drones can be used to capture images of an area from different angles, enabling communities to collect evidence of environmental problems such as pollution and deforestation. They are used after earthquakes and hurricanes, to assess the damage caused by these disasters, to locate victims, to help deliver aid, and to enhance understanding of assistance needs ( Sandvik and Lohne, 2014 ). They also enable remote monitoring of illegal trade without having to confront criminals.

Citizen Inquiry

Citizen science is an increasingly popular activity with the potential to support growth and development in learning science through active participation by the public in scientific research. It can educate the public, including young people, support the development of skills needed for the workplace, and contribute to the findings of real science research. An experience that allows people to become familiar with the work of scientists and learn to do their own science has potential for learning. Citizen science activities can take place online on platforms such as Zooniverse, which hosts some of the largest internet-based citizen science projects, or nQuire ( nQuire.org.uk ), which scaffolds a wide range of inquiries, or offline in a local area (e.g., a bioblitz). In addition, mobile and networked technologies have opened up new possibilities for these investigations (see e.g., Curtis, 2018 ).

Most current citizen science initiatives engage the general public in some way. For example, members of the public may act as volunteers, often non-expert individuals, in projects generated by scientists such as species recognition and counting. In these types of collaboration the public contributes to data collection and analysis tasks such as observation and measurement. The key theory which underpins this work is that of inquiry learning. “ Inquiry-based learning is a powerful generalized method for coming to understand the natural and social world through a process of guided investigation ” ( Sharples et al., 2013 , p.38). It has been described as a powerful way to promote learning by encouraging learners to use higher-order thinking skills during the conduct of inquiries and to make connections with their world knowledge.

Inquiry learning is a pedagogy with a long pedigree. First proposed by Dewey as learning through experience it came to the fore in the discovery learning movement of the sixties. Indeed, the term citizen inquiry has been coined which “ fuses the creative knowledge building of inquiry learning with the mass collaborative participation exemplified by citizen science, changing the consumer relationship that most people have with research to one of active engagement ” ( Sharples et al., 2013 , p.38).

Researchers using this citizen inquiry paradigm have described how it “ shifts the emphasis of scientific inquiry from scientists to the general public, by having non-professionals (of any age and level of experience) determine their own research agenda and devise their own science investigations underpinned by a model of scientific inquiry. It makes extensive use of web 2.0 and mobile technologies to facilitate massive participation of the public of any age, including youngsters, in collective, online inquiry-based activities” ( Herodotou et al., 2017b ). This shift offers more opportunities for learning in these settings.

Research has shown that learning can be developed in citizen science projects. Herodotou et al. (2018), citing a review by Bonney et al. (2009), found that systematic involvement in citizen science projects produces learning outcomes in a number of ways, including increased accuracy and a greater degree of self-correction of observations. A number of studies have examined the learning that takes place during the use of iSpot (see Scanlon et al., 2014; Silvertown et al., 2015). Preliminary results showed that novice users can reach a fairly sophisticated understanding of identification over time (Scanlon et al., 2014). Also, Aristeidou et al. (2017, p.252) examined citizen science activities on nQuire and reported that some participants perceived learning as a reason for feeling satisfied with their engagement, with comments such as “insight into some topics” and “new information.”

Through an online survey, Edwards et al. (2017) reported that citizen science participants in the UK Wetland Bird Survey and the Nest Record Scheme had learned along various dimensions. This learning was related in part to their prior levels of education. Overall, a growing number of studies are investigating the relationship between citizen science and learning, with some positive indications that projects can be designed to encourage learning (further studies on learning from citizen science are discussed by Ballard et al., 2017, and Boakes et al., 2016).

The skills citizens need in the twenty-first century are ones that citizen science projects can help develop. They “need the skills and knowledge to solve problems, evaluate evidence, and make sense of complex information from various sources.” (Ferguson et al., 2017, p.12). As noted by OECD (2015), a significant skill students need to develop is learning to “think like a scientist.” This is perceived as an essential skill across professions, not only the science-related ones. In particular, STEM education and jobs are no longer viewed as options for the few or for the “gifted.” “Engagement with STEM can develop critical thinking, teamwork skills, and civic engagement. It can also help people cope with the demands of daily life. Enabling learners to experience how science is made can enhance their content knowledge in science, develop scientific skills and contribute to their personal growth. It can also increase their understanding of what it means to be a scientist” (Ferguson et al., 2017, p.12).

One of the innovations of this approach is that it enables potentially any citizen to engage with and understand scientific activities that are often locked behind the walls of experimental laboratories. Thinking scientifically should not be restricted to scientists; it should be a competency that citizens develop in order to engage critically with and reflect on their surroundings. Such skills will enable a critical understanding of public debates, such as those around fake news, and more active citizenship. Technologically, the development of these skills can be supported by platforms such as nQuire, the vision of which is to scaffold the process of scientific research and facilitate the development of relevant skills amongst citizens.

Citizen science activities are mainly found in informal learning settings, with rather limited adoption in formal education. “For example, the Natural History Museum in London offers citizen science projects that anyone can join as an enjoyable way to interact with nature. Earthworm Watch is one such project that runs every spring and autumn in the UK. It is an outdoor activity that asks people to measure soil properties and record earthworms in their garden or in a local green space. Access to museums such as the Natural History Museum is free of charge allowing all people, no matter what their background, to interact with such activities and meet others with similar interests.” (Ferguson et al., 2017, p.13). At the moment, adoption depends on individual educators rather than on policy. Two Open University examples are the incorporation of the iSpot platform into courses ranging from short courses such as Neighborhood Nature to MOOCs such as An introduction to Ecology on the FutureLearn platform. In recent years there have been more accounts of citizen science projects within school settings (see e.g., Doyle et al., 2018; Saunders et al., 2018; Schuttler et al., 2018).

In this paper, we discussed six innovative approaches to teaching and learning that originated from seven Innovating Pedagogy reports ( Sharples et al., 2012 , 2013 , 2014 , 2015 , 2016 ; Ferguson et al., 2017 , 2019 ), drafted between 2012 and 2019 by leading academics in Educational Technology at the OU and institutions in the US, Singapore, Israel, and Norway. Based upon an extensive peer-review by seven OU authors, evidence and impact of six promising innovative approaches were gathered, namely formative analytics, teachback, place-based learning, learning with robots, learning with drones, and citizen inquiry. For these six approaches there is strong or emerging evidence that they can effectively contribute to the development of skills and competences such as critical thinking, problem-solving, digital literacy, thinking like a scientist, group work, and affective development.

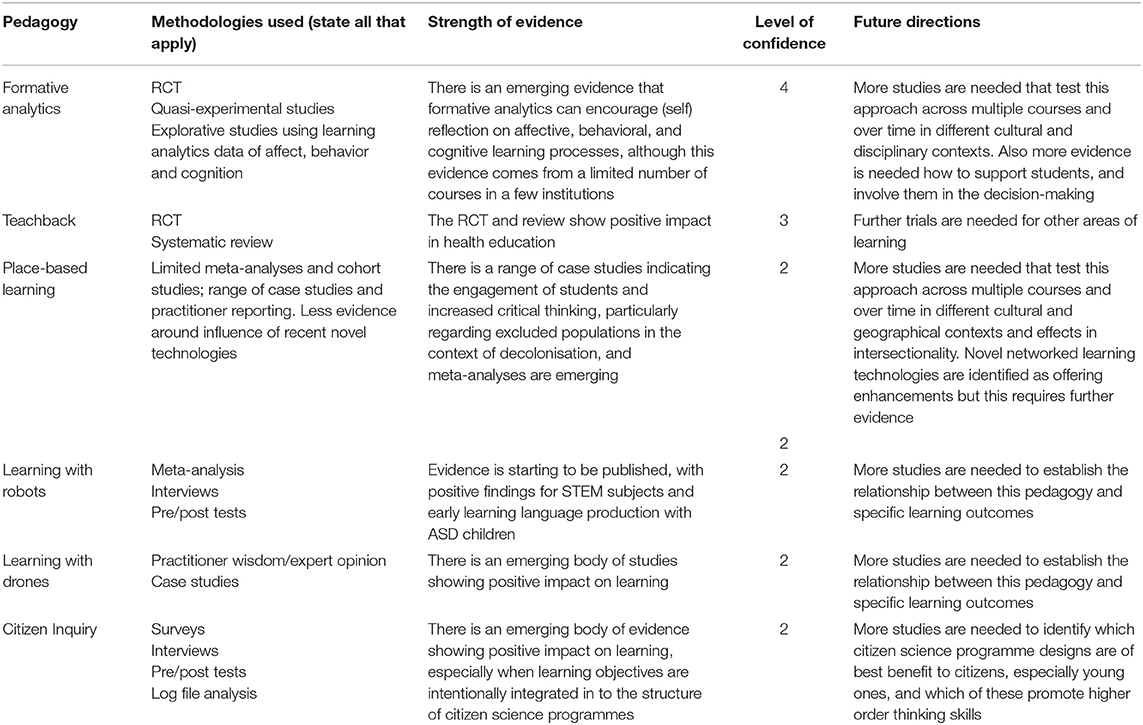

The maturity of each pedagogy in terms of evidence generation varies, with some pedagogies, such as learning with drones, being less mature and others, such as formative analytics, being more advanced. In Table 1, we used the evidence classifications in Figures 1, 2 to provide our own assessment of the overall quality of evidence (strength of evidence and level of confidence (scale 1–5) based on Nesta's standards of evidence shown in Figure 2) for each pedagogy, as a means to identify gaps in current knowledge and direct future research efforts.

Table 1 . Future directions of selected pedagogies.

Figure 2 . Standards of evidence by Nesta.

The proposed pedagogies have great potential to reduce the distance between aspirations or vision for the future of education and current educational practice. This is evident in their relevance to effective educational theories including experiential learning, inquiry learning, discovery learning, and self-regulated learning, all of which are interactive and engaging ways of learning. Also, the review of existing evidence showcases their potential to support learning processes and desirable learning outcomes in both the cognitive and emotional domains. Yet, this list of pedagogies is not exhaustive; additional pedagogies that could potentially meet the selection criteria (and which can be found in the Innovating Pedagogy report series) are, for example, playful learning, emphasizing the need for play, exploration and learning through failure; virtual studios, stressing learning flexibility through arts and design; and dynamic assessment, during which assessors support learners in identifying and overcoming learning difficulties.

Conclusions

In this paper we presented six approaches to teaching and learning and stressed the importance of evidence in transforming educational practice. We devised and applied an integrated framework for selection that could be used by both researchers and educators (teachers, pre-service teachers, educational policy makers etc.) as an assessment tool for reflecting on and assessing specific pedagogical approaches, either currently in practice or intended to be used in education in the future. Our framework goes beyond existing frameworks that focus primarily on the development of skills and competences for the future, by situating such development within the context of effective educational theories, evidence from research studies, innovative aspects of the pedagogy, and its adoption in educational practice. We made the case that learning is a science and that testing learning interventions and teaching approaches before applying them in practice should be a requirement for improving learning outcomes and meeting the expectations of an ever-changing society. We hope this work will spark further dialogue between researchers and practitioners and signal the necessity for evidence-based professional development that will inform and enhance teaching practice.

Author Contributions

CH: introduction, discussion, conclusion sections, revision of manuscript. MS: teachback. MG: place-based learning. BR: formative analytics. ES: citizen inquiry. AK-H: learning with drones. DW: learning with robots.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

Anderson, T., and Shattuck, J. (2012). Design-based research: a decade of progress in education research? Educ. Res. 41, 16–25. doi: 10.3102/0013189X11428813


Aristeidou, M., Scanlon, E., and Sharples, M. (2017). “Design processes of a citizen inquiry community,” in Citizen Inquiry: Synthesising Science and Inquiry Learning, eds C. Herodotou, M. Sharples, and E. Scanlon (Abingdon: Routledge), 210–229. doi: 10.4324/9781315458618-12

Azevedo, R., Harley, J., Trevors, G., Duffy, M., Feyzi-Behnagh, R., Bouchet, F., et al. (2013). “Using trace data to examine the complex roles of cognitive, metacognitive, and emotional self-regulatory processes during learning with multi-agent systems,” in International Handbook of Metacognition and Learning Technologies, eds R. Azevedo and V. Aleven (New York, NY: Springer New York), 427–449. doi: 10.1007/978-1-4419-5546-3_28

Ballard, H. L., Dixon, C. G. H., and Harris, E. M. (2017). Youth-focused citizen science: examining the role of environmental science learning and agency for conservation. Biol. Conserv. 208, 65–75. doi: 10.1016/j.biocon.2016.05.024

Batty, R., Wong, A., Florescu, A., and Sharples, M. (2019). Driving EdTech Futures: Testbed Models for Better Evidence. London: Nesta.


Benitti, F. B. V. (2012). Exploring the educational potential of robotics in schools: a systematic review. Comput. Educ. 58, 978–988. doi: 10.1016/j.compedu.2011.10.006

Boakes, E. H., Gliozzo, G., Seymour, V., Harvey, M., Smith, C., Roy, D. B., et al. (2016). Patterns of contribution to citizen science biodiversity projects increase understanding of volunteers' recording behaviour. Sci. Rep. 6:33051. doi: 10.1038/srep33051


Bodily, R., Kay, J., Aleven, V., Jivet, I., Davis, D., Xhakaj, F., et al. (2018). “Open learner models and learning analytics dashboards: a systematic review,” in Proceedings of the 8th International Conference on Learning Analytics and Knowledge (Sydney, NSW: ACM), 41–50.

Bonney, R., Cooper, C. B., Dickinson, J., Kelling, S., Phillips, T., Rosenberg, K. V., et al. (2009). Citizen science: a developing tool for expanding science knowledge and scientific literacy. Bioscience 59, 977–984. doi: 10.1525/bio.2009.59.11.9

Bretz, S. L. (2001). Novak's theory of education: human constructivism and meaningful learning. J. Chem. Educ. 78:1107. doi: 10.1021/ed078p1107.6

Brown, J. S., Collins, A., and Duguid, P. (1989). Situated cognition and the culture of learning. Educ. Res. 18, 32–42. doi: 10.3102/0013189X018001032

Council of the European Union (2018). Council Recommendations of 22 May 2018 on Key Competences for Lifelong Learning. Brussels: Council of the European Union.

Curtis, V. (2018). “Online citizen science and the widening of academia: distributed engagement with research and knowledge production,” in Palgrave Studies in Alternative Education (Cham: Palgrave Macmillan). doi: 10.1007/978-3-319-77664-4

Dawes, L., and Wegerif, R. (2004). Thinking and Learning With ICT: Raising Achievement in Primary Classrooms. London: Routledge. doi: 10.4324/9780203506448

D'Mello, S., Dieterle, E., and Duckworth, A. (2017). Advanced, analytic, automated (AAA) measurement of engagement during learning. Educ. Psychol. 52, 104–123. doi: 10.1080/00461520.2017.1281747

Doyle, C., Li, Y., Luczak-Roesch, M., Anderson, D., Glasson, B., Boucher, M., et al. (2018). What is Online Citizen Science Anyway? An Educational Perspective. arXiv [Preprint]. arXiv:1805.00441.

Ebert-May, D., Derting, T. L., Hodder, J., Momsen, J. L., Long, T. M., and Jardeleza, S. E. (2011). What we say is not what we do: effective evaluation of faculty professional development programs. BioScience 61, 550–558. doi: 10.1525/bio.2011.61.7.9

Edwards, R., McDonnell, D., Simpson, I., and Wilson, A. (2017). “Educational backgrounds, project design and inquiry learning in citizen science,” in Citizen Inquiry: Synthesising Science and Inquiry Learning, eds C. Herodotou, M. Sharples, and E. Scanlon (Abingdon: Routledge), 195–209. doi: 10.4324/9781315458618-11

Ernst, J., and Monroe, M. (2004). The effects of environment-based education on students' critical thinking skills and disposition toward critical thinking. Environ. Educ. Res. 10, 507–522. doi: 10.1080/1350462042000291038

Ferguson, R., Barzilai, S., Ben-Zvi, D., Chinn, C. A., Herodotou, C., Hod, Y., et al. (2017). Innovating Pedagogy 2017: Open University Innovation Report 6. Milton Keynes: The Open University.

Ferguson, R., and Clow, D. (2017). “Where is the evidence? A call to action for learning analytics,” in Proceedings of the 6th Learning Analytics Knowledge Conference (Vancouver, BC: ACM), 56–65.

Ferguson, R., Coughlan, T., Egelandsdal, K., Gaved, M., Herodotou, C., Hillaire, G., et al. (2019). Innovating Pedagogy 2019: Open University Innovation Report 7. Milton Keynes: The Open University.