My Data Mesh Thesis

I wanted to write a post with my thesis about the Data Mesh paradigm coined by Zhamak Dehghani. To be honest, I’m still wondering what a Data Mesh is.

After reading articles, watching videos, and talking to other people who wonder the same thing, you realize that some ideas differ considerably. The Data Mesh paradigm rests on some abstract ideas, and hence it has different interpretations. Still, if I had to give a short definition of a Data Mesh, I would pick the following:

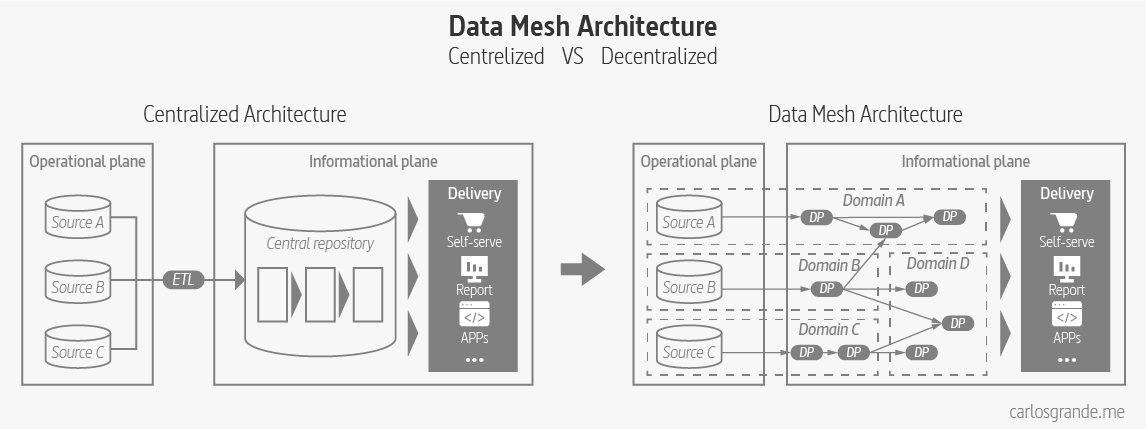

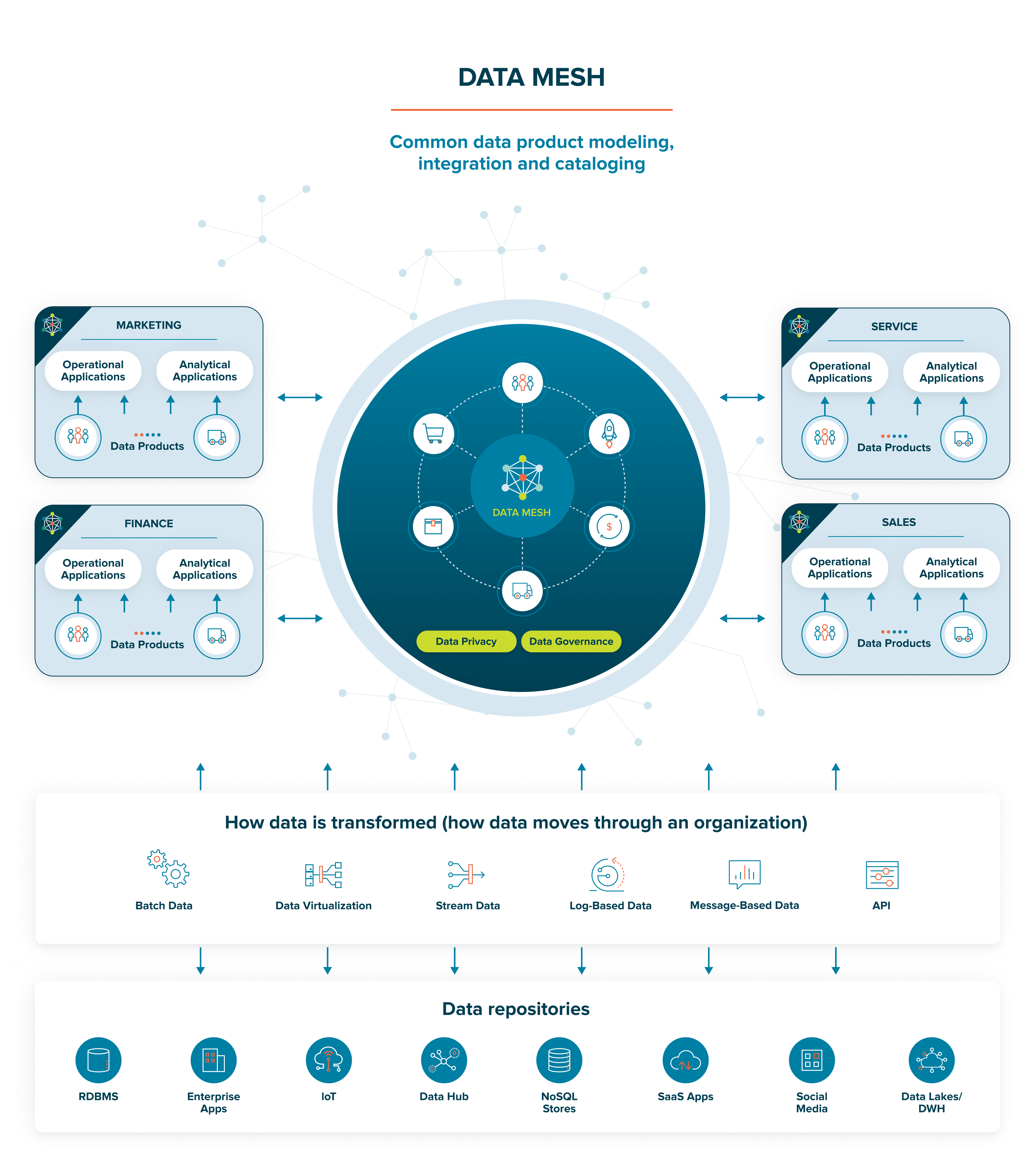

A Data Mesh is a technique for decentralizing the ownership, transformation, and serving of data. It is proposed as an alternative to centralized architectures, whose growth is limited by their dependencies and complexity.

Data Mesh: Centralized VS decentralized architecture

The goal of this article is to bring some clarity to the concepts associated with a Data Mesh, telling the story end to end with a thesis structure. This is just my personal, public attempt to make sense of all the information I have found on the subject.

CONTENTS:

1. Context

- 1.1 First Generation: Data Warehouse Architecture
- 1.2 Second Generation: Data Lake Architecture
- 1.3 Third Generation: Multimodal Cloud Architecture

2. Principles

- 2.1 Domain-oriented ownership
- 2.2 Data as a Product
- 2.3 Self-serve Data Platform
- 2.4 Federated Computational Governance

3. Data Mesh Architecture

- 3.1 Data Product
- 3.2 Roles
- 3.3 Self-Serve Platform
- 3.4 Data Governance
- 3.5 BluePrint

4. Data Mesh Implementation

- 4.1 Investment Factors
- 4.2 Building a Data Mesh

5. Case Studies

- 5.1 Zalando
- 5.2 Intuit
- 5.3 Saxo Bank
- 5.4 JP Morgan Chase
- 5.5 Kolibri Games
- 5.6 Netflix
- 5.7 Adevinta
- 5.8 HelloFresh

6. Conclusion

7. Data Mesh Vocabulary

8. Discovery Roadmap

- 8.1 Zhamak Dehghani
- 8.2 Introductory Content
- 8.3 Deep Dive

Acknowledgements

References and links

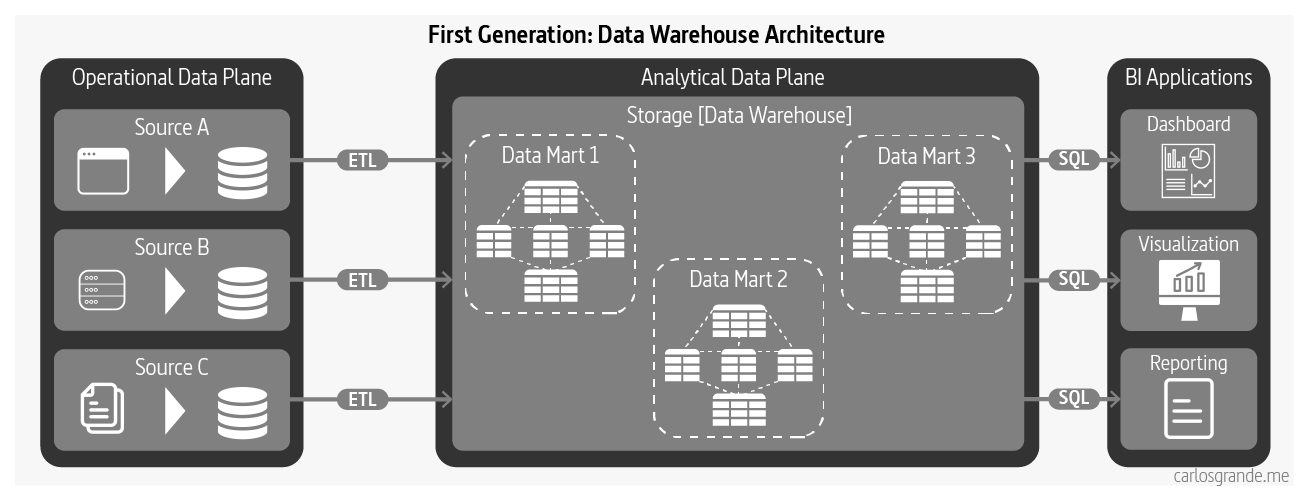

1.1 First Generation: Data Warehouse Architecture

Data warehousing architecture today is influenced by early concepts such as facts and dimensions formulated in the 1960s. The architecture intends to flow data from operational systems to business intelligence systems that traditionally have served the management with operations and planning of an organization. While data warehousing solutions have greatly evolved, many of the original characteristics and assumptions of their architectural model remain the same. (Dehghani, 2022)

Data Warehouse Architecture

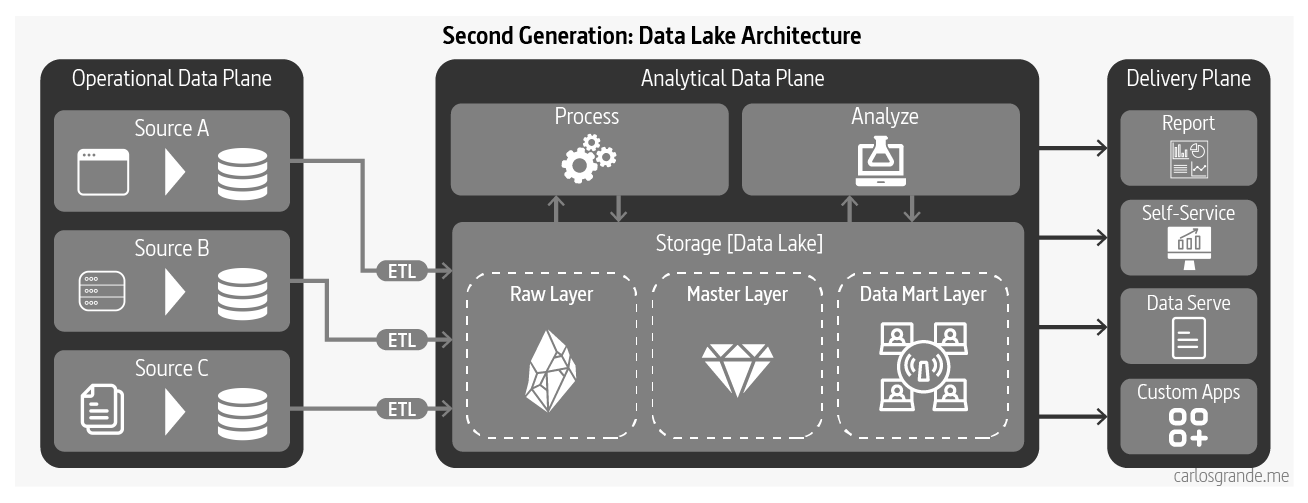

1.2 Second Generation: Data Lake Architecture

Data lake architecture was introduced in 2010 in response to the challenges data warehousing architecture faced in satisfying the new uses of data: access to data for data science and machine learning model training workflows, and support for massively parallelized access to data.

Unlike data warehousing, data lake assumes no or very little transformation and modeling of the data upfront; it attempts to retain the data close to its original form. Once the data becomes available in the lake, the architecture gets extended with elaborate transformation pipelines to model the higher value data and store it in lakeshore marts.

This evolution of data architecture aims to reduce the ineffectiveness and friction introduced by the extensive upfront modeling that data warehousing demands. The upfront transformation is a blocker and leads to slower iterations of model training. Additionally, it alters the nature of the operational system’s data and mutates the data in a way that models trained with transformed data fail to perform against the real production queries. (Dehghani, 2022)

Data Lake Architecture

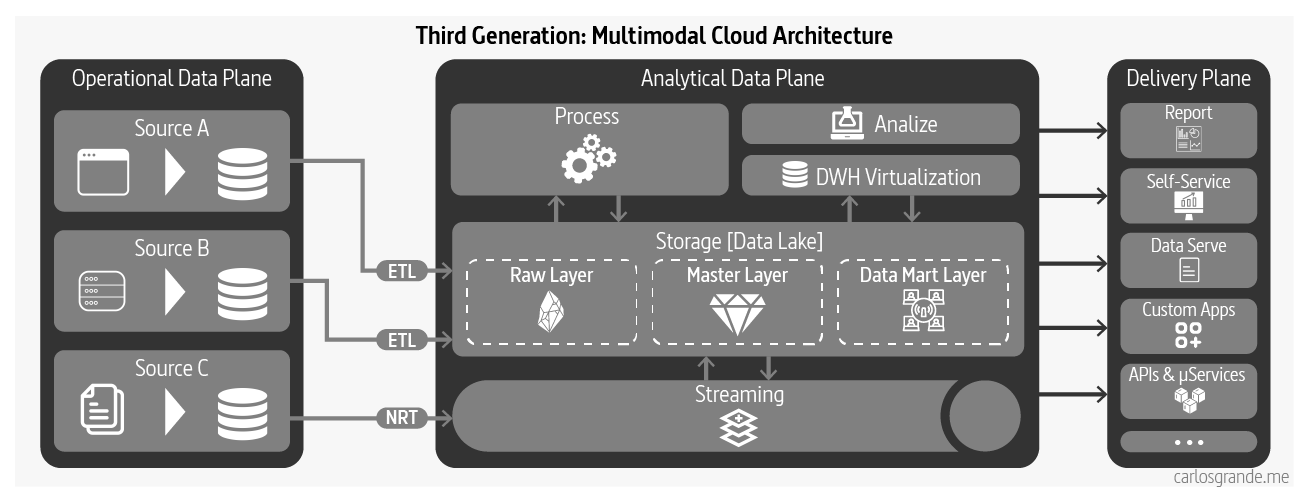

1.3 Third Generation: Multimodal Cloud Architecture

The third and current generation data architectures are more or less similar to the previous generations, with a few modern twists:

- Streaming for real-time data availability with architectures such as Kappa

- Attempting to unify the batch and stream processing for data transformation with frameworks such as Apache Beam

- Fully embracing cloud based managed services with modern cloud-native implementations with isolated compute and storage

- Convergence of warehouse and lake, either extending the data warehouse to include embedded ML training, e.g. Google BigQuery ML, or alternatively building data warehouse integrity, transactionality, and querying systems into data lake solutions, e.g. Databricks Lakehouse

The third generation data platform addresses some of the gaps of the previous generations, such as real-time data analytics, and reduces the cost of managing big data infrastructure. However, it suffers from many of the underlying characteristics that have led to the limitations of the previous generations. (Dehghani, 2022)

Multimodal Cloud Architecture

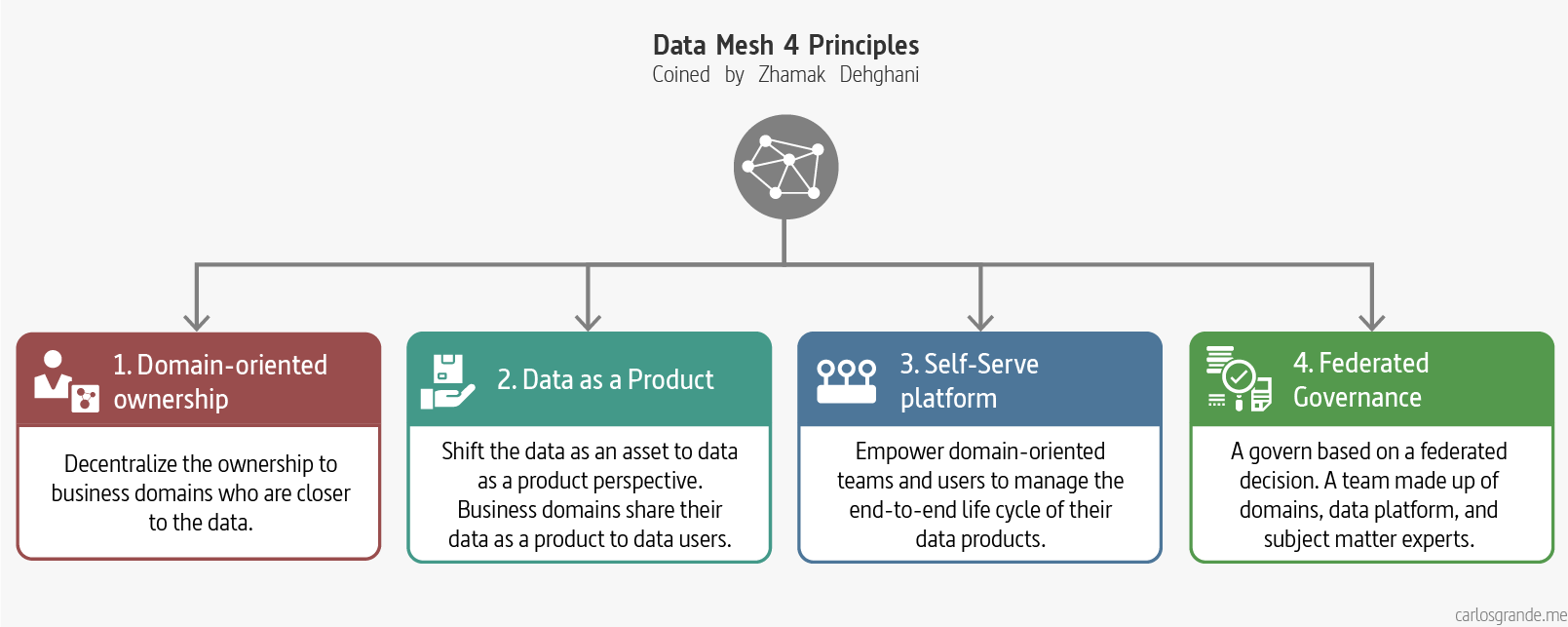

2. Principles

Data Mesh Principles

2.1 Domain-oriented ownership

This principle aims to decentralize the ownership of sharing analytical data to the business domains that are closest to the data: those that are either the source of the data or its main consumers. Decompose the data artefacts (data, code, metadata, policies) logically, based on the business domain they represent, and manage their life cycles independently. (Dehghani, 2022)

2.2 Data as a Product

This simply means applying widely used product thinking to data and, in doing so, making data a first-class citizen: supporting operations with its owner and development team behind it.

Existing or new business domains become accountable for sharing their data as a product served to data users such as data analysts and data scientists. Data as a product introduces a new unit of logical architecture called the data product quantum, which controls and encapsulates all the structural components (data, code, policy, and infrastructure dependencies) needed to share data as a product autonomously. (Dehghani, 2022)

2.3 Self-serve Data Platform

The principle of creating a self-serve infrastructure is to provide tools and user-friendly interfaces so that generalist developers can develop analytical data products where, previously, the sheer range of operational platforms made this incredibly difficult.

This calls for a new generation of self-serve data platform that empowers domain-oriented teams to manage the end-to-end life cycle of their data products, to manage a reliable mesh of interconnected data products and share the mesh’s emergent knowledge graph and lineage, and to streamline the experience of data consumers as they discover, access, and use the data products. (Dehghani, 2022)

2.4 Federated Computational Governance

This is an inevitable consequence of the first principle. Wherever you deploy decentralised services—microservices, for example—it’s essential to introduce overarching rules and regulations to govern their operation. As Dehghani puts it, it’s crucial to "maintain an equilibrium between centralisation and decentralisation".

This principle calls for a data governance operating model based on a federated decision-making and accountability structure, with a team made up of representatives from the domains, the data platform, and subject matter experts (legal, compliance, security, etc.). It creates an incentive and accountability structure that balances the autonomy and agility of domains with the global conformance, interoperability, and security of the mesh. The governance model relies heavily on codifying policies and executing them automatically at a fine-grained level, for each and every data product. (Dehghani, 2022)

3. Data Mesh Architecture

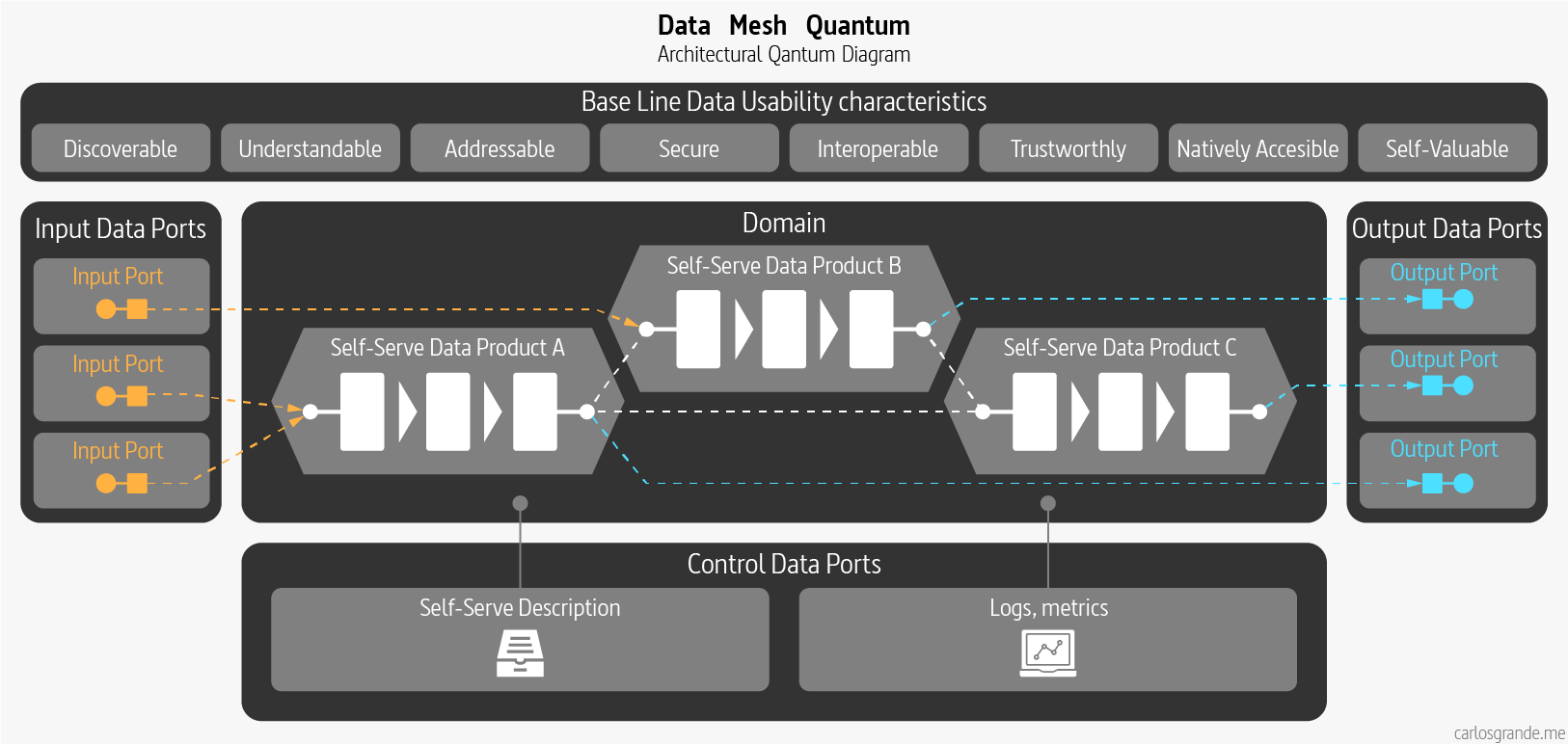

3.1 Data Product

A data product consists of the code (including pipelines), the data itself (including metadata), and the infrastructure required to run the pipelines. The goal is to have application code and data pipeline code under the same domain, owned by the same team. Responsibility shifts to the people who actually understand the domain and create the data, instead of “data” owners in the data plane who usually struggle to understand the data, which creates friction between teams. The people who change the application, and with it the data, own that change and use schema versioning and documentation to broadcast the data evolution to the different stakeholders. This way, schema changes are implemented by the data creators, instead of data analysts adapting to changes after the fact.
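
To make the schema versioning idea concrete, here is a minimal sketch of a data product described as one unit that its domain team owns. The names `DataProduct`, `SchemaVersion`, `publish_schema`, and `breaking_changes` are hypothetical and chosen for illustration only; real platforms model this in many different ways.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class SchemaVersion:
    """One published version of a data product's schema."""
    version: int
    fields: dict[str, str]  # column name -> type name
    changelog: str          # note broadcast to the data consumers


@dataclass
class DataProduct:
    """Hypothetical descriptor bundling what one domain team owns."""
    name: str
    domain: str
    owner: str
    pipeline_entrypoint: str  # where the transformation code lives
    schema_history: list[SchemaVersion] = field(default_factory=list)

    def publish_schema(self, fields: dict[str, str], changelog: str) -> SchemaVersion:
        """Append a new schema version instead of overwriting the old one."""
        new = SchemaVersion(len(self.schema_history) + 1, dict(fields), changelog)
        self.schema_history.append(new)
        return new

    def breaking_changes(self) -> list[str]:
        """Columns removed or retyped between the last two versions."""
        if len(self.schema_history) < 2:
            return []
        old = self.schema_history[-2].fields
        new = self.schema_history[-1].fields
        return sorted(col for col, typ in old.items() if new.get(col) != typ)


orders = DataProduct("orders", "sales", "sales-team", "pipelines/orders.py")
orders.publish_schema({"order_id": "string", "amount": "float"}, "initial release")
orders.publish_schema(
    {"order_id": "string", "amount": "decimal", "currency": "string"},
    "amount is now decimal; added currency",
)
print(orders.breaking_changes())  # columns that consumers should re-check
```

Keeping the full schema history, rather than only the latest schema, is what lets the owning team tell consumers exactly which columns changed and why.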

Data Mesh Quantum: Data Products

The idea behind the Domain Ownership principle is to use Domain-Driven Design in the data plane alongside the operational plane to close the gap between the two. The goal is to split teams around business domains, with each team fully cross-functional not only at the operational level (DevOps) but also at the analytical level. Each team should have a data owner, data engineers, and QA engineers who validate not only microservices but also data quality.

3.2 Roles

Following Zhamak Dehghani's book, we find two specific key roles related to a data product domain:

Data product developer roles: A group of roles responsible for developing, serving, and maintaining the domain’s data products for as long as the data products continue to exist and serve their consumers. Data product developers work alongside regular developers in the domain. Each domain team may serve one or multiple data products.

Key domain specific objects:

- Transformation code, the domain-specific logic that generates and maintains the data.

- Tests to verify and maintain the domain’s data integrity.

- Tests to continuously monitor that the data product meets its quality guarantees.

- Generation of the data product's metadata, such as its schema and documentation.
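
The integrity and quality tests in this list can start out as simple named predicates over rows. The following is a minimal sketch that assumes rows are plain dictionaries; `no_missing`, `unique`, and `run_checks` are hypothetical helpers written for this example, not functions from an existing library.

```python
from typing import Callable

Row = dict[str, object]
Check = Callable[[list[Row]], bool]


def no_missing(column: str) -> Check:
    """Integrity check: every row has a non-null value for `column`."""
    return lambda rows: all(r.get(column) is not None for r in rows)


def unique(column: str) -> Check:
    """Integrity check: values of `column` are never repeated."""
    return lambda rows: len({r.get(column) for r in rows}) == len(rows)


def run_checks(rows: list[Row], checks: dict[str, Check]) -> dict[str, bool]:
    """Run every named check and report pass/fail per check."""
    return {name: check(rows) for name, check in checks.items()}


rows = [
    {"order_id": "a1", "amount": 10.0},
    {"order_id": "a2", "amount": None},
]
report = run_checks(rows, {
    "order_id is present": no_missing("order_id"),
    "order_id is unique": unique("order_id"),
    "amount is present": no_missing("amount"),
})
print(report)
```

Running the same checks on every pipeline run, and publishing the report next to the data, is one way a team could back the quality guarantees of its data product.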

Data product owner: A role accountable for the success of the domain’s data products in delivering value, satisfying and growing the data consumers, and defining the lifecycle of the data products.

Domain data product owners must have a deep understanding of who the data users are, how they use the data, and which native methods they are comfortable using to consume it. The conversation between data users and product owners is necessary for establishing the interfaces of data products.

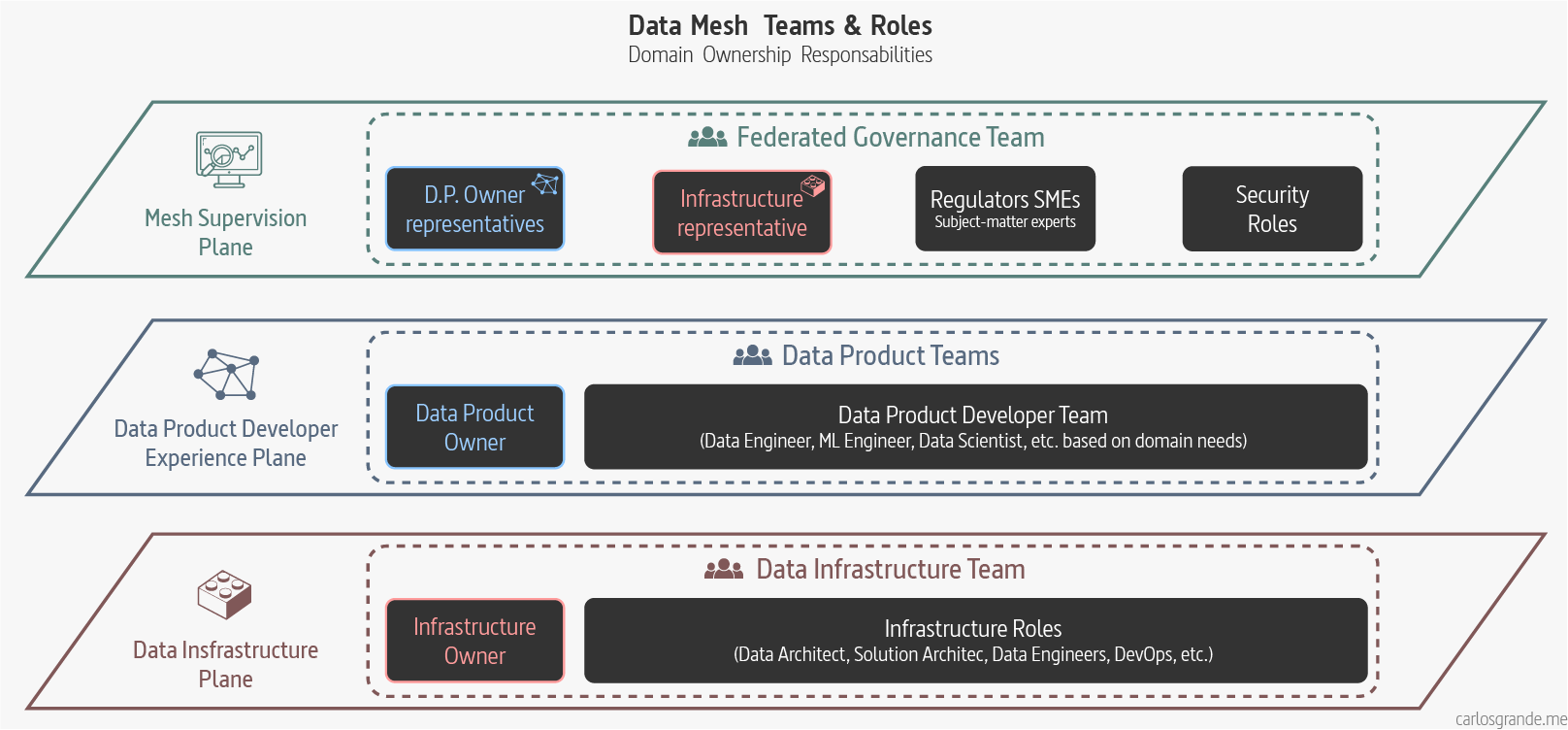

Data Mesh Architect: A role responsible for the infrastructure team, with the big picture of the Data Mesh, ensuring the data is self-served and acting as the link between the infrastructure layer and the Federated Governance team.

Following the Data Mesh layered architecture, we would have three team types:

- Data infrastructure teams (located on the infrastructure plane): providing the underlying infrastructure required to build, run, and monitor data products.

- Data product teams (located on the data product developer plane): supporting the common data product developer journey. They are made up of the data product developer roles and a data product owner.

- Federated computational governance team (located on the mesh supervision plane): maintaining an equilibrium between centralization and decentralization, that is, which decisions need to be localized to each domain and which should be made globally for all domains.

In a data mesh, each data product team is in charge of the data related to its domain. The team must gather the data and move it to the right storage so that data users can easily consume it. Data engineers are part of the team, and they may use stream engines to move the data and perform ETL, or run data pipelines in a batch or micro-batch fashion. The key is that a data pipeline is an internal implementation detail of the data domain and is handled within the domain, instead of by separate data engineering teams.

In the next visualization, I have tried to reflect the teams and roles involved in a Data Mesh. These roles are not fixed: they depend on the company, the organizational structure, and the platform, and can differ considerably. Still, it is important to highlight the different layers and the teams that belong to them.

Data Mesh Teams & Roles

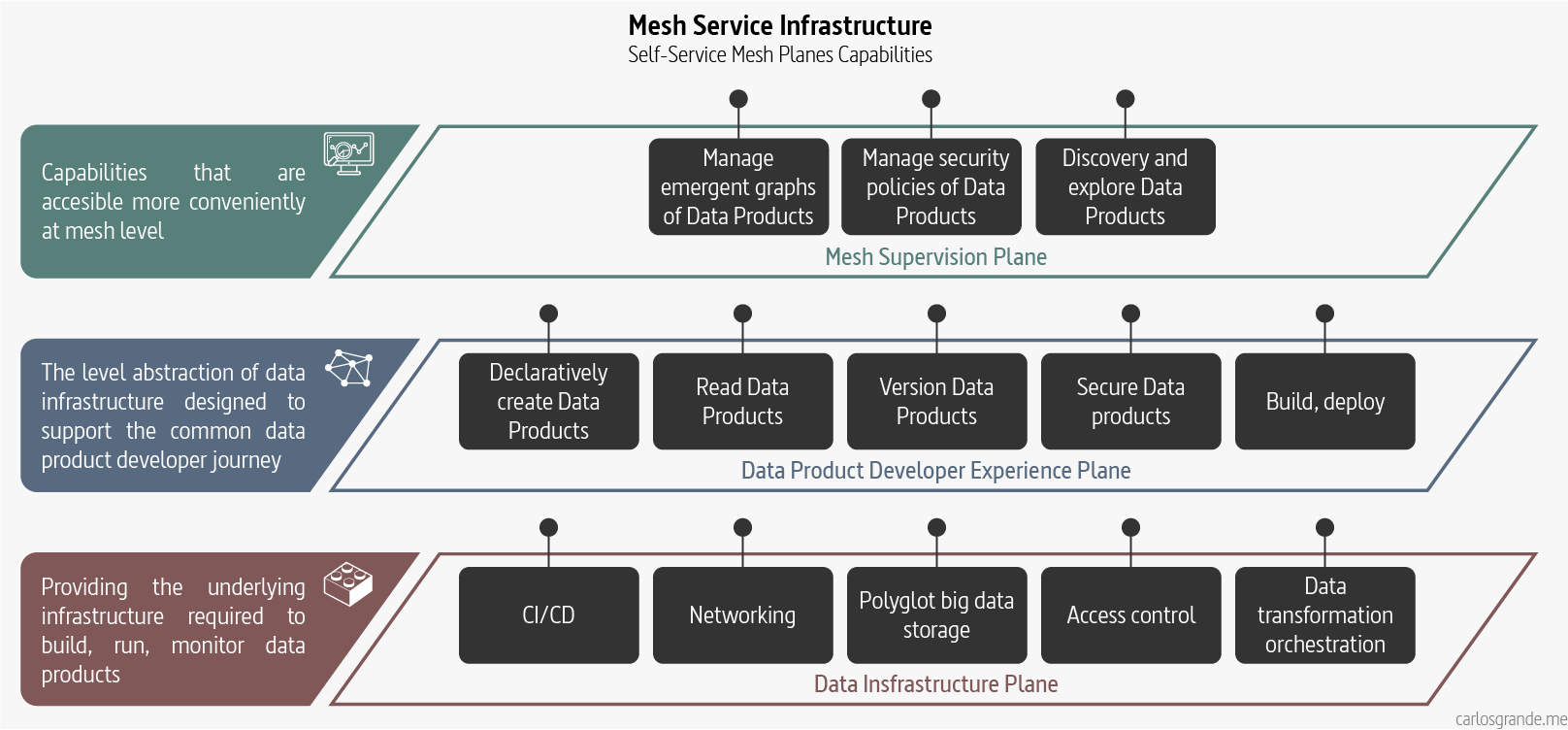

3.3 Self-Serve Platform

This is where Data Mesh’s third principle, self-serve data infrastructure as a platform, comes in. It is not that we have any shortage of data and analytics platforms and technologies, but we need to change them so that they can scale out the sharing, accessing, and use of analytical data in a decentralized manner, for a new population of generalist technologists. This is the key differentiation of data platforms that enable a Data Mesh implementation.

Mesh Service Infrastructure

3.4 Data Governance

A data mesh implementation requires a governance model that embraces decentralization, interoperability through global standardization, and automated execution of decisions in the platform. The idea is to create a team that maintains an equilibrium between centralization and decentralization, that is, which decisions need to be localized to each domain and which should be made globally for all domains.

Data mesh’s federated governance embraces change and multiple bounded contexts. A supportive organizational structure, incentive model, and architecture are necessary for the federated governance model to arrive at global decisions and standards for interoperability while respecting the autonomy of local domains, and to implement global policies effectively.

The idea is to localize decisions as close to the source as possible while keeping interoperability and integration standards at a global level, so the mesh components can be easily integrated. In a data mesh, tools can be used to enforce global policies such as GDPR enforcement or access management and also local policies where each domain sets its own policies for their data products such as access control or data retention.
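
As a rough illustration of combining global and local policies, the sketch below evaluates an access request against both sets. Everything in it is an assumption made for the example: the `AccessRequest` shape, the role names, and the rule that personal data requires a "privacy-trained" role (which is illustrative only and not an actual GDPR requirement).

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class AccessRequest:
    """Who is asking, and whether the data product holds personal data."""
    user_roles: frozenset[str]
    contains_personal_data: bool


Policy = Callable[[AccessRequest], bool]


def personal_data_needs_training(req: AccessRequest) -> bool:
    """Global rule (illustrative): personal data needs a trained user."""
    return (not req.contains_personal_data) or "privacy-trained" in req.user_roles


def requires_role(role: str) -> Policy:
    """Factory for local rules: the user must hold `role`."""
    return lambda req: role in req.user_roles


# Global policies apply to every domain; local ones are set per domain.
GLOBAL_POLICIES: list[Policy] = [personal_data_needs_training]
LOCAL_POLICIES: dict[str, list[Policy]] = {
    "sales": [requires_role("sales-analyst")],
    "marketing": [],
}


def is_allowed(domain: str, req: AccessRequest) -> bool:
    """A request passes only if all global and all local policies pass."""
    policies = GLOBAL_POLICIES + LOCAL_POLICIES.get(domain, [])
    return all(policy(req) for policy in policies)


analyst = AccessRequest(frozenset({"sales-analyst"}), contains_personal_data=True)
trained = AccessRequest(
    frozenset({"sales-analyst", "privacy-trained"}), contains_personal_data=True
)
print(is_allowed("sales", analyst), is_allowed("sales", trained))
```

The design choice worth noting is that domains can only add rules on top of the global set; they cannot switch a global rule off.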

| Pre Data Mesh Governance | Data Mesh Governance |
|---|---|
| Centralized team | Federated team |
| Responsible for data quality | Responsible for defining how to model what constitutes quality |
| Responsible for data security | Responsible for defining aspects of data security, i.e. data sensitivity levels, for the platform to build in and monitor automatically |
| Responsible for complying with regulation | Responsible for defining the regulation requirements for the platform to build in and monitor automatically |
| Centralized custodianship of data | Federated custodianship of data by domains |
| Responsible for global canonical data modeling | Responsible for modeling polysemes, data elements that cross the boundaries of multiple domains |
| Team is independent from domains | Team is made of domain representatives |
| Aiming for a well defined static structure of data | Aiming for enabling effective mesh operation embracing a continuously changing and dynamic topology of the mesh |
| Centralized technology used by monolithic lake/warehouse | Self-serve platform technologies used by each domain |
| Measure success based on number or volume of governed data (tables) | Measure success based on the network effect, the connections representing the consumption of data on the mesh |
| Manual process with human intervention | Automated processes implemented by the platform |
| Prevent error | Detect error and recover through platform's automated processing |

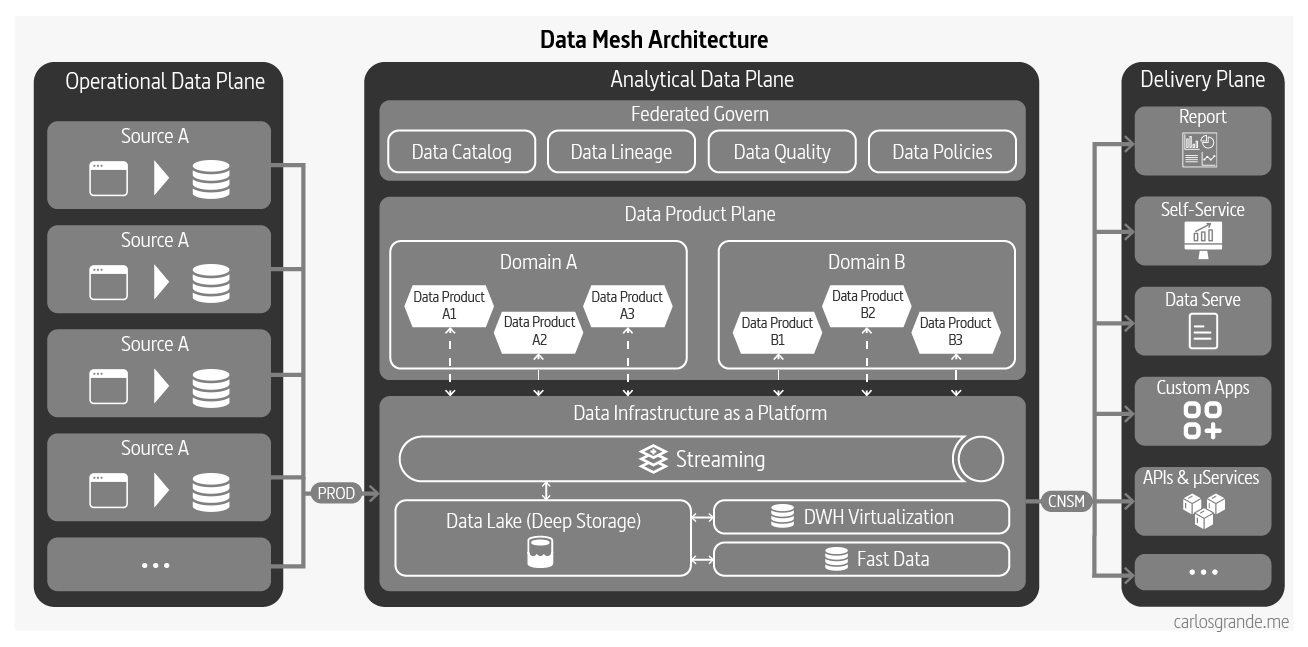

3.5 Data Mesh Architecture

There are many applied Data Mesh architectures, some more decentralized than others, built with different tools and services. Even though there is no single Data Mesh architecture, we can define its main components and how they relate.

Deep Store: a repository that makes data addressable through URLs and provides access control, versioning, encryption, metadata, and observability, so you can easily monitor and govern the data stored in a data lake.

Modern engines have been created to unify real-time and batch data and perform OLAP queries with very low latency. As an example, Apache Druid can ingest and store massive amounts of data in a cost-efficient way, reducing the need for data lakes.

Data Warehouse / Data Virtualization: A fast data layer in a relational database used for data analysis, particularly of historical data.

The main advantage of Data Virtualization is speed-to-market, where we can build a solution in a fraction of the time it takes to build a data warehouse. This is because you don’t need to design and build the data warehouse and the ETL to copy the data into it, and also don’t need to spend as much time testing.

Stream engine platform

A stream engine platform such as Kafka or Pulsar helps you migrate to microservices and unify batch and streaming. This is the first step in closing the gap between OLTP and OLAP workloads: both can use the streaming platform, either to develop event-driven microservices or to move data.

These platforms will allow you to duplicate the data in different formats or databases in a reliable way. This way you can start serving the same data in different shapes to match the needs of the downstream consumers.
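
The idea of serving the same data in different shapes can be sketched without a real broker. In the example below a plain Python list stands in for a stream of events, and two hypothetical consumers (`to_json_lines` and `to_totals_by_customer`) each derive their own copy; in practice these would be separate consumers reading from a topic.

```python
import json
from collections import defaultdict

# A list stands in for the event stream; the field names are assumptions.
events = [
    {"order_id": "a1", "customer": "c1", "amount": 10.0},
    {"order_id": "a2", "customer": "c1", "amount": 5.5},
    {"order_id": "a3", "customer": "c2", "amount": 7.0},
]


def to_json_lines(stream):
    """Shape 1: raw events serialized one per line, e.g. for a deep store."""
    return [json.dumps(event, sort_keys=True) for event in stream]


def to_totals_by_customer(stream):
    """Shape 2: an aggregate that analytical consumers can query directly."""
    totals = defaultdict(float)
    for event in stream:
        totals[event["customer"]] += event["amount"]
    return dict(totals)


raw_copy = to_json_lines(events)
totals = to_totals_by_customer(events)
print(raw_copy[0])
print(totals)
```

Both outputs come from the same events, so neither consumer depends on the other's storage format.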

Metadata and Data Catalogs

A data catalog is a collection of metadata, combined with data management and search tools, that helps analysts and other data users find the data they need, serves as an inventory of available data, and provides information to evaluate the fitness of data for intended uses.

Query Engines

These tools focus on querying different data sources and formats in a unified way. The idea is to query your data lake with SQL as if it were a relational database, although with some limitations. Some of these tools can also query NoSQL databases and much more. Query engines are the slowest option but provide the most flexibility.

Some query engines can integrate with data catalogs and join data from different data sources.
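
As a small stand-in for this kind of federated query, the sketch below uses SQLite's `ATTACH DATABASE` from the Python standard library to join tables that live in two separate databases with one SQL statement. A real query engine works across far more diverse sources; the table and column names here are invented for the example.

```python
import sqlite3

# Two separate in-memory databases play the role of two data sources.
conn = sqlite3.connect(":memory:")                 # source 1: "main"
conn.execute("ATTACH DATABASE ':memory:' AS crm")  # source 2: "crm"

conn.execute("CREATE TABLE main.orders (order_id TEXT, customer_id TEXT, amount REAL)")
conn.execute("CREATE TABLE crm.customers (customer_id TEXT, country TEXT)")
conn.executemany(
    "INSERT INTO main.orders VALUES (?, ?, ?)",
    [("a1", "c1", 10.0), ("a2", "c1", 5.5), ("a3", "c2", 7.0)],
)
conn.executemany(
    "INSERT INTO crm.customers VALUES (?, ?)",
    [("c1", "ES"), ("c2", "DE")],
)

# One SQL statement joins data that lives in both sources.
rows = conn.execute(
    """
    SELECT c.country, SUM(o.amount) AS revenue
    FROM main.orders AS o
    JOIN crm.customers AS c ON c.customer_id = o.customer_id
    GROUP BY c.country
    ORDER BY c.country
    """
).fetchall()
print(rows)
```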

Data Mesh Architecture

4. Data Mesh Implementation

Currently, there are many kinds of Data Meshes, some highly centralized and others more decentralized. Migrating to a decentralized architecture is difficult and time-consuming; it takes a long time to reach the level of maturity needed to run it at scale. Although there are technical challenges, the main difficulty is changing the organization's mindset.

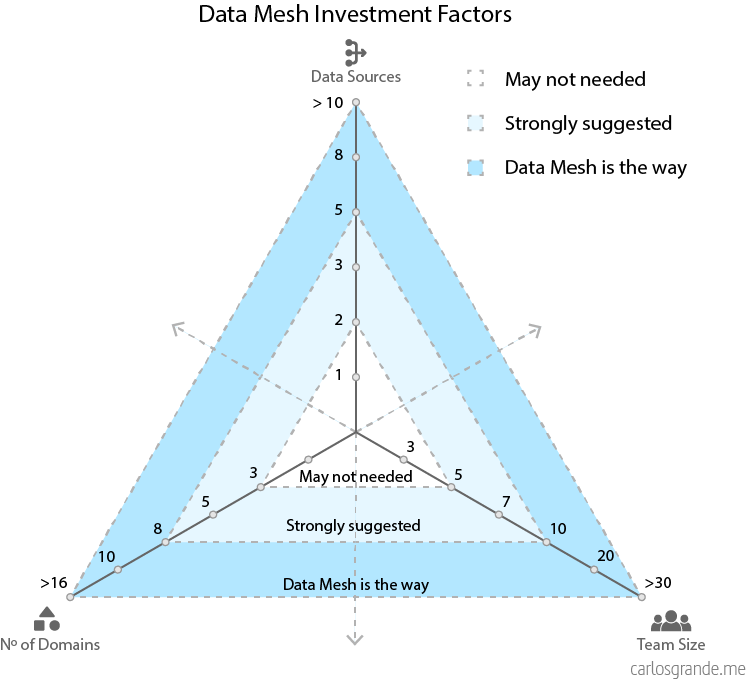

4.1 Data Mesh Investment Factors

Data Mesh is difficult to implement because of its decentralized nature, but it is ultimately required to solve the scalability issues that many companies currently face. Centralized architectures work better for small companies or companies that are not data driven.

Only implement a data mesh if you have difficulties scaling, team friction, data quality issues, bottlenecks, and governance or security problems. You should also have a Big Data problem, with huge amounts of structured and unstructured data.

In my opinion, there are many factors to analyze before deciding whether your company needs the Data Mesh paradigm. That said, after reading different articles, three main factors stand out:

- The number of data sources your company has to feed the analytical Data Platform.

- The size of your data team: how many data analysts, data engineers, and product managers you have.

- The number of data domains your company has. How many functional teams (marketing, sales, operations, etc.) rely on your data sources to drive decision-making, and how many products does your company have?

Data Mesh Investment Factors

4.2 Building a Data Mesh

In their blogs, Javier Ramos and Sven Balnojan have done an excellent job explaining the different steps required to build a data mesh. I really recommend checking their articles to get more details.

- Data Mesh Applied by Sven Balnojan

- Building a Data Mesh: A beginners guide by Javier Ramos

I have tried to summarize the different steps to decentralize your architecture and start building a Data Mesh.

Step 1: Addressable data

Address your data by standardizing path names and using the REST approach of naming data products with resource names. Add SLAs to the endpoints and monitor them to make sure the data is always available.

Reroute your query engines and BI tools to use the new data products, which are independent and addressable.

The data infrastructure team will be in charge of this step, still using a centralized approach.
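
A minimal sketch of what Step 1 could look like in code follows. The path layout (`/domains/<domain>/products/<product>/v<version>`), the naming rule, and the `FreshnessSla` class are all assumptions made for illustration; the source articles do not prescribe a specific convention.

```python
import re
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone

# Assumed naming rule: lowercase words separated by single hyphens.
_SEGMENT = re.compile(r"[a-z0-9]+(-[a-z0-9]+)*")


def data_product_path(domain: str, product: str, version: int) -> str:
    """Build a REST-style resource path for a data product."""
    for segment in (domain, product):
        if not _SEGMENT.fullmatch(segment):
            raise ValueError(f"not a valid resource name: {segment!r}")
    if version < 1:
        raise ValueError("version must be >= 1")
    return f"/domains/{domain}/products/{product}/v{version}"


@dataclass(frozen=True)
class FreshnessSla:
    """Hypothetical SLA: data must have been updated within `max_age`."""
    max_age: timedelta

    def is_met(self, last_updated: datetime, now: datetime) -> bool:
        return now - last_updated <= self.max_age


path = data_product_path("sales", "daily-orders", 1)
sla = FreshnessSla(max_age=timedelta(hours=24))
now = datetime(2024, 1, 2, 12, 0, tzinfo=timezone.utc)
fresh = sla.is_met(datetime(2024, 1, 2, 0, 0, tzinfo=timezone.utc), now)
stale = sla.is_met(datetime(2023, 12, 31, 0, 0, tzinfo=timezone.utc), now)
print(path, fresh, stale)
```

Validating names at the point where paths are built keeps the convention enforceable by the central team while ownership is still centralized.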

Step 2: Discoverability (Metadata and Data Catalog)

Create a space to find the new data source with the following capabilities:

- Search, discover and “add to the cart” for data within your enterprise.

- Request access and grant access to data products in a way that is usable to data owners and consumers without the involvement of a central team.

In this step, work on the data product features adding tests for data quality, lineage, monitoring, etc.
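
The two catalog capabilities listed above, search and owner-driven access grants, can be sketched as a tiny in-memory catalog. `CatalogEntry` and `DataCatalog` are hypothetical names for this example; a real catalog tool would add persistence, lineage, and authentication.

```python
from dataclasses import dataclass, field


@dataclass
class CatalogEntry:
    path: str
    owner: str
    description: str
    tags: set[str] = field(default_factory=set)
    pending: set[str] = field(default_factory=set)     # users awaiting approval
    granted_to: set[str] = field(default_factory=set)  # users with access


class DataCatalog:
    """In-memory sketch: search plus access requests approved by owners."""

    def __init__(self) -> None:
        self._entries: dict[str, CatalogEntry] = {}

    def register(self, entry: CatalogEntry) -> None:
        self._entries[entry.path] = entry

    def search(self, term: str) -> list[str]:
        """Return paths whose description or tags mention `term`."""
        term = term.lower()
        return sorted(
            e.path
            for e in self._entries.values()
            if term in e.description.lower() or term in {t.lower() for t in e.tags}
        )

    def request_access(self, path: str, user: str) -> None:
        self._entries[path].pending.add(user)

    def grant_access(self, path: str, owner: str, user: str) -> None:
        """Only the data product's owner can approve, no central team needed."""
        entry = self._entries[path]
        if owner != entry.owner:
            raise PermissionError(f"{owner} does not own {path}")
        if user not in entry.pending:
            raise ValueError(f"{user} has not requested access to {path}")
        entry.pending.discard(user)
        entry.granted_to.add(user)


catalog = DataCatalog()
product_path = "/domains/sales/products/daily-orders/v1"
catalog.register(CatalogEntry(
    path=product_path,
    owner="sales-team",
    description="Daily order totals per customer",
    tags={"orders", "revenue"},
))
catalog.request_access(product_path, "ana")
catalog.grant_access(product_path, owner="sales-team", user="ana")
print(catalog.search("orders"))
```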

Step 3: Decentralize and implement DDD

Now we can start adding nodes to our data mesh.

- Migrate ownership to the domain team that creates the data, moving towards a decentralized architecture. Each team must own its data assets, ETL pipelines, quality, testing, etc.

- Introduce federated governance for data standardization, security, and interoperability by adopting DataOps practices and improving observability and self-service capabilities. This way you can unify your OLTP and OLAP processes and tooling.

Once you have created your first “data microservice”, repeat the above process breaking the legacy data monolith into more decentralized services.

5. Case Studies

5.1 Zalando

An excellent presentation by Max Schultze and Arif Wider about Zalando's analytics cloud journey. The presentation begins with their legacy analytics platform and shows how they evolved it across the ingestion, storage, and serving layers.

Data Mesh in Practice: How Europe's Leading Online Platform for Fashion Goes Beyond the Data Lake by Max Schultze (Zalando)

5.2 Intuit

A great post from Tristan Baker about the strategy followed at Intuit. They have migrated from an on-premise architecture of centrally managed analytics data sets and data infrastructure tools to a fully cloud-native set of data and tools. Tristan takes you through a full articulation of their vision, inherent challenges, and strategy for building better data-driven systems at Intuit.

Intuit’s Data Mesh Strategy

5.3 Saxo Bank

An outstanding post by Sheetal Pratik about the Saxo Journey. They show how they implement the Data Mesh paradigm and focus on the Governance Framework and Data Mesh architecture. The post has very clear diagrams to explain their points.

Enabling Data Discovery in a Data Mesh: The Saxo Journey

5.4 JP Morgan Chase

An AWS blog co-authored with Anu Jain, Graham Person, and Paul Conroy from JP Morgan Chase.

They provide a blueprint for instantiating data lakes that implements the mesh architecture in a standardized way using a defined set of cloud services. The approach enables data sharing across the enterprise while giving data owners the control and visibility they need to manage their data effectively.

How JPMorgan Chase built a data mesh architecture to drive significant value to enhance their enterprise data platform

5.5 Kolibri Games

A presentation by António Fitas, Barr Moses, and Scott O'Leary. They talk about the evolution of the teams on the data and engineering side and the pain points of their setup at that point. They also discuss how to evaluate whether data mesh is right for a company and how to measure its return on investment, especially the increase in agility and in the number of decisions you deem "data driven".

Kolibri Games' Data Mesh Journey and Measuring Data Mesh ROI

5.6 Netflix

A presentation by Justin Cunningham covering in depth the Keystone Platform’s unique approach to declarative configuration and schema evolution, as well as its approach to unifying batch and streaming data and processing.

Netflix Data Mesh: Composable Data Processing - Justin Cunningham

5.7 Adevinta

An excellent post by Xavier Gumara Rigol, about how Adevinta evolved from a centralised approach to data for analytics to a data mesh by setting some working agreements.

Building a data mesh to support an ecosystem of data products at Adevinta

5.8 HelloFresh

In this blog, Clemence W. Chee describes their journey of implementing the Data Mesh principles by showing the different phases they have faced.

HelloFresh Journey to the Data Mesh

6. Conclusion

Data Mesh is neither a static platform nor an architecture. It is a continuously evolving product that may have different interpretations, but the core principles always remain: domain-driven design, decentralization, data ownership, automation, observability, and federated governance.

The most important aspect, as Zhamak Dehghani mentions, is to stop thinking about data as an asset, something we want to keep and collect. Our mental model of data should shift from an asset to a product. The moment we think about data as a product, we start caring about delighting the consumers, shifting the perspective from a producer collecting data to a producer serving data.

Starting to build a Data Mesh can be overwhelming. First, we need to understand that this is an evolutionary path that starts from your own company's vision and introduces the Mesh principles slowly. We may start by selecting two or three source-aligned use cases, locating the domains by working backwards from each use case to its sources, and empowering those teams to start serving those data products. Then, we think about the platform capabilities we need and put the platform team in place to start building this first-generation Data Mesh. Finally, we iterate with new use cases, moving towards the Data Mesh vision.

7. Data Mesh Vocabulary

Data Mesh is a complex paradigm with many abstract terms. In the following table, I have tried to collect the main vocabulary around this topic.

| Word | Definition |
|---|---|
| Data Mesh | A data mesh is a set of read-only products, designed for sharing the data on the outside for non-real-time consumption/analytics. They are shared in an interoperable way so you can combine data from multiple domains owned by each domain team. |
| Data Domain | A business domain, where experts analyze data and build reports themselves, with minimal IT support. A data domain should create and publish their data as a product for the rest of the business to consume as well. |
| Data Product | A Data Product is a collection of datasets concerning a certain topic which has risen to fulfill a certain purpose, yet which can support multiple purposes or be used as building block for multiple other data products. |
| Data Product Owner | A role accountable for the success of domain’s data products in delivering value, satisfying and growing the data consumers, and defining the lifecycle of the data products. |
| Data Mesh Architect | A role responsible for the infrastructure team, with the big picture of the Data Mesh, ensuring the data is self-served and acting as the link between the infrastructure layer and the Federated Governance team. |
| Source-aligned domain data | Analytical data reflecting the business facts generated by the operational systems. This is also called native data product. |
| Aggregate domain data | Analytical data that is an aggregate of multiple upstream domains. |
| Consumer-aligned domain data | Analytical data transformed to fit the needs of one or multiple specific use cases and consuming applications. This is also called fit-for-purpose domain data. |
| Data on the inside | Refers to the encapsulated private data contained within the service itself. As a sweeping statement, this is the data that has always been considered "normal", at least in your database class in college. The classic data contained in a SQL database and manipulated by a typical application is inside data. |
| Data on the outside | Data on the outside refers to the information that flows between these independent services. This includes messages, files, and events. It's not your classic SQL data. |
| Data product quantum | Smallest unit of architecture that can be independently deployed with high functional cohesion, and includes all the structural elements required for its function. |
| Input ports | Input data ports to the data product. |
| Output ports | Output data ports from the data product. |

8. Discovery Roadmap

To write this thesis, I have been collecting links and resources about the Data Mesh paradigm. I want to share the discovery path I followed to understand the implications of Data Mesh.

8.1 Zhamak Dehghani

How to Move Beyond a Monolithic Data Lake to a Distributed Data Mesh

Data Mesh Principles and Logical Architecture

Introduction to Data Mesh: A Paradigm Shift in Analytical Data Management by Zhamak Dehghani (Part I)

How to Build a Foundation for Data Mesh: A Principled Approach by Zhamak Dehghani (Part II)

8.2 Introductory Content

Data Mesh Score calculator

Decentralizing Data: From Data Monolith to Data Mesh with Zhamak Dehghani, Creator of Data Mesh

Data Mesh: The Four Principles of the Distributed Architecture by Eugene Berko

How to achieve Data Mesh teams and culture?

8.3 Deep Dive

Anatomy of Data Products in Data Mesh

Building a Data Mesh: A beginners guide

Data Mesh Applied: Moving step-by-step from mono data lake to decentralized 21st-century data mesh.

There’s More Than One Kind of Data Mesh: Three Types of Data Meshes

Building a successful Data Mesh – More than just a technology initiative

How the **ck (heck) do you build a Data Mesh?

Data Mesh architecture patterns

Data Mesh: Topologies and domain granularity

Acknowledgements

I am so grateful to the Data Mesh Learning Community, founded and run by Scott Hirleman. I appreciate the help from the community, the meetups, and the resources it publishes and organizes. It has been a great starting point for researching this thesis.

I also wanted to acknowledge all the authors from my sources for sharing their knowledge.

References and links

- Dehghani, Z. (2022). Data Mesh: Delivering Data-Driven Value at Scale (1st ed.). O’Reilly.

- Data Mesh Learning Community

- What is a Data Mesh and How Not to Mesh it Up

- More Resources like this here

Data Mesh and its Contribution to Data Governance : A case study within the financial asset management industry

- Laurens Christiaanse

- Department of Information Science

Student thesis : Master's Thesis

| Date of Award | 1 Sept 2023 |
|---|---|
| Original language | English |
| Supervisor | Karel Lemmen (Supervisor) & Pien Walraven (Examiner) |

- Data Governance

- Contributions

- Data Domains

- Self-Serve Data Platform

- Data as a Product

- Federated Computational Governance

- Usability Characteristics

Master's Degree

- Master Business Process management & IT (BPMIT)

Type : Thesis

What is a Data Mesh: Principles and Architecture

Defining Data Mesh

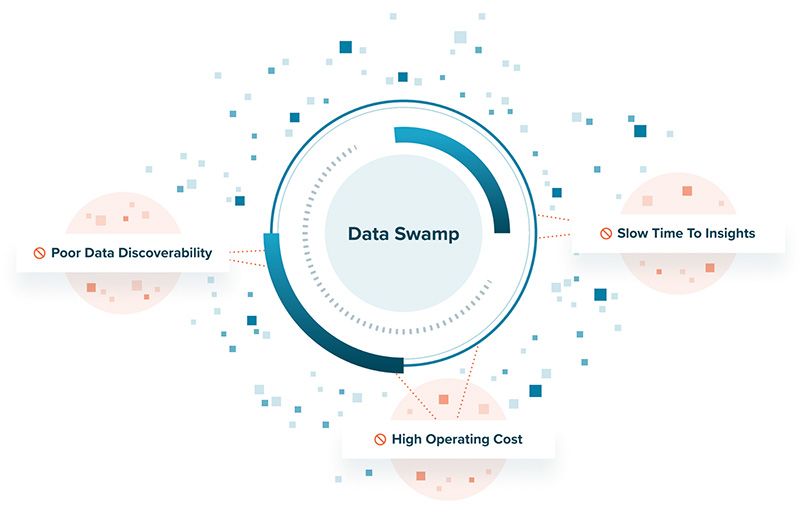

Rather than dwell on the definitions (Gartner counts at least three) of data mesh, I’ll go with my lay version:

data mesh [dey-tuh-mesh]

A decentralized architecture capability to solve the data swamp problem, reduce data analytics cost, and speed actionable insights to better enable data-informed business decisions.

There, I said it without the buzzwords like “data democratization” or “paradigm shift.” Mea culpa for throwing in “actionable insight.” Let’s decompose what data mesh means for the real working class, our data engineers, architects, and scientists.

Why Use Data Mesh

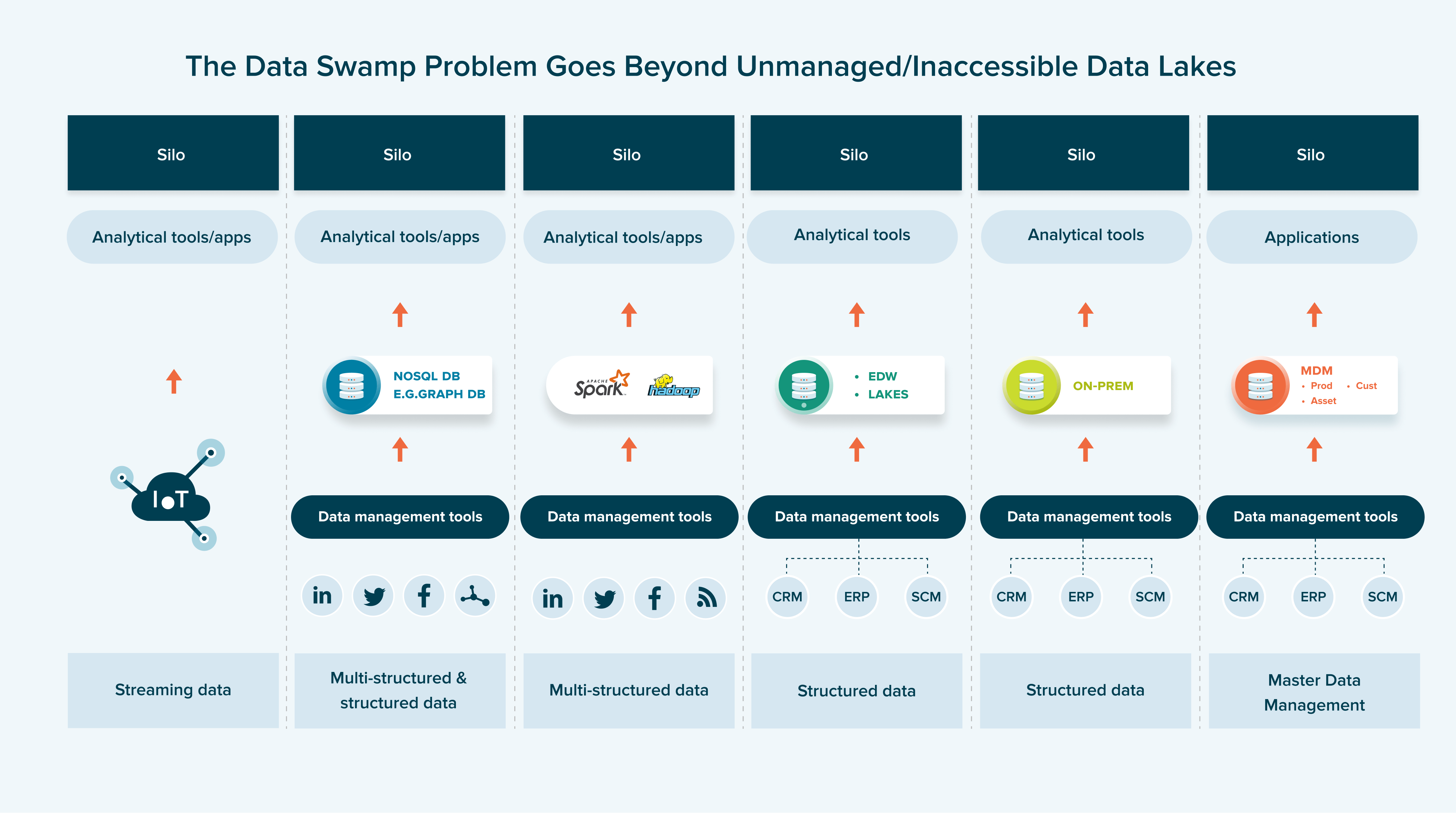

Data swamps are losing their role as a centralized data platform

In the mid-1990s, data warehousing was bursting onto the data management scene. Fueled by the hype of the fabled “beer and diapers” story, businesses were pouring tens of millions of dollars to build huge data monoliths to handle the consumption, storage, transformation, and output of data in one central system to answer business questions that required complex data analytics such as “who are the high-value customers most likely to buy X?”

The thesis of data warehousing worked like a charm at that time. However, as the appetite for data analytics increased, so did the need for more data to be ingested. The complexity and pace of data pipelines soared (as did the nickname “data wranglers”). I began to see the cracks forming in the data warehouse theory and delved into its growing failure to get value from analytical data in my master’s research.

As social media and the iPhone became the norm, many turned to a second generation of data analytics architecture called data lakes. While traditional data warehouses used an Extract-Transform-Load (ETL) process to ingest data, data lakes instead rely on an Extract-Load-Transform (ELT) process that puts data into cheap BLOB storage. This eliminated the big shortcomings of data warehouses but spurred the “Let’s just collect everything” theology of data swamps.

Further learning: Data Lake Acceleration

Is Data Mesh Right For Your Organization?

Fast forward to today. Only 32% of companies are realizing tangible and measurable value from data (“trapped value”), according to a study by Accenture. The roaring demand for “discovery or iterative style analytics” (where consumers don’t really know the questions or data they need) is raising access to data to a whole new level with new/expanding data sources (or “wide data”) across multi- and hybrid cloud environments, thrusting massive friction onto traditional data lakes and warehouses.

Nowhere is this pain more visible than among data and analytics teams where:

- Data scientists consider themselves 40% a vacuum, 40% a janitor, and 20% a fortune-teller (Towards Data Science)

- 78% of data engineers wished that their job came with a therapist to help with work-related stress (survey commissioned by data.world and DataKitchen)

- 65% of large, data-intensive firms have a CDO or CAO, but the average tenure is just 2.5 years (Harvard Business Review)

Data Mesh Principles

A data mesh aims to create an architectural foundation for getting value from analytical data and historical facts at scale – scale being applied to the constant change of data landscape, proliferation of data and analytics demand, diversity of transformation and processing that use cases require, and speed of response to change.

To achieve this objective, most experts agree that the thesis of data mesh is based on four precepts:

- Decentralize the ownership of analytical data to business domains closest to the source of the data or its main consumers. This removes the need for authoritarian bottlenecks of data teams, warehouses, and lake architecture, scaling out data access, consumers, and use cases.

- Make access and use of data products easy and self-service. This removes the friction of data sharing, from source to consumption, streamlining the experience of data users to discover, access, and use data products for their use cases.

- Federate the governance of data based on an appropriate operating model that balances decision-making and accountability. This precept builds on domain-oriented ownership and data as a product.

- Manage data as a product and a development methodology. Consider how data teams can create value in their organizations. Think features like discoverability, trustworthiness, reusability, and value. This facilitates, regardless of silos, the sharing of data with users.

Data mesh inverts the traditional data warehouse/lake ideology by transforming data gatekeepers into data liberators to give every “data citizen” (not just the data scientist, engineer, or analyst) easy access and the ability to work with data comfortably, regardless of their technical know-how, to reduce the cost of data analytics and speed time to insight.

However, the rise of data mesh does not mean the fall of data lakes; rather, they are complementary architectures. A data lake is a good solution for storing vast amounts of data in a centralized location. And data mesh is the best solution for fast data retrieval, integration, and analytics. In a nutshell, think of a data mesh as connective tissue to data lakes and/or other sources.

Introduced by Thoughtworks, data mesh is “a shift in modern distributed architecture that applies platform thinking to create self-serve data infrastructure, treating data as the product.” It is a data and analytics platform where data can remain within different databases, rather than being consolidated into a single data lake.

Like a data fabric architecture, a data mesh architecture comprises four layers . To provide useful information to data and analytics professionals, I’ll break the data mesh rule by also talking about specific technologies and representative vendors.

- Storage: This is where much of your data lives once it’s ingested and organized from OLTP databases, data lakes, data warehouses, graph databases, and various files. The composite of analytic systems equates to your central data platform. Representative vendors are Snowflake, Databricks , and Google.

- Analytics: This layer is responsible for delivering the process data to the end-users, including business intelligence analysts, data scientists, and business stakeholders who consume the data with the help of reports and analytic models. Representative vendors are Tableau, PowerBI, and SAS.

- Governance: This is where processes, roles, policies, standards, and metrics ensure the effective and efficient use of your data. Data catalogs define who can take what action, upon what data, in what situations, and using what methods. Representative vendors are Alation , Collibra, and Promethium.

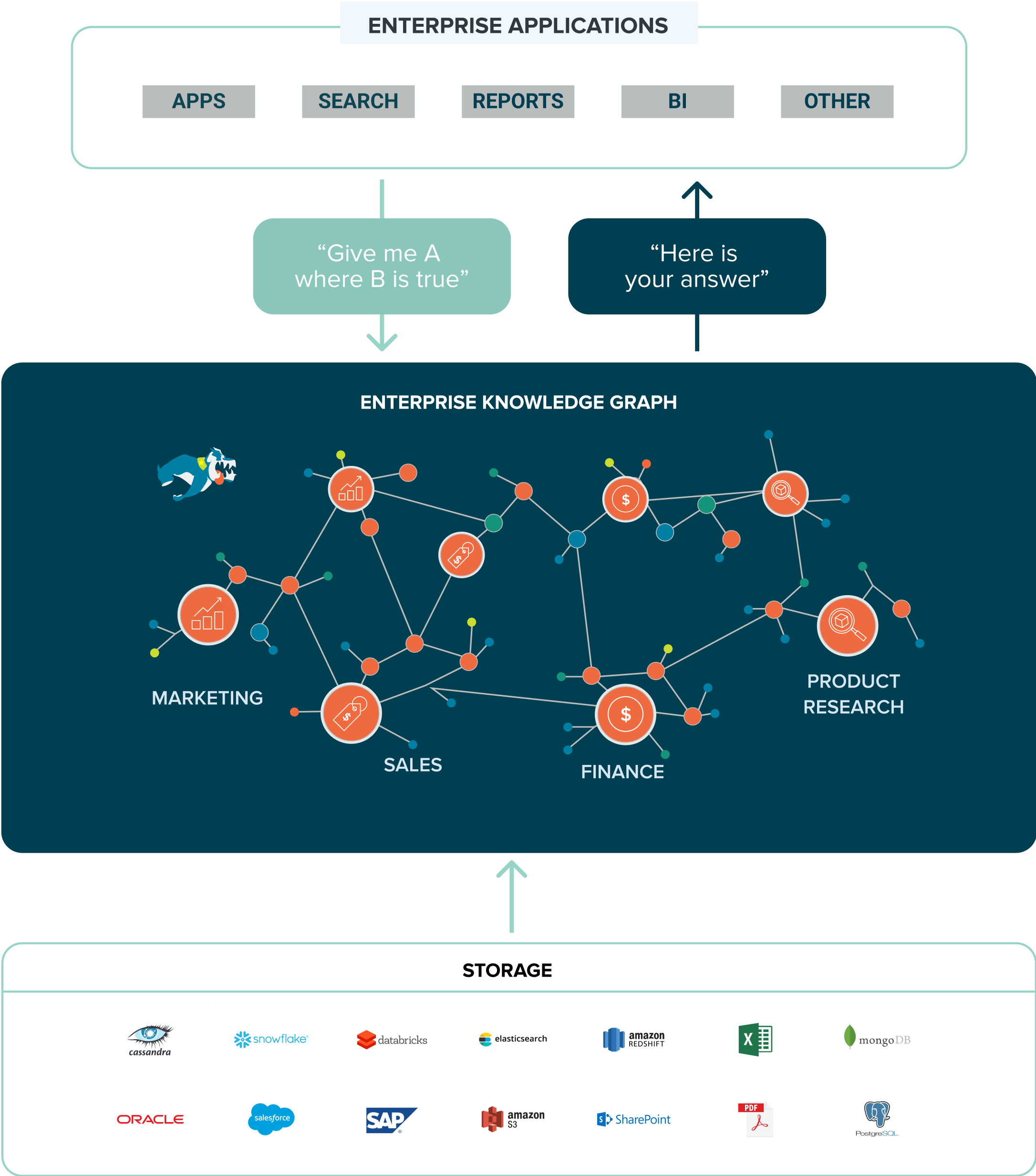

- Semantic: This is the virtual “connective tissue” of the data mesh. Knowledge graphs (not to be confused with graph databases) connect any data into a canonical data model, harmonize it into real-world business meaning and relationships, and enable self-service data exploration and discovery.

VentureBeat says a data mesh architecture “connects various data sources (including data lakes) into a coherent infrastructure, where all data is accessible if you have the right authority to access it.” This doesn’t mean there is one big, hairy data warehouse or lake (see data swamp problem) — the laws of physics and the demand for analytics mean that large, disparate data sets can’t just be joined together over huge distances with decent performance. Not to mention the costs of moving, transforming, and maintaining the data (and ETL | ELT).

Enter the semantic layer. A semantic layer represents a network of real-world entities (i.e., objects, events, situations, or concepts) and illustrates the relationships among them, so that complex cross-domain questions can be answered, shared, and re-used based on fine-grained data access policies and business rules. It comprises three layers (and a knowledge catalog that interfaces with governance tools):

- Business meaning: This is a business representation of data. It enables users to quickly discover and access data using standard search terms — like customer, recent purchase, and prospect. Data can be shared and reused through a common, standards-based vocabulary.

- Data storytelling (inferencing): Creates new relationships by interpreting your source data against your data model. By expressing all explicit and inferred relationships and connections between your data sources, you create a richer, more accurate view of your data and cut down on data preparation.

- Virtualization: Provides an alternative to costly, slow ETL integration and permanent transformation of source data. Virtual graphs allow you to leave data where it is and bring it together at query time to reflect the latest changes, which scales analytics use cases and users at minimal cost and reduces data latency.

The big payoff of a semantic layer is in providing a better way to enable self-service, federated queries, enabling you to build and deploy analytics data products quickly and efficiently.

Further learning: How Knowledge Graphs Work

Data Mesh Benefits

Generally, organizations handling and analyzing a large number of data sources should seriously consider evolving to a data mesh architecture. Other signals include a high number of data domains and functional teams that demand data products (especially advanced analytics such as predictive and simulation modeling), frequent data pipeline bottlenecks, and a priority on data governance.

Stardog commissioned Forrester Consulting to interview four decision-makers with experience implementing Stardog. For this commissioned study, Forrester aggregated the interviewees’ experiences and combined the results into a single composite organization. The key findings were that the composite organization using the Stardog Enterprise Knowledge Graph platform realized the following over three years:

- 320 percent return on investment

- $4.7 million total data scientist productivity improvement

- $2.6 million in infrastructure savings from avoided copying and moving data

- $2.4 million in incremental profit from enhanced quantity, quality, and speed of insights


Data Mesh Principles and Logical Architecture

Our aspiration to augment and improve every aspect of business and life with data demands a paradigm shift in how we manage data at scale. While the technology advances of the past decade have addressed the scale of volume of data and data processing compute, they have failed to address scale in other dimensions: changes in the data landscape, proliferation of sources of data, diversity of data use cases and users, and speed of response to change. Data mesh addresses these dimensions, founded in four principles: domain-oriented decentralized data ownership and architecture, data as a product, self-serve data infrastructure as a platform, and federated computational governance. Each principle drives a new logical view of the technical architecture and organizational structure.

03 December 2020

Zhamak is the director of emerging technologies at Thoughtworks North America with focus on distributed systems architecture and a deep passion for decentralized solutions. She is a member of Thoughtworks Technology Advisory Board and contributes to the creation of Thoughtworks Technology Radar.


- Core principles and logical architecture of data mesh

- Logical architecture: domain-oriented data and compute

- Logical architecture: data product the architectural quantum

- Logical architecture: a multi-plane data platform

- Logical architecture: computational policies embedded in the mesh

- Principles summary and the high level logical architecture

For more on Data Mesh, Zhamak went on to write a full book that covers more details on strategy, implementation, and organizational design.

The original writeup, How to Move Beyond a Monolithic Data Lake to a Distributed Data Mesh - which I encourage you to read before joining me back here - empathized with today’s pain points of architectural and organizational challenges in order to become data-driven, use data to compete, or use data at scale to drive value. It offered an alternative perspective which has since captured many organizations’ attention, and given hope for a different future. While the original writeup describes the approach, it leaves many details of the design and implementation to one’s imagination. I have no intention of being too prescriptive in this article and killing the imagination and creativity around data mesh implementation. However, I think it’s only responsible to clarify the architectural aspects of data mesh as a stepping stone to move the paradigm forward.

This article is written as a follow-up. It summarizes the data mesh approach by enumerating its underpinning principles, and the high level logical architecture that the principles drive. Establishing the high level logical model is a necessary foundation before I dive into detailed architecture of data mesh core components in future articles. Hence, if you are in search of a prescription around exact tools and recipes for data mesh, this article may disappoint you. If you are seeking a simple and technology-agnostic model that establishes a common language, come along.

The great divide of data

What do we really mean by data? The answer depends on whom you ask. Today’s landscape is divided into operational data and analytical data. Operational data sits in databases behind business capabilities served with microservices, has a transactional nature, keeps the current state and serves the needs of the applications running the business. Analytical data is a temporal and aggregated view of the facts of the business over time, often modeled to provide retrospective or future-perspective insights; it trains the ML models or feeds the analytical reports.

The current state of technology, architecture and organization design is reflective of the divergence of these two data planes - two levels of existence, integrated yet separate. This divergence has led to a fragile architecture. Continuously failing ETL (Extract, Transform, Load) jobs and the ever-growing complexity of a labyrinth of data pipelines are a familiar sight to many who attempt to connect these two planes, flowing data from the operational data plane to the analytical plane, and back to the operational plane.

Figure 1: The great divide of data

The analytical data plane itself has diverged into two main architectures and technology stacks: data lake and data warehouse, with the data lake supporting data science access patterns and the data warehouse supporting analytical and business intelligence reporting access patterns. For this conversation, I put aside the dance between the two technology stacks: data warehouse attempting to onboard data science workflows and data lake attempting to serve data analysts and business intelligence. The original writeup on data mesh explores the challenges of the existing analytical data plane architecture.

Figure 2: Further divide of analytical data - warehouse

Figure 3: Further divide of analytical data - lake

Data mesh recognizes and respects the differences between these two planes: the nature and topology of the data, the differing use cases, individual personas of data consumers, and ultimately their diverse access patterns. However, it attempts to connect these two planes under a different structure - an inverted model and topology based on domains and not technology stack - with a focus on the analytical data plane. Differences in today's available technology to manage the two archetypes of data should not lead to separation of the organization, teams and people who work on them. In my opinion, the operational and transactional data technology and topology is relatively mature, and driven largely by the microservices architecture; data is hidden on the inside of each microservice, controlled and accessed through the microservice’s APIs. Yes, there is room for innovation to truly achieve multi-cloud-native operational database solutions, but from the architectural perspective it meets the needs of the business. However, it’s the management of and access to the analytical data that remains a point of friction at scale. This is where data mesh focuses.

I do believe that at some point in the future our technologies will evolve to bring these two planes even closer together, but for now, I suggest we keep their concerns separate.

The objective of data mesh is to create a foundation for getting value from analytical data and historical facts at scale - scale being applied to constant change of the data landscape, proliferation of both sources of data and consumers, diversity of transformation and processing that use cases require, and speed of response to change. To achieve this objective, I suggest that there are four underpinning principles that any data mesh implementation embodies to achieve the promise of scale, while delivering quality and integrity guarantees needed to make data usable: 1) domain-oriented decentralized data ownership and architecture, 2) data as a product, 3) self-serve data infrastructure as a platform, and 4) federated computational governance.

While I expect the practices, technologies and implementations of these principles to vary and mature over time, these principles remain unchanged.

I have intended for the four principles to be collectively necessary and sufficient: to enable scale with resiliency while addressing concerns around siloing of incompatible data or increased cost of operation. Let's dive into each principle and then design the conceptual architecture that supports it.

Domain Ownership

Data mesh, at its core, is founded in decentralization and distribution of responsibility to people who are closest to the data in order to support continuous change and scalability. The question is, how do we decompose and decentralize the components of the data ecosystem and their ownership? The components here are made of analytical data, its metadata, and the computation necessary to serve it.

Data mesh follows the seams of organizational units as the axis of decomposition. Our organizations today are decomposed based on their business domains. Such decomposition localizes the impact of continuous change and evolution - for the most part - to the domain’s bounded context. This makes the business domain’s bounded context a good candidate for distribution of data ownership.

In this article, I will continue to use the same use case as the original writeup, ‘a digital media company’. One can imagine that the media company divides its operation, hence the systems and teams that support the operation, based on domains such as ‘podcasts’, teams and systems that manage podcast publication and their hosts; ‘artists’, teams and systems that manage onboarding and paying artists, and so on. Data mesh argues that the ownership and serving of the analytical data should respect these domains. For example, the teams who manage ‘podcasts’, while providing APIs for releasing podcasts, should also be responsible for providing historical data that represents ‘released podcasts’ over time with other facts such as ‘listenership’ over time. For a deeper dive into this principle see Domain-oriented data decomposition and ownership.

To promote such decomposition, we need to model an architecture that arranges the analytical data by domains. In this architecture, the domain’s interface to the rest of the organization not only includes the operational capabilities but also access to the analytical data that the domain serves. For example, ‘podcasts’ domain provides operational APIs to ‘create a new podcast episode’ but also an analytical data endpoint for retrieving ‘all podcast episodes data over the last <n> months’. This implies that the architecture must remove any friction or coupling to let domains serve their analytical data and release the code that computes the data, independently of other domains. To scale, the architecture must support autonomy of the domain teams with regard to the release and deployment of their operational or analytical data systems.
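As a minimal sketch of this idea, the snippet below models a 'podcasts' domain whose interface includes both an operational capability and an analytical data endpoint. The class, method, and field names (`PodcastsDomain`, `create_episode`, `episodes_released_since`) are hypothetical and chosen only for illustration; the article does not prescribe any concrete API, and a month is approximated as 30 days.

```python
from dataclasses import dataclass, field
from datetime import date, timedelta


@dataclass
class Episode:
    title: str
    released_on: date


@dataclass
class PodcastsDomain:
    """Hypothetical 'podcasts' domain exposing both kinds of interface."""

    _episodes: list = field(default_factory=list)

    # Operational capability: changes the current state of the business.
    def create_episode(self, title: str, released_on: date) -> Episode:
        episode = Episode(title, released_on)
        self._episodes.append(episode)
        return episode

    # Analytical data endpoint: a read-only, historical view over time.
    def episodes_released_since(self, months: int, today: date) -> list:
        cutoff = today - timedelta(days=30 * months)  # assumption: 1 month = 30 days
        return [e for e in self._episodes if e.released_on >= cutoff]


podcasts = PodcastsDomain()
podcasts.create_episode("Pilot", date(2020, 1, 10))
podcasts.create_episode("Data mesh basics", date(2020, 11, 20))

recent = podcasts.episodes_released_since(months=3, today=date(2020, 12, 3))
print([e.title for e in recent])  # ['Data mesh basics']
```

The point of the sketch is only that both interfaces belong to the same domain team and can be released together, independently of other domains.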

The following example demonstrates the principle of domain oriented data ownership. The diagrams are only logical representations and exemplary. They aren't intended to be complete.

Each domain can expose one or many operational APIs, as well as one or many analytical data endpoints

Figure 4: Notation: domain, its analytical data and operational capabilities

Naturally, each domain can have dependencies on other domains' operational and analytical data endpoints. In the following example, the 'podcasts' domain consumes analytical data of 'users updates' from the 'users' domain, so that it can provide a picture of the demographic of podcast listeners through its 'Podcast listeners demographic' dataset.

Figure 5: Example: domain oriented ownership of analytical data in addition to operational capabilities
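The dependency between the two domains can be sketched in a few lines. The record shapes and the function name `podcast_listeners_demographic` are assumptions made for this illustration; the only thing taken from the text is that the 'podcasts' domain combines its own data with the 'users updates' data served by the 'users' domain.

```python
from collections import Counter

# Analytical 'users updates' records, as served by the hypothetical 'users' domain.
user_updates = [
    {"user_id": "u1", "age_group": "18-24"},
    {"user_id": "u2", "age_group": "25-34"},
    {"user_id": "u3", "age_group": "25-34"},
]

# Data owned by the 'podcasts' domain: who listened to which podcast.
listens = [
    {"user_id": "u1", "podcast": "tech-talk"},
    {"user_id": "u2", "podcast": "tech-talk"},
    {"user_id": "u3", "podcast": "tech-talk"},
    {"user_id": "u3", "podcast": "history-hour"},
]


def podcast_listeners_demographic(listens, user_updates):
    """Join the podcasts domain's listens with the users domain's data by user_id."""
    age_group_by_user = {u["user_id"]: u["age_group"] for u in user_updates}
    demographic = {}
    for listen in listens:
        age_group = age_group_by_user.get(listen["user_id"], "unknown")
        demographic.setdefault(listen["podcast"], Counter())[age_group] += 1
    return demographic


demographic = podcast_listeners_demographic(listens, user_updates)
print(dict(demographic["tech-talk"]))  # {'18-24': 1, '25-34': 2}
```

Note that the join only works because both domains agree on how a user is identified, which is a global concern the governance principle returns to later.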

Note: In the example, I have used an imperative language for accessing the operational data or capabilities, such as 'Pay artists'. This is simply to emphasize the difference between the intention of accessing operational data vs. analytical data. I do recognize that in practice operational APIs are implemented through a more declarative interface such as accessing a RESTful resource or a GraphQL query.

Data as a product

One of the challenges of existing analytical data architectures is the high friction and cost of discovering, understanding, trusting, and ultimately using quality data. If not addressed, this problem is only exacerbated with data mesh, as the number of places and teams who provide data - domains - increases. This would be the consequence of our first principle of decentralization. The data as a product principle is designed to address the data quality and age-old data silos problem; or as Gartner calls it, dark data - “the information assets organizations collect, process and store during regular business activities, but generally fail to use for other purposes”. Analytical data provided by the domains must be treated as a product, and the consumers of that data should be treated as customers - happy and delighted customers.

The original article enumerates a list of capabilities, including discoverability, security, explorability, understandability, trustworthiness, etc., that a data mesh implementation should support for a domain's data to be considered a product. It also details the roles, such as domain data product owner, that organizations must introduce, responsible for the objective measures that ensure data is delivered as a product. These measures include data quality, decreased lead time of data consumption, and in general data user satisfaction through net promoter score. The domain data product owner must have a deep understanding of who the data users are, how they use the data, and what native methods they are comfortable with for consuming the data. Such intimate knowledge of data users results in design of data product interfaces that meet their needs. In reality, for the majority of data products on the mesh, there are a few conventional personas with their unique tooling and expectations: data analysts and data scientists. All data products can develop standardized interfaces to support them. The conversation between users of the data and product owners is a necessary piece for establishing the interfaces of data products.

Each domain will include data product developer roles, responsible for building, maintaining and serving the domain's data products. Data product developers will be working alongside other developers in the domain. Each domain team may serve one or multiple data products. It’s also possible to form new teams to serve data products that don’t naturally fit into an existing operational domain.

Note: this is an inverted model of responsibility compared to past paradigms. The accountability of data quality shifts upstream as close to the source of the data as possible.

Architecturally, to support data as a product that domains can autonomously serve or consume, data mesh introduces the concept of data product as its architectural quantum. Architectural quantum, as defined by Evolutionary Architecture, is the smallest unit of architecture that can be independently deployed with high functional cohesion, and includes all the structural elements required for its function.

Data product is the node on the mesh that encapsulates three structural components required for its function, providing access to the domain's analytical data as a product.

- Code: it includes (a) code for data pipelines responsible for consuming, transforming and serving upstream data - data received from domain’s operational system or an upstream data product; (b) code for APIs that provide access to data, semantic and syntax schema, observability metrics and other metadata; (c) code for enforcing traits such as access control policies, compliance, provenance, etc.

- Data and Metadata: well that’s what we are all here for, the underlying analytical and historical data in a polyglot form. Depending on the nature of the domain data and its consumption models, data can be served as events, batch files, relational tables, graphs, etc., while maintaining the same semantic. For data to be usable there is an associated set of metadata including data computational documentation, semantic and syntax declaration, quality metrics, etc; metadata that is intrinsic to the data e.g. its semantic definition, and metadata that communicates the traits used by computational governance to implement the expected behavior e.g. access control policies.

- Infrastructure: The infrastructure component enables building, deploying and running the data product's code, as well as storage and access to big data and metadata.

Figure 6: Data product components as one architectural quantum
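To make the three components concrete, here is a deliberately small sketch of a data product as a single unit that bundles code, data with metadata, and a declaration of infrastructure needs. Everything in it (the `DataProduct` class, its fields, the `allowed_roles` metadata key, the infrastructure values) is a hypothetical illustration rather than a structure defined by the article.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class DataProduct:
    name: str
    domain: str
    # Code: the pipeline step that turns upstream records into served data.
    transform: Callable
    # Metadata: schema, quality metrics, and traits used by governance.
    metadata: dict = field(default_factory=dict)
    # Infrastructure: what the product declares it needs in order to run.
    infrastructure: dict = field(default_factory=dict)
    # Data: the analytical records this product serves.
    _data: list = field(default_factory=list)

    def ingest(self, upstream_records: list) -> None:
        """Run the product's own pipeline code and refresh a quality metric."""
        self._data = self.transform(upstream_records)
        self.metadata["row_count"] = len(self._data)

    def read(self, role: str) -> list:
        """Access API that enforces the access-control trait kept in metadata."""
        if role not in self.metadata.get("allowed_roles", []):
            raise PermissionError(f"role {role!r} may not read {self.name}")
        return list(self._data)


released_podcasts = DataProduct(
    name="released-podcasts",
    domain="podcasts",
    transform=lambda records: [r for r in records if r.get("released")],
    metadata={"allowed_roles": ["analyst"], "schema": {"title": "str", "released": "bool"}},
    infrastructure={"storage": "object-store", "compute": "batch"},  # assumed values
)
released_podcasts.ingest(
    [{"title": "Pilot", "released": True}, {"title": "Draft", "released": False}]
)
print(released_podcasts.read(role="analyst"))  # [{'title': 'Pilot', 'released': True}]
```

Keeping the pipeline code, the served data, and the policy metadata in one deployable unit is what distinguishes this from a pipeline that writes into a shared warehouse.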

The following example builds on the previous section, demonstrating the data product as the architectural quantum. The diagram only includes sample content and is not intended to be complete or include all design and implementation details. While this is still a logical representation it is getting closer to the physical implementation.

Figure 7: Notation: domain, its (analytical) data product and operational system

Figure 8: Data products serving the domain-oriented analytical data

Note: Data mesh model differs from the past paradigms where pipelines (code) are managed as independent components from the data they produce; and often infrastructure, like an instance of a warehouse or a lake storage account, is shared among many datasets. Data product is a composition of all components - code, data and infrastructure - at the granularity of a domain's bounded context.

Self-serve data platform

As you can imagine, to build, deploy, execute, monitor, and access a humble hexagon - a data product - there is a fair bit of infrastructure that needs to be provisioned and run; the skills needed to provision this infrastructure are specialized and would be difficult to replicate in each domain. Most importantly, the only way that teams can autonomously own their data products is to have access to a high-level abstraction of infrastructure that removes complexity and friction of provisioning and managing the lifecycle of data products. This calls for a new principle, self-serve data infrastructure as a platform to enable domain autonomy.

The data platform can be considered an extension of the delivery platform that already exists to run and monitor the services. However, the underlying technology stack to operate data products, today, looks very different from the delivery platform for services. This is simply due to divergence of big data technology stacks from operational platforms. For example, domain teams might be deploying their services as Docker containers and the delivery platform uses Kubernetes for their orchestration; however, the neighboring data product might be running its pipeline code as Spark jobs on a Databricks cluster. That requires provisioning and connecting two very different sets of infrastructure that, prior to data mesh, did not require this level of interoperability and interconnectivity. My personal hope is that we start seeing a convergence of operational and data infrastructure where it makes sense. For example, perhaps running Spark on the same orchestration system, e.g. Kubernetes.

In reality, to make analytical data product development accessible to generalist developers, to the existing profile of developers that domains have, the self-serve platform needs to provide a new category of tools and interfaces in addition to simplifying provisioning. A self-serve data platform must create tooling that supports a domain data product developer’s workflow of creating, maintaining and running data products with less specialized knowledge than existing technologies assume; self-serve infrastructure must include capabilities to lower the current cost and specialization needed to build data products. The original writeup includes a list of capabilities that a self-serve data platform provides, including access to scalable polyglot data storage, data products schema, data pipeline declaration and orchestration, data products lineage, compute and data locality, etc.

The self-serve platform capabilities fall into multiple categories, or planes as they are called in the model. Note: A plane is representative of a level of existence - integrated yet separate. Similar to physical and consciousness planes, or control and data planes in networking. A plane is neither a layer nor does it imply a strong hierarchical access model.

Figure 9: Notation: A platform plane that provides a number of related capabilities through self-serve interfaces

A self-serve platform can have multiple planes that each serve a different profile of users. The following example lists three different data platform planes:

- Data infrastructure provisioning plane: supports the provisioning of the underlying infrastructure, required to run the components of a data product and the mesh of products. This includes provisioning of a distributed file storage, storage accounts, access control management system, the orchestration to run data products' internal code, provisioning of a distributed query engine on a graph of data products, etc. I would expect that either other data platform planes or only advanced data product developers use this interface directly. This is a fairly low level data infrastructure lifecycle management plane.

- Data product developer experience plane: this is the main interface that a typical data product developer uses. This interface abstracts many of the complexities of what it entails to support the workflow of a data product developer. It provides a higher level of abstraction than the 'provisioning plane'. It uses simple declarative interfaces to manage the lifecycle of a data product. It automatically implements the cross-cutting concerns that are defined as a set of standards and global conventions, applied to all data products and their interfaces.

- Data mesh supervision plane: there are a set of capabilities that are best provided at the mesh level - a graph of connected data products - globally. While the implementation of each of these interfaces might rely on individual data products' capabilities, it’s more convenient to provide these capabilities at the level of the mesh. For example, the ability to discover data products for a particular use case is best provided by searching or browsing the mesh of data products; or correlating multiple data products to create a higher order insight is best provided through execution of a data semantic query that can operate across multiple data products on the mesh.
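One way to picture how the three planes relate is the toy model below. It is a sketch under stated assumptions, not a reference design: the class names, the `GLOBAL_CONVENTIONS` values, and the resource names are all invented for illustration. A developer declares a data product, the developer experience plane applies the global conventions and delegates to the provisioning plane, and the supervision plane offers mesh-wide discovery.

```python
# Global conventions applied to every data product (hypothetical values).
GLOBAL_CONVENTIONS = {"encryption": "at-rest", "lineage_tracking": True}


class ProvisioningPlane:
    """Low-level plane: here it only records what it would provision."""

    def __init__(self):
        self.provisioned = []

    def provision(self, resource_type, owner):
        self.provisioned.append((resource_type, owner))


class SupervisionPlane:
    """Mesh-level plane: a searchable registry of data products."""

    def __init__(self):
        self.catalog = {}

    def register(self, spec):
        self.catalog[spec["name"]] = spec

    def discover(self, tag):
        return sorted(n for n, s in self.catalog.items() if tag in s.get("tags", []))


class DeveloperExperiencePlane:
    """Declarative interface used by a typical data product developer."""

    def __init__(self, provisioning, supervision):
        self.provisioning = provisioning
        self.supervision = supervision

    def deploy(self, declared_spec):
        # Conventions are merged last so a product cannot override them.
        spec = {**declared_spec, **GLOBAL_CONVENTIONS}
        for resource in ("storage", "pipeline-orchestration"):
            self.provisioning.provision(resource, owner=spec["name"])
        self.supervision.register(spec)
        return spec


provisioning = ProvisioningPlane()
supervision = SupervisionPlane()
platform = DeveloperExperiencePlane(provisioning, supervision)

spec = platform.deploy({"name": "released-podcasts", "tags": ["podcasts"], "encryption": "none"})
print(spec["encryption"])               # at-rest
print(supervision.discover("podcasts"))  # ['released-podcasts']
```

The developer never calls the provisioning plane directly in this sketch, which mirrors the expectation that only other planes or advanced developers use that lower-level interface.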

The following model is only exemplary and is not intended to be complete. While a hierarchy of planes is desirable, there is no strict layering implied below.

Figure 10: Multiple planes of self-serve data platform *DP stands for a data product

Federated computational governance

As you can see, data mesh follows a distributed system architecture; a collection of independent data products, with independent lifecycle, built and deployed by likely independent teams. However, for the majority of use cases, to get value in forms of higher order datasets, insights or machine intelligence there is a need for these independent data products to interoperate; to be able to correlate them, create unions, find intersections, or perform other graph or set operations on them at scale. For any of these operations to be possible, a data mesh implementation requires a governance model that embraces decentralization and domain self-sovereignty, interoperability through global standardization, a dynamic topology and, most importantly, automated execution of decisions by the platform. I call this federated computational governance: a decision-making model led by the federation of domain data product owners and data platform product owners, with autonomy and domain-local decision-making power, while creating and adhering to a set of global rules - rules applied to all data products and their interfaces - to ensure a healthy and interoperable ecosystem. The group has a difficult job: maintaining an equilibrium between centralization and decentralization; what decisions need to be localized to each domain and what decisions should be made globally for all domains. Ultimately, global decisions have one purpose: creating interoperability and a compounding network effect through discovery and composition of data products.

The priorities of the governance in data mesh are different from traditional governance of analytical data management systems. While they both ultimately set out to get value from data, traditional data governance attempts to achieve that through centralization of decision making, and establishing global canonical representation of data with minimal support for change. Data mesh's federated computational governance, in contrast, embraces change and multiple interpretive contexts.

Placing a system in a straitjacket of constancy can cause fragility to evolve. -- C.S. Holling, ecologist

A supportive organizational structure, incentive model and architecture are necessary for the federated governance model to function: to arrive at global decisions and standards for interoperability, while respecting the autonomy of local domains, and to implement global policies effectively.

Figure 11: Notation: federated computational governance model

As mentioned earlier, striking a balance between what shall be standardized globally, implemented and enforced by the platform for all domains and their data products, and what shall be left to the domains to decide, is an art. For instance, the domain data model is a concern that should be localized to the domain that is most intimately familiar with it. For example, how the semantics and syntax of the 'podcast audienceship' data model are defined must be left to the 'podcast domain' team. In contrast, the decision around how to identify a 'podcast listener' is a global concern. A podcast listener is a member of the population of 'users' - its upstream bounded context - who can cross the boundary of domains and be found in other domains such as 'users play streams'. The unified identification allows correlating information about 'users' who are both 'podcast listeners' and 'stream listeners'.
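
The value of a globally agreed identifier can be shown with a small sketch. It assumes, purely for illustration, that both data products expose a `user_id` key in the same format; the record fields and sample values are invented, and a real mesh would serve this data through its own output ports rather than in-memory lists.

```python
# Records as two hypothetical data products might serve them. The payload fields are
# domain-local decisions; only the `user_id` key follows the (assumed) global standard.
podcast_listeners = [
    {"user_id": "u-001", "podcast": "Tech Weekly", "minutes_listened": 42},
    {"user_id": "u-002", "podcast": "History Hour", "minutes_listened": 17},
]
users_play_streams = [
    {"user_id": "u-002", "track": "Song A", "plays": 5},
    {"user_id": "u-003", "track": "Song B", "plays": 9},
]


def correlate_on_user_id(left, right):
    """Inner-join two lists of records on the globally standardized `user_id`."""
    right_by_user = {}
    for row in right:
        right_by_user.setdefault(row["user_id"], []).append(row)
    joined = []
    for row in left:
        for match in right_by_user.get(row["user_id"], []):
            joined.append({**row, **match})
    return joined


# Users who are both podcast listeners and stream listeners.
both = correlate_on_user_id(podcast_listeners, users_play_streams)
print([row["user_id"] for row in both])
```

If each domain had minted its own listener identifier, this join would need a mapping table maintained by someone; standardizing the identifier globally removes that dependency while leaving the rest of each model to its domain.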

The following is an example of elements involved in the data mesh governance model. It’s not a comprehensive example and only demonstrative of concerns relevant at the global level.

Figure 12: Example of elements of a federated computational governance: teams, incentives, automated implementation, and globally standardized aspects of data mesh

Many practices of pre-data-mesh governance, as a centralized function, are no longer applicable to the data mesh paradigm. For example, the past emphasis on certification of golden datasets - the datasets that have gone through a centralized process of quality control and certification and marked as trustworthy - as a central function of governance is no longer relevant. This had stemmed from the fact that in the previous data management paradigms, data - in whatever quality and format - gets extracted from operational domain’s databases and gets centrally stored in a warehouse or a lake that now requires a centralized team to apply cleansing, harmonization and encryption processes to it; often under the custodianship of a centralized governance group. Data mesh completely decentralizes this concern. A domain dataset only becomes a data product after it locally, within the domain, goes through the process of quality assurance according to the expected data product quality metrics and the global standardization rules. The domain data product owners are best placed to decide how to measure their domain’s data quality knowing the details of domain operations producing the data in the first place. Despite such localized decision making and autonomy, they need to comply with the modeling of quality and specification of SLOs based on a global standard, defined by the global federated governance team, and automated by the platform.
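
The split between a global standard for modeling quality and domain-local measurement can be sketched as follows. The metric names (`completeness`, `freshness_hours`), the thresholds and the sample rows are assumptions made up for this example; they are not taken from any published standard.

```python
# Assumed global standard: every data product declares SLOs using these metric names.
GLOBAL_SLO_METRICS = {"completeness", "freshness_hours"}


def validate_slo_declaration(slos):
    """Global rule: an SLO declaration must cover exactly the standardized metrics."""
    return set(slos) == GLOBAL_SLO_METRICS


def measure_completeness(rows, required_fields):
    """Domain-local measurement: share of rows with every required field populated."""
    if not rows:
        return 0.0
    complete = sum(all(row.get(f) is not None for f in required_fields) for row in rows)
    return complete / len(rows)


def meets_slos(measured, slos):
    """Compare measured values with the thresholds the domain owner declared."""
    return (
        measured["completeness"] >= slos["completeness"]
        and measured["freshness_hours"] <= slos["freshness_hours"]
    )


# Thresholds chosen by the (hypothetical) podcast domain data product owner.
podcast_slos = {"completeness": 0.75, "freshness_hours": 24}
rows = [
    {"user_id": "u-001", "episode": "e-1"},
    {"user_id": "u-002", "episode": "e-2"},
    {"user_id": None, "episode": "e-3"},
    {"user_id": "u-004", "episode": "e-4"},
]
measured = {
    "completeness": measure_completeness(rows, ["user_id", "episode"]),
    "freshness_hours": 6,
}
print(validate_slo_declaration(podcast_slos), meets_slos(measured, podcast_slos))
```

Here the governance group only fixes which metrics must be declared; what counts as a required field and which thresholds are acceptable remain decisions of the domain that produces the data.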

The following table shows the contrast between centralized (data lake, data warehouse) model of data governance, and data mesh.

| Pre data mesh governance aspect | Data mesh governance aspect |
|---|---|
| Centralized team | Federated team |
| Responsible for data quality | Responsible for defining how to model what constitutes quality |
| Responsible for data security | Responsible for defining aspects of data security i.e. data sensitivity levels for the platform to build in and monitor automatically |
| Responsible for complying with regulation | Responsible for defining the regulation requirements for the platform to build in and monitor automatically |
| Centralized custodianship of data | Federated custodianship of data by domains |
| Responsible for global canonical data modeling | Responsible for modeling - data elements that cross the boundaries of multiple domains |
| Team is independent from domains | Team is made of domains representatives |
| Aiming for a well defined static structure of data | Aiming for enabling effective mesh operation embracing a continuously changing and a dynamic topology of the mesh |
| Centralized technology used by monolithic lake/warehouse | Self-serve platform technologies used by each domain |
| Measure success based on number or volume of governed data (tables) | Measure success based on the network effect - the connections representing the consumption of data on the mesh |
| Manual process with human intervention | Automated processes implemented by the platform |
| Prevent error | Detect error and recover through platform’s automated processing |
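
The "network effect" success measure in the table can be approximated by counting consumption connections between data products. The sketch below assumes the platform can observe (consumer, producer) pairs; the edge list and the 'recommendations' product are hypothetical.

```python
from collections import Counter

# Hypothetical consumption edges observed by the platform: (consumer, producer).
consumption_edges = [
    ("users-play-streams", "users"),
    ("podcast-audienceship", "users"),
    ("recommendations", "users-play-streams"),
    ("recommendations", "podcast-audienceship"),
    ("recommendations", "podcast-audienceship"),  # repeated reads count once
]


def connection_count(edges):
    """Total number of distinct consumer-to-producer connections on the mesh."""
    return len(set(edges))


def consumers_per_product(edges):
    """Count how many distinct consumers read from each producing data product."""
    return Counter(producer for _consumer, producer in set(edges))


print(connection_count(consumption_edges))
print(consumers_per_product(consumption_edges).most_common(1))
```

A growing connection count suggests data products are being reused and composed, which is a different signal from the volume of tables a central team has catalogued.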

Let’s bring it all together. We discussed four principles underpinning data mesh:

| Principle | What it enables |
|---|---|
| Domain-oriented decentralized data ownership and architecture | the ecosystem creating and consuming data can scale out as the number of sources of data, number of use cases, and diversity of access models to the data increases; simply increase the autonomous nodes on the mesh. |
| Data as a product | data users can easily discover, understand and securely use high quality data with a delightful experience; data that is distributed across many domains. |
| Self-serve data infrastructure as a platform | the domain teams can create and consume data products autonomously using the platform abstractions, hiding the complexity of building, executing and maintaining secure and interoperable data products. |
| Federated computational governance | data users can get value from aggregation and correlation of independent data products - the mesh is behaving as an ecosystem following global interoperability standards; standards that are baked computationally into the platform. |

These principles drive a logical architectural model that brings analytical data and operational data closer together under the same domain while respecting their underpinning technical differences. Such differences include where the analytical data might be hosted, different compute technologies for processing operational vs. analytical services, different ways of querying and accessing the data, etc.

Figure 13: Logical architecture of data mesh approach

I hope by this point, we have now established a common language and a logical mental model that we can collectively take forward to detail the blueprint of the components of the mesh, such as the data product, the platform, and the required standardizations.

Acknowledgments

I am grateful to Martin Fowler for helping me refine the narrative and structure of this article, and for hosting it.

Special thanks to many ThoughtWorkers who have been helping create and distill the ideas in this article through client implementations and workshops.

Also thanks to the following early reviewers who provided invaluable feedback: Chris Ford, David Colls and Pramod Sadalage.

03 December 2020: Published

Data mesh: Real examples and lessons learned

Data is changing. Are you keeping up?

Data and how we use it are constantly evolving in today's fast-paced world. And as we continue to rely on data accessibility to drive growth, it will only become more complicated to manage. Centralized data platforms have long served as the foundation of modern business intelligence and analytics and, in most cases, continue to deliver meaningful business value. But, like all foundations, over time, cracks begin to show. Data solutions are now bursting at the seams as the number and diversity of data sources and use cases become too complicated to manage with a traditional, centralized approach. Moreover, this rapidly increasing demand for business intelligence and analytics is inadvertently creating insight bottlenecks, preventing the delivery of deep, valuable insights. And truth be told, it's a big ask to address the above: it takes a cultural shift in ways of working that truly sets organizations on a path of readiness to innovate and leverage their data at a much faster pace.

So, what are some problems of not addressing data issues? The accidental creation of data latency generates a delay and a lack of access to the correct information, leading to the use of rogue data repositories and shadow BI solutions. Regulatory requirements surrounding data are becoming increasingly complex, and all who work with data must comply. Dependence on tribal knowledge generates stagnation in innovation and ideas. The list can go on and on. So, what does it take to not only avoid the problems previously mentioned but to thrive and grow in an ever-changing data landscape? How can organizations move forward when the path ahead can appear unclear and confusing? We feel the paramount solution to the change and potential problems in today's data landscape is Data Mesh. Here are some brief examples of the Data mesh work we've conducted with our clients Gilead and Saxo Bank.

Success based on real-world use cases

Thoughtworks has been working with Gilead, an American biopharmaceutical company, for over a year in developing the case and planning the implementation for Data mesh. Gilead has a robust experimentation culture, established people practices and innovative technology thought leadership, but like many enterprises, it faced numerous challenges adopting the Data mesh approach to deliver data-driven value at scale. Thoughtworks is actively assisting Gilead in their approach to Data mesh in building an Enterprise Data and AI Platform, leveraging their prior experiences to establish new guiding principles. When reviewing opportunities, Gilead saw value in a new organizational and operational model backed by data, and the Data mesh approach also gives them the opportunity to engage in a cloud transformation initiative. While their previous experience and the opportunity they see in Data mesh allow Gilead to create guiding principles moving forward, such as managing their data as a product and adopting a cloud-first architecture, this is only the tip of the iceberg in their journey.

Thoughtworks has also been working with Saxo Bank, a European online investment bank, to democratize data while empowering clients with information and agility to act with confidence. Because of the bank’s complex ecosystem, the data found within Saxo Bank's platform must be transparent, trustworthy and co-sharable, with the Saxo app being white-listed in every environment. So, Saxo Bank and Thoughtworks partnered to bring Data mesh to their organization. Thoughtworks created a data workbench for Saxo Bank to make their data assets searchable and discoverable. Much like how one would search for a product on Amazon, one types in the name of the data asset they're seeking in a search bar, and results backed by a business catalog of consistent business definitions appear. The data workbench also has product descriptions for each data asset along with the data asset's number of uses and user feedback, so one knows that the data is trustworthy. At a high level, since deploying their Data mesh initiative, Saxo Bank has seen a reduced cost of customer acquisition, more efficient costs of operation and increased defense due to the reduced chances of compliance and regulatory quagmires.


Some lessons learned along the way

Our Data Mesh work with Gilead, Saxo Bank and other organizations has taught us much about what it takes to succeed. While not exhaustive, here's a brief overview of some of the lessons we've learned along the way in empowering our clients with Data mesh:

Mindset, organizational and operational models are the most significant barriers to adopting Data Mesh.

Educating stakeholders and domain teams about Data Mesh is critical to success.

Developing a product mindset needs to start with discovery from the consumer perspective.

Creating foundational data products that can be reused and repurposed for multiple use cases helps solidify Data mesh.

Data products need to be compliant with global and local policies.

Choosing the right implementation partner to adopt Data Mesh within your organization is crucial.

Getting started with Data mesh