7.3 Problem-Solving

Learning objectives.

By the end of this section, you will be able to:

- Describe problem-solving strategies

- Define algorithm and heuristic

- Explain some common roadblocks to effective problem solving

People face problems every day—usually, multiple problems throughout the day. Sometimes these problems are straightforward: To double a recipe for pizza dough, for example, all that is required is that each ingredient in the recipe be doubled. Sometimes, however, the problems we encounter are more complex. For example, say you have a work deadline, and you must mail a printed copy of a report to your supervisor by the end of the business day. The report is time-sensitive and must be sent overnight. You finished the report last night, but your printer will not work today. What should you do? First, you need to identify the problem and then apply a strategy for solving the problem.

The study of human and animal problem-solving processes has provided much insight into our conscious experience and has led to advancements in computer science and artificial intelligence. Much of cognitive science today is, in essence, the study of how we consciously and unconsciously make decisions and solve problems. For instance, when we encounter a large amount of information, how do we decide on the most efficient way to sort and analyze it in order to find what we are looking for, as in the visual search paradigms of cognitive psychology? Or, when a piece of machinery is not working properly, how do we organize our approach to the issue and work out what the cause of the problem might be? How do we sequence the procedures we will need and focus attention on what is important in order to solve problems efficiently? In this section we will discuss some of these issues and examine processes related to human, animal, and computer problem solving.

PROBLEM-SOLVING STRATEGIES

When you are presented with a problem, whether it is a complex mathematical problem or a broken printer, how do you solve it? Before you can find a solution, you must first identify the problem clearly. After that, you can apply one of many problem-solving strategies, hopefully arriving at a solution.

Problems themselves can be classified into two categories: ill-defined and well-defined problems (Schacter, 2009). Ill-defined problems do not have clear goals, solution paths, or expected solutions, whereas well-defined problems have specific goals, clearly defined solution paths, and clear expected solutions. Problem solving often incorporates pragmatics (logical reasoning) and semantics (interpretation of the meanings behind the problem), and in many cases it also requires abstract thinking and creativity in order to find novel solutions. Within psychology, problem solving refers to a motivational drive for reaching a definite “goal” from a present situation or condition that either is not moving toward that goal, is distant from it, or requires more complex logical analysis to find a missing description of conditions or steps toward that goal. Processes related to problem solving include problem finding (also known as problem analysis), problem shaping (organizing the problem), generating alternative strategies, implementing attempted solutions, and verifying the selected solution. Methods of studying problem solving within psychology include introspection, behavior analysis and behaviorism, simulation, computer modeling, and experimentation.

A problem-solving strategy is a plan of action used to find a solution. Different strategies have different action plans associated with them (table below). For example, a well-known strategy is trial and error. The old adage, “If at first you don’t succeed, try, try again” describes trial and error. In terms of your broken printer, you could try checking the ink levels, and if that doesn’t work, you could check to make sure the paper tray isn’t jammed. Or maybe the printer isn’t actually connected to your laptop. When using trial and error, you would continue to try different solutions until you solved your problem. Although trial and error is not typically one of the most time-efficient strategies, it is a commonly used one.
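As a rough illustration, trial and error can be written as a loop that applies one candidate fix at a time and stops as soon as the problem is solved. The printer state and the three fixes below are hypothetical stand-ins for the checks mentioned above (ink, paper tray, connection); this is a toy sketch, not a model of a real printer.

```python
# Hypothetical printer state: True means that part is working.
printer = {"ink": True, "paper_tray": True, "connection": False}

# Candidate fixes in the order we happen to think of them: (description, part it repairs).
candidate_fixes = [
    ("check the ink levels", "ink"),
    ("clear the paper tray", "paper_tray"),
    ("reconnect the laptop", "connection"),
]

def trial_and_error(fixes, state):
    """Apply fixes one at a time until everything works; return (attempts, winning fix)."""
    for attempt, (description, part) in enumerate(fixes, start=1):
        state[part] = True               # try this fix
        if all(state.values()):          # did it solve the problem?
            return attempt, description
    return None                          # every candidate failed

result = trial_and_error(candidate_fixes, printer)
print(result)  # (3, 'reconnect the laptop')
```

Nothing guides the order of attempts here: had the real cause been first in the list, the loop would have stopped after one try. That unguided ordering is why trial and error is often slow.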

Another type of strategy is an algorithm. An algorithm is a problem-solving formula that provides you with step-by-step instructions used to achieve a desired outcome (Kahneman, 2011). You can think of an algorithm as a recipe with highly detailed instructions that produce the same result every time they are performed. Algorithms are used frequently in our everyday lives, especially in computer science. When you run a search on the Internet, search engines like Google use algorithms to decide which entries will appear first in your list of results. Facebook also uses algorithms to decide which posts to display on your newsfeed. Can you identify other situations in which algorithms are used?
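To make the recipe analogy concrete, here is a minimal Python sketch of the pizza-dough example from the start of this section written as an algorithm: one fixed rule (multiply every ingredient by the same factor) applied step by step. The ingredient amounts are made up for illustration.

```python
def scale_recipe(recipe, factor):
    """Algorithm: visit every ingredient and multiply its amount by the same factor."""
    scaled = {}
    for ingredient, amount in recipe.items():
        scaled[ingredient] = amount * factor
    return scaled

# Hypothetical pizza-dough quantities, for illustration only.
dough = {"flour (cups)": 3, "water (cups)": 1, "yeast (tsp)": 2, "salt (tsp)": 1}

doubled = scale_recipe(dough, 2)
print(doubled)
# {'flour (cups)': 6, 'water (cups)': 2, 'yeast (tsp)': 4, 'salt (tsp)': 2}

# Following the same steps on the same input always gives the same result.
assert scale_recipe(dough, 2) == doubled
```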

A heuristic is another type of problem solving strategy. While an algorithm must be followed exactly to produce a correct result, a heuristic is a general problem-solving framework (Tversky & Kahneman, 1974). You can think of these as mental shortcuts that are used to solve problems. A “rule of thumb” is an example of a heuristic. Such a rule saves the person time and energy when making a decision, but despite its time-saving characteristics, it is not always the best method for making a rational decision. Different types of heuristics are used in different types of situations, but the impulse to use a heuristic occurs when one of five conditions is met (Pratkanis, 1989):

- When one is faced with too much information

- When the time to make a decision is limited

- When the decision to be made is unimportant

- When there is access to very little information to use in making the decision

- When an appropriate heuristic happens to come to mind in the same moment

Working backwards is a useful heuristic in which you begin solving the problem by focusing on the end result. Consider this example: You live in Washington, D.C. and have been invited to a wedding at 4 PM on Saturday in Philadelphia. Knowing that Interstate 95 tends to back up any day of the week, you need to plan your route and time your departure accordingly. If you want to be at the wedding service by 3:30 PM, and it takes 2.5 hours to get to Philadelphia without traffic, what time should you leave your house? You use the working backwards heuristic to plan the events of your day on a regular basis, probably without even thinking about it.
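Working backwards on the wedding example amounts to subtracting from the goal time. Here is a minimal sketch using Python's standard `datetime` module; the specific date is arbitrary (any Saturday works), and the 45-minute traffic buffer in the second call is a hypothetical allowance, not a figure from the example.

```python
from datetime import datetime, timedelta

def departure_time(arrive_by, travel, buffer=timedelta(0)):
    """Work backwards from the goal: subtract the travel time and any extra buffer."""
    return arrive_by - travel - buffer

arrive_by = datetime(2024, 6, 1, 15, 30)   # 3:30 PM on a Saturday; the date itself is arbitrary
drive = timedelta(hours=2.5)               # 2.5 hours without traffic

leave = departure_time(arrive_by, drive)
print(leave.strftime("%I:%M %p"))          # 01:00 PM

# Hypothetical 45-minute allowance for I-95 traffic.
with_buffer = departure_time(arrive_by, drive, timedelta(minutes=45))
print(with_buffer.strftime("%I:%M %p"))    # 12:15 PM
```

Without traffic you would leave at 1:00 PM; each extra allowance for traffic simply moves the departure earlier.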

Another useful heuristic is the practice of accomplishing a large goal or task by breaking it into a series of smaller steps. Students often use this common method to complete a large research project or long essay for school. For example, students typically brainstorm, develop a thesis or main topic, research the chosen topic, organize their information into an outline, write a rough draft, revise and edit the rough draft, develop a final draft, organize the references list, and proofread their work before turning in the project. The large task becomes less overwhelming when it is broken down into a series of small steps.

Researchers have identified further problem-solving strategies (listed below) that incorporate flexible and creative thinking in order to reach solutions efficiently.

Additional Problem-Solving Strategies:

- Abstraction – refers to solving the problem within a model of the situation before applying it to reality.

- Analogy – is using a solution that solves a similar problem.

- Brainstorming – collecting and analyzing a large number of possible solutions, especially within a group of people, then combining and developing them until an optimal solution is reached.

- Divide and conquer – breaking down large complex problems into smaller more manageable problems.

- Hypothesis testing – a method used in experimentation in which one makes an assumption about what will happen when an independent variable is manipulated, then analyzes the effects of the manipulation and compares them to the original hypothesis.

- Lateral thinking – approaching problems indirectly and creatively by viewing the problem in a new and unusual light.

- Means-ends analysis – choosing and analyzing an action at each of a series of smaller steps to move closer to the goal.

- Method of focal objects – putting seemingly non-matching characteristics of different procedures together to make something new that will get you closer to the goal.

- Morphological analysis – analyzing the outputs of, and interactions among, the many pieces that together make up a whole system.

- Proof – trying to prove that a problem cannot be solved. The point where the proof fails becomes the starting point for solving the problem.

- Reduction – transforming the problem into a similar problem for which a solution already exists.

- Research – using existing knowledge or solutions to similar problems to solve the problem.

- Root cause analysis – trying to identify the cause of the problem.

The strategies listed above summarize methods we use to work toward solutions, and they also show how the mind works when barriers prevent us from reaching our goals.

One example of means-ends analysis can be found in the Tower of Hanoi paradigm. This paradigm can also be modeled as a word problem, as demonstrated by the Missionary-Cannibal Problem:

Missionary-Cannibal Problem

Three missionaries and three cannibals are on one side of a river and need to cross to the other side. The only means of crossing is a boat, and the boat can hold only two people at a time. Your goal is to devise a set of moves that will transport all six people across the river, bearing in mind the following constraint: the number of cannibals can never exceed the number of missionaries in any location where missionaries are present. Remember that someone will also have to row the boat back across each time.

Hint: At one point in your solution, you will have to send more people back to the original side than you just sent to the destination.
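If you want to check your answer, the puzzle can be solved mechanically by searching through its possible states. This minimal Python sketch uses breadth-first search, which finds a solution with the fewest crossings. It assumes the usual reading of the rule: a bank is safe if it has no missionaries at all, or at least as many missionaries as cannibals (a bank with only cannibals is allowed). Spoiler warning: the output is a full solution.

```python
from collections import deque

# Possible boat loads: (missionaries, cannibals), one or two people.
LOADS = [(1, 0), (2, 0), (0, 1), (0, 2), (1, 1)]

def safe(m, c):
    """Assumed rule: cannibals may not outnumber missionaries wherever missionaries are present."""
    return m == 0 or m >= c

def solve_river_crossing(n=3):
    # State: (missionaries on start bank, cannibals on start bank, boat on start bank?)
    start, goal = (n, n, True), (0, 0, False)
    parent = {start: None}
    queue = deque([start])
    while queue:
        state = queue.popleft()
        if state == goal:
            path = []
            while state is not None:       # walk back from the goal to the start
                path.append(state)
                state = parent[state]
            return path[::-1]
        m, c, boat_on_start = state
        sign = -1 if boat_on_start else 1  # leaving the start bank removes people from it
        for dm, dc in LOADS:
            nm, nc = m + sign * dm, c + sign * dc
            if (0 <= nm <= n and 0 <= nc <= n
                    and safe(nm, nc) and safe(n - nm, n - nc)):
                nxt = (nm, nc, not boat_on_start)
                if nxt not in parent:
                    parent[nxt] = state
                    queue.append(nxt)
    return None  # no solution exists

n = 3
path = solve_river_crossing(n)
for m, c, boat_on_start in path:
    side = "start" if boat_on_start else "far"
    print(f"start bank: {m}M {c}C | far bank: {n - m}M {n - c}C | boat on {side} side")
print(len(path) - 1, "crossings")
```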

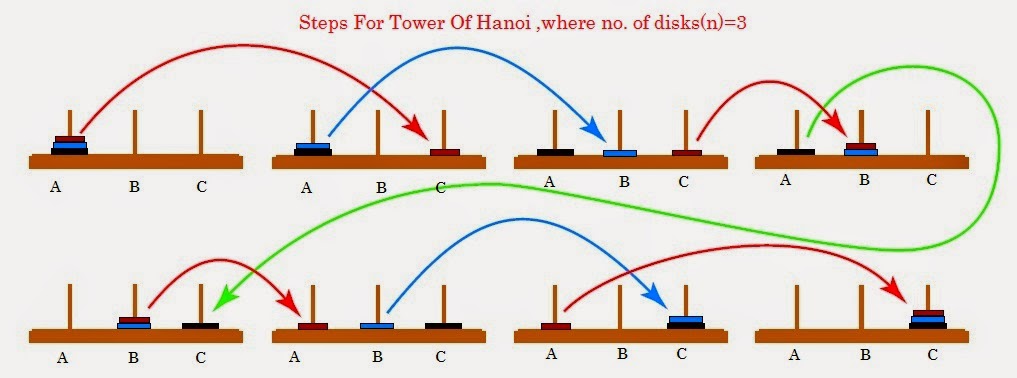

The actual Tower of Hanoi problem consists of three rods sitting vertically on a base with a number of disks of different sizes that can slide onto any rod. The puzzle starts with the disks in a neat stack in ascending order of size on one rod, the smallest at the top making a conical shape. The objective of the puzzle is to move the entire stack to another rod obeying the following rules:

1. Only one disk can be moved at a time.

2. Each move consists of taking the upper disk from one of the stacks and placing it on top of another stack or on an empty rod.

3. No disk may be placed on top of a smaller disk.
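The rules above lend themselves to a short recursive solution, which is also an instance of the divide-and-conquer strategy listed earlier: to move n disks, first move the n − 1 smaller disks out of the way, then move the largest disk, then move the smaller disks back on top of it. The rod names A, B, and C are our own labels. For n disks this produces 2ⁿ − 1 moves, which is known to be the minimum (7 moves for 3 disks).

```python
def hanoi(n, source, target, spare, moves):
    """Move n disks from source to target using spare, obeying the three rules."""
    if n == 0:
        return
    hanoi(n - 1, source, spare, target, moves)  # clear the n - 1 smaller disks out of the way
    moves.append((n, source, target))           # move the largest disk (disk n) directly
    hanoi(n - 1, spare, target, source, moves)  # restack the smaller disks on top of it

moves = []
hanoi(3, "A", "C", "B", moves)
for disk, src, dst in moves:
    print(f"move disk {disk} from {src} to {dst}")
print(len(moves), "moves")  # 7 moves, i.e. 2**3 - 1
```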

Figure 7.02. Steps for solving the Tower of Hanoi in the minimum number of moves when there are 3 disks.

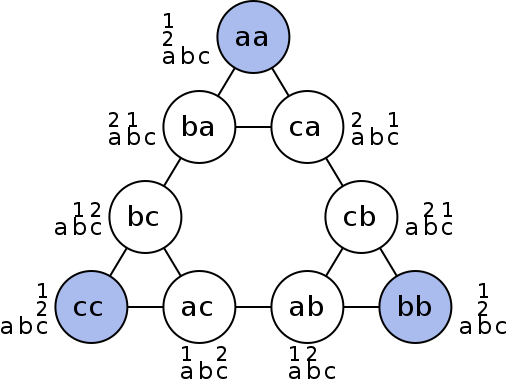

Figure 7.03. Graphical representation of nodes (circles) and moves (lines) of Tower of Hanoi.

The Tower of Hanoi is a frequently used psychological technique for studying problem solving and procedure analysis. A variation known as the Tower of London has since been developed and has become an important tool in the neuropsychological diagnosis and treatment of executive function disorders.

GESTALT PSYCHOLOGY AND PROBLEM SOLVING

As you may recall from the sensation and perception chapter, Gestalt psychology describes whole patterns, forms, and configurations of perception and cognition, such as closure, good continuation, and figure-ground. Beyond patterns of perception, Wolfgang Kohler, a German Gestalt psychologist, traveled to the Spanish island of Tenerife to study animal behavior and problem solving in anthropoid apes.

As an interesting side note to Kohler’s studies of chimpanzee problem solving, Dr. Ronald Ley, a professor of psychology at the State University of New York, provides evidence in his book A Whisper of Espionage (1990) suggesting that Kohler was also an active spy for the German government while he was collecting data on Tenerife, in the Canary Islands, between 1914 and 1920 for what would later become his book The Mentality of Apes (1925). According to Ley, Kohler alerted Germany to ships sailing around the Canary Islands. Ley suggests that his investigations in England, Germany, and elsewhere in Europe confirm that Kohler served the German military by building, maintaining, and operating a concealed radio. This radio contributed to Germany’s war effort by serving as a strategic outpost in the Canary Islands from which naval military activity approaching the North African coast could be monitored.

While trapped on the island over the course of World War I, Kohler applied Gestalt principles to animal perception in order to understand how animals solve problems. He recognized that the apes on the island also perceived relations between stimuli and the environment in Gestalt patterns, and that they understood these patterns as wholes rather than as pieces that make up a whole. Kohler based his theories of animal intelligence on the ability to understand relations between stimuli, and he spent much of his time on the island investigating what he described as insight, the sudden perception of useful or proper relations. To study insight in animals, Kohler would present problems to chimpanzees by hanging bananas or some other food so that it was suspended higher than the apes could reach. Within the room, Kohler would arrange a variety of boxes, sticks, or other tools that the chimpanzees could combine or arrange in a way that would allow them to obtain the food (Kohler & Winter, 1925).

While observing the chimpanzees, Kohler noticed one chimp that was more efficient at solving problems than some of the others. The chimp, named Sultan, was able to use long poles to reach through bars and to organize objects in specific patterns to obtain food or other desirable items that were originally out of reach. To study insight in these chimps, Kohler would remove objects from the room to systematically make the food more difficult to obtain. As the story goes, after many of the objects Sultan was used to using had been removed, he sat down and sulked for a while, and then suddenly got up and went over to two poles lying on the ground. Without hesitation, Sultan put one pole inside the end of the other, creating a longer pole that he could use to obtain the food, an ideal example of what Kohler described as insight. In another situation, Sultan discovered how to stand on a box to reach a banana suspended from the rafters, illustrating Sultan’s perception of relations and the importance of insight in problem solving.

Grande (another chimp in the group studied by Kohler) builds a three-box structure to reach the bananas, while Sultan watches from the ground.

Insight, sometimes referred to as an “Ah-ha” experience, was the term Kohler used for the sudden perception of useful relations among objects during problem solving (Kohler, 1927; Radvansky & Ashcraft, 2013).

Solving puzzles.

Problem-solving abilities can improve with practice. Many people challenge themselves every day with puzzles and other mental exercises to sharpen their problem-solving skills. Sudoku puzzles appear daily in most newspapers. Typically, a sudoku puzzle is a 9×9 grid. The simple sudoku below (see figure) is a 4×4 grid. To solve the puzzle, fill in the empty boxes with a single digit: 1, 2, 3, or 4. Here are the rules: The numbers must total 10 in each bolded box, each row, and each column; however, each digit can only appear once in a bolded box, row, and column. Time yourself as you solve this puzzle and compare your time with a classmate.
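Because the rules are precise, a 4×4 sudoku can also be solved by an algorithm. This minimal Python sketch uses backtracking: fill the first empty cell with a digit that does not repeat in its row, column, or 2×2 box (assuming the bolded boxes are the four 2×2 quadrants), and undo the guess if it later leads to a dead end. The grid below is a hypothetical puzzle, not the one in the figure. The “total 10” rule needs no separate check, because a row, column, or box containing each of 1, 2, 3, and 4 exactly once always sums to 1 + 2 + 3 + 4 = 10.

```python
def fits(grid, r, c, d):
    """Can digit d go at (r, c) without repeating in its row, column, or 2x2 box?"""
    if d in grid[r]:
        return False
    if any(grid[i][c] == d for i in range(4)):
        return False
    br, bc = 2 * (r // 2), 2 * (c // 2)      # top-left corner of the 2x2 box
    return all(grid[i][j] != d
               for i in range(br, br + 2) for j in range(bc, bc + 2))

def solve_sudoku(grid):
    """Backtracking: returns True once every empty cell (0) has been filled in place."""
    for r in range(4):
        for c in range(4):
            if grid[r][c] == 0:
                for d in (1, 2, 3, 4):
                    if fits(grid, r, c, d):
                        grid[r][c] = d
                        if solve_sudoku(grid):
                            return True
                        grid[r][c] = 0   # undo the guess and try the next digit
                return False             # no digit fits this cell: backtrack
    return True                          # no empty cells left

# Hypothetical puzzle (0 = empty cell); not the puzzle shown in the figure.
puzzle = [
    [1, 2, 0, 4],
    [0, 4, 1, 0],
    [0, 1, 4, 0],
    [4, 0, 0, 1],
]
solved = solve_sudoku(puzzle)
for row in puzzle:
    print(row, "sum =", sum(row))
```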

How long did it take you to solve this sudoku puzzle? (You can see the answer at the end of this section.)

Here is another popular type of puzzle (figure below) that challenges your spatial reasoning skills. Connect all nine dots with four connecting straight lines without lifting your pencil from the paper:

Did you figure it out? (The answer is at the end of this section.) Once you understand how to crack this puzzle, you won’t forget.

Take a look at the “Puzzling Scales” logic puzzle below (figure below). Sam Loyd, a well-known puzzle master, created and refined countless puzzles throughout his lifetime (Cyclopedia of Puzzles, n.d.).

What steps did you take to solve this puzzle? You can read the solution at the end of this section.

Pitfalls to problem solving.

Not all problems are successfully solved, however. What challenges stop us from successfully solving a problem? A saying often attributed to Albert Einstein holds that “Insanity is doing the same thing over and over again and expecting a different result.” Imagine a person in a room that has four doorways. One doorway that has always been open in the past is now locked. The person, accustomed to exiting the room by that particular doorway, keeps trying to get out through it even though the other three doorways are open. The person is stuck, but she just needs to go to another doorway instead of trying to get out through the locked one. A mental set is the tendency to persist in approaching a problem in a way that has worked in the past but is clearly not working now.

Functional fixedness is a type of mental set where you cannot perceive an object being used for something other than what it was designed for. During the Apollo 13 mission to the moon, NASA engineers at Mission Control had to overcome functional fixedness to save the lives of the astronauts aboard the spacecraft. An explosion in a module of the spacecraft damaged multiple systems. The astronauts were in danger of being poisoned by rising levels of carbon dioxide because of problems with the carbon dioxide filters. The engineers found a way for the astronauts to use spare plastic bags, tape, and air hoses to create a makeshift air filter, which saved the lives of the astronauts.

Researchers have investigated whether functional fixedness is affected by culture. In one experiment, individuals from the Shuar group in Ecuador were asked to use an object for a purpose other than that for which the object was originally intended. For example, the participants were told a story about a bear and a rabbit that were separated by a river and asked to select among various objects, including a spoon, a cup, erasers, and so on, to help the animals. The spoon was the only object long enough to span the imaginary river, but if the spoon was presented in a way that reflected its normal usage, it took participants longer to choose the spoon to solve the problem (German & Barrett, 2005). The researchers wanted to know whether exposure to highly specialized tools, as occurs for individuals in industrialized nations, affects the ability to transcend functional fixedness. They found that functional fixedness is experienced in both industrialized and nonindustrialized cultures (German & Barrett, 2005).

In order to make good decisions, we use our knowledge and our reasoning. Often, this knowledge and reasoning is sound and solid. Sometimes, however, we are swayed by biases or by others manipulating a situation. For example, let’s say you and three friends wanted to rent a house and had a combined target budget of $1,600. The realtor shows you only very run-down houses for $1,600 and then shows you a very nice house for $2,000. Might you ask each person to pay more in rent to get the $2,000 home? Why would the realtor show you the run-down houses and the nice house? The realtor may be exploiting your anchoring bias. An anchoring bias occurs when you focus on one piece of information when making a decision or solving a problem. In this case, you’re so focused on the amount of money you are willing to spend that you may not recognize what kinds of houses are available at that price point.

The confirmation bias is the tendency to focus on information that confirms your existing beliefs. For example, if you think that your professor is not very nice, you notice all of the instances of rude behavior exhibited by the professor while ignoring the countless pleasant interactions he is involved in on a daily basis. Hindsight bias leads you to believe that the event you just experienced was predictable, even though it really wasn’t. In other words, you knew all along that things would turn out the way they did. Representative bias describes a faulty way of thinking, in which you unintentionally stereotype someone or something; for example, you may assume that your professors spend their free time reading books and engaging in intellectual conversation, because the idea of them spending their time playing volleyball or visiting an amusement park does not fit in with your stereotypes of professors.

Finally, the availability heuristic is a heuristic in which you make a decision based on an example, information, or recent experience that is readily available to you, even though it may not be the best example to inform your decision. Biases tend to “preserve that which is already established—to maintain our preexisting knowledge, beliefs, attitudes, and hypotheses” (Aronson, 1995; Kahneman, 2011). These biases are summarized in the table below.

Were you able to determine how many marbles are needed to balance the scales in the figure below? You need nine. Were you able to solve the problems in the figures above? Here are the answers.

Many different strategies exist for solving problems. Typical strategies include trial and error, applying algorithms, and using heuristics. To solve a large, complicated problem, it often helps to break the problem into smaller steps that can be accomplished individually, leading to an overall solution. Roadblocks to problem solving include a mental set, functional fixedness, and various biases that can cloud decision-making skills.

References:

OpenStax Psychology text by Kathryn Dumper, William Jenkins, Arlene Lacombe, Marilyn Lovett and Marion Perlmutter licensed under CC BY v4.0. https://openstax.org/details/books/psychology

Review Questions:

1. A specific formula for solving a problem is called ________.

a. an algorithm

b. a heuristic

c. a mental set

d. trial and error

2. Solving the Tower of Hanoi problem tends to utilize a ________ strategy of problem solving.

a. divide and conquer

b. means-end analysis

d. experiment

3. A mental shortcut in the form of a general problem-solving framework is called ________.

4. Which type of bias involves becoming fixated on a single trait of a problem?

a. anchoring bias

b. confirmation bias

c. representative bias

d. availability bias

5. Which type of bias involves relying on a false stereotype to make a decision?

6. Wolfgang Kohler analyzed behavior of chimpanzees by applying Gestalt principles to describe ________.

a. social adjustment

b. student loan payment options

c. emotional learning

d. insight learning

7. ________ is a type of mental set where you cannot perceive an object being used for something other than what it was designed for.

a. functional fixedness

c. working memory

Critical Thinking Questions:

1. What is functional fixedness and how can overcoming it help you solve problems?

2. How does an algorithm save you time and energy when solving a problem?

Personal Application Question:

1. Which type of bias do you recognize in your own decision-making processes? How has this bias affected how you’ve made decisions in the past, and how can you use your awareness of it to improve your decision-making skills in the future?


Glossary:

algorithm: problem-solving strategy characterized by a specific set of instructions

anchoring bias: faulty heuristic in which you fixate on a single aspect of a problem to find a solution

availability heuristic: faulty heuristic in which you make a decision based on information readily available to you

confirmation bias: faulty heuristic in which you focus on information that confirms your beliefs

functional fixedness: inability to see an object as useful for any use other than the one for which it was intended

heuristic: mental shortcut that saves time when solving a problem

hindsight bias: belief that the event just experienced was predictable, even though it really wasn’t

mental set: continually using an old solution to a problem without results

problem-solving strategy: method for solving problems

representative bias: faulty heuristic in which you stereotype someone or something without a valid basis for your judgment

trial and error: problem-solving strategy in which multiple solutions are attempted until the correct one is found

working backwards: heuristic in which you begin to solve a problem by focusing on the end result



CHAPTER 9: PROBLEM SOLVING

How do we achieve our goals when the solution is not immediately obvious? What mental blocks are likely to get in our way, and how can we leverage our prior knowledge to solve novel problems?

CHAPTER 9 LICENSE AND ATTRIBUTION

Source: Multiple authors. Memory. In Cognitive Psychology and Cognitive Neuroscience. Wikibooks. Retrieved from https://en.wikibooks.org/wiki/Cognitive_Psychology_and_Cognitive_Neuroscience

Wikibooks are licensed under the Creative Commons Attribution-ShareAlike License.

Cognitive Psychology and Cognitive Neuroscience is licensed under the GNU Free Documentation License.

Condensed from original version. American spellings used. Content added or changed to reflect American perspective and references. Context and transitions added throughout. Substantially edited, adapted, and (in some parts) rewritten for clarity and course relevance.

Cover photo by Pixabay on Pexels.

Knut is sitting at his desk, staring at a blank paper in front of him and nervously playing with a pen in his right hand. He has just a few hours left to hand in his essay, and he has not written a word. All of a sudden he smashes his fist on the table and cries out: “I need a plan!”

Knut is confronted with something every one of us encounters in daily life: he has a problem, and he does not know how to solve it. But what exactly is a problem? Are there strategies to solve problems? These are just a few of the questions we want to answer in this chapter.

We begin this chapter with a short description of what psychologists regard as a problem. Afterward we will discuss different approaches to problem solving, starting with the Gestalt psychologists and ending with modern search strategies connected to artificial intelligence. We will also consider how experts solve problems.

The most basic definition of a problem is any situation that differs from a desired goal. This definition is very useful for discussing problem solving in terms of evolutionary adaptation, as it allows us to understand every aspect of (human or animal) life as a problem. This includes issues like finding food in harsh winters, remembering where you left your provisions, deciding which way to go, and learning, repeating, and varying all kinds of complex movements. Though all of these problems were of crucial importance during human evolution, they are by no means solved exclusively by humans. We find an amazing variety of solutions to these problems in nature (consider, for example, the way a bat hunts its prey compared to a spider). Rather than problems solved by animals or by evolution, we will mainly focus on abstract problems, such as playing chess. Furthermore, we will not consider problems that have an obvious solution. For example, imagine Knut decides to take a sip of coffee from the mug next to his right hand. He does not even have to think about how to do this. This is not because the situation itself is trivial (a robot capable of recognizing the mug, deciding whether it is full, then grabbing it and moving it to Knut’s mouth would be a highly complex machine), but because in the context of all possible situations it is so routine that it is no longer a problem our consciousness needs to be bothered with. The problems we will discuss all require some conscious effort, though some seem to be solved without our being able to say exactly how we reached the solution. We will often find that the strategies we use to solve these problems apply to more basic problems, too.

Non-trivial, abstract problems can be divided into two groups: well-defined problems and ill-defined problems.

WELL-DEFINED PROBLEMS

For many abstract problems, it is possible to find an algorithmic solution. We call problems well-defined if they can be properly formalized, which involves the following properties:

• The problem has a clearly defined given state. This might be the line-up of a chess game, a given formula you have to solve, or the set-up of the Tower of Hanoi game (which we will discuss later).

• There is a finite set of operators, that is, rules you may apply to the given state. For the chess game, e.g., these would be the rules that tell you which piece you may move to which position.

• Finally, the problem has a clear goal state: the equation is solved for x, all disks are moved to the right-hand rod, or the other player is in checkmate.

A problem that fulfills these requirements can be implemented algorithmically. Therefore, many well-defined problems, such as playing chess, can be solved very effectively by computers.
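The three properties above (a given state, a finite set of operators, and a goal state) are exactly the ingredients a generic search procedure needs. This sketch uses breadth-first search, one standard algorithm among several, with the Tower of Hanoi as its example; representing each rod as a tuple of disks listed bottom to top is our own choice, not something the text prescribes.

```python
from collections import deque

def hanoi_operators(state):
    """Operators: move the top disk of one rod onto an empty rod or onto a larger disk."""
    for i, src in enumerate(state):
        if not src:
            continue
        disk = src[-1]                       # rods list disks bottom-to-top, so the top is last
        for j, dst in enumerate(state):
            if i != j and (not dst or dst[-1] > disk):
                rods = list(state)
                rods[i] = src[:-1]
                rods[j] = dst + (disk,)
                yield (i, j), tuple(rods)

def breadth_first_search(given, operators, is_goal):
    """Return the shortest list of moves leading from the given state to a goal state."""
    frontier = deque([given])
    came_from = {given: None}                # state -> (previous state, move), for the path
    while frontier:
        state = frontier.popleft()
        if is_goal(state):
            moves = []
            while came_from[state] is not None:
                state, move = came_from[state]
                moves.append(move)
            return moves[::-1]
        for move, nxt in operators(state):
            if nxt not in came_from:
                came_from[nxt] = (state, move)
                frontier.append(nxt)
    return None                              # the goal is unreachable

n = 3
given = (tuple(range(n, 0, -1)), (), ())     # given state: all disks on rod 0
goal = ((), (), tuple(range(n, 0, -1)))      # goal state: all disks on rod 2
moves = breadth_first_search(given, hanoi_operators, lambda s: s == goal)
print(len(moves), "moves:", moves)           # each move is (from_rod, to_rod)
```

Swapping in a different given state, operator function, and goal test would let the same search routine work on other well-defined problems. For ill-defined problems, discussed next, there is no obvious way to write those three ingredients down.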

ILL-DEFINED PROBLEMS

Though many problems can be properly formalized, there are others for which this is not the case. Good examples are tasks that involve creativity and, generally speaking, all problems for which it is not possible to clearly define a given state and a goal state. Formalizing a problem such as “Please paint a beautiful picture” may be impossible.

Still, this is a problem most people would be able to approach in one way or the other, even if the result may be totally different from person to person. And while Knut might judge that picture X is gorgeous, you might completely disagree.

The line between well-defined and ill-defined problems is not always neat: ill-defined problems often involve sub-problems that are perfectly well-defined. On the other hand, many everyday problems that seem completely well-defined involve, when examined in detail, a great amount of creativity and ambiguity. Consider Knut’s fairly ill-defined task of writing an essay: he will not be able to complete it without first understanding the text he has to write about. This step is the first subgoal Knut has to solve. In this example, an ill-defined problem involves a well-defined sub-problem.

RESTRUCTURING: THE GESTALTIST APPROACH

One dominant approach to problem solving originated with Gestalt psychologists in the 1920s. Their understanding of problem solving emphasizes behavior in situations requiring relatively novel means of attaining goals and suggests that problem solving involves a process called restructuring. From a Gestalt perspective, two main questions have to be considered to understand the process of problem solving: (1) How is a problem represented in a person’s mind? and (2) How does solving this problem involve a reorganization, or restructuring, of this representation?

HOW IS A PROBLEM REPRESENTED IN THE MIND?

Current research distinguishes between internal and external representations: an internal representation is held in memory and has to be retrieved by cognitive processes, while an external representation exists in the environment, such as physical objects or symbols whose information can be picked up and processed by the perceptual system.

Generally speaking, problem representations are models of the situation as experienced by the solver. Representing a problem means analyzing it and splitting it into separate components, including objects, predicates, state space, operators, and selection criteria.
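These five components can be made concrete with a small state-space search. The sketch below is a minimal Python illustration, not part of the original text: it assumes a hypothetical puzzle with a 4-cup jug A and a 3-cup jug B (goal: exactly 2 cups in A), and the names `CAPACITY`, `operators`, `is_goal`, and `solve` are our own. The jugs are the objects, `is_goal` is a predicate, the reachable (A, B) pairs form the state space, fill/empty/pour are the operators, and breadth-first search supplies the selection criterion (try shorter move sequences first).

```python
from collections import deque

# Objects: two jugs with hypothetical capacities (cups).
CAPACITY = (4, 3)

def operators(state):
    """Yield (operator name, next state) for every legal move from `state`."""
    a, b = state
    cap_a, cap_b = CAPACITY
    yield "fill A", (cap_a, b)
    yield "fill B", (a, cap_b)
    yield "empty A", (0, b)
    yield "empty B", (a, 0)
    pour = min(a, cap_b - b)  # amount A can pour into B
    yield "pour A->B", (a - pour, b + pour)
    pour = min(b, cap_a - a)  # amount B can pour into A
    yield "pour B->A", (a + pour, b - pour)

def is_goal(state):
    """Goal predicate: exactly 2 cups in jug A."""
    return state[0] == 2

def solve(start=(0, 0)):
    """Breadth-first search over the state space: fewest moves first."""
    frontier = deque([(start, [])])
    seen = {start}
    while frontier:
        state, path = frontier.popleft()
        if is_goal(state):
            return path, state
        for name, nxt in operators(state):
            if nxt not in seen:
                seen.add(nxt)
                frontier.append((nxt, path + [name]))
    return None, None

path, final = solve()
print(len(path), final)
print(path)
```

With these capacities the search should report a 6-move solution. Keeping the same objects and operators but changing the selection criterion (for example, depth-first instead of breadth-first search) can produce longer solutions from the same representation.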

The efficiency of problem solving depends on the underlying representations in a person’s mind, which usually also involve personal aspects. Re-analyzing the problem along different dimensions, or changing from one representation to another, can lead to a new understanding of the problem. This is called restructuring. The following example illustrates this:

Two boys of different ages are playing badminton. The older one is a more skilled player, and therefore the outcome of matches between the two becomes predictable. After repeated defeats the younger boy finally loses interest in playing. The older boy now faces a problem, namely that he has no one to play with anymore. The usual options, according to M. Wertheimer (1945/82), range from “offering candy” and “playing a different game” to “not playing at full ability” and “shaming the younger boy into playing.” All of these strategies aim at making the younger boy stay.

The older boy instead comes up with a different solution: He proposes that they should try to keep the birdie in play as long as possible. Thus, they change from a game of competition to one of cooperation. The proposal is happily accepted, and the game is on again. The key in this story is that the older boy restructured the problem, having found that his attitude toward the game made it difficult to keep the younger boy playing. With the new type of game the problem is solved: the older boy is not bored, and the younger boy is not frustrated. In some cases, new representations can make a problem more difficult or much easier to solve. In the latter case insight – the sudden realization of a problem’s solution – may be the key to finding a solution.

There are two very different ways of approaching a goal-oriented situation. In one case, an organism readily reproduces the response to the given problem from past experience. This is called reproductive thinking.

The second way requires something new and different to achieve the goal; prior learning is of little help here. Such productive thinking is sometimes argued to involve insight. Gestalt psychologists state that insight problems are a separate category of problems in their own right.

Tasks that might involve insight usually share certain features: they require something new and non-obvious to be done, and in most cases they are difficult enough that the first attempt at a solution is likely to fail. When you solve a problem of this kind, you often have a so-called “aha” experience: the solution pops into mind all of a sudden. In one moment you have no idea how to answer the problem and feel you are not making any progress trying out different ideas, but in the next moment the problem is solved.

For readers who would like to experience such an effect, here is an example of an insight problem: Knut is given four pieces of chain, each made up of three links. The task is to join them all into a single closed loop. Opening a link costs 2 cents, and closing a link costs 3 cents. Knut has 15 cents to spend. What should Knut do?

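Solution: Knut opens all three links of one piece (3 × 2 = 6 cents) and uses them to join the other three pieces into a closed loop, closing each of them again (3 × 3 = 9 cents). This costs exactly 15 cents.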
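The arithmetic of the chain problem can be checked with a short Python sketch (it reveals the answer). It compares the obvious approach, opening one link at each of the four joints of the loop, with the insight approach of sacrificing one whole piece. The constant and function names are illustrative only.

```python
OPEN_COST = 2   # cents to open one link
CLOSE_COST = 3  # cents to close one link
BUDGET = 15     # cents Knut has

def cost(links_opened):
    """Every link that is opened must also be closed again."""
    return links_opened * (OPEN_COST + CLOSE_COST)

# Obvious approach: four pieces in a loop need four joints,
# so open (and close) one end link at each joint.
obvious = cost(4)

# Insight approach: open all three links of ONE piece and use them
# as the three joints between the remaining three pieces.
insight = cost(3)

print(obvious, obvious <= BUDGET)  # 20 False
print(insight, insight <= BUDGET)  # 15 True
```

The insight lies in the representation: the obvious approach treats all four pieces as parts of the loop, whereas the cheaper solution treats one piece as a supply of loose links.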

To show that solving insight problems involves restructuring, psychologists have created a number of problems that are more difficult to solve for participants with relevant previous experience, since it is harder for them to change their representation of the given situation.

For non-insight problems the opposite is the case: previous experience helps. Solving arithmetic problems, for instance, relies on schemas through which one can reach the solution step by step.

Sometimes, previous experience or familiarity can even make problem solving more difficult. This is the case whenever habitual approaches get in the way of finding new ones, an effect called fixation.

FUNCTIONAL FIXEDNESS

Functional fixedness concerns the solution of object-use problems. The basic idea is that when the usual function of an object is emphasized, it will be far more difficult for a person to use that object in a novel manner. An example of this effect is the candle problem: imagine you are given a box of matches, some candles, and tacks. On the wall of the room there is a cork-board. Your task is to fix a candle to the cork-board in such a way that no wax will drop on the floor when the candle is lit. Got an idea?

Here’s a clue: when people are confronted with a problem and given certain objects to solve it, it is difficult for them to figure out that they could use the objects in a different way. In this example, the box has to be recognized as a support rather than as a container: tack the matchbox to the wall and place the candle upright in the box. The box will catch the falling wax.

A further example is the two-string problem: Knut is left in a room with a pair of pliers and given the task of tying together two strings that hang from the ceiling. The problem he faces is that he can never reach both strings at the same time because they are too far apart. What can Knut do?

Solution: Knut has to recognize he can use the pliers in a novel function: as weight for a pendulum. He can tie them to one of the strings, push it away, hold the other string and wait for the first one to swing toward him.

MENTAL FIXEDNESS

Functional fixedness, as in the examples above, illustrates a mental set: a person’s tendency to respond to a given task in a manner based on past experience. Because Knut associates an object with one particular function, he has difficulty using it in a different way (e.g., the pliers as a pendulum’s weight).

One approach to studying fixation has been to study wrong-answer verbal insight problems. In these problems, people tend to give an incorrect answer when they fail to solve the problem, rather than give no answer at all.

A typical example: People are told that the area of a lake covered by water lilies doubles every 24 hours and that it takes 60 days to cover the whole lake. They are then asked how many days it takes to cover half the lake. The typical response is “30 days”, whereas 59 days is correct: because the covered area doubles every day, the lake is half covered exactly one day before it is fully covered.
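The doubling logic can be made explicit with a few lines of Python. This is a minimal sketch assuming clean daily doubling that reaches full coverage on day 60; the names are ours.

```python
DAYS_TO_FULL = 60  # the lake is completely covered on day 60

def coverage(day, full_day=DAYS_TO_FULL):
    """Fraction of the lake covered on `day`, if the covered area doubles
    every 24 hours and reaches 1.0 (the whole lake) on `full_day`."""
    return 2.0 ** (day - full_day)

# First day on which at least half of the lake is covered.
half_day = next(d for d in range(1, DAYS_TO_FULL + 1) if coverage(d) >= 0.5)
print(half_day)      # 59
print(coverage(30))  # 2**-30: less than one billionth of the lake
```

The intuitive answer of 30 days treats growth as linear; under doubling, day 30 leaves the lake almost entirely uncovered.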

These wrong solutions are due to an inaccurate interpretation, or representation, of the problem. This can happen because of sloppiness (a quick, shallow reading of the problem and/or weak monitoring of one’s efforts to reach a solution). In this case, error feedback should help people reconsider the problem’s features, notice that their first answer is inadequate, and find the correct solution. If, however, people are truly fixated on their incorrect representation, being told that the answer is wrong does not help. P. I. Dallob and R. L. Dominowski investigated these two possibilities in a 1992 study. In approximately one third of the cases, error feedback led to correct answers, suggesting that only about one third of the wrong answers were due to inadequate monitoring.

Another approach is to study examples with and without a preceding analogous task. In cases such as the water-jug task, analogous thinking does lead to a correct solution, but a different approach can make the problem much simpler:

Imagine Knut again. This time he is given three jugs with different capacities and is asked to measure out a required amount of water. He is not allowed to use anything except the jugs and as much water as he likes. In the first case the sizes are 127 cups, 21 cups, and 3 cups, and his goal is to measure 100 cups of water.

In the second case Knut is asked to measure 18 cups from jugs of 39, 15 and 3 cups capacity.

Participants who are given the 100-cup task first tend to carry its complicated method (127 − 21 − 3 − 3 = 100) over to the second task (39 − 15 − 3 − 3 = 18). Participants who have not seen the 100-cup task solve the 18-cup case by simply adding 3 cups to 15.
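The two water-jug tasks can be compared with a brute-force Python sketch. It is an arithmetic abstraction under our own assumptions: each jug’s full capacity is either added to the target (+) or poured off (−) at most twice, and the order of pouring is ignored. The function name `combos` and the `max_uses` limit are hypothetical.

```python
from itertools import product

def combos(jugs, target, max_uses=2):
    """Find ways to reach `target` cups by adding (+) or pouring off (-)
    each jug's full capacity up to `max_uses` times.

    Returns (coefficients, steps) pairs, fewest steps first. A coefficient
    of +1 means "add one full jug"; -1 means "pour off one full jug"."""
    found = []
    for coeffs in product(range(-max_uses, max_uses + 1), repeat=len(jugs)):
        if sum(c * size for c, size in zip(coeffs, jugs)) == target:
            found.append((coeffs, sum(abs(c) for c in coeffs)))
    return sorted(found, key=lambda item: item[1])

print(combos((127, 21, 3), 100))  # [((1, -1, -2), 4)], i.e. 127 - 21 - 3 - 3
print(combos((39, 15, 3), 18))    # [((0, 1, 1), 2), ((1, -1, -2), 4)]
```

Within these limits the first task has only the long solution, while the second task admits both the learned pattern and the shorter 15 + 3. A solver fixated on the first pattern finds a valid answer but misses the cheaper one.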

SOLVING PROBLEMS BY ANALOGY

One special kind of restructuring is analogical problem solving. Here, the solution to one problem (the target problem) is found by drawing on an analogous solution to another problem (the base, or source, problem).

An example of this kind of strategy is the radiation problem posed by K. Duncker in 1945:

As a doctor you have to treat a patient with a malignant, inoperable tumor, buried deep inside the body. There exists a special kind of ray which is harmless at a low intensity, but at sufficiently high intensity is able to destroy the tumor. At such high intensity, however, the ray will also destroy the healthy tissue it passes through on the way to the tumor. What can be done to destroy the tumor while preserving the healthy tissue?

When participants in an experiment were asked this question, most of them could not come up with the appropriate answer. Then they were told a story that went something like this:

A general wanted to capture his enemy’s fortress. He gathered a large army to launch a full-scale direct attack, but then learned that all the roads leading directly towards the fortress were blocked by landmines. These roadblocks were designed in such a way that it was possible for small groups of the fortress-owner’s men to pass over them safely, but a large group of men would set them off. The general devised the following plan: He divided his troops into several smaller groups and ordered each of them to march down a different road, timed in such a way that the entire army would reunite exactly when reaching the fortress and could hit with full strength.

Here, the story about the general is the source problem, and the radiation problem is the target problem. The fortress is analogous to the tumor and the big army corresponds to the highly intensive ray. Likewise, a small group of soldiers represents a ray at low intensity. The solution to the problem is to split the ray up, as the general did with his army, and send the now harmless rays towards the tumor from different angles in such a way that they all meet when reaching it. No healthy tissue is damaged but the tumor itself gets destroyed by the ray at its full intensity.
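The convergence idea reduces to simple arithmetic. The Python sketch below uses made-up units (any tissue hit by an intensity of 10 or more is destroyed, and the tumor needs a total of 10) and ignores physical effects such as attenuation; `DAMAGE_THRESHOLD`, `FULL_DOSE`, and `converge` are hypothetical names.

```python
from fractions import Fraction

# Hypothetical units: tissue hit by an intensity >= 10 is destroyed,
# and the tumor needs a total of 10. Attenuation is ignored.
DAMAGE_THRESHOLD = 10
FULL_DOSE = 10

def converge(n_rays):
    """Split FULL_DOSE into n_rays equal rays aimed from different angles.

    Healthy tissue on each path is crossed by a single ray, while the tumor,
    where all paths meet, receives the sum of all rays."""
    per_ray = Fraction(FULL_DOSE, n_rays)
    healthy_tissue_survives = per_ray < DAMAGE_THRESHOLD
    tumor_destroyed = per_ray * n_rays >= DAMAGE_THRESHOLD
    return per_ray, healthy_tissue_survives, tumor_destroyed

print(converge(1))  # (Fraction(10, 1), False, True): one strong ray, like the full army
print(converge(5))  # (Fraction(2, 1), True, True): five weak rays, like the small groups
```

Exact fractions are used so that the sum of the rays equals the full dose without floating-point rounding.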

M. Gick and K. Holyoak presented Duncker’s radiation problem to groups of participants in 1980 and 1983. Only 10 percent of participants were able to solve the problem right away, but 30 percent could solve it when they had read the story of the general beforehand. After being given an additional hint to use the story as help, 75 percent solved the problem.

Following these results, Gick and Holyoak concluded that analogical problem solving consists of three steps:

1. Recognizing that an analogical connection exists between the source (base) problem and the target problem.

2. Mapping corresponding parts of the two problems onto each other (fortress → tumor, army → ray, etc.)

3. Applying the mapping to generate a parallel solution to the target problem (using little groups of soldiers approaching from different directions → sending several weaker rays from different directions)
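The three steps can be pictured as a small pipeline. The Python sketch below is a toy, not Gick and Holyoak’s model: the role-based descriptions of the two problems, the recognition criterion (same roles), and the function names are all hypothetical choices made for illustration.

```python
# Hypothetical, hand-made descriptions of the two problems: role -> element.
source = {"force": "army", "barrier": "landmines", "goal": "fortress"}
target = {"force": "ray", "barrier": "healthy tissue", "goal": "tumor"}
source_solution = "split the army and let the parts converge on the fortress"

def recognize(src, tgt):
    """Step 1 (toy criterion): treat the problems as analogous if they have the same roles."""
    return src.keys() == tgt.keys()

def map_elements(src, tgt):
    """Step 2: pair each source element with the target element in the same role."""
    return {src[role]: tgt[role] for role in src}

def apply_mapping(solution, mapping):
    """Step 3: rewrite the source solution in terms of the target problem."""
    for src_element, tgt_element in mapping.items():
        solution = solution.replace(src_element, tgt_element)
    return solution

if recognize(source, target):
    mapping = map_elements(source, target)
    print(mapping)  # {'army': 'ray', 'landmines': 'healthy tissue', 'fortress': 'tumor'}
    print(apply_mapping(source_solution, mapping))
    # split the ray and let the parts converge on the tumor
```

In the experiments, step 1 is the hard part: participants often failed to notice the analogy until they were told to use the story, which this sketch simply assumes away.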

Next, Gick and Holyoak looked for factors that could aid the recognition and mapping processes.

The abstract concept that links the target problem with the base problem is called the problem schema. Gick and Holyoak facilitated the activation of a schema in their participants by giving them two stories and asking them to compare and summarize them. This activation of problem schemas is called “schema induction”.

The experimenters had participants read stories that presented problems and their solutions. One story was the above story about the general, and other stories required the same problem schema (i.e., if a heavy force coming from one direction is not suitable, use multiple smaller forces that simultaneously converge on the target). The experimenters manipulated how many of these stories the participants read before the participants were asked to solve the radiation problem. The experiment showed that in order to solve the target problem, reading two stories with analogical problems is more helpful than reading only one story. This evidence suggests that schema induction can be achieved by exposing people to multiple problems with the same problem schema.
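One toy way to picture why two stories help more than one: treat each story as a set of features and keep only what the stories share. The Python sketch below is our own illustration, not the experimenters’ procedure; the feature sets and the second “fire” story are hypothetical.

```python
# Each story is reduced to a hypothetical set of features: surface details
# (who, where, with what) plus the abstract structure of the solution.
general_story = {"general", "fortress", "army", "landmines",
                 "large force blocked", "split into small forces",
                 "converge at the same time"}
fire_story = {"fire chief", "burning shed", "buckets", "narrow gaps",
              "large force blocked", "split into small forces",
              "converge at the same time"}

def induce_schema(*stories):
    """Toy schema induction: keep only the features that all stories share."""
    return set.intersection(*stories)

print(len(induce_schema(general_story)))  # 7: surface details still included
print(sorted(induce_schema(general_story, fire_story)))
# ['converge at the same time', 'large force blocked', 'split into small forces']
```

With a single story, surface details and abstract structure cannot be told apart. Comparing two stories strips away the details, leaving something closer to the problem schema.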

HOW DO EXPERTS SOLVE PROBLEMS?

An expert is someone who devotes large amounts of their time and energy to one specific field of interest in which they, subsequently, reach a certain level of mastery. It should not be a surprise that experts tend to be better at solving problems in their field than novices (i.e., people who are beginners or not as well-trained in a field as experts) are. Experts are faster at coming up with solutions and have a higher rate of correct solutions. But what is the difference between the way experts and non-experts solve problems? Research on the nature of expertise has come up with the following conclusions:

1. Experts know more about their field,

2. their knowledge is organized differently, and

3. they spend more time analyzing the problem.

Expertise is domain-specific: when it comes to problems outside the experts’ domain of expertise, their performance often does not differ from that of novices.

Knowledge: An experiment by Chase and Simon (1973) dealt with the question of how well experts and novices are able to reproduce positions of chess pieces on chess boards after a brief presentation. The results showed that experts were far better at reproducing actual game positions, but that their performance was comparable with that of novices when the chess pieces were arranged randomly on the board. Chase and Simon concluded that the superior performance on actual game positions was due to the ability to recognize familiar patterns: A chess expert has up to 50,000 patterns stored in memory. In comparison, a good player might know about 1,000 patterns by heart and a novice only a few or none at all. This very detailed knowledge is of crucial help when an expert is confronted with a new problem in their field. Still, it is not only the amount of knowledge that makes an expert more successful. Experts also organize their knowledge differently from novices.
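The pattern-recognition explanation can be expressed as a toy chunking model. In the Python sketch below, the memory capacity of 7 chunks, the piece names, and the board contents are all hypothetical; the only idea taken from the text is that a familiar pattern can be stored as a single unit, while random positions match no pattern.

```python
STM_CHUNKS = 7  # hypothetical short-term memory capacity, in chunks

def recall(board, known_patterns, capacity=STM_CHUNKS):
    """Number of pieces recalled from a briefly shown board.

    Each familiar pattern found on the board is stored as one chunk; every
    piece not covered by a pattern is a chunk of its own. Pattern chunks are
    stored before single pieces, and only `capacity` chunks fit."""
    remaining = set(board)
    chunks = []
    for pattern in known_patterns:
        if pattern <= remaining:  # the whole familiar pattern is on the board
            chunks.append(pattern)
            remaining -= pattern
    chunks.extend({piece} for piece in remaining)
    return sum(len(chunk) for chunk in chunks[:capacity])

# Hypothetical data: a "game" position built from 4 familiar 5-piece patterns,
# and a "random" position of 20 pieces that match no pattern.
patterns = [frozenset(f"pattern{i}-piece{j}" for j in range(5)) for i in range(4)]
game_board = set().union(*patterns)
random_board = {f"random-piece{k}" for k in range(20)}

print(recall(game_board, patterns), recall(game_board, []))      # 20 7
print(recall(random_board, patterns), recall(random_board, []))  # 7 7
```

The expert (who knows the patterns) and the novice (who knows none) differ only on the game position, mirroring the qualitative result described above.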

Organization: In 1981 M. Chi and her co-workers took a set of 24 physics problems and presented them to a group of physics professors as well as to a group of students with only one semester of physics. The task was to group the problems based on their similarities. The students tended to group the problems based on their surface structure (i.e., similarities of objects used in the problem, such as sketches illustrating the problem), whereas the professors used their deep structure (i.e., the general physical principles that underlie the problems) as criteria. By recognizing the actual structure of a problem experts are able to connect the given task to the relevant knowledge they already have (e.g., another problem they solved earlier which required the same strategy).
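The difference between sorting by surface structure and by deep structure can be shown with the same grouping routine applied to different keys. In this Python sketch the four problems and their labels are hypothetical, not items from Chi and colleagues’ set.

```python
from collections import defaultdict

# Hypothetical problems: (id, surface feature, underlying physical principle).
problems = [
    ("P1", "inclined plane", "conservation of energy"),
    ("P2", "inclined plane", "Newton's second law"),
    ("P3", "spring", "conservation of energy"),
    ("P4", "spring", "Newton's second law"),
]

def group_by(problems, field):
    """Sort problems into piles that share the value at position `field`."""
    piles = defaultdict(list)
    for problem in problems:
        piles[problem[field]].append(problem[0])
    return dict(piles)

print(group_by(problems, 1))  # novice-style piles: by surface structure
print(group_by(problems, 2))  # expert-style piles: by deep structure
```

The same problems end up in completely different piles; only the deep-structure piles group problems that call for the same solution strategy.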

Analysis: Experts often spend more time analyzing a problem before actually trying to solve it. This approach may look like a slow start, but in the long run it is much more effective. A novice, on the other hand, might start working on the problem right away but often reaches dead ends after choosing the wrong path at the very beginning.

REFERENCES

Chase, W. G., & Simon, H. A. (1973). Perception in chess. Cognitive Psychology, 4(1), 55–81.

Chi, M. T., Feltovich, P. J., & Glaser, R. (1981). Categorization and representation of physics problems by experts and novices. Cognitive Science, 5(2), 121–152.

Dominowski, R. L., & Dallob, P. (1995). Insight and problem solving. In R. J. Sternberg & J. E. Davidson (Eds.), The nature of insight (pp. 33–62). Cambridge, MA: MIT Press.

Duncker, K., & Lees, L. S. (1945). On problem-solving. Psychological Monographs, 58(5).

Gick, M. L., & Holyoak, K. J. (1980). Analogical problem solving. Cognitive Psychology, 12(3), 306–355.

Gick, M. L., & Holyoak, K. J. (1983). Schema induction and analogical transfer. Cognitive Psychology, 15(1), 1–38.

Goldstein, E. B. (2005). Cognitive psychology: Connecting mind, research, and everyday experience. Belmont, CA: Thomson Wadsworth.

Wertheimer, M. (1945). Productive thinking. New York: Harper.

ESSENTIALS OF COGNITIVE PSYCHOLOGY Copyright © 2023 by Christopher Klein is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License, except where otherwise noted.


Explore Psychology


Insight Learning Theory: Definition, Stages, and Examples


Insight learning theory is all about those “lightbulb moments” we experience when we suddenly understand something. Instead of slowly figuring things out through trial and error, insight theory says we can suddenly see the solution to a problem in our minds.

This theory is super important because it helps us understand how our brains work when we learn and solve problems. It can help teachers find better ways to teach and improve our problem-solving skills and creativity. It’s not just useful in school—insight theory also greatly impacts science, technology, and business.


What Is Insight Learning?

Insight learning is like having a lightbulb moment in your brain. It’s when you suddenly understand something without needing to go through a step-by-step process. Instead of slowly figuring things out by trial and error, insight learning happens in a flash. One moment, you’re stuck, and the next, you have the solution.

This type of learning is all about those “aha” experiences that feel like magic. The key principles of insight learning involve recognizing patterns, making connections, and restructuring our thoughts. It’s as if our brains suddenly rearrange the pieces of a puzzle, revealing the big picture. So, next time you have a brilliant idea pop into your head out of nowhere, you might just be experiencing insight learning in action!

Three Components of Insight Learning Theory

Insight learning, a concept rooted in psychology, comprises three distinct properties that characterize its unique nature:

1. Sudden Realization

Unlike gradual problem-solving methods, insight learning involves sudden and profound understanding. Individuals may be stuck on a problem for a while, but then, seemingly out of nowhere, the solution becomes clear. This sudden “aha” moment marks the culmination of mental processes that have been working behind the scenes to reorganize information and generate a new perspective.

2. Restructuring of Problem-Solving Strategies

Insight learning often involves a restructuring of mental representations or problem-solving strategies. Instead of simply trying different approaches until stumbling upon the correct one, individuals experience a shift in how they perceive and approach the problem. This restructuring allows for a more efficient and direct path to the solution once insight occurs.

3. Aha Moments

A hallmark of insight learning is the experience of “aha” moments. These moments are characterized by a sudden sense of clarity and understanding, often accompanied by a feeling of satisfaction or excitement. It’s as if a mental lightbulb turns on, illuminating the solution to a previously perplexing problem.

These moments of insight can be deeply rewarding and serve as powerful motivators for further learning and problem-solving endeavors.

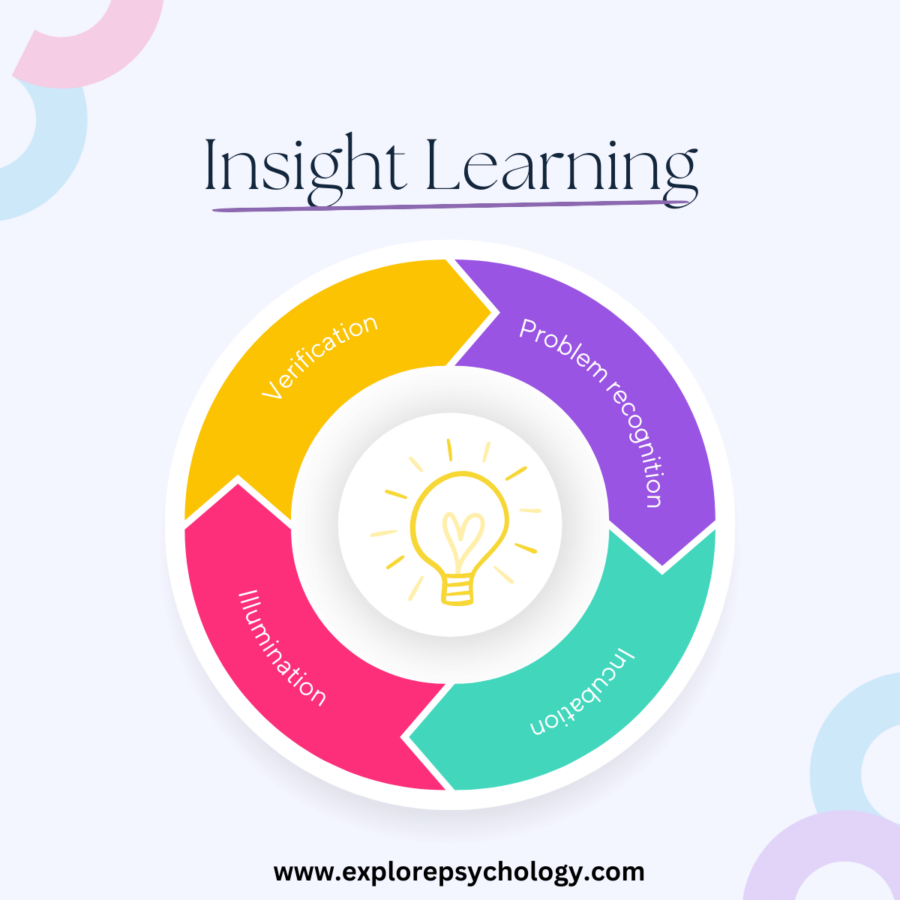

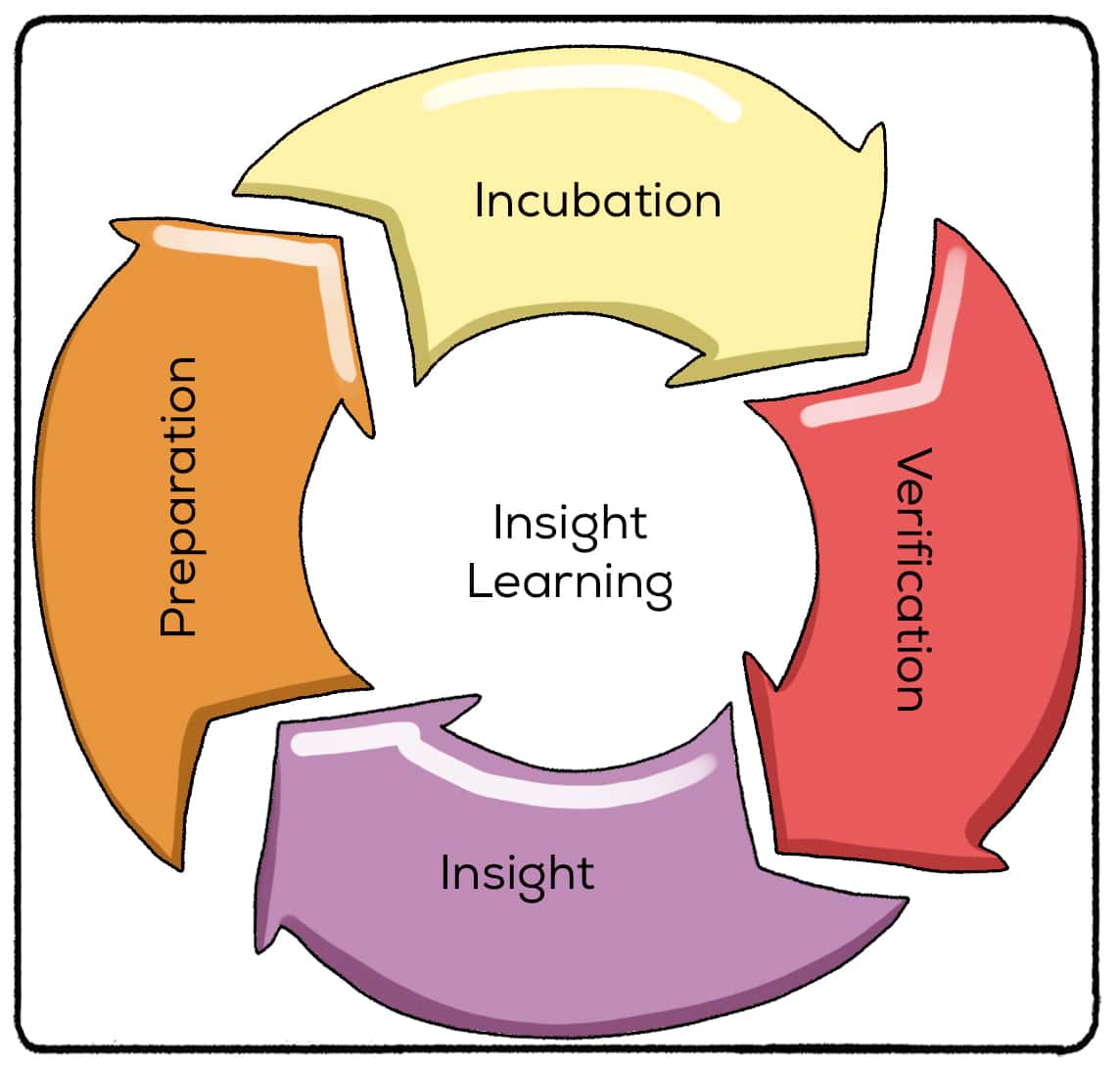

Four Stages of Insight Learning Theory

Insight learning unfolds in a series of distinct stages, each contributing to the journey from problem recognition to the sudden realization of a solution. These stages are as follows:

1. Problem Recognition

The first stage of insight learning involves recognizing and defining the problem at hand. This may entail identifying obstacles, discrepancies, or gaps in understanding that need to be addressed. Problem recognition sets the stage for the subsequent stages of insight learning by framing the problem and guiding the individual’s cognitive processes toward finding a solution.

2. Incubation

After recognizing the problem, individuals often enter a period of incubation where the mind continues to work on the problem unconsciously. During this stage, the brain engages in background processing, making connections, and reorganizing information without the individual’s conscious awareness.

While it may seem like a period of inactivity on the surface, incubation is a crucial phase where ideas gestate, and creative solutions take shape beneath the surface of conscious thought.

3. Illumination

The illumination stage marks the sudden emergence of insight or understanding. It is characterized by a moment of clarity and realization, where the solution to the problem becomes apparent in a flash of insight.

This “aha” moment often feels spontaneous and surprising, as if the solution has been waiting just below the surface of conscious awareness to be revealed. Illumination is the culmination of the cognitive processes initiated during problem recognition and incubation, resulting in a breakthrough in understanding.

4. Verification

Following the illumination stage, individuals verify the validity and feasibility of their insights by testing the proposed solution. This may involve applying the solution in practice, checking it against existing knowledge or expertise, or seeking feedback from others.

Verification serves to confirm the efficacy of the newfound understanding and ensure its practical applicability in solving the problem at hand. It also provides an opportunity to refine and iterate on the solution based on real-world feedback and experience.

Famous Examples of Insight Learning

Examples of insight learning can be observed in various contexts, ranging from everyday problem-solving to scientific discoveries and creative breakthroughs. Some well-known examples of how insight learning theory works include the following:

Archimedes’ Principle

According to legend, the ancient Greek mathematician Archimedes experienced a moment of insight while taking a bath. He noticed that the water level rose as he immersed his body, leading him to realize that the volume of water displaced was equal to the volume of the submerged object. This insight led to the formulation of Archimedes’ principle, a fundamental concept in fluid mechanics.

Köhler’s Chimpanzee Experiments

In Wolfgang Köhler’s experiments with chimpanzees on Tenerife in the 1910s, the primates demonstrated insight learning in solving novel problems. One famous example involved a chimpanzee named Sultan, who used sticks to reach bananas placed outside his cage. After unsuccessful attempts at using a single stick, Sultan suddenly combined two sticks to create a longer tool, demonstrating insight into the problem and the ability to use tools creatively.

Eureka Moments in Science

Many scientific discoveries have been described as moments of insight. For instance, Charles Darwin recalled in his autobiography that the idea of natural selection struck him suddenly in 1838, while he was reading Thomas Malthus’s essay on population.

Everyday Examples of Insight Learning Theory

You can probably think of some good examples of the role that insight learning theory plays in your everyday life. A few common real-life examples include:

- Finding a lost item: You might spend a lot of time searching for a lost item, like your keys or phone, but suddenly remember exactly where you left them when you’re doing something completely unrelated. This sudden recollection is an example of insight learning.

- Untangling knots: When trying to untangle a particularly tricky knot, you might struggle with it for a while without making progress. Then, suddenly, you realize a new approach or see a pattern that helps you quickly unravel the knot.

- Cooking improvisation: If you’re cooking and run out of a particular ingredient, you might suddenly come up with a creative substitution or alteration to the recipe that works surprisingly well. This moment of improvisation demonstrates insight learning in action.

- Solving riddles or brain teasers: You might initially be stumped when trying to solve a riddle or a brain teaser. However, after some time pondering the problem, you suddenly grasp the solution in a moment of insight.

- Learning a new skill: Learning to ride a bike or play a musical instrument often involves moments of insight. You might struggle with a certain technique or concept but then suddenly “get it” and experience a significant improvement in your performance.

- Navigating a maze: While navigating through a maze, you might encounter dead ends and wrong turns. However, after some exploration, you suddenly realize the correct path to take and reach the exit efficiently.

- Remembering information: When studying for a test, you might find yourself unable to recall a particular piece of information. Then, when you least expect it, the answer suddenly comes to you in a moment of insight.

These everyday examples illustrate how insight learning is a common and natural part of problem-solving and learning in our daily lives.

Exploring the Uses of Insight Learning

Insight learning isn’t just an interesting explanation for how we suddenly come up with a solution to a problem; it also has many practical applications. Here are just a few ways that people can use insight learning in real life:

Problem-Solving

Insight learning helps us solve all sorts of problems, from finding lost items to untangling knots. When we’re stuck, our brains might suddenly come up with a genius idea or a new approach that saves the day. It’s like having a mental superhero swoop in to rescue us when we least expect it!

Creativity

Ever had a brilliant idea pop into your head out of nowhere? That’s insight learning at work! Whether you’re writing a story, composing music, or designing something new, insight can spark creativity and help you come up with fresh, innovative ideas.

Learning New Skills

Learning isn’t always about memorizing facts or following step-by-step instructions. Sometimes, it’s about having those “aha” moments that make everything click into place. Insight learning can help us grasp tricky concepts, master difficult skills, and become better learners overall.

Innovation

Insight learning isn’t just for individuals; it’s also crucial for innovation and progress in society. Scientists, inventors, and entrepreneurs rely on insight to make groundbreaking discoveries and develop new technologies that improve our lives. Who knows? The next big invention could start with someone having a brilliant idea in the shower!

Overcoming Challenges

Life is full of challenges, but insight learning can help us tackle them with confidence. Whether it’s navigating a maze, solving a puzzle, or facing a tough decision, insight can provide the clarity and creativity we need to overcome obstacles and achieve our goals.

The next time you’re feeling stuck or uninspired, remember: the solution might be just one “aha” moment away!

Alternatives to Insight Learning Theory

While insight learning theory emphasizes sudden understanding and restructuring of problem-solving strategies, several alternative theories offer different perspectives on how learning and problem-solving occur. Here are some of the key alternative theories:

Behaviorism

Behaviorism is a theory that focuses on observable, overt behaviors and the external factors that influence them. According to behaviorists like B.F. Skinner, learning is a result of conditioning, where behaviors are reinforced or punished based on their consequences.

In contrast to insight learning theory, behaviorism suggests that learning occurs gradually through repeated associations between stimuli and responses rather than sudden insights or realizations.

Cognitive Learning Theory

Cognitive learning theory, influenced by psychologists such as Jean Piaget and Lev Vygotsky, emphasizes the role of mental processes in learning. This theory suggests that individuals actively construct knowledge and understanding through processes like perception, memory, and problem-solving.

Cognitive learning theory acknowledges the importance of insight and problem-solving strategies but places greater emphasis on cognitive structures and processes underlying learning.

Gestalt Psychology

Gestalt psychology, which influenced insight learning theory, proposes that learning and problem-solving involve the organization of perceptions into meaningful wholes or “gestalts.”

Gestalt psychologists like Max Wertheimer emphasized the role of insight and restructuring in problem-solving, but their theories also consider other factors, such as perceptual organization, pattern recognition, and the influence of context.

Information Processing Theory

Information processing theory views the mind as a computer-like system that processes information through various stages, including input, processing, storage, and output. This theory emphasizes the role of attention, memory, and problem-solving strategies in learning and problem-solving.

While insight learning theory focuses on sudden insights and restructuring, information processing theory considers how individuals encode, manipulate, and retrieve information to solve problems.





Thinking and Intelligence

Introduction to Thinking and Problem-Solving

What you’ll learn to do: describe cognition and problem-solving strategies.

Imagine all of your thoughts as if they were physical entities, swirling rapidly inside your mind. How is it possible that the brain is able to move from one thought to the next in an organized, orderly fashion? The brain is endlessly perceiving, processing, planning, organizing, and remembering—it is always active. Yet, you don’t notice most of your brain’s activity as you move throughout your daily routine. This is only one facet of the complex processes involved in cognition. Simply put, cognition is thinking, and it encompasses the processes associated with perception, knowledge, problem solving, judgment, language, and memory. Scientists who study cognition are searching for ways to understand how we integrate, organize, and utilize our conscious cognitive experiences without being aware of all of the unconscious work that our brains are doing (for example, Kahneman, 2011).

Learning Objectives

- Distinguish between concepts and prototypes

- Explain the difference between natural and artificial concepts

- Describe problem solving strategies, including algorithms and heuristics

- Explain some common roadblocks to effective problem solving

CC licensed content, Original

- Modification, adaptation, and original content. Provided by: Lumen Learning. License: CC BY: Attribution

CC licensed content, Shared previously

- What Is Cognition? Authored by: OpenStax College. Located at: https://openstax.org/books/psychology-2e/pages/7-1-what-is-cognition. License: CC BY: Attribution. License Terms: Download for free at https://openstax.org/books/psychology-2e/pages/1-introduction

- A Thinking Man Image. Authored by: Wesley Nitsckie. Located at: https://www.flickr.com/photos/nitsckie/5507777269. License: CC BY-SA: Attribution-ShareAlike

General Psychology Copyright © by OpenStax and Lumen Learning is licensed under a Creative Commons Attribution 4.0 International License, except where otherwise noted.


IResearchNet

Problem Solving

Problem solving is a fundamental cognitive process that plays a central role in many areas of human life, especially education. This article examines the nature of problem solving: its theoretical foundations, the cognitive and psychological processes that underlie it, and the application of problem-solving skills in educational settings and the wider world. Drawing on both theory and practice, it argues that cultivating problem-solving abilities is a cornerstone of cognitive development and innovation, outlines applications ranging from education to clinical psychology, and points to directions for future research and intervention.

Introduction

Problem solving is a core cognitive process in both psychology and education, and it underpins human intellectual development and our adaptation to a changing world. The capacity to identify, analyze, and overcome obstacles is fundamental to human nature and has long interested psychologists, educators, and researchers. This article explores the theoretical foundations, cognitive mechanisms, and practical applications of problem solving in educational contexts, with the aim of clarifying the role problem solving plays in enhancing learning, fostering creativity, and promoting cognitive growth. Across psychological research and educational practice, problem solving is a cornerstone skill that enables individuals to navigate the complexities of their world. The article's thesis is that problem solving is not merely a cognitive skill but a dynamic process with profound implications for intellectual growth and for application in diverse real-world contexts.

The Nature of Problem Solving

Problem solving, within the realm of psychology, refers to the cognitive process through which individuals identify, analyze, and resolve challenges or obstacles to achieve a desired goal. It encompasses a range of mental activities, such as perception, memory, reasoning, and decision-making, aimed at devising effective solutions in the face of uncertainty or complexity.

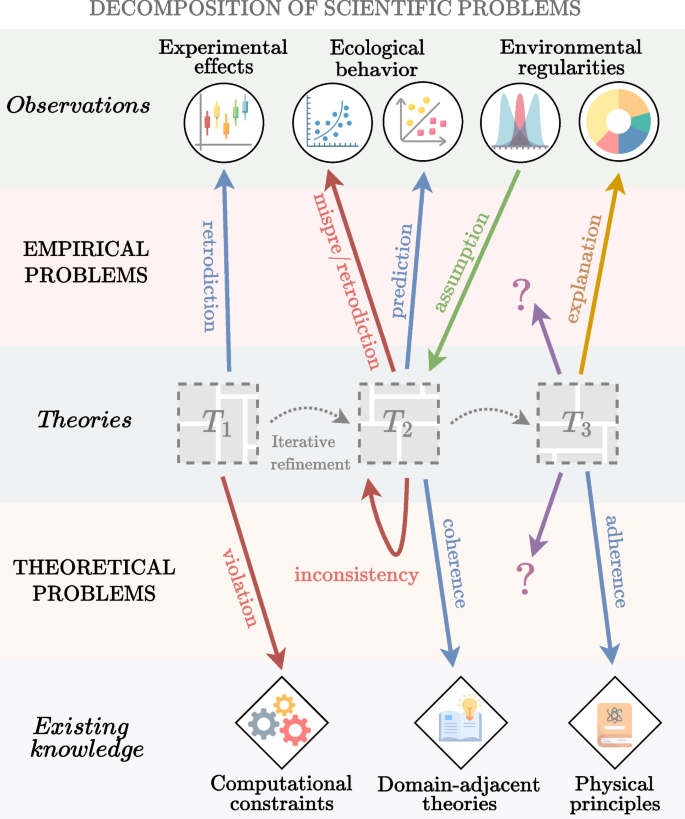

Problem solving as a subject of inquiry has drawn on several theoretical perspectives, each offering distinct insights into its nature. Gestalt psychology highlighted the role of insight and restructuring, emphasizing that individuals often reorganize their mental representation of a problem to reach a solution. Information processing theories, inspired by computer models, emphasize the systematic, step-by-step nature of problem solving, likening it to the retrieval and manipulation of information. More broadly, cognitive psychology provides a framework for understanding problem solving by examining the cognitive processes involved, such as attention, memory, and decision-making. Together, these perspectives offer a richer understanding of how people approach and carry out problem-solving tasks.

Problem solving is not a single, monolithic act but a series of interrelated stages that individuals progress through. These stages include:

- Problem Representation: At the outset, individuals must clearly define and represent the problem they face. This involves grasping the nature of the problem, identifying its constraints, and understanding the relationships between various elements.

- Goal Setting: Setting a clear and attainable goal is essential for effective problem solving. This step involves specifying the desired outcome or solution and establishing criteria for success.

- Solution Generation: In this stage, individuals generate potential solutions to the problem. This often involves brainstorming, creative thinking, and the exploration of different strategies to overcome the obstacles presented by the problem.

- Solution Evaluation: After generating potential solutions, individuals must evaluate these alternatives to determine their feasibility and effectiveness. This involves comparing solutions, considering potential consequences, and making choices based on the criteria established in the goal-setting phase.

Together, these stages provide a structured approach to addressing challenges effectively. Understanding them is important both for researchers who study problem solving and for educators who aim to foster problem-solving skills in learners.
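The four stages described above (representation, goal setting, generation, evaluation) can be sketched as a simple generate-and-evaluate loop. This is a minimal illustration under our own assumptions, not a model taken from the source: the `Option` and `Problem` classes, the helper functions, and the "broken printer" scenario with its hours and costs are all hypothetical, chosen only to make each stage visible in code.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class Option:
    """A candidate solution; the attributes are hypothetical, for illustration only."""
    name: str
    hours_needed: float
    cost: float
    gives_printed_copy: bool


@dataclass
class Problem:
    """Stage 1, problem representation: the available options plus a time constraint."""
    options: List[Option]
    hours_available: float


def set_goal(problem: Problem) -> Callable[[Option], bool]:
    """Stage 2, goal setting: express the desired outcome as a success criterion."""
    def meets_goal(option: Option) -> bool:
        return option.gives_printed_copy and option.hours_needed <= problem.hours_available
    return meets_goal


def generate_solutions(problem: Problem) -> List[Option]:
    """Stage 3, solution generation: here we simply enumerate every known option."""
    return list(problem.options)


def evaluate_solutions(candidates: List[Option],
                       meets_goal: Callable[[Option], bool]) -> Optional[Option]:
    """Stage 4, solution evaluation: keep options that meet the goal, then prefer the cheapest."""
    feasible = [c for c in candidates if meets_goal(c)]
    if not feasible:
        return None  # no candidate satisfies the success criterion
    return min(feasible, key=lambda c: c.cost)


def solve(problem: Problem) -> Optional[Option]:
    """Run the four stages in order."""
    meets_goal = set_goal(problem)
    candidates = generate_solutions(problem)
    return evaluate_solutions(candidates, meets_goal)


# Hypothetical scenario: a printed report is needed within 3 hours and the usual printer is broken.
report = Problem(
    options=[
        Option("repair own printer", hours_needed=6, cost=0, gives_printed_copy=True),
        Option("print at a copy shop", hours_needed=1, cost=5, gives_printed_copy=True),
        Option("email the file instead", hours_needed=0.1, cost=0, gives_printed_copy=False),
    ],
    hours_available=3,
)
best = solve(report)
print(best.name)  # print at a copy shop
```

The cheapest option fails the goal (no printed copy), and the free repair takes too long, so evaluation against the criteria set in stage 2 is what selects the copy shop. Real problem solving is rarely this linear: because the stages are interrelated, a failed evaluation often sends a solver back to re-represent the problem or generate new options, which this sketch does not model.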

Cognitive and Psychological Aspects of Problem Solving

Problem solving is intricately tied to a range of cognitive processes, each contributing to the effectiveness of the problem-solving endeavor.

- Perception: Perception serves as the initial gateway in problem solving. It involves the gathering and interpretation of sensory information from the environment. Effective perception allows individuals to identify relevant cues and patterns within a problem, aiding in problem representation and understanding.

- Memory: Memory is crucial in problem solving as it enables the retrieval of relevant information from past experiences, learned strategies, and knowledge. Working memory, in particular, helps individuals maintain and manipulate information while navigating through the various stages of problem solving.

- Reasoning: Reasoning encompasses logical and critical thinking processes that guide the generation and evaluation of potential solutions. Deductive and inductive reasoning, as well as analogical reasoning, play vital roles in identifying relationships and formulating hypotheses.

While problem solving is a universal cognitive function, individuals differ in their problem-solving skills due to various factors.

- Intelligence: Intelligence, as measured by IQ or related assessments, is closely associated with problem-solving ability. Higher levels of intelligence are often associated with better problem-solving performance, as individuals with greater cognitive resources can process information more efficiently and effectively.

- Creativity: Creativity is a crucial factor in problem solving, especially in situations that require innovative solutions. Creative individuals tend to approach problems with fresh perspectives, making novel connections and generating unconventional solutions.

- Expertise: Expertise in a specific domain enhances problem-solving abilities within that domain. Experts possess a wealth of knowledge and experience, allowing them to recognize patterns and solutions more readily. However, expertise can sometimes lead to domain-specific biases or difficulties in adapting to new problem types.

Despite the cognitive processes and individual differences that contribute to effective problem solving, individuals often encounter barriers that impede their progress. Recognizing and overcoming these barriers is crucial for successful problem solving.

- Functional Fixedness: Functional fixedness is a cognitive bias that limits problem solving by causing individuals to perceive objects or concepts only in their traditional or “fixed” roles. Overcoming functional fixedness requires the ability to see alternative uses and functions for objects or ideas.

- Confirmation Bias: Confirmation bias is the tendency to seek, interpret, and remember information that confirms preexisting beliefs or hypotheses. This bias can hinder objective evaluation of potential solutions, as individuals may favor information that aligns with their initial perspectives.

- Mental Sets: Mental sets are cognitive frameworks or problem-solving strategies that individuals habitually use. While mental sets can be helpful in certain contexts, they can also limit creativity and flexibility when faced with new problems. Recognizing and breaking out of mental sets is essential for overcoming this barrier.
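Functional fixedness can be pictured as a search that considers too few options. In the toy model below, which is our own illustration rather than a model from the source, each object lists its possible uses with its conventional use first; a "fixed" solver considers only that first use, while a flexible solver considers every listed use. The objects, their uses, and the `candidate_objects` function are hypothetical.

```python
from typing import Dict, List

# Hypothetical objects; the first listed use is each object's conventional ("fixed") use.
OBJECTS: Dict[str, List[str]] = {
    "stapler": ["fasten paper", "paperweight"],
    "coffee mug": ["hold a drink", "pen holder", "paperweight"],
    "box of tacks": ["store tacks", "shelf"],
}


def candidate_objects(needed_use: str, objects: Dict[str, List[str]], fixed: bool) -> List[str]:
    """Return the objects a solver would consider for the needed use.

    fixed=True models functional fixedness: only the conventional use (first entry) counts.
    fixed=False models a flexible solver that considers every listed use.
    """
    matches = []
    for name, uses in objects.items():
        considered = uses[:1] if fixed else uses
        if needed_use in considered:
            matches.append(name)
    return matches


print(candidate_objects("shelf", OBJECTS, fixed=True))        # []
print(candidate_objects("shelf", OBJECTS, fixed=False))       # ['box of tacks']
print(candidate_objects("paperweight", OBJECTS, fixed=False)) # ['stapler', 'coffee mug']
```

The fixed solver finds nothing that can serve as a shelf, even though a suitable object is on hand. Applying the same restriction to strategies rather than object uses (always trying only the habitual approach) is one way to picture a mental set.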

Understanding these cognitive processes, individual differences, and common obstacles provides valuable insights into the intricacies of problem solving and offers a foundation for improving problem-solving skills and strategies in both educational and practical settings.

Problem Solving in Educational Settings

Problem solving holds a central position in educational psychology, as it is a fundamental skill that empowers students to navigate the complexities of the learning process and prepares them for real-world challenges. It goes beyond rote memorization and standardized testing, allowing students to apply critical thinking, creativity, and analytical skills to authentic problems. Problem-solving tasks in educational settings range from solving mathematical equations to tackling complex issues in subjects like science, history, and literature. These tasks not only bolster subject-specific knowledge but also cultivate transferable skills that extend beyond the classroom.

Problem-solving skills offer numerous advantages to both educators and students. For teachers, integrating problem-solving tasks into the curriculum allows for more engaging and dynamic instruction, fostering a deeper understanding of the subject matter. Additionally, it provides educators with insights into students’ thought processes and areas where additional support may be needed. Students, on the other hand, benefit from the development of critical thinking, analytical reasoning, and creativity. These skills are transferable to various life situations, enhancing students’ abilities to solve complex real-world problems and adapt to a rapidly changing society.

Teaching problem-solving skills is a dynamic process that requires effective pedagogical approaches. In K-12 education, educators often use methods such as the problem-based learning (PBL) approach, where students work on open-ended, real-world problems, fostering self-directed learning and collaboration. Higher education institutions, on the other hand, employ strategies like case-based learning, simulations, and design thinking to promote problem solving within specialized disciplines. Additionally, educators use scaffolding techniques to provide support and guidance as students develop their problem-solving abilities. In both K-12 and higher education, a key component is metacognition, which helps students become aware of their thought processes and adapt their problem-solving strategies as needed.