The ultimate AI assistant for grading assignments.

Grade assignments 10x faster and provide personalized writing feedback. GradeWrite streamlines the grading process with bulk grading, auto-checkers, AI re-grades, custom rubrics, and much more.

How It Works

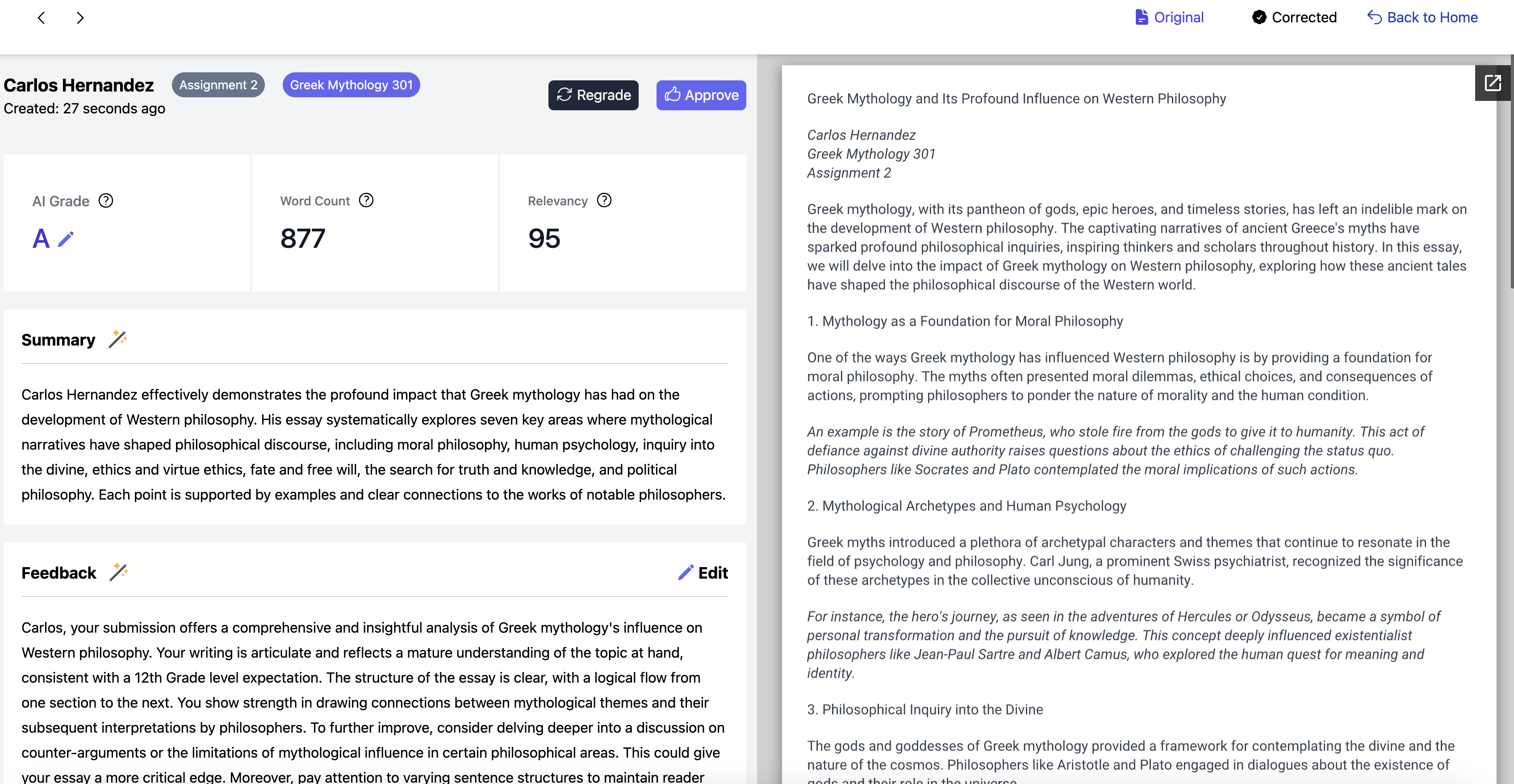

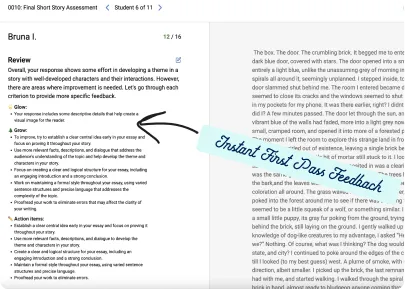

Seamless, Interactive Grading with AI

Use the AI grading co-pilot to streamline your grading with AI-powered precision and ease, helping you grade 10x faster.

Features and Benefits

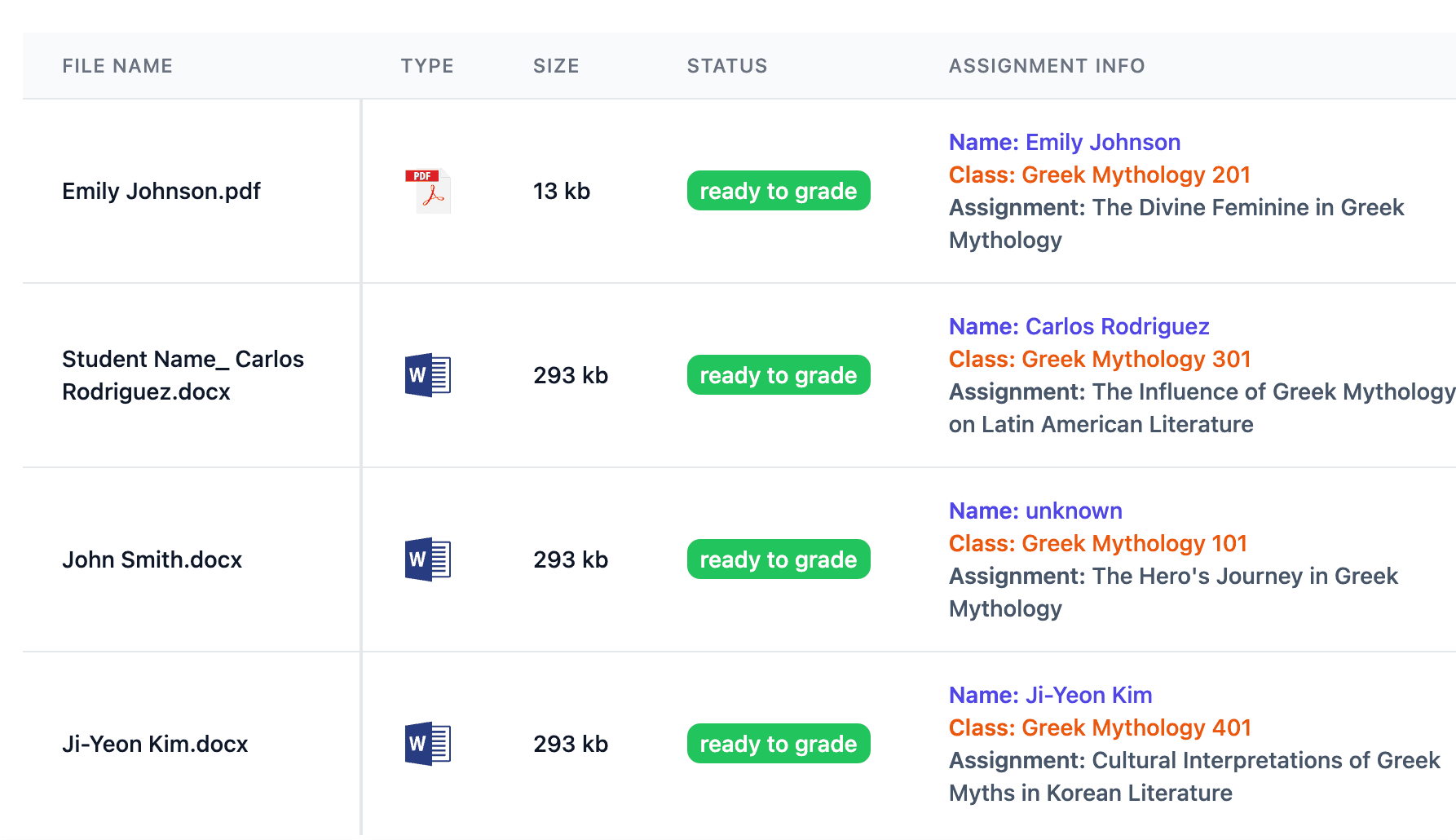

Bulk uploads and a 5000+ word limit: grade 10x faster with streamlined grading.

GradeWrite AI's bulk upload feature allows you to upload multiple files at once, saving you time and effort. Our system also supports files up to 3000 words, allowing you to grade longer assignments with ease. Additionally, our side-by-side grading feature allows you to view the original submission and the AI-generated feedback simultaneously, ensuring a seamless grading experience.

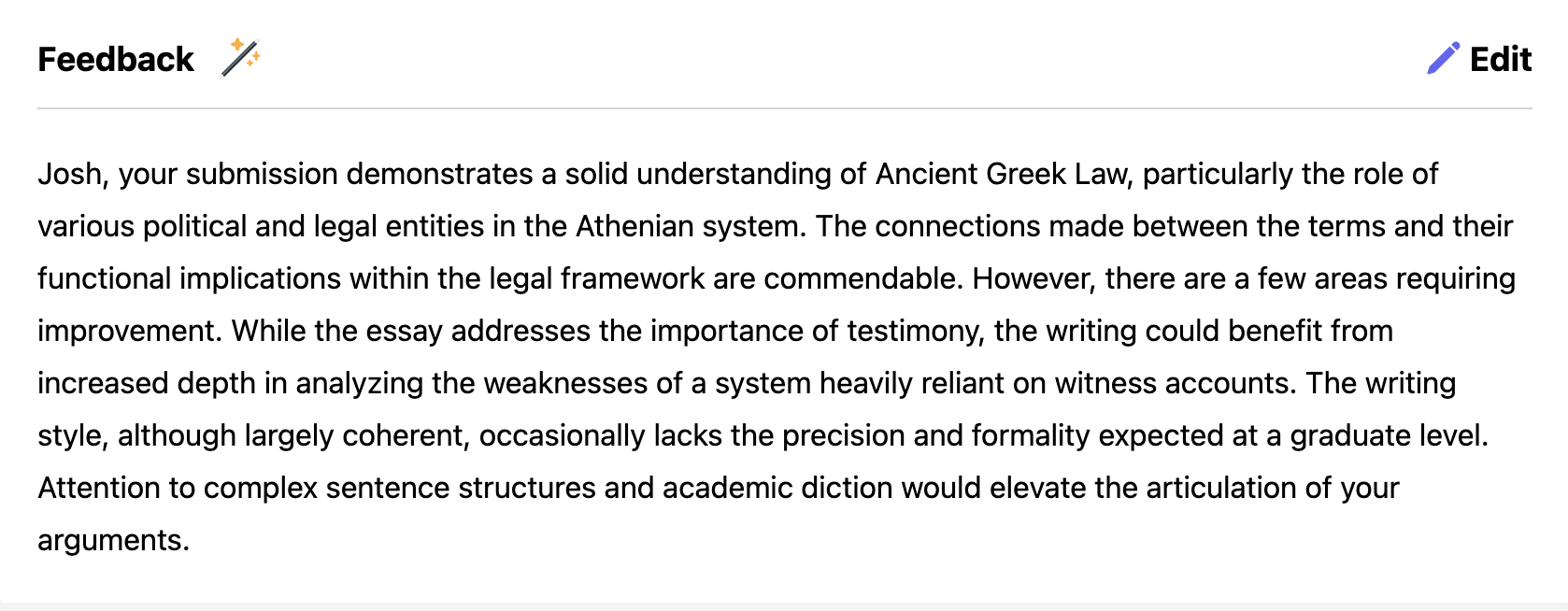

AI enhanced, custom writing feedback

Iterate on feedback with AI-generated suggestions.

Use GradeWrite.AI’s AI-generated feedback to quickly generate detailed, constructive feedback. You can iterate on this feedback by editing it or regenerating the AI feedback. This feature not only saves you time but also helps students improve their writing skills. Our AI-generated feedback is designed to enhance your feedback, not replace it.

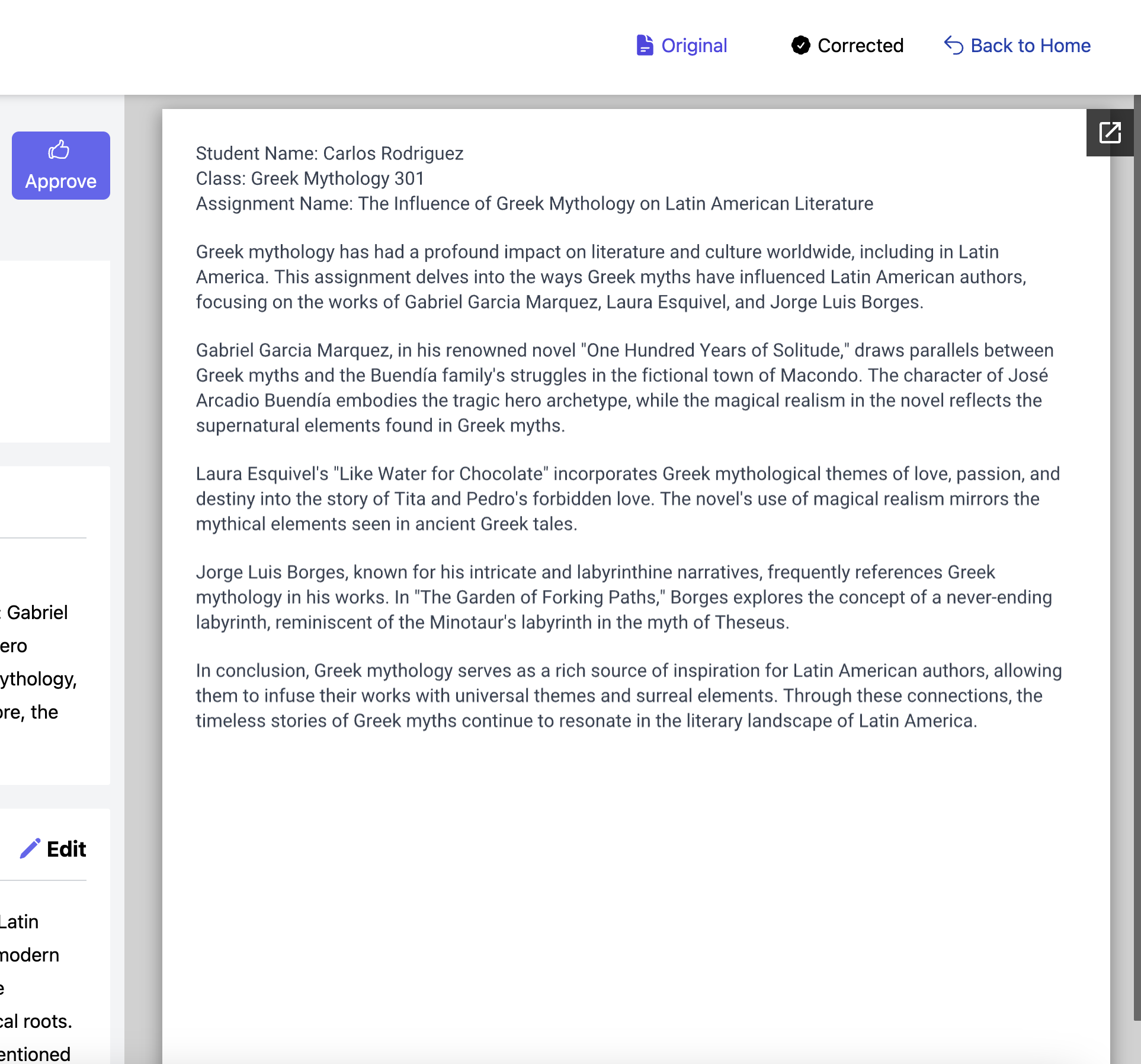

Side by side view of original submission and AI-generated feedback

Easily see the original submission and the AI-generated feedback.

GradeWrite.AI’s side-by-side view allows you to easily compare the original submission and the AI-generated feedback. This feature ensures that you don’t miss any important details, while saving you time and effort.

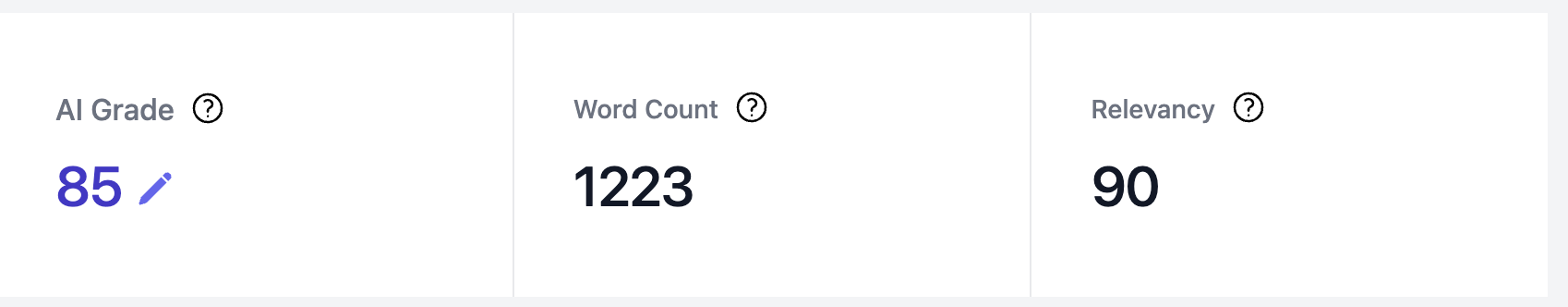

Auto-checkers for word count, AI detection, and rubric adherence

Flag issues early with auto-checkers.

GradeWrite.AI’s auto-checkers ensure that every submission meets your standards. The system checks word count, AI detection, and rubric adherence, flagging any issues early in the grading process so you can focus on the most important aspects of grading.
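A word-count check like the one described is simple to sketch. The function below is a hypothetical illustration, not GradeWrite.AI's implementation. The `min_words`/`max_words` limits are assumed parameters, and words are counted naively by splitting on whitespace.

```python
def check_word_count(text, min_words, max_words):
    """Flag a submission whose word count falls outside [min_words, max_words].

    Words are counted by splitting on whitespace (an assumption; real
    checkers may treat hyphens, numbers, or citations differently).
    """
    count = len(text.split())
    if count < min_words:
        status = "too_short"
    elif count > max_words:
        status = "too_long"
    else:
        status = "ok"
    return {"word_count": count, "status": status}


# Hypothetical submissions and limits.
submissions = {
    "student_a": "The French Revolution began in 1789.",
    "student_b": "Short.",
}
for student, text in submissions.items():
    print(student, check_word_count(text, min_words=3, max_words=500))
```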

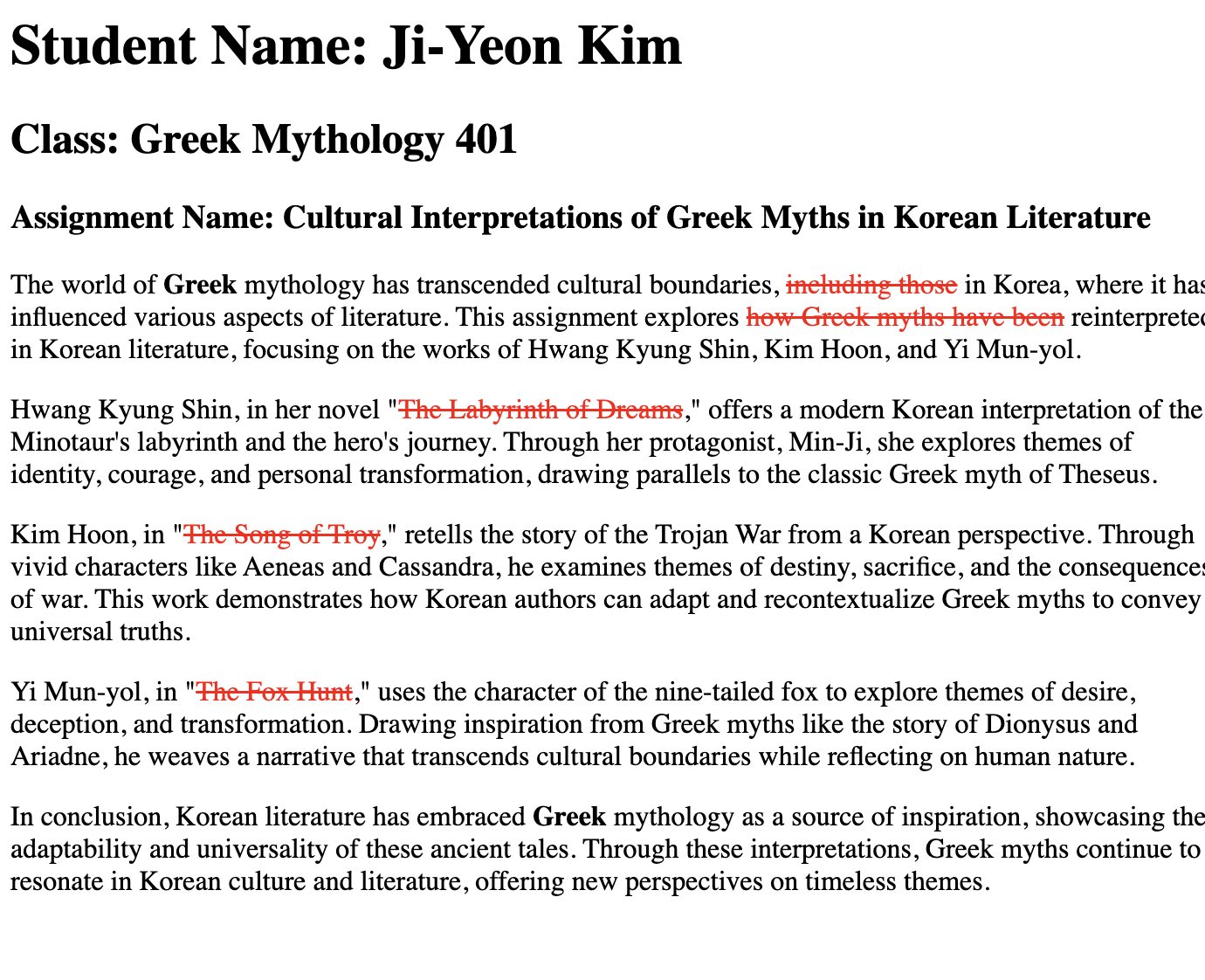

Auto-summaries of assignments for quick review

Get a quick overview of assignments.

GradeWrite.AI’s auto-summaries provide a quick overview of each assignment, so you can review submissions faster without missing important details.

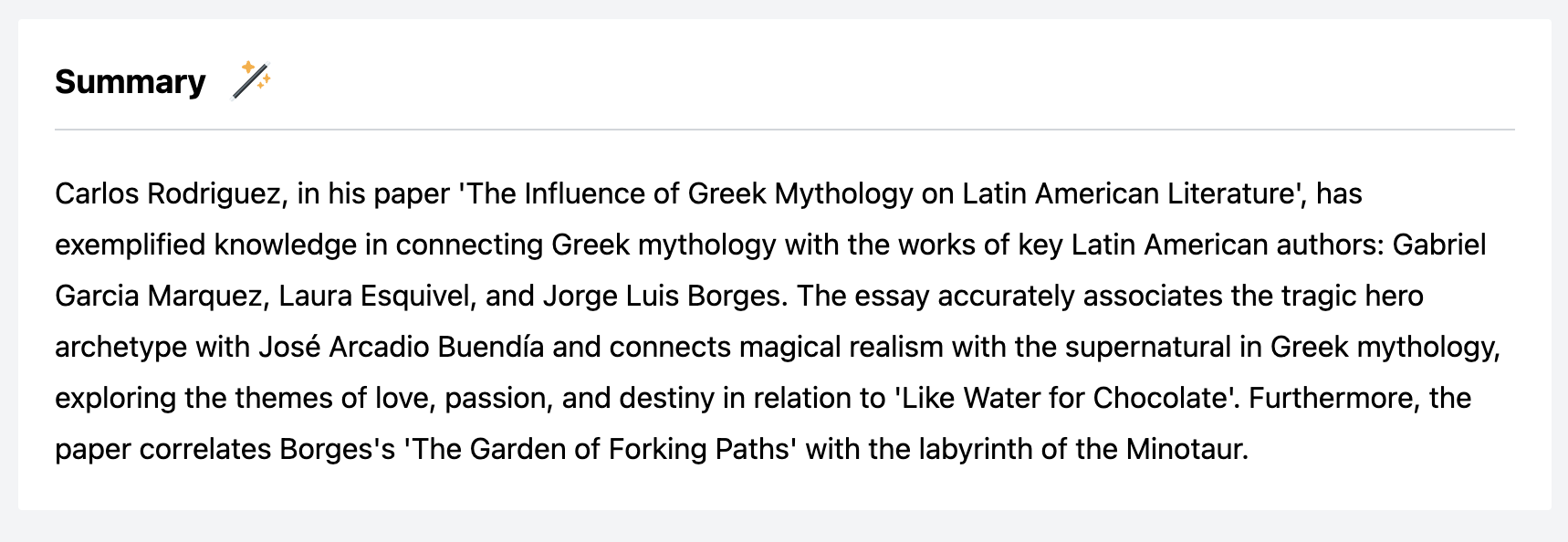

Automated grammar and spelling checks (beta)

Allow students to submit polished work.

Say goodbye to manual proofreading with our automated grammar and spelling checks. This tool helps make every submission polished and error-free, saving time and enhancing the quality of student work.

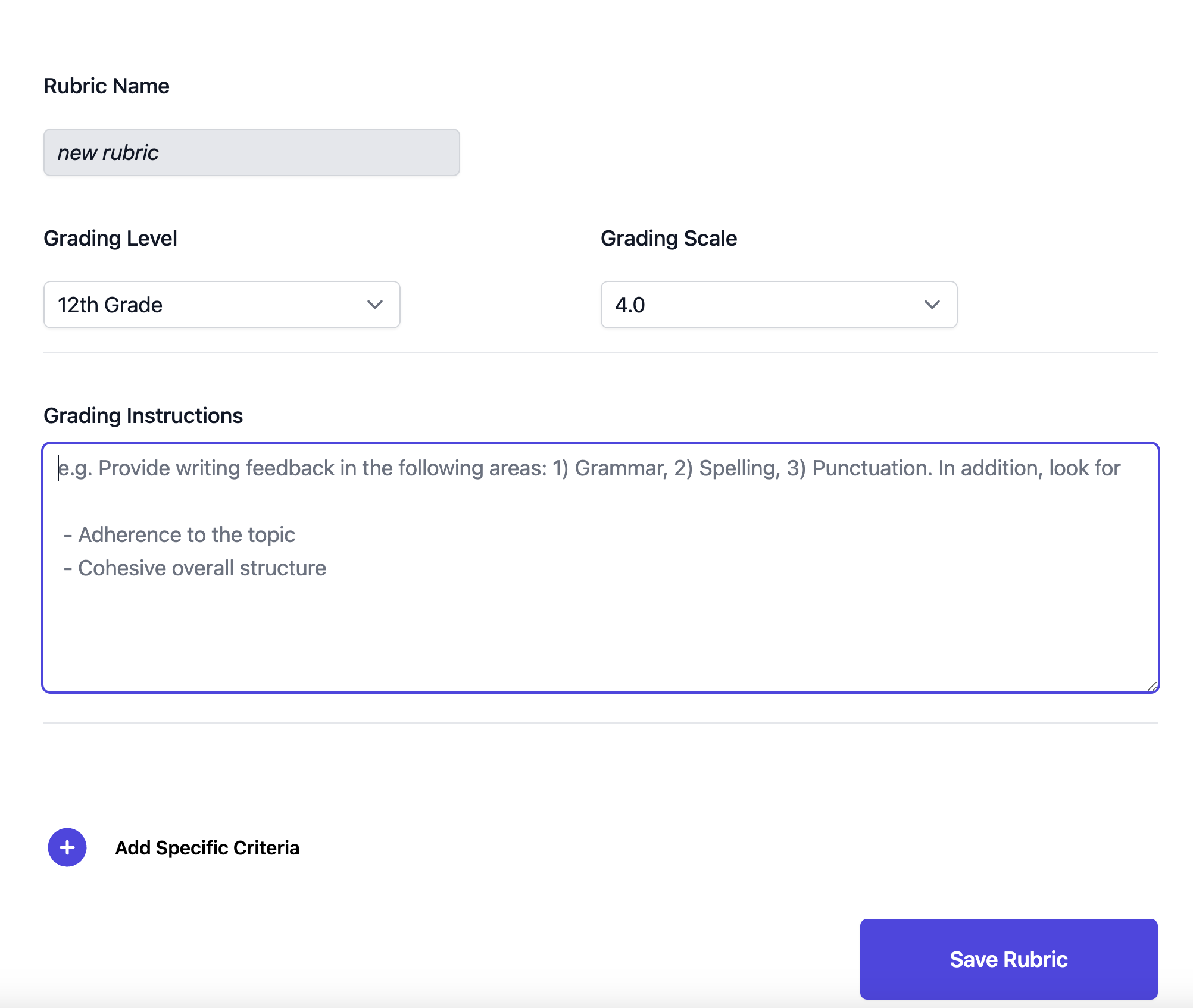

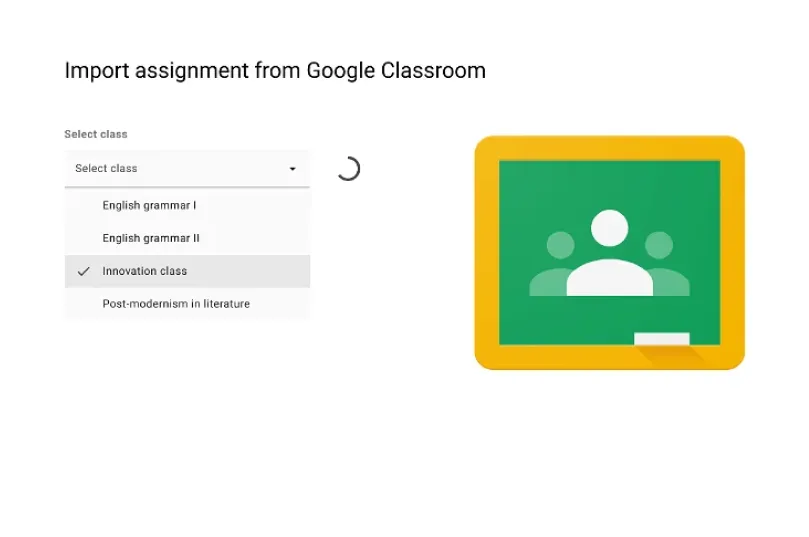

Custom rubrics to teach AI how to grade

Flexible custom rubrics.

Create custom rubrics that align with your specific grading criteria. This feature allows you to tailor the AI’s grading process to match your unique teaching style, ensuring consistent and personalized assessments.

Streamline your grading process today

Grade assignments 10x faster and provide personalized writing feedback with AI. GradeWrite streamlines the grading process with bulk grading, auto-checkers, AI re-grades, custom rubrics, and much more.


AI Essay Grader

CoGrader is the Free AI Essay Grader for Teachers that helps you save 80% of the time grading essays with instant first-pass feedback & grades, based on your rubrics. Grade Narrative, Informative or Argumentative essays using CoGrader. It's free to use for up to 100 essays/month.

Pick your Rubric to Grade Essays with AI

We have 30+ rubrics in our library, but you can also build your own.

Argumentative Essays

Rubrics from 6th to 12th Grade and Higher Education. Grades Claim/Focus, Support/Evidence, Organization and Language/Style.

Informative Essays

Rubrics from 6th to 12th Grade and Higher Education. Grades Clarity/Focus, Development, Organization and Language/Style.

Narrative Essays

Rubrics from 6th to 12th Grade and Higher Education. Grades Plot/Ideas, Development/Elaboration, Organization and Language/Style.

Analytical Essays

Rubrics from 6th to 12th Grade and Higher Education. Grades Claim/Focus, Analysis/Evidence, Organization and Language/Style.

AP Essays, DBQs & LEQs

Grade Essays from AP Classes, including DBQs & LEQs. Grades according to the AP rubrics.

30+ Rubrics Available

You can also build your own rubric/criteria.

Your AI Essay Grading Tool

Grading is a never-ending task that consumes valuable time and energy, often leaving teachers frustrated and overwhelmed.

With CoGrader, grading becomes a breeze. You will have more time for what really matters: teaching, supporting students and providing them with meaningful feedback.

Meet your AI Grader

Leverage Artificial Intelligence (AI) to get First-Pass Feedback on your students' assignments instantaneously, detect ChatGPT usage and see class data analytics.

Save time and Effort

Streamline your grading process and save hours or days.

Ensure fairness and consistency

Reduce human bias in grading with CoGrader's objective and fair grading system.

Provide better feedback

Provide lightning-fast comprehensive feedback to your students, helping them understand their performance better.

Class Analytics

Get an x-ray of your class's performance to spot challenges and strengths, and inform planning.

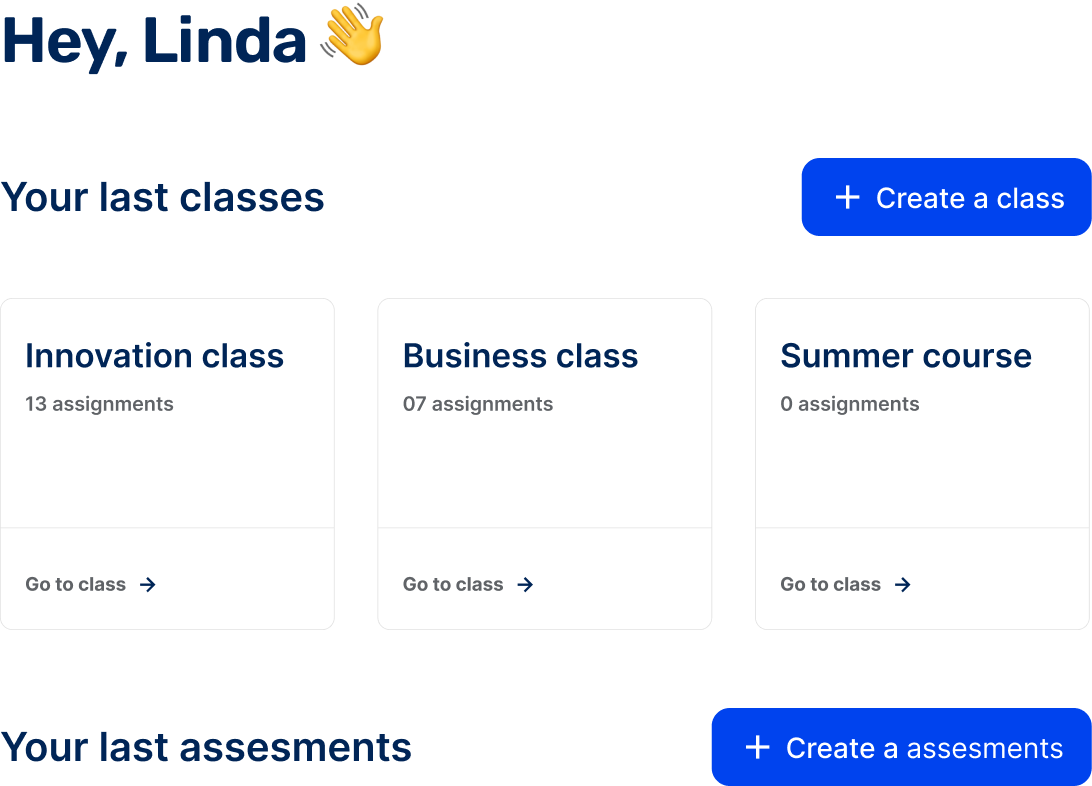

Google Classroom Integration

Import assignments from Google Classroom to CoGrader, and export reviewed feedback and grades back to it.

Canvas and Schoology compatibility

Export your assignments in bulk and upload them to CoGrader with one click.

Used at 1000+ schools

Backed by UC Berkeley

Teachers love CoGrader

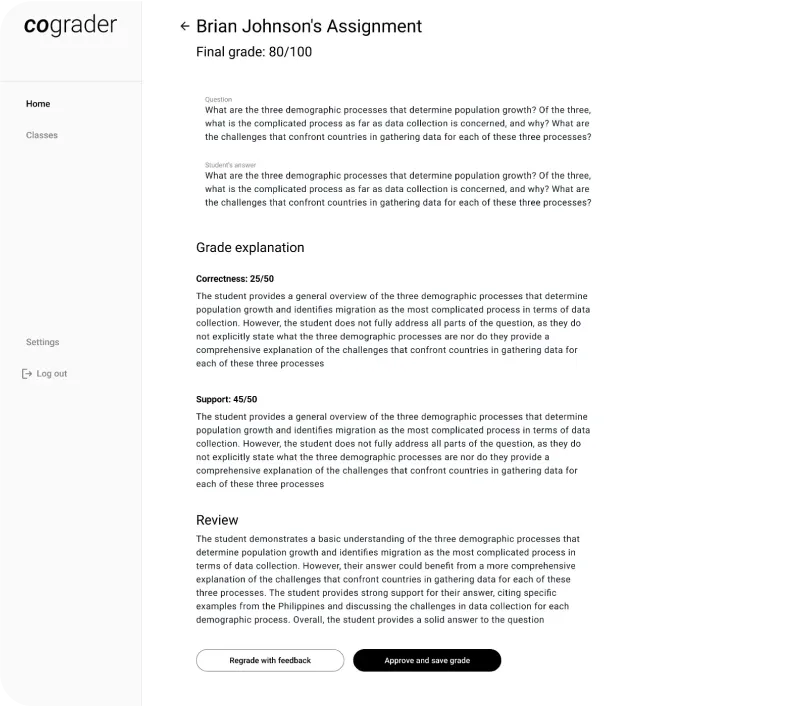

How does CoGrader work?

It's easy to supercharge your grading process

Import Assignments from Google Classroom

CoGrader will automatically import the prompt given to the students and all files they have turned in.

Define Grading Criteria

Use our rubric template, based on your state's standards, or set up your own grading criteria to align with your evaluation standards, specific requirements, and teaching objectives. Built-in rubrics include argumentative, narrative, and informative pieces.

Get Grades, Feedback and Reports

CoGrader generates detailed feedback and justification reports for each student, highlighting areas of improvement together with the grade.

Review and Adjust

The teacher has the final say! Adjust the grades and the feedback so you can make sure every student gets the attention they deserve.

Want to see what education looks like in 2023?


CoGrader: the AI copilot for teachers.

You can think of CoGrader as your teaching assistant, who streamlines grading by drafting initial feedback and grade suggestions, saving you time and hassle and providing top-notch feedback for the kids. You can use standardized rubrics and customize criteria, ensuring that your grading process is fair and consistent. Plus, you can detect whether your students used ChatGPT to answer the assignment.

CoGrader considers the rubric and your grading instructions to automatically grade and suggest feedback using AI. CoGrader currently integrates with Google Classroom and will soon integrate with other LMSs. If you don't use Google Classroom, let your LMS provider know that you are interested so they can speed up the process.

Try it out! We have designed CoGrader to be user-friendly and intuitive. We offer training and support to help you get started. Let us know if you need any help.

Privacy matters to us, and we're committed to protecting student privacy. We are FERPA-compliant. We use student names to match assignments with the right students, but we quickly replace them with a code that keeps the information private, and we discard the original names. We don't keep any other personal information about the students. The only thing we do keep is the text of the students' answers to assignments, because we need it for our grading service. Access to this information is secured through Google’s sign-in, which uses OAuth 2.0, an industry-standard authorization protocol. For a complete understanding of our commitment to privacy and the measures we take to ensure it, we encourage you to read our detailed privacy policy.
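Replacing names with a code is a form of pseudonymization. The sketch below shows one common way to do it: a keyed hash (HMAC-SHA256) maps each name to a stable code, so assignments can still be matched without keeping the name. This is a hypothetical illustration, not CoGrader's actual method. `SECRET_KEY`, `pseudonymize`, and the `student_` prefix are all assumptions.

```python
import hashlib
import hmac
import secrets

# Hypothetical secret key; a real service would load it from secure storage
# and keep it separate from the pseudonymized data.
SECRET_KEY = secrets.token_bytes(32)


def pseudonymize(name, key=SECRET_KEY):
    """Map a student name to a stable code using HMAC-SHA256.

    The same (normalized) name and key always yield the same code, so
    submissions can be matched without storing the name itself.
    """
    normalized = " ".join(name.lower().split())  # case- and spacing-insensitive
    digest = hmac.new(key, normalized.encode("utf-8"), hashlib.sha256).hexdigest()
    return "student_" + digest[:12]  # 48 bits: collisions are negligible for a roster


# Match submissions to codes, then keep only the coded records.
submissions = {"Ada Lovelace": "Essay text...", "Alan Turing": "Essay text..."}
coded = {pseudonymize(name): text for name, text in submissions.items()}
submissions.clear()  # discard the records keyed by real names
print(list(coded))
```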

CoGrader finally allows educators to provide specific and timely feedback. In addition, it saves time and hassle, ensures consistency and accuracy in grading, reduces biases, and promotes academic integrity.

Soon, we'll indicate whether students have used ChatGPT or other AI systems for assignments, but achieving 100% accurate detection is not possible due to the complexity of human and AI-generated writing. Claims to the contrary are misinformation, as they overlook the nuanced nature of modern technology.

CoGrader uses cutting-edge generative AI algorithms that have undergone rigorous testing and human validation to ensure accuracy and consistency. In comparisons to manual grading, CoGrader typically shows only a small difference of up to ~5% in grades, often less than the variance between human graders. Some teachers have noted that this variance can be influenced by personal bias or the workload of grading. While CoGrader works hard to minimize errors and offer reliable results, it is always a good practice to review and validate the grades (and feedback) before submitting them.
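To check a figure like "a difference of up to ~5%" on your own class, you can compare the AI's suggested grades with your final grades. The sketch below assumes both are on a 0-100 scale, so percentage points and percent of the scale coincide. The sample grades are made up for illustration.

```python
def grade_differences(ai_grades, teacher_grades):
    """Return (mean, max) absolute difference in points, assuming a 0-100 scale."""
    if len(ai_grades) != len(teacher_grades) or not ai_grades:
        raise ValueError("need two non-empty lists of equal length")
    diffs = [abs(a - t) for a, t in zip(ai_grades, teacher_grades)]
    return sum(diffs) / len(diffs), max(diffs)


ai = [82, 75, 91, 64]       # hypothetical AI-suggested grades
teacher = [85, 75, 88, 70]  # hypothetical final grades after teacher review
mean_diff, max_diff = grade_differences(ai, teacher)
print(f"mean {mean_diff:.1f} points, max {max_diff} points")
```

Looking at the maximum as well as the mean helps you see whether a small average difference hides a few large disagreements worth reviewing.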

CoGrader is designed to assist educators by streamlining the grading process with AI-driven suggestions. However, the final feedback and grades remain the responsibility of the educator. While CoGrader aims for accuracy and fairness, it should be used as an aid, not a replacement, for professional judgment. Educators should review and validate the grades and feedback before finalizing results. The use of CoGrader constitutes acceptance of these terms, and we expressly disclaim any liability for errors or inconsistencies. The final grading decision always rests with the educator.

Just try it out! We'll guide you along the way. If you have any questions, we're here to help. Once you're in, you'll save countless hours, spend less time procrastinating, and make grading efficient, fair, and helpful.

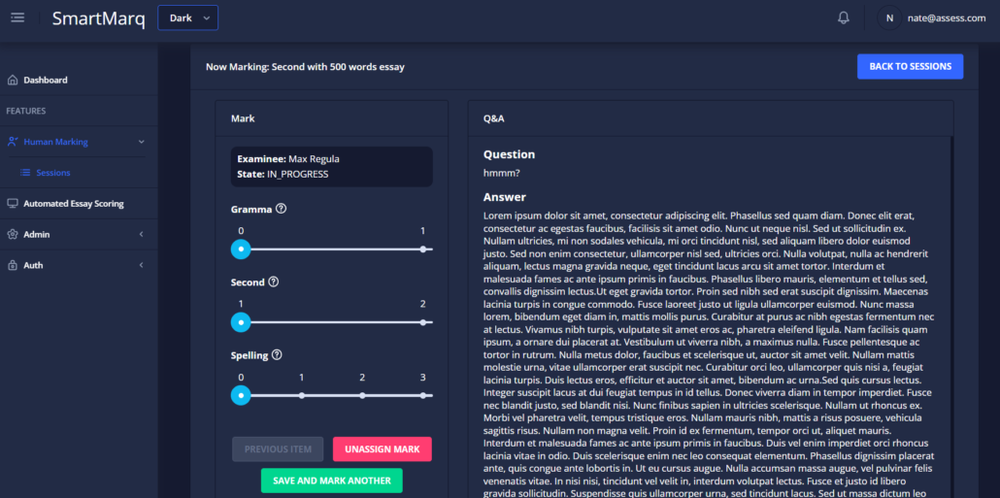

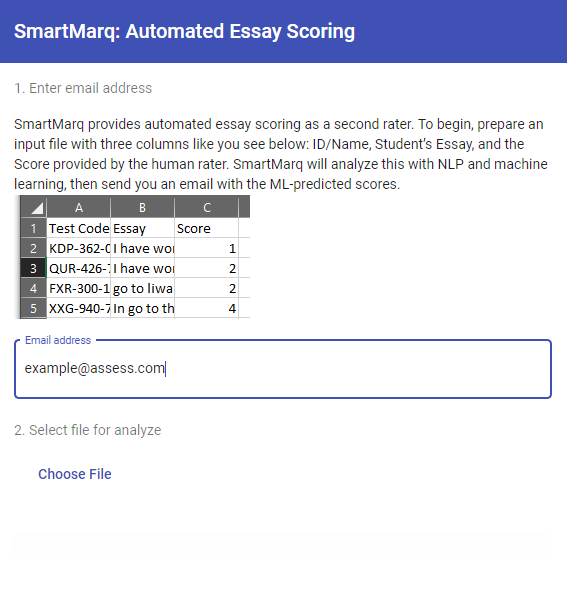

SmartMarq: Human and AI Essay marking

Easily manage the essay marking process, then use modern machine learning models to serve as a second or third rater, reducing costs and timelines.

Define Rubrics

Create your scoring rubrics and performance descriptors

Manage Raters

Assign essays to be scored, then view results

Gather Ratings

Raters can easily move through and leave scores and comments

Auto-Scoring

Implement automated essay scoring to flag unusual scores

SmartMarq will streamline your essay marking process

SmartMarq makes it easy to implement large-scale, professional essay scoring.

- Reduce timelines for marking

- Increase convenience by managing fully online

- Implement business rules to ensure quality

- Once raters are done, run the results through our AI to train a custom machine learning model for your data, obtaining a second “rater.”

Note that our powerful AI scoring is customized, specific to each one of your prompts and rubrics – not developed with a shotgun approach based on general heuristics.

Fully integrated into our FastTest ecosystem

We pride ourselves on providing a single ecosystem with configurable modules that covers the entire test development and delivery cycle. SmartMarq is available both standalone and as part of our online delivery platform. If you have open-response items, especially extended constructed response (ECR) items, our platforms will improve the process of marking them. Use our user-friendly, highly scalable online marking module to manage hundreds of raters (or just a few), in single- or multi-marking situations.

“FastTest reduced the workhours needed to mark our student essays by approximately 60%, cutting it from a multi-day district-wide project to a single day!”

A K-12 FastTest Client

Manage Users

Upload users and manage assignments to groups of students

Create Rubrics

Create your rubrics, including point values and descriptors

Tag Rubrics to Items

When authoring items, simply assign the rubrics you want to use

Set Marking Rules

Require multiple markers, adjudication of disagreements, and visibility limitations? Users can be specified to see only THEIR students, or have the entire population anonymized and randomized. Configure as you need.
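The marking rules above (multiple markers, adjudication of disagreements, randomized assignment) can be sketched as follows. This is a hypothetical illustration, not SmartMarq's implementation. The two-marker design, the 1-point disagreement threshold, and the averaging rule are all assumptions.

```python
import random


def assign_markers(response_ids, markers, per_response=2, seed=None):
    """Randomly assign each (anonymized) response to `per_response` distinct markers."""
    rng = random.Random(seed)
    return {rid: rng.sample(markers, per_response) for rid in response_ids}


def resolve(scores, max_gap=1):
    """Average the markers' scores, or return None to route the response to adjudication."""
    if max(scores) - min(scores) > max_gap:
        return None  # disagreement too large: an admin adjudicates
    return sum(scores) / len(scores)


assignments = assign_markers(["r1", "r2", "r3"], ["m1", "m2", "m3", "m4"], seed=7)
marks = {"r1": [4, 4], "r2": [3, 4], "r3": [1, 4]}  # hypothetical scores from two markers
for rid, scores in marks.items():
    result = resolve(scores)
    print(rid, assignments[rid], "adjudicate" if result is None else result)
```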

Deliver tests online

Students write their essays or other ECR responses

Users mark responses

Users (e.g., teachers) log in and mark student responses on your specified rubrics, as well as flag responses or leave comments. Admins can adjudicate any disagreements.

Score examinees

Examinees will be automatically scored. For example, if your test has 40 multiple-choice items and an essay with two 5-point rubrics, the maximum total score is 50. We also support the generalized partial credit model from item response theory, as well as exporting results for analysis in other software such as FACETS.
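The arithmetic in this example is a raw-score sum: 40 one-point items plus two rubrics worth up to 5 points each gives a maximum of 40 + 2 × 5 = 50. A minimal sketch, assuming each multiple-choice item is worth one point. The function name and defaults are illustrative only.

```python
def raw_total(mc_correct, rubric_scores, rubric_max=5, n_mc=40):
    """Sum one point per correct MC item plus each rubric score; return (score, maximum)."""
    if not 0 <= mc_correct <= n_mc:
        raise ValueError("mc_correct out of range")
    if any(not 0 <= s <= rubric_max for s in rubric_scores):
        raise ValueError("rubric score out of range")
    maximum = n_mc + rubric_max * len(rubric_scores)
    return mc_correct + sum(rubric_scores), maximum


print(raw_total(mc_correct=33, rubric_scores=[4, 3]))  # (40, 50)
```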

Sign up for a SmartMarq account

Simply upload your student essays and human marking results, and our AI essay scoring system will provide an additional set of marks.

Need a complete platform to manage the entire assessment cycle, from item banking to online delivery to scoring? FastTest provides the ideal solution. It includes an integrated version of SmartMarq with advanced options like scoring rubrics with the Generalized Partial Credit Model.
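For reference, under the generalized partial credit model (GPCM), consider an examinee with ability θ and a rubric with discrimination a and step difficulties b_1, ..., b_m. The probability of earning category k (0 to m) is exp(Σ_{v=1..k} a(θ − b_v)) divided by Σ_{c=0..m} exp(Σ_{v=1..c} a(θ − b_v)), where the empty sum for category 0 equals 0.

The sketch below computes these probabilities. The parameter values are made up; operational software estimates them from response data.

```python
import math


def gpcm_probs(theta, a, steps):
    """Category probabilities P(X = 0..m | theta) under the generalized partial credit model.

    a: item discrimination; steps: step difficulties b_1..b_m.
    """
    # Cumulative sums of a * (theta - b_v); category 0 has an empty sum of 0.
    logits = [0.0]
    for b in steps:
        logits.append(logits[-1] + a * (theta - b))
    # Subtract the max before exponentiating for numerical stability.
    top = max(logits)
    weights = [math.exp(z - top) for z in logits]
    total = sum(weights)
    return [w / total for w in weights]


# Hypothetical 5-point rubric (categories 0-5) with made-up parameters.
probs = gpcm_probs(theta=0.5, a=1.2, steps=[-1.5, -0.5, 0.0, 0.8, 1.6])
expected_score = sum(k * p for k, p in enumerate(probs))
print([round(p, 3) for p in probs], round(expected_score, 2))
```

The expected score (the probability-weighted category) is one way to summarize the model's prediction for that rubric at a given ability level.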

Chat with the experts

Ready to make your assessments more effective?


What Is an Automated Essay Scoring System & 7 Best Platforms to Use

Are you a teacher drowning in a sea of ungraded essays? Confronting piles of ungraded essays can be challenging and time-consuming. But what if I told you there's a solution? What if I told you there are tools that can help teachers save hours grading essays using AI? In this blog, we will dive into the world of automated essay scoring, revealing how it can help educators like you streamline and speed up the grading process, leaving you more time to focus on teaching. Looking for a way to save time grading essays? Consider EssayGrader's AI essay grader. It's a valuable tool that can help you save time grading essays using AI.

What Is Automated Essay Scoring?

Automated essay scoring (AES) is a computer-based assessment system that automatically scores or grades student responses based on relevant features. AES research began in 1966 with Ellis Page's Project Essay Grader (PEG) (Ajay et al., 1973).

Automated Essay Scoring (AES) - PEG System

To grade an essay, PEG evaluates writing characteristics such as grammar, diction, and construction. A modified version of PEG was later released (Shermis et al., 2001) that focused on grammar checking and was evaluated by its correlation with human raters. Systems developed from 2014 onward, such as Dong et al. (2017), used deep learning techniques.

Automated Essay Scoring (AES) - IEA System

- Foltz et al. (1999) introduced the Intelligent Essay Assessor (IEA), which evaluates content using latent semantic analysis (LSA) to produce an overall score.

- E-rater was described by Powers et al. (2002), and IntelliMetric by Rudner et al. (2006).

- The Bayesian Essay Test Scoring System (BETSY) was developed by Rudner and Liang (2002).
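To make the LSA idea behind the IEA concrete, the process works in three steps. First, build a term-document matrix. Second, reduce it with a truncated singular value decomposition (SVD). Third, compare texts by cosine similarity in the reduced "concept" space.

The toy sketch below uses raw term counts and NumPy. Real systems train on large corpora and apply term weighting (e.g. TF-IDF or log-entropy). This is an illustration of the technique, not the IEA's actual implementation.

```python
import numpy as np


def lsa_similarities(reference_texts, essay, k=2):
    """Cosine similarity between an essay and each reference text in a k-dim LSA space."""
    docs = reference_texts + [essay]
    vocab = sorted({w for d in docs for w in d.lower().split()})
    index = {w: i for i, w in enumerate(vocab)}
    # Term-document count matrix: rows are terms, columns are documents.
    X = np.zeros((len(vocab), len(docs)))
    for j, d in enumerate(docs):
        for w in d.lower().split():
            X[index[w], j] += 1
    # Truncated SVD: keep the top-k singular values/vectors as latent concepts.
    _, s, vt = np.linalg.svd(X, full_matrices=False)
    doc_vecs = (np.diag(s[:k]) @ vt[:k]).T  # one k-dim vector per document
    essay_vec = doc_vecs[-1]
    sims = []
    for v in doc_vecs[:-1]:
        denom = np.linalg.norm(v) * np.linalg.norm(essay_vec)
        sims.append(float(v @ essay_vec / denom) if denom else 0.0)
    return sims


refs = [
    "napoleon was crowned emperor of france",
    "photosynthesis converts light into chemical energy",
]
print(lsa_similarities(refs, "napoleon became emperor after the revolution"))
```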

Automated Essay Scoring (AES) - Evolution

Most essay scoring systems in the 1990s followed traditional approaches such as pattern matching and statistical methods. Over the past decade, however, they have increasingly used regression-based and natural language processing techniques.

Automated Essay Scoring (AES) - Importance

AES systems are used by educational institutions, testing organizations, and online learning platforms. AES systems are designed to replicate the grading process carried out by human raters.


5 Benefits of AI Powered Automated Essay Scoring

1. Efficiency And Timeliness

AI-powered assessments are highly efficient and timely. They can process and grade assignments in minutes, whereas a human grader might take hours. This speed enhances efficiency and provides students with prompt feedback crucial for learning.

2. Reducing Bias

Unlike human graders, AI applies the same predefined criteria to every assignment, so it is not swayed by factors like a student's gender or ethnicity or a grader's personal preferences. Models can still inherit biases from their training data, however, so their output should be monitored. Used this way, AI supports fairer assessments and helps create a more inclusive learning environment.

3. Adaptive Learning And Personalized Assessments

Adaptive learning and personalized assessments powered by AI represent a dynamic educational shift. These technologies tailor the learning experience to individual students, maximizing their understanding and performance.

Learning Paces for Individual Progress

Adaptive learning systems continuously assess students' progress and adjust the content and pace accordingly. For example, suppose a student excels in one area but struggles in another. In that case, the system can provide additional support in the challenging subject while allowing faster progress in areas of strength. This personalization ensures that students get the right level of challenge and support, preventing boredom or frustration.

Tailored Assessments to Enhance Student Engagement

Personalized assessments analyze a student's learning history and preferences to design assessments that cater to their strengths and interests. For instance, a student passionate about history might have a history-themed project instead of a generic assignment. This approach increases engagement and provides a more accurate measurement of a student's abilities.

4. Tailoring Assessments To Individual Learners

AI-powered tools for eLearning analyze a student's learning patterns and customize assessments accordingly. This adaptability ensures that each learner is challenged at their own pace, promoting a more personalized and effective learning experience.

5. Continuous Feedback Loops

AI provides continuous feedback to learners beyond grading. By identifying strengths and weaknesses, these tools empower students to focus on areas needing improvement. This real-time feedback loop fosters a culture of ongoing learning and improvement.

Revolutionizing Essay Grading Efficiency with AI

EssayGrader is the most accurate AI grading platform trusted by 30,000+ educators worldwide. On average, it takes a teacher 10 minutes to grade a single essay; with EssayGrader, that time is cut down to 30 seconds. That's a 95% reduction in the time it takes to grade an essay, with the same results. With EssayGrader, teachers can:

- Replicate their grading rubrics (so AI doesn't have to do the guesswork to set the grading criteria)

- Set up fully custom rubrics

- Grade essays by class

- Bulk upload essays

- Use our AI detector to catch essays written by AI

- Summarize essays with our Essay summarizer

Primary school teachers, high school teachers, and even college professors grade their students' essays with the help of our AI tool. Over half a million essays have been graded by 30,000+ teachers on our platform. Save 95% of your grading time with our tool and get high-quality, specific, and accurate writing feedback for essays in seconds. Get started for free today!

How Does Automated Essay Scoring Work?

The first and most critical thing to know is that there is no algorithm that “reads” student essays. Instead, you need to train an algorithm. If you are a teacher and don’t want to grade your essays, you can’t just throw them into an essay scoring system. You have to grade the essays (or at least a large sample) and then use that data to fit a machine-learning algorithm. Data scientists call this training the model, which sounds complicated, but if you have ever done simple linear regression, you have experience training models.

There are three steps for automated essay scoring:

1. Establish your data set (collate student essays and grade them).

2. Determine the features (predictor variables that you want to pick up on).

3. Train the machine learning model.

Here’s an extremely oversimplified example:

- You have a set of 100 student essays, which you have scored on a scale of 0 to 5 points. The essay is on Napoleon Bonaparte, and you want students to know certain facts, so you want to give them “credit” in the model if they use words like Corsica, Consul, Josephine, Emperor, Waterloo, Austerlitz, and St. Helena. You might also add other features, such as word count or the number of grammar and spelling errors.

- You create a map of which students used each of these words, as 0/1 indicator variables. You can then fit a multiple regression with seven predictor variables (whether they used each of the seven words) and the 0-5 score as your criterion variable. Finally, use this model to predict each student’s score from the essay text alone.
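The Napoleon example can be sketched in a few lines. This is a toy illustration using NumPy's least-squares solver. The graded essays below are made up and far fewer than 100, so the fitted coefficients are only illustrative. The keyword list comes from the example, and the naive substring matching is an assumption.

```python
import numpy as np

# The seven keywords from the Napoleon example.
KEYWORDS = ["corsica", "consul", "josephine", "emperor", "waterloo", "austerlitz", "st. helena"]


def keyword_features(essay):
    """0/1 indicator per keyword (naive case-insensitive substring match)."""
    text = essay.lower()
    return [1.0 if kw in text else 0.0 for kw in KEYWORDS]


def fit(essays, scores):
    """Least-squares multiple regression of scores on keyword indicators plus an intercept."""
    X = np.array([[1.0] + keyword_features(e) for e in essays])
    y = np.array(scores, dtype=float)
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)  # also handles rank-deficient X
    return coef


def predict(coef, essay):
    """Predicted score, clipped to the 0-5 scale."""
    x = np.array([1.0] + keyword_features(essay))
    return float(np.clip(x @ coef, 0.0, 5.0))


# Hypothetical graded essays (a real data set would be much larger).
train = [
    ("Born in Corsica, he became First Consul and later Emperor.", 4),
    ("He married Josephine and won at Austerlitz.", 3),
    ("He lost at Waterloo and died on St. Helena.", 3),
    ("Napoleon was a French leader.", 1),
    ("From Corsica to Emperor, from Austerlitz to Waterloo, then exile on St. Helena.", 5),
    ("He was short.", 0),
    ("Josephine was his first wife; he crowned himself Emperor.", 2),
    ("As Consul he reformed the law before Waterloo.", 3),
]
coef = fit([e for e, _ in train], [s for _, s in train])
print(round(predict(coef, "Born in Corsica, crowned Emperor, defeated at Waterloo."), 2))
```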

How Do Automated Essay Scoring Systems Score Essays?

If the essays are on paper, automated essay scoring won’t work unless you have extremely good optical character recognition (OCR) software to convert them into a digital text database. Most likely, you have delivered the exam as an online assessment and already have the database. If so, your platform should include functionality to manage the scoring process, including multiple custom rubrics.

Some rubrics you might use:

- Supporting arguments

- Organization

- Vocabulary / word choice

How Do You Pick the Features for Automated Essay Scoring Systems?

This is one of the key research problems. In some cases, it might be something similar to the Napoleon example. Suppose you had a complex item on accounting, where examinees review reports and spreadsheets and need to summarize a few key points. You might pull out a few key terms (such as mortgage amortization) or numbers (such as 2.375%) and treat them as features. I saw a presentation at Innovations In Testing 2022 that did precisely this. Think of it as giving students “points” for using those keywords, although, because you are using complex machine learning models, each keyword does not simply add a single point. Instead, it contributes to a regression-like model with a positive slope.
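For the accounting example, features like these might be extracted with regular expressions and then fed to a model. This is a hypothetical sketch. "mortgage amortization" and 2.375% come from the example, while "net present value" is an assumed extra term added for illustration.

```python
import re

# Key terms: the first is from the example; the second is hypothetical.
KEY_TERMS = ["mortgage amortization", "net present value"]
# Matches 2.375% (optionally with a space before %), but not e.g. 12.375%.
KEY_RATE = re.compile(r"(?<![\d.])2\.375\s?%")


def extract_features(response):
    """Return 0/1 indicators for each key term plus a flag for citing the key rate."""
    text = response.lower()
    features = {term: int(term in text) for term in KEY_TERMS}
    features["cites_key_rate"] = int(bool(KEY_RATE.search(text)))
    return features


print(extract_features("The mortgage amortization schedule uses a 2.375% rate."))
```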

Utilizing AI to Identify Key Patterns in Student Writing

In other cases, you might not know which features matter. Maybe it is an item on an English test delivered to English language learners, and you ask them to write about what country they want to visit someday. You have no idea what they will write about. But you can tell the algorithm to find the words or terms used most often and try to predict the scores from those. Maybe words like “jetlag” or “edification” show up in essays by students who tend to get high scores, while words like “clubbing” or “someday” tend to be used by students with lower scores. The AI might also pick up on spelling errors. I worked as an essay scorer in grad school, and I can’t tell you how many times I saw kids use “Ludacris” (the name of an American rap artist) instead of “ludicrous” when trying to describe an argument. They had never seen the word used or spelled correctly. Maybe the AI model gives that a negative weight.
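When you don't know the relevant words in advance, one simple exploratory step is to compute, for each word, the average score of the essays that use it. The hypothetical sketch below does this on made-up data. A real system would use far more essays and a sensible frequency cutoff, and would feed candidate terms into a regularized model rather than rely on raw averages.

```python
from collections import defaultdict


def word_score_means(essays, scores, min_count=2):
    """Average score of essays containing each word (each word counted once per essay)."""
    totals = defaultdict(float)
    counts = defaultdict(int)
    for text, score in zip(essays, scores):
        for word in set(text.lower().split()):
            totals[word] += score
            counts[word] += 1
    # Drop rare words, whose averages are too noisy to be useful.
    return {w: totals[w] / counts[w] for w in totals if counts[w] >= min_count}


essays = [
    "i want to visit japan despite the jetlag",
    "travel brings edification and jetlag",
    "someday i go clubbing in spain",
    "someday i visit paris",
]
scores = [5, 4, 2, 1]  # hypothetical human scores
means = word_score_means(essays, scores)
for word, mean in sorted(means.items(), key=lambda kv: -kv[1]):
    print(f"{word}: {mean:.1f}")
```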

How Do You Train a Model for Automated Essay Scoring Systems?

If you are familiar with data science, you know there are many models, and many of them have numerous parameterization options. This is where more research is required. Which model works best on your particular essay and doesn’t take 5 days to run on your data set? That’s for you to figure out. There is a trade-off between simplicity and accuracy: complex models might be more accurate but take days to run, while a simpler model might take 2 hours with a 5% drop in accuracy. It’s up to you to evaluate. If you have experience with Python or R, you know that many packages provide this analysis out of the box; it is a matter of selecting a model that works.

How Can You Implement Automated Essay Scoring Without Writing Code from Scratch?

Several products are on the market. Some are standalone, and some are integrated with a human-based essay scoring platform. More on that later!

How Accurate Are Automated Essay Scoring Systems Compared to Human Graders?

Automated essay scoring (AES) systems are a subject of ongoing debate regarding their accuracy and reliability compared to human graders. Research studies have compared AES scores with human scores, analyzing their correlations and discrepancies. The consensus among psychometricians is that AES algorithms perform as well as a second human grader, which makes them very effective in that role. It is not recommended, and often not possible, to rely solely on AES scores.
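Agreement between AES and human scores is commonly summarized with quadratic weighted kappa (QWK). QWK compares observed disagreements with those expected by chance and weights each disagreement by the squared distance between score points. A minimal standard-library sketch for integer scores on a known scale follows; the sample scores are made up.

```python
def quadratic_weighted_kappa(rater_a, rater_b, min_score, max_score):
    """Quadratic weighted kappa for two equal-length lists of integer scores."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("need two non-empty lists of equal length")
    if max_score <= min_score:
        raise ValueError("max_score must exceed min_score")
    n = max_score - min_score + 1
    # Observed agreement matrix: rows are rater A's scores, columns rater B's.
    observed = [[0] * n for _ in range(n)]
    for a, b in zip(rater_a, rater_b):
        observed[a - min_score][b - min_score] += 1
    total = len(rater_a)
    hist_a = [sum(row) for row in observed]
    hist_b = [sum(observed[i][j] for i in range(n)) for j in range(n)]
    num = den = 0.0
    for i in range(n):
        for j in range(n):
            weight = (i - j) ** 2 / (n - 1) ** 2      # quadratic disagreement weight
            expected = hist_a[i] * hist_b[j] / total  # count expected by chance
            num += weight * observed[i][j]
            den += weight * expected
    if den == 0:
        raise ValueError("kappa is undefined when expected disagreement is zero")
    return 1.0 - num / den


human = [3, 4, 2, 5, 1]    # hypothetical human scores (1-5 scale)
machine = [3, 4, 3, 5, 2]  # hypothetical AES scores
print(quadratic_weighted_kappa(human, machine, 1, 5))
```

If scikit-learn is available, `sklearn.metrics.cohen_kappa_score(y1, y2, weights="quadratic")` computes the same statistic.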

The Appeal of Automated Writing Evaluation

Despite the attractiveness of automated feedback and the numerous writing traits it can assess, using Automated Writing Evaluation (AWE) is a contentious topic. Advocates argue that AWE programs can evaluate and respond to student writing as effectively as humans but in a more cost and time-efficient manner, providing significant advantages. The feedback delivered by AWE programs is designed to support process-writing approaches that emphasize multiple drafting through scaffolding suggestions and explanations, promoting learner autonomy and motivation. Integrating AWE programs into the curriculum aligns with efforts towards personalized assessment and instruction, offering consistent writing evaluation across various disciplines.

Criticisms and Concerns Regarding AWE in Education

In contrast, critics express skepticism about the implications of implementing AWE in classrooms. Concerns have been raised regarding the potential negative effects of AWE on teaching and learning. For instance, there are fears that students may alter their writing to meet AWE assessment criteria, potentially undermining the learning process. It is also suggested that teachers might feel compelled to support such adjustments to improve test scores, which could impact their teaching practices. Some argue that AWE systems may not adequately address the social and communicative aspects of writing, as they are rooted in cognitive information-processing models.

The Need for Contextualized Research on AWE Integration

The debate surrounding the integration of AWE in writing instruction emphasizes the potential impacts of AWE, whether positive or negative. The discussion often overlooks the ecological perspective, which considers phenomena in their context and explores the interactions shaping that context. Empirical evidence supporting the claims made in the debate is limited, with much of the existing research focusing on decontextualized and psychometrically driven assessments of AWE validity. Scholars have called for more contextualized studies to understand how AWE can be integrated effectively, rather than solely determining if AWE is a valid assessment tool.

Evaluating AWE: Beyond Automated and Human Score Comparisons

AWE validity has been traditionally evaluated by comparing automated and human scores, which remains a fundamental aspect of interpreting AWE assessment outcomes. While this comparison is essential, it should not be the sole determinant of AWE effectiveness. Scholars have emphasized the need for studies focusing on the practical implementation of AWE in educational settings, shedding light on the operational aspects of AWE integration and its implications for teaching and learning. Such studies can provide valuable insights into the actual impact of AWE on writing instruction, going beyond theoretical debates to offer empirical evidence supporting or refuting claims about AWE efficacy.


7 Best Automated Essay Scoring Software Platforms

1. EssayGrader

EssayGrader is the most accurate AI grading platform trusted by 30,000+ educators worldwide. On average, a teacher takes 10 minutes to grade a single essay. With EssayGrader, that time is cut down to 30 seconds, representing a 95% reduction in grading time with the same results. You can replicate your grading rubrics, set up fully custom rubrics, grade essays by class, bulk upload essays, use the AI detector, and summarize essays with the Essay summarizer. Over half a million essays have been graded on this platform. It is suitable for grades from primary school to college.

2. SmartMarq

SmartMarq makes it easy to implement large-scale, professional essay scoring. It is available both standalone and as part of an online delivery platform. If you have open-response items, especially extended constructed response items, SmartMarq's user-friendly, highly scalable online marking module can help you manage hundreds of raters.

3. Project Essay Grade (PEG)

This automated grading software uses AI to analyze written prose. PEG can make calculations based on over 300 measurements and provide results similar to those of a human rater. The software uses advanced statistical techniques, natural language processing, and semantic and syntactic analysis for fully automated grading.

4. Essay Marking Software

This automated grading program offers custom rubrics and scoring guidelines. You can establish custom marking rules, manage teams of markers, and simplify the grading procedure. It allows you to log in and score student essays based on rubrics, driving quality assessment and feedback.

5. ETS e-Rater Scoring Engine

This application automates the evaluation of essays using a combination of human raters and AI capabilities. It can generate individualized feedback or batch-process essays. The tool is versatile and can be used in various learning contexts.

6. IntelliMetric

IntelliMetric is an AI-based essay-grading tool that can evaluate responses to writing prompts regardless of the writer's level. It drastically reduces the time needed to evaluate writing without compromising accuracy. The software offers detailed adaptive feedback and features a Legitimacy function to detect irrelevant or inappropriate submissions.

7. Knowledge Analysis Technologies (KAT)

KAT's automated essay-grading software is based on extensive research. It can produce consistent, accurate results and integrate with third-party services. The system uses latent semantic analysis (LSA) to compare semantic similarities between words and the Intelligent Essay Assessor (IEA) to evaluate text meaning, grammar, style, and mechanics.

5 Best Practices for Integrating Automated Essay Scoring Systems

1. Establishing Validity and Reliability

I recommend ensuring that the AES system maintains **high agreement rates** with human raters to establish reliability. You should also evaluate the system's **validity** by assessing potential threats like construct misrepresentation, vulnerability to cheating, and impact on student behavior. In addition, it is vital to validate the AES system using a variety of scenarios beyond just high human-computer agreement, such as off-topic essays, gibberish, and paraphrased answers.
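One way to quantify "agreement with human raters" is sketched below in Python. It computes three measures: exact agreement, adjacent agreement (scores within one point), and quadratic weighted kappa, a statistic commonly reported in AES studies. The function names and the integer score scale are assumptions for illustration. The text does not say which statistic or threshold counts as "high" agreement, so pick thresholds that suit your program.

```python
def _check_lengths(human, machine):
    if len(human) != len(machine) or not human:
        raise ValueError("need two non-empty score lists of equal length")


def exact_agreement(human, machine):
    """Share of essays where the AES score equals the human score."""
    _check_lengths(human, machine)
    return sum(h == m for h, m in zip(human, machine)) / len(human)


def adjacent_agreement(human, machine, tolerance=1):
    """Share of essays where the two scores differ by at most `tolerance` points."""
    _check_lengths(human, machine)
    return sum(abs(h - m) <= tolerance for h, m in zip(human, machine)) / len(human)


def quadratic_weighted_kappa(human, machine, min_score, max_score):
    """Quadratic weighted kappa for integer scores on [min_score, max_score]."""
    _check_lengths(human, machine)
    k = max_score - min_score + 1
    if k < 2:
        raise ValueError("score scale needs at least two points")
    n = len(human)
    # Observed confusion matrix: rows = human score, columns = AES score.
    observed = [[0] * k for _ in range(k)]
    for h, m in zip(human, machine):
        observed[h - min_score][m - min_score] += 1
    hist_h = [sum(row) for row in observed]
    hist_m = [sum(observed[i][j] for i in range(k)) for j in range(k)]
    weighted_observed = 0.0
    weighted_expected = 0.0
    for i in range(k):
        for j in range(k):
            # Disagreements are penalized by the squared distance between scores.
            weight = (i - j) ** 2 / (k - 1) ** 2
            expected = hist_h[i] * hist_m[j] / n  # count expected by chance
            weighted_observed += weight * observed[i][j]
            weighted_expected += weight * expected
    if weighted_expected == 0:
        return 1.0  # both raters gave every essay the same single score
    return 1.0 - weighted_observed / weighted_expected


human = [1, 2, 3, 4, 4]
machine = [1, 2, 4, 4, 3]
print(exact_agreement(human, machine))     # 0.6
print(adjacent_agreement(human, machine))  # 1.0
print(quadratic_weighted_kappa(human, machine, 1, 4))
```

Kappa corrects for agreement expected by chance, which raw agreement rates do not. For that reason it is often reported alongside exact and adjacent agreement rather than in place of them.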

2. Providing Transparency and Explainability

I advise making the AES scoring process transparent to students and teachers. Explaining how the system evaluates essays is crucial to discourage students from trying to game it. Also ensure that the AES system provides meaningful feedback, not just a score.

3. Aligning with Pedagogical Goals

I recommend selecting an AES system that aligns with your specific writing assessment goals and rubrics. It is also essential to customize the system to your prompts and rubrics rather than relying on a generic approach. I suggest using the AES system to complement human grading, not to replace it.

4. Managing the Scoring Process

I encourage establishing clear scoring rubrics and performance descriptors. It also helps to assign essays to raters and manage the scoring workflow efficiently. Use the AES system to flag unusual scores for human review.
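Flagging unusual scores can be automated with simple rules. The Python sketch below is a hypothetical example, not a feature of any specific AES product. It flags an essay in two cases: when the AES score differs from a human score by more than a chosen gap, or when the AES score is a statistical outlier within the batch (a population z-score above a chosen threshold). The `ScoredEssay` type, the thresholds, and the rules themselves are assumptions.

```python
from dataclasses import dataclass
from statistics import mean, pstdev
from typing import List, Optional


@dataclass
class ScoredEssay:
    essay_id: str
    ai_score: float
    human_score: Optional[float] = None  # only some essays get a human read


def flag_for_review(essays: List[ScoredEssay], max_gap: float = 1.0,
                    z_threshold: float = 2.0) -> List[str]:
    """Return ids of essays whose AES score looks unusual under two hypothetical rules."""
    if not essays:
        return []
    scores = [e.ai_score for e in essays]
    mu, sigma = mean(scores), pstdev(scores)
    flagged = []
    for e in essays:
        # Rule 1: AES and human scores disagree by more than the allowed gap.
        if e.human_score is not None and abs(e.ai_score - e.human_score) > max_gap:
            flagged.append(e.essay_id)
        # Rule 2: the AES score is far from the batch mean (population z-score).
        elif sigma > 0 and abs(e.ai_score - mu) / sigma > z_threshold:
            flagged.append(e.essay_id)
    return flagged


batch = [ScoredEssay("a", 3, 3), ScoredEssay("b", 5, 2), ScoredEssay("c", 3)]
print(flag_for_review(batch))  # ['b']
```

The outlier rule only helps with reasonably large batches. With the population standard deviation, the largest possible z-score among n scores is √(n − 1). With five or fewer essays, it can therefore never exceed a threshold of 2, and the human-comparison rule does all the work.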

5. Providing Training and Support

I recommend training teachers on how to interpret AES scores and feedback. It is crucial to support students in understanding the AES system and how to improve their writing. Continuously monitor the AES system's performance and make adjustments as needed.

Save Time While Grading Schoolwork — Join 30,000+ Educators Worldwide & Use EssayGrader AI, The Original AI Essay Grader

Let me tell you about EssayGrader. It's a revolutionary AI grading platform transforming how teachers evaluate essays. Imagine this: the average teacher spends about 10 minutes grading a single essay. With EssayGrader, though, that time is slashed to just 30 seconds. That's a 95% reduction in grading time while maintaining the same high-quality results you'd expect from a human grader. It's no wonder more than 30,000 educators worldwide already trust EssayGrader for their grading needs.
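Taking the two times quoted above at face value (10 minutes is 600 seconds), the 95% figure works out as follows:

```latex
\[
\frac{600\,\text{s} - 30\,\text{s}}{600\,\text{s}} = \frac{570}{600} = 0.95 = 95\%
\]
```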

Setting Up Grading Criteria

The genius of EssayGrader lies in how it replicates your grading rubrics. You know those rubrics you painstakingly create to ensure fairness in grading? With our AI tool, you don't have to leave any of it to chance. You set the grading criteria and the elements you look for in an essay, and our AI follows them to a T.

Customized Rubrics

You may be a teacher who wants to go beyond the standard grading rubric. With EssayGrader, you can set up fully customized rubrics. You can tweak the grading criteria to match your exact preferences. This way, you get the feedback you want from essays, every single time.

Scoring by Category

You may be a teacher who needs to grade essays by class or by topic. With EssayGrader, that's not a problem at all. You can grade essays according to specific categories, which makes it easier to track progress and identify areas where students need improvement.
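As a rough illustration of category-level tracking, the Python sketch below averages scores per category and picks the lowest-scoring one. The `(category, score)` input format, the sample class names, and the function names are hypothetical. This is not EssayGrader's API, which the text does not describe.

```python
from collections import defaultdict
from statistics import mean


def average_by_category(graded):
    """Average score per category from (category, score) pairs."""
    buckets = defaultdict(list)
    for category, score in graded:
        buckets[category].append(score)
    return {category: mean(scores) for category, scores in buckets.items()}


def weakest_category(averages):
    """Category with the lowest average score (ties go to the first one seen)."""
    return min(averages, key=averages.get)


graded = [("Period 1", 80), ("Period 1", 90), ("Period 2", 70), ("Period 2", 74)]
averages = average_by_category(graded)
print(averages)
print(weakest_category(averages))  # Period 2
```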

Bulk Uploads

Imagine a scenario where you have a pile of essays to grade in one go. That's no problem with EssayGrader. Simply use the bulk upload feature to upload multiple essays at once, saving you time and effort. It's efficient, it's quick, and it's hassle-free.

AI Detection

Now, here's a nifty feature: our AI detector. It's designed to catch AI-generated essays, helping you confirm that the work is authentic and original. You can grade with more confidence that the essays in front of you are the real deal: student work that deserves your attention.

Essay Summarizer

Finally, there's our essay summarizer. With this tool, you can quickly summarize essays, giving you a snapshot of each piece's main ideas and arguments. It's a handy feature that lets you get to the heart of an essay without reading through every line.

Save Time, Get Quality Feedback

All in all, EssayGrader is a game-changer for teachers. It's fast, efficient, and accurate. And with over half a million essays already graded on our platform, you can trust that EssayGrader delivers the desired results. So why waste time on manual grading when you could be saving 95% of your time with our tool? Get high-quality, specific, and accurate writing feedback in seconds. Get started with EssayGrader today—for free.



e-rater® Scoring Engine

Evaluates students’ writing proficiency with automatic scoring and feedback


How the e-rater engine uses AI technology

ETS is a global leader in educational assessment, measurement and learning science. Our AI technology, such as the e-rater® scoring engine, informs decisions and creates opportunities for learners around the world.

The e-rater engine automatically:

- assesses and nurtures key writing skills

- scores essays and provides feedback on writing using a model built on the theory of writing to assess both analytical and independent writing skills

About the e-rater Engine

This ETS capability identifies features related to writing proficiency.

How It Works

See how the e-rater engine provides scoring and writing feedback.

Custom Applications

Use standard prompts or develop your own custom model with ETS’s expertise.

Use in Criterion® Service

Learn how the e-rater engine is used in the Criterion® Service.

FEATURED RESEARCH

E-rater as a Quality Control on Human Scores


Ready to begin? Contact us to learn how the e-rater service can enhance your existing program.
